After exploring the ingredients and recipes of ASR fine-tuning in the first and second blog posts of this series, let’s now experiment with a real-world example: Marathi, my mother tongue. This time, we’ll see how fine-tuning the Whisper model on Marathi data can improve its transcription quality and what obstacles arise along the way.

To keep things accessible, we’ll use an “off-the-shelf” Whisper model and publicly available data from Mozilla Common Voice. The goal here is not to provide a full technical tutorial, but to highlight the key challenges in fine-tuning an ASR model for a low-resource language like Marathi.

If you’d like to try it yourself, check out the full Hugging Face Whisper fine-tuning tutorial here.

At the time of writing, the Mozilla Common Voice corpus for Marathi contains under 30 hours of audio, of which only around 70% is validated. Fine-tuning a state-of-the-art Whisper model on this amount of data can bring the word error rate (WER) down further, but it is not enough to reduce WER to a negligible level.

Besides data size, there is also the complexity of dialectal diversity. Because dialectal speech from remote regions is scarce in digital form, Marathi’s substantial dialectal variation is often ignored. Regional dialects such as Varhadi, Ahirani, Marathwada, Satara-Karad, Kolhapuri and Malvani differ in pronunciation, word choice, and even intonation patterns. A generic model trained only on “standard” Marathi may struggle to generalize to them, and Marathi ASR as a whole remains far from the maturity of English or Hindi ASR today.

Tokenization Challenges in Marathi

As seen earlier in this series, the transcripts in each training batch are tokenized before the model is trained. A tokenizer breaks text into smaller units called “tokens”, which are then mapped to IDs and consumed by the Whisper (transformer) model. This step is critical, as poor tokenization can significantly degrade ASR accuracy in low-resource languages. Several tokenizers for Marathi and similar languages are being developed by researchers and developers as part of various university projects (e.g., GitHub source).

Marathi uses the Devanagari script, like Hindi, but with its own phonological quirks. Nasalization, diacritics (matras), aspirated consonants, and compound graphemes complicate tokenization and alignment. Most ASR pipelines, even multilingual ones, assume consistent character-sound mapping, which isn’t always the case here.
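To make the “compound grapheme” problem concrete, the short sketch below uses only Python’s standard unicodedata module to list the Unicode code points behind a few Devanagari units. It is an illustration of how the script is encoded, not part of any Whisper pipeline; the example strings are our own choice.

```python
import unicodedata

def describe(text: str) -> list[tuple[str, str, str]]:
    """Return (character, code point, Unicode name) for every code point in text."""
    return [(ch, f"U+{ord(ch):04X}", unicodedata.name(ch)) for ch in text]

# Each string renders as one visual unit but is made of several code points.
examples = {
    "conjunct ksha": "क्ष",
    "conjunct dnya": "ज्ञ",
    "consonant + matra (ki)": "कि",
    "consonant + anusvara (nasalization)": "मं",
}

for label, text in examples.items():
    print(f"{label}: {len(text)} code points")
    for ch, cp, name in describe(text):
        print(f"    {cp}  {name}")
```

Running it shows, for example, that “क्ष” is KA + VIRAMA + SSA: a tokenizer working on raw code points sees three symbols where a reader sees one.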

English tokenization commonly splits text into words based on spaces and punctuation, whereas Marathi tokenization splits at the character, word, or subword level, with special care for matras: the vowel signs that vowels take when they are attached to consonants.

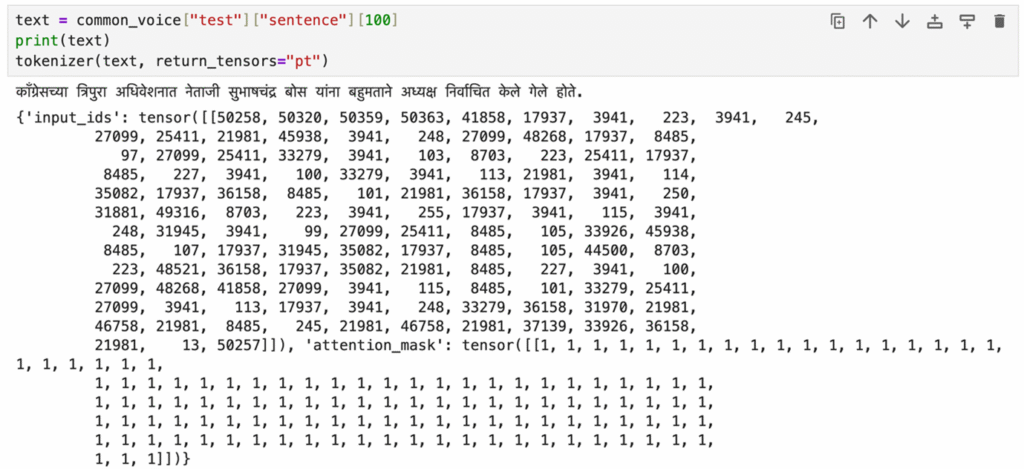

For better understanding, let’s compare how tokenization behaves in English and Marathi.

- English Tokenization: Text is split into individual words based on spaces and punctuation. For example, the sentence “This is a mouse.” would be tokenized into [“This”, “is”, “a”, “mouse”, “.”]. Another form of tokenization works at the level of individual characters, in which case the same sentence would be tokenized into [“T”, “h”, “i”, “s”, “ ”, “i”, “s”, “ ”, “a”, “ ”, “m”, “o”, “u”, “s”, “e”, “.”]. Each token is then assigned a corresponding ID (an integer), and these IDs are what is passed to the model during training.

- Marathi Tokenization: Character-level tokenization can be problematic because it may separate matras from their base consonants, and proper handling of matras is crucial for preserving the meaning of Marathi words. Off-the-shelf tokenizers for Devanagari-based scripts are also biased towards Hindi. Because Marathi is morphologically rich (its words take complex inflected forms), subword tokenization can be particularly helpful in preserving context.
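The two English schemes described above, plus the final step of mapping tokens to integer IDs, can be sketched in a few lines of plain Python. The regular expression and the first-appearance vocabulary are simplifying assumptions for illustration; Whisper itself uses a learned byte-level BPE vocabulary rather than either scheme.

```python
import re

def word_tokenize(text: str) -> list[str]:
    # Runs of word characters, or single punctuation marks; whitespace is dropped.
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text: str) -> list[str]:
    # One token per character (Unicode code point), spaces included.
    return list(text)

def build_vocab(tokens: list[str]) -> dict[str, int]:
    # Toy vocabulary: IDs assigned in order of first appearance.
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

sentence = "This is a mouse."
words = word_tokenize(sentence)
chars = char_tokenize(sentence)
vocab = build_vocab(words)
ids = [vocab[w] for w in words]

print(words)  # ['This', 'is', 'a', 'mouse', '.']
print(chars)  # ['T', 'h', 'i', 's', ' ', 'i', 's', ' ', 'a', ' ', 'm', 'o', 'u', 's', 'e', '.']
print(ids)    # [0, 1, 2, 3, 4]
```

Note that this regular expression is English-oriented. In Python, \w matches letters and digits but not combining marks, so word_tokenize("विचारणे") does not return the word intact; it breaks at the matras, a small-scale version of the Marathi problem described above.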

Examples:

- English: The infinitive “to ask” is made of two short, common words, “to” and “ask”, each of which would likely be kept as a single token.

- Marathi: The single word “विचारणे” (vicharNe, “to ask”) might be tokenized into [“व”, “ि”, “च”, “ा”, “र”, “ण”, “े”] (character level) or [“विचार”, “णे”] (subword level: the noun/stem “विचार” plus the infinitive suffix “णे”). The subword approach is generally preferred, as it preserves the meaning of the word.

In essence, both English and Marathi tokenization aim to break text down into manageable units for NLP models, but Marathi tokenization presents unique challenges due to its morphology and the importance of diacritics. Some of these challenges are addressed by the open-source Indic-language tokenizers mentioned earlier.
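The character-level and subword splits of “विचारणे” can be reproduced with a small pure-Python sketch, with a grapheme-level split added in between for comparison. The grapheme grouping is a simplified rule for Devanagari (combining marks and virama-joined consonants stay with their base), not a full implementation of Unicode’s grapheme-cluster algorithm. The two-entry vocabulary is hypothetical: real subword tokenizers, including Whisper’s byte-level BPE, learn their vocabularies from data.

```python
import unicodedata

VIRAMA = "\u094D"  # Devanagari sign virama (halant): joins consonants into conjuncts

def char_tokenize(word: str) -> list[str]:
    # Naive character tokenization: one token per Unicode code point.
    return list(word)

def grapheme_tokenize(word: str) -> list[str]:
    # Simplified Devanagari grouping (not full UAX #29): attach combining marks
    # (matras, anusvara, virama) to the preceding unit, and attach a consonant
    # that follows a virama so that conjuncts stay together.
    clusters: list[str] = []
    for ch in word:
        is_mark = unicodedata.category(ch).startswith("M")
        if clusters and (is_mark or clusters[-1].endswith(VIRAMA)):
            clusters[-1] += ch
        else:
            clusters.append(ch)
    return clusters

def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    # Greedy longest-match over grapheme clusters, so a matra is never split
    # from its consonant; unknown pieces fall back to single clusters.
    units = grapheme_tokenize(word)
    tokens: list[str] = []
    i = 0
    while i < len(units):
        for j in range(len(units), i, -1):
            piece = "".join(units[i:j])
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens

word = "विचारणे"               # vicharNe, "to ask"
toy_vocab = {"विचार", "णे"}    # hypothetical two-entry vocabulary

print(char_tokenize(word))                # ['व', 'ि', 'च', 'ा', 'र', 'ण', 'े']
print(grapheme_tokenize(word))            # ['वि', 'चा', 'र', 'णे']
print(subword_tokenize(word, toy_vocab))  # ['विचार', 'णे']
```

The middle representation shows why even a purely character-based system benefits from grouping: keeping each matra attached to its consonant removes the meaningless lone vowel signs of the naive split.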

Figure 1 shows character-level tokenization for Marathi vs. English. In Whisper, the tokenizer’s limits, specifically the maximum sequence length in tokens, can affect training by restricting the model’s ability to process long sequences of text or audio. Because Whisper’s byte-level BPE tokenizer tends to split Devanagari text into more tokens than English text of similar length, Marathi transcripts reach this limit sooner. The limitation is particularly critical in conversational or narrative speech, where contextual continuity is essential: it can cause a loss of context in longer conversations or audio segments, potentially reducing accuracy, especially in tasks requiring detailed transcriptions or long-range dependencies. To mitigate this, researchers often adjust the maximum-length settings in the training arguments (within the decoder’s 448-token context), allowing the model to handle longer sequences and thereby helping to achieve better fine-tuning results.
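A rough way to see why Marathi transcripts hit Whisper’s length limit sooner is to count UTF-8 bytes. Whisper’s tokenizer is a byte-level BPE, so every text token covers at least one byte, and each Devanagari character takes three bytes in UTF-8. The sketch below uses that upper bound to flag training transcripts that could overflow the decoder. The 448-token context matches Whisper’s decoder; the number of reserved special tokens is our assumption. The check is conservative, and with the real tokenizer at hand, filtering on the actual label length is more precise.

```python
MAX_TARGET_TOKENS = 448  # Whisper's decoder context (max_target_positions in the HF config)
RESERVED_SPECIAL = 5     # assumption: start, language, task, no-timestamps, end-of-text

def token_upper_bound(text: str) -> int:
    # In byte-level BPE every token covers at least one UTF-8 byte,
    # so the byte length bounds the number of text tokens from above.
    return len(text.encode("utf-8"))

def may_overflow(text: str) -> bool:
    # Conservative check: True means the transcript *might* exceed the budget.
    return token_upper_bound(text) > MAX_TARGET_TOKENS - RESERVED_SPECIAL

for text in ("to ask", "विचारणे"):
    print(f"{text!r}: {len(text)} code points, at most {token_upper_bound(text)} tokens")
```

For “to ask” the bound is 6 tokens for 6 characters, while “विचारणे” has 7 code points but a bound of 21 tokens. The actual BPE count is usually lower, but the gap illustrates why Devanagari text consumes the budget faster.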

Beyond tokenization, it is crucial to consider the broader data ecosystem for low-resource languages, where widely available annotated speech corpora are lacking. Initiatives like Mozilla’s Common Voice include Marathi, but coverage is minimal and often biased toward scripted speech, which makes it all the more important to use every available resource efficiently. Using Hugging Face’s Whisper fine-tuning pipeline, fine-tuning the “large-v2” model on the Common Voice 11.0 Marathi corpus gives a considerable improvement in WER (see Figure 2), but the result is still not in the same ballpark as modern English ASR.
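WER is the word-level edit distance (substitutions + deletions + insertions) between a reference and a hypothesis, divided by the number of reference words. The pure-Python sketch below computes WER and its character-level counterpart, CER. The normalization choices (NFC, dropping punctuation such as the danda “।”, keeping combining marks) are our assumptions. They matter for Marathi, because a normalizer that strips all combining marks would silently delete matras and distort the scores.

```python
import unicodedata

def normalize(text: str) -> str:
    # NFC-normalize, drop punctuation (Unicode category P, which includes the
    # danda "।"), and collapse whitespace. Combining marks (category M) are kept
    # on purpose: removing them would delete Marathi matras.
    text = unicodedata.normalize("NFC", text)
    text = "".join(ch for ch in text if not unicodedata.category(ch).startswith("P"))
    return " ".join(text.split())

def edit_distance(ref: list[str], hyp: list[str]) -> int:
    # Levenshtein distance with unit-cost substitutions, deletions and insertions.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of r
                curr[j - 1] + 1,         # insertion of h
                prev[j - 1] + (r != h),  # substitution (or match if r == h)
            )
        prev = curr
    return prev[-1]

def wer(reference: str, hypothesis: str) -> float:
    ref, hyp = normalize(reference).split(), normalize(hypothesis).split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref, hyp) / len(ref)

def cer(reference: str, hypothesis: str) -> float:
    # Character error rate over Unicode code points (spaces included).
    ref, hyp = list(normalize(reference)), list(normalize(hypothesis))
    if not ref:
        raise ValueError("reference must contain at least one character")
    return edit_distance(ref, hyp) / len(ref)

reference = "मी घरी जातो।"   # "I go home."
hypothesis = "मी घरि जातो"    # one wrong matra: ि instead of ी
print(f"WER = {wer(reference, hypothesis):.3f}, CER = {cer(reference, hypothesis):.3f}")
```

The example shows why Marathi WER can look harsh. A single wrong matra makes the whole word wrong, giving a WER of 1/3 for this three-word sentence, while the CER is only 1/11.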

Because Common Voice relies on volunteer contributions, it tends to underrepresent spontaneous speech patterns and dialectal variation, and there may be inconsistencies in audio quality, annotation accuracy, and speaker diversity. However, as mentioned earlier, this is an ongoing effort, and the WER reported above could improve with more data in general. The question of dialects, on the other hand, remains open, and significant crowd-sourced and non-profit efforts are needed to collect more data. Projects such as AI4Bharat, OpenSLR and GramVaani have worked to place languages like Marathi on the same footing as English, French, German, etc. in NLP and ASR. The most recent one, by the Indian Institute of Science (IISc Bengaluru), is ambitious: as the largest dataset compilation to date, it would contain, upon completion, 150,000 hours of audio from all districts of India.

Key Methods for Language Adaptation in ASR Models

Having explored the challenges of tokenization and dataset limitations, it is important to understand how ASR systems can adapt to new languages and contexts. Language adaptation involves integrating an entirely new language into the model’s knowledge base to improve its performance across diverse environments. The three main types of adaptation are:

- Accent and Dialect Adaptation: Fine-tuning the model on ASR data covering diverse accents and dialects. This is essential for improving accuracy across regional varieties.

- Environmental Adaptation: Adapting the ASR model to different acoustic conditions, such as background noise or multiple speakers. This enhances the robustness of the model, allowing it to perform well in noisy or otherwise challenging environments.

- Domain Adaptation: Fine-tuning the ASR model on a specific domain or industry, such as healthcare, finance or customer service. This allows the model to recognize specialized vocabulary and jargon, significantly improving its performance in context-specific applications. An entity-recognition approach can also be used to adapt the model to the expected domain.
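As a simple illustration of the vocabulary side of domain adaptation, the sketch below post-corrects an ASR transcript against a domain lexicon using fuzzy string matching from Python’s standard difflib module. This is a related technique swapped in for illustration: it is post-processing, not fine-tuning and not entity recognition proper. The lexicon, the 0.8 similarity cutoff and the sample transcript are hypothetical.

```python
import difflib

def correct_domain_terms(transcript: str, lexicon: list[str], cutoff: float = 0.8) -> str:
    # Replace each word with its closest domain term if the similarity ratio
    # is at least `cutoff`; otherwise keep the word unchanged.
    corrected = []
    for word in transcript.split():
        match = difflib.get_close_matches(word, lexicon, n=1, cutoff=cutoff)
        corrected.append(match[0] if match else word)
    return " ".join(corrected)

# Hypothetical medical lexicon and ASR output with near-miss spellings
lexicon = ["diabetes", "insulin", "metformin"]
print(correct_domain_terms("patient takes metformine for diabetis", lexicon))
# patient takes metformin for diabetes
```

The cutoff trades recall for safety. A lower value fixes more near-misses but risks rewriting ordinary words into domain terms, which is why fine-tuning on in-domain data remains the more robust route.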

Together, these adaptation methods help improve the accuracy and robustness of ASR models, making them more versatile and effective across various languages, accents, environments and industries.

Overcoming Challenges in Fine-tuning for Low-Resource Languages

Fine-tuning ASR models for low-resource languages presents unique challenges:

- Data Scarcity: Collecting high-quality annotated data for underrepresented languages is a significant challenge, as these languages often lack the necessary resources to train effective models.

- Diversity of Dialects: As discussed earlier, collecting dialectally diverse data is crucial to making the model more applicable and realistic. The real challenge is obtaining a statistically fair sample from different regions; without one, the model risks becoming biased toward the best-represented dialects.

- Resource Constraints: Collecting and preprocessing data and training ASR models require high-quality equipment and GPU-based computational resources, which may not be readily available in the regions where these languages are spoken.

- Bias Amplification: Limited data can also amplify bias, leading to unfair or inaccurate outcomes in speech recognition tasks.
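One simple mitigation for the sampling problem is to balance the training data by dialect before fine-tuning. The sketch below assumes each clip carries a dialect label in its metadata (the field name, file names and counts are hypothetical) and, by default, downsamples every dialect to the size of the smallest group. Downsampling discards data, so capping only the dominant group or reweighting examples may be preferable in practice.

```python
import random
from collections import Counter, defaultdict
from typing import Optional

def balanced_sample(clips: list[dict], key: str = "dialect",
                    per_group: Optional[int] = None, seed: int = 0) -> list[dict]:
    # Group clips by their dialect label.
    groups: dict[str, list[dict]] = defaultdict(list)
    for clip in clips:
        groups[clip[key]].append(clip)
    # Default: downsample every group to the size of the smallest one.
    n = per_group if per_group is not None else min(len(g) for g in groups.values())
    rng = random.Random(seed)
    sample: list[dict] = []
    for label in sorted(groups):  # sorted for reproducibility
        group = groups[label]
        sample.extend(rng.sample(group, min(n, len(group))))
    rng.shuffle(sample)
    return sample

# Hypothetical metadata: "standard" Marathi dominates, dialects are sparse.
clips = (
    [{"path": f"std_{i}.wav", "dialect": "standard"} for i in range(50)]
    + [{"path": f"varhadi_{i}.wav", "dialect": "Varhadi"} for i in range(8)]
    + [{"path": f"malvani_{i}.wav", "dialect": "Malvani"} for i in range(5)]
)
sample = balanced_sample(clips)
print(Counter(clip["dialect"] for clip in sample))  # 5 clips per dialect
```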

Acknowledging these challenges is essential, as it lays the groundwork for implementing effective strategies to overcome them.

Strategies for Enhancing ASR Models for Low-Resource Languages

To address these challenges, several strategies can be employed:

- Data Augmentation: Techniques such as noise addition, pitch modulation, and text-to-speech generation can help expand small datasets, making them more diverse and robust.

- Cross-Lingual Transfer: Leveraging models pre-trained on phonetically or linguistically related languages can help when fine-tuning for a new low-resource language, even when available data is limited. ASR researchers widely apply this technique to many low-resource languages.

- Community Engagement: Collaborating with local communities to collect and annotate data ensures better representation and more accurate models for low-resource languages.
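Two of the augmentations listed above are easy to sketch on a raw waveform. The pure-Python example below adds white Gaussian noise at a chosen signal-to-noise ratio (SNR) and applies speed perturbation by linear-interpolation resampling. Simple resampling changes pitch and tempo together; a pure pitch shift, as in the pitch modulation mentioned above, needs a dedicated algorithm. The toy sine tone stands in for real speech, and production pipelines would typically use an audio library and recorded noise instead.

```python
import math
import random

def power(x: list[float]) -> float:
    # Mean squared amplitude of a signal.
    return sum(v * v for v in x) / len(x)

def add_noise(signal: list[float], snr_db: float, seed: int = 0) -> list[float]:
    # Add white Gaussian noise scaled so that 10*log10(P_signal / P_noise) == snr_db.
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    scale = math.sqrt(power(signal) / (power(noise) * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]

def speed_perturb(signal: list[float], factor: float) -> list[float]:
    # Resample by linear interpolation: factor > 1 shortens the clip and raises
    # the pitch, factor < 1 lengthens it and lowers the pitch.
    out_len = int(len(signal) / factor)
    out = []
    for i in range(out_len):
        pos = i * factor
        j = int(pos)
        frac = pos - j
        nxt = signal[j + 1] if j + 1 < len(signal) else signal[j]
        out.append((1 - frac) * signal[j] + frac * nxt)
    return out

# Toy 1-second, 440 Hz sine at 16 kHz (the sampling rate Whisper expects)
sr = 16_000
tone = [0.5 * math.sin(2 * math.pi * 440 * t / sr) for t in range(sr)]
noisy = add_noise(tone, snr_db=10)
faster = speed_perturb(tone, 1.1)
print(len(tone), len(faster))  # 16000 14545
```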

By combining these approaches, researchers can significantly boost the performance of ASR systems in low-resource language settings, laying a stronger foundation for inclusive language technology.

Future Directions in Fine-Tuning ASR Models and Bridging the Gap for Low-Resource Languages

Fine-tuning state-of-the-art ASR models is a dynamic and evolving field. From its historical roots in acoustic and language model adaptation to modern challenges posed by low-resource languages, fine-tuning remains integral to achieving the next level of ASR performance. While challenges persist, advancements in data augmentation, transfer learning, entity-based fine-tuning (vocabulary enhancement) and parameter-efficient fine-tuning methods (e.g., LoRA or adapters) offer promising pathways toward higher accuracy and domain generalization.
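The appeal of parameter-efficient methods is easy to quantify. LoRA keeps a pretrained weight matrix W frozen and trains only a low-rank update BA, where B is d_out × r and A is r × d_in. The number of trainable parameters therefore drops from d_out·d_in to r·(d_out + d_in). The arithmetic sketch below applies this to a single 1280 × 1280 projection, matching the hidden size of Whisper large-v2. Which layers to adapt and which rank to choose are left open here.

```python
def full_params(d_out: int, d_in: int) -> int:
    # Trainable parameters when the whole weight matrix W (d_out x d_in) is updated.
    return d_out * d_in

def lora_params(d_out: int, d_in: int, rank: int) -> int:
    # LoRA freezes W and trains only B (d_out x rank) and A (rank x d_in);
    # the effective weight becomes W + (alpha / rank) * B @ A.
    return rank * (d_out + d_in)

d_model = 1280  # hidden size of Whisper large-v2
for rank in (4, 8, 16, 32):
    lora = lora_params(d_model, d_model, rank)
    full = full_params(d_model, d_model)
    print(f"rank {rank:>2}: {lora:>7,} trainable vs {full:,} ({lora / full:.2%})")
```

At rank 8, for instance, LoRA trains 20,480 parameters for this projection instead of 1,638,400, or 1.25%. This is what makes fine-tuning large Whisper checkpoints feasible on modest GPUs.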

For the research community, the key question now is how to leverage these methods to create models that not only perform well in controlled benchmarks but also generalize effectively in real-world scenarios.

Fine-tuning remains a powerful mechanism for adapting ASR models to specific domains, languages, or acoustic environments. In the context of low-resource languages, this process is not only technically challenging due to limited corpora and high dialectal variance, but also essential for improving linguistic coverage in global AI applications. Strategies such as data augmentation, self-supervised pretraining, and cross-lingual transfer from typologically related languages are likely to drive further improvements.

From a research perspective, integrating community-sourced corpora with robust evaluation frameworks is crucial to understanding model behavior beyond standard WER metrics, particularly for spontaneous speech and dialect-rich contexts. With the convergence of large-scale pretraining, efficient fine-tuning methods, and collaborative data collection, ASR systems are expected to become more effective across diverse languages and in real-world scenarios.

In the long term, these developments will not only expand the applicability of ASR but also contribute to more equitable access to speech technologies, providing a compelling research agenda at the intersection of NLP, speech processing, and multilingual AI.