The artificial intelligences known from science fiction movies are mostly general artificial intelligences: they can solve any task, even one that has never been solved before, by combining accumulated knowledge with logical reasoning. Developing such a general artificial intelligence is increasingly referred to as the Holy Grail of AI.

Today, machine learning techniques are used to learn narrowly defined tasks from a collection of examples, such as recognizing faces in images or translating text between two languages. Once trained, a model generally lacks the human ability to solve related problems through abstract reasoning. For instance, a facial recognition model may not readily identify a person wearing a face mask unless it was trained on such examples. In the article On the Measure of Intelligence, François Chollet describes the need for a benchmark that can accurately quantify the general intelligence of an AI, the equivalent of an IQ test for machines. As a result of these considerations, he released the Abstraction and Reasoning Corpus.

The Abstraction and Reasoning Corpus

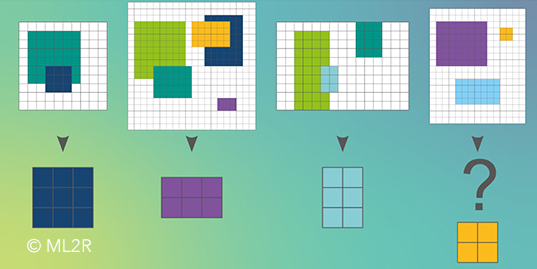

The Abstraction and Reasoning Corpus (ARC) consists of many different tasks in the form of image data. Each image is a rectangular grid of variable size (between 1×1 and 30×30 cells), with every cell filled with one of ten colors (including black, which usually serves as the background). From a few training examples with solutions (typically two to four), the AI should learn the logic of the respective task and then apply it to additional example images (typically one or two) for which no solutions are given. Typical tasks require an understanding of geometry and logical reasoning. For example, shapes of a specific color need to be moved, cut out, duplicated, or continued (see the sample task in the figure). Humans usually understand such tasks quickly and easily.

A sample task from the ARC. The goal is to cut out the shape with the smallest area from each image in the top row. The AI is provided with the first three pairs for training. The last image is used to evaluate whether the AI has learned the task’s logic.

The dataset is difficult for two reasons. First, it contains many different tasks with fundamentally different logic: around 400 tasks are provided in the training dataset, and all of them must be solved with the same approach. Second, image sizes vary not only between tasks but also within a single task. Sometimes even the output size differs from the input size, for example when certain shapes are to be cut out of the input. Many machine learning methods, however, expect fixed input and output dimensions.
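To make the varying grid sizes concrete, here is a minimal sketch of how a task can be represented in Python. It assumes the layout of the publicly released ARC JSON files (a "train" and a "test" list of input/output pairs). The toy task itself (mirroring each row) and the helper names `shape`, `is_valid_grid`, and `input_shapes` are made up for illustration.

```python
from typing import Dict, List, Tuple

Grid = List[List[int]]  # one inner list per row, one color index per cell

# Hypothetical toy task (mirror every row), laid out like an ARC JSON file.
# The test input comes without an "output": that is what must be predicted.
task: Dict[str, List[Dict[str, Grid]]] = {
    "train": [
        {"input": [[0, 1], [2, 0]], "output": [[1, 0], [0, 2]]},
        {"input": [[3, 0, 0]], "output": [[0, 0, 3]]},
    ],
    "test": [{"input": [[0, 4, 0, 0], [5, 0, 0, 0]]}],
}


def shape(grid: Grid) -> Tuple[int, int]:
    """Return (rows, columns) of a grid."""
    return len(grid), len(grid[0])


def is_valid_grid(grid: Grid, max_side: int = 30) -> bool:
    """Check that a grid is rectangular and between 1x1 and max_side x max_side."""
    if not grid or not grid[0]:
        return False
    rows, cols = shape(grid)
    rectangular = all(len(row) == cols for row in grid)
    return rectangular and rows <= max_side and cols <= max_side


def input_shapes(task: Dict[str, List[Dict[str, Grid]]]) -> List[Tuple[int, int]]:
    """Collect the input shapes of all training and test pairs of one task."""
    return [shape(pair["input"]) for pair in task["train"] + task["test"]]


print(input_shapes(task))  # [(2, 2), (1, 3), (2, 4)]
```

Even in this tiny task, every input has a different shape, which is exactly what makes fixed-size model inputs awkward here.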

To test the dataset, François Chollet organized a public challenge on the Data Science platform Kaggle. Registered groups received the dataset and could submit computed solutions for evaluation on Kaggle. A public leaderboard then listed the number of correctly solved tasks for each team. With the approach described below, we achieved a ranking among the top 30 out of over 900 participating groups at the end of the challenge.

Solution approach using grammatical evolution

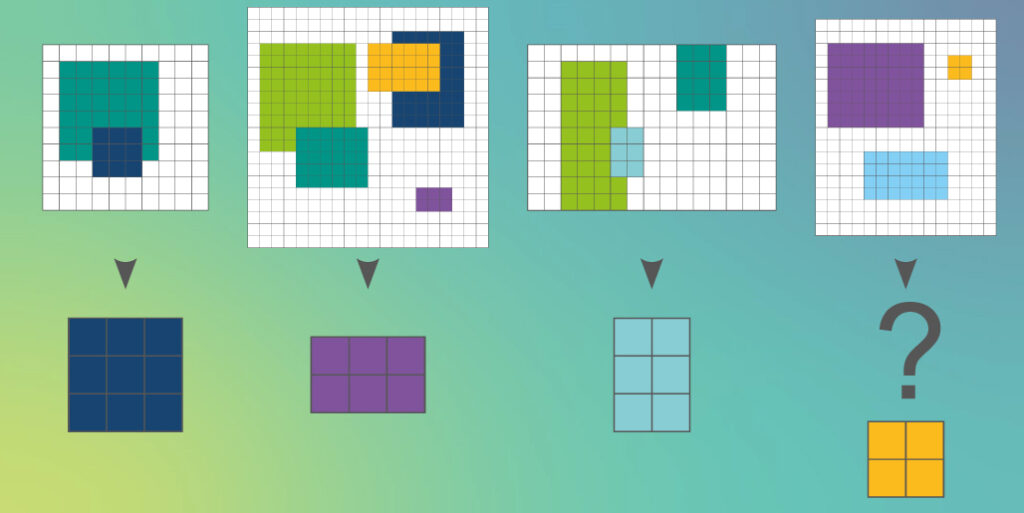

As a first step, we define operations on grid images as a domain-specific language. In addition to images, we define a layer object, which consists of a list of several images. Layers are a familiar concept from image processing software such as Adobe Photoshop or GIMP. The grammar of the domain-specific language consists of four different operation types:

- T (Image -> Image): Operations that directly process an image and return an image (crop, rotate, mirror, etc.)

- S (Image -> Layers): Operations that divide an image into multiple layers (duplicate, split into color channels, etc.)

- L (Layers -> Layers): Similar to T, but for layer objects (sort layers based on specified criteria, filter, etc.)

- J (Layers -> Image): Inverse of S (merge layers into one image, etc.)

An automaton for generating valid image processing algorithms. X represents a single image, while X* represents a layer object. It is stipulated that both the initial and final states must be an image, ensuring that every generated expression leads to a valid image.
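The automaton can be sketched in a few lines of Python. This is a simplified illustration, not the implementation from the paper: it treats a program as a flat sequence of operation types (T, S, L, J) rather than a full syntax tree, and the names `TRANSITIONS`, `is_valid`, and `random_valid_sequence` are hypothetical.

```python
import random

# Transition table of the automaton: (state, operation type) -> next state.
# "X" is a single image, "X*" a layer object, as in the figure.
TRANSITIONS = {
    ("X", "T"): "X",    # Image  -> Image
    ("X", "S"): "X*",   # Image  -> Layers
    ("X*", "L"): "X*",  # Layers -> Layers
    ("X*", "J"): "X",   # Layers -> Image
}


def is_valid(kinds):
    """Return True if the sequence of operation types maps an image to an image."""
    state = "X"  # every program starts with a single image
    for kind in kinds:
        state = TRANSITIONS.get((state, kind))
        if state is None:  # operation type not allowed in the current state
            return False
    return state == "X"  # every program must also end with a single image


def random_valid_sequence(rng, length=5):
    """Walk the automaton at random, then close an open layer object with J."""
    state, kinds = "X", []
    for _ in range(length):
        kind = rng.choice([k for (s, k) in TRANSITIONS if s == state])
        kinds.append(kind)
        state = TRANSITIONS[(state, kind)]
    if state == "X*":
        kinds.append("J")
    return kinds


print(is_valid("TSLJ"))  # True
print(is_valid("TL"))    # False: L needs a layer object, but T returns an image
print(is_valid("SL"))    # False: the program ends in a layer object
```

Because candidates are only ever built by following the transition table, a generator like `random_valid_sequence` never produces an expression that fails to return an image.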

Using an evolutionary algorithm, we generate syntax trees from the defined grammar, then mutate, recombine, and select them based on how well they reproduce the training examples. This procedure is repeated for each task in the dataset. We assume that a correct syntax tree has been found once it solves all training examples of a task. We then apply it to the test inputs of that task, for which no solutions are given. If no syntax tree solves all training examples within a certain time window, the task is skipped.
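The search loop described above can be sketched as follows. This is a toy version under several assumptions that are not taken from the paper: programs are flat lists of operation names instead of syntax trees, only three hypothetical Image -> Image operations exist, the quality measure is the fraction of exactly reproduced training examples, and a fixed number of generations stands in for the time window.

```python
import random

# Hypothetical toy operations (Image -> Image only); the real DSL is much richer.
OPS = {
    "flip_h": lambda g: [row[::-1] for row in g],             # mirror each row
    "flip_v": lambda g: [row[:] for row in g[::-1]],          # reverse row order
    "transpose": lambda g: [list(col) for col in zip(*g)],    # swap rows/columns
}
MAX_LEN = 4  # upper bound on program length


def run(program, grid):
    """Apply the operations of a program to a grid, one after another."""
    for name in program:
        grid = OPS[name](grid)
    return grid


def fitness(program, examples):
    """Fraction of training pairs that the program reproduces exactly."""
    hits = sum(run(program, ex["input"]) == ex["output"] for ex in examples)
    return hits / len(examples)


def random_program(rng):
    return [rng.choice(list(OPS)) for _ in range(rng.randint(1, MAX_LEN))]


def mutate(program, rng):
    """Replace one operation; sometimes also insert or delete one."""
    child = list(program)
    child[rng.randrange(len(child))] = rng.choice(list(OPS))
    if len(child) < MAX_LEN and rng.random() < 0.3:
        child.insert(rng.randrange(len(child) + 1), rng.choice(list(OPS)))
    elif len(child) > 1 and rng.random() < 0.3:
        del child[rng.randrange(len(child))]
    return child


def crossover(a, b, rng):
    """Recombine a non-empty prefix of a with a (possibly empty) suffix of b."""
    child = a[: rng.randint(1, len(a))] + b[rng.randint(0, len(b)):]
    return child[:MAX_LEN]


def evolve(examples, rng, pop_size=30, generations=200):
    """Return a program that solves all training examples, or None if none is found."""
    population = [random_program(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=lambda p: fitness(p, examples), reverse=True)
        if fitness(population[0], examples) == 1.0:
            return population[0]
        parents = population[: pop_size // 2]  # selection: keep the better half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return None  # budget exhausted: the task is skipped


# Toy task: rotate the grid by 90 degrees clockwise.
train = [
    {"input": [[1, 2], [3, 4]], "output": [[3, 1], [4, 2]]},
    {"input": [[1, 2, 3]], "output": [[1], [2], [3]]},
]
program = evolve(train, random.Random(0))
if program is not None:
    print(program, run(program, [[5, 6], [7, 8]]))
```

With only two training examples the quality measure takes just three values, so this toy search is close to random sampling. A finer-grained measure, for example cell-wise agreement, would give selection more to work with.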

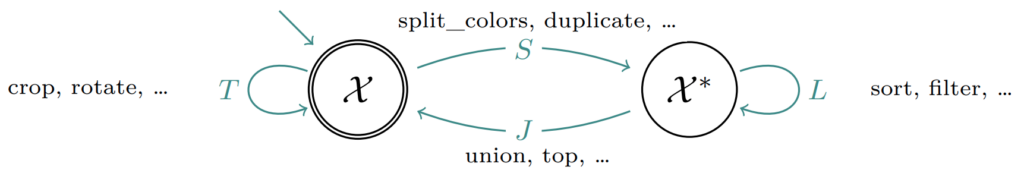

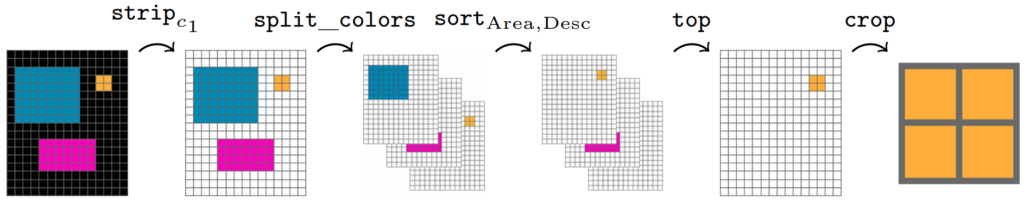

Solution generated by our approach for the sample task shown earlier. First, the color “c1” (black) is removed from the image. Then, the image is divided into individual layers based on the existing colors, and the layers are sorted in descending order by the area of each color. With “top,” the top layer is then selected and cropped.
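Written out as ordinary Python, the steps in the caption might look like the sketch below. All function names are hypothetical, and several details are assumptions rather than facts from the paper: removed cells are marked with `None`, black has color index 0, the area of a layer is its number of filled cells, and "top" is the last layer in the list, so that the smallest shape ends up on top after sorting in descending order.

```python
from typing import List, Optional

Image = List[List[Optional[int]]]  # None marks an empty (removed) cell
Layers = List[Image]

BLACK = 0  # assumption: color index of the background


def remove_color(img: Image, color: int) -> Image:          # T: Image -> Image
    return [[None if c == color else c for c in row] for row in img]


def split_colors(img: Image) -> Layers:                     # S: Image -> Layers
    colors = sorted({c for row in img for c in row if c is not None})
    return [[[c if c == col else None for c in row] for row in img]
            for col in colors]


def area(img: Image) -> int:
    """Number of filled cells (assumed area measure)."""
    return sum(c is not None for row in img for c in row)


def sort_by_area_desc(layers: Layers) -> Layers:            # L: Layers -> Layers
    return sorted(layers, key=area, reverse=True)


def top(layers: Layers) -> Image:                           # J: Layers -> Image
    return layers[-1]  # assumption: the last layer lies on top of the stack


def crop(img: Image) -> Image:                              # T: Image -> Image
    """Cut the image down to the bounding box of its filled cells."""
    rows = [i for i, row in enumerate(img) if any(c is not None for c in row)]
    cols = [j for j in range(len(img[0])) if any(row[j] is not None for row in img)]
    return [row[cols[0]: cols[-1] + 1] for row in img[rows[0]: rows[-1] + 1]]


def solve(img: Image) -> Image:
    """Compose the steps named in the caption, from the inside out."""
    return crop(top(sort_by_area_desc(split_colors(remove_color(img, BLACK)))))


grid = [
    [1, 1, 1, 0, 0],
    [1, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
    [3, 3, 3, 0, 0],
]
print(solve(grid))  # [[2], [2]]
```

The composition alternates between images and layer objects in the order T, S, L, J, T, which is one of the paths the automaton allows.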

With this approach, we solved 3% of the hidden test tasks on which the public leaderboard was based. While this may seem low, it placed us 28th out of more than 900 groups. This highlights how challenging the ARC is: the great diversity of tasks makes it difficult to define a consistent set of predefined functions from which expressions for all kinds of tasks can be generated, and each additional function adds complexity and enlarges the search space. Although the ARC Challenge provides an interesting insight into the possibilities of General Artificial Intelligence, it also clearly shows how far in the future it still lies.

More information in the following paper:

Raphael Fischer, Matthias Jakobs, Sascha Mücke, Katharina Morik. Solving Abstract Reasoning Tasks with Grammatical Evolution. Lernen, Wissen, Daten, Analyse (LWDA), 2020.