In the last blog post, we learned how context-sensitive embedding vectors, which capture the meaning of words, can be calculated using association modules. We also noted that a prediction task is needed to train the model’s unknown parameters. This is where the BERT (Bidirectional Encoder Representations from Transformers) model comes into play. Developed by Jacob Devlin and his colleagues at Google in 2018, BERT is used every day in Google’s search engine and serves as a pretraining approach for a wide range of language processing and interpretation problems. But how does this model work?

Architecture of the BERT Model

For pretraining, a randomly selected subset of the words in a text is replaced with the term “[MASK],” also known as a masked token. The model must then predict each replaced word using only the corresponding embedding from the last layer. In other words, the model tries to infer the masked word from the context words surrounding it. More precisely, the model outputs a probability for every word in its vocabulary, and training aims to make the probability of the replaced word as high as possible.
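The masking step can be sketched in a few lines of Python. This is a simplified illustration, not the original implementation: the function name `mask_tokens`, the fixed random seed, and the rule of never masking “[CLS]” and “[SEP]” are assumptions made for the example. The published BERT procedure also sometimes substitutes a random word or leaves the chosen word unchanged instead of inserting “[MASK]”, which is omitted here.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the masked sequence and a dict that maps each masked
    position to the original token the model should predict.
    """
    rng = random.Random(seed)
    # Assumption for this sketch: special symbols are never masked.
    candidates = [i for i, t in enumerate(tokens) if t not in ("[CLS]", "[SEP]")]
    # Mask about 15% of the candidates, but at least one.
    n_mask = max(1, round(len(candidates) * mask_rate))
    positions = sorted(rng.sample(candidates, n_mask))

    masked = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = tokens[i]  # remember the word to be predicted
        masked[i] = MASK
    return masked, targets

tokens = "[CLS] the leaves on the trees are green [SEP]".split()
masked, targets = mask_tokens(tokens)
print(masked)
print(targets)
```

The `targets` dictionary holds exactly the words the model is asked to recover, so the training signal comes from the text itself.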

Architecture of the BERT model: The pretraining of the model is performed with the prediction of masked words.

The input consists of the word and position embedding vectors of the input text. Approximately 15% of the words are randomly chosen and replaced with “[MASK].” The base version of the model uses embedding vectors of length 768. In each layer, several association modules are computed in parallel, and the resulting embedding vectors are transformed by a fully connected layer whose output serves as the next embedding vectors for the corresponding words. To make this association layer easier to train, bypass connections pass parts of the inputs around the association modules. In addition, layer normalization rescales each embedding vector so that its components have a mean of 0 and a standard deviation of 1. The embedding vectors calculated by one association layer then serve as inputs to the next association layer, and this process repeats. The BERT base model uses a total of twelve stacked association layers.
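The combination of a bypass connection and layer normalization can be illustrated with a small sketch. It uses a four-component toy vector instead of 768 components, and `toy_sublayer` is a hypothetical stand-in for an association module or fully connected layer. The learned scale and shift parameters that layer normalization normally includes are left out.

```python
import math

def layer_norm(vec, eps=1e-12):
    """Rescale a vector so its components have mean 0 and standard deviation 1."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]

def residual_block(vec, sublayer):
    """Apply a sublayer, add its input back (bypass connection), then normalize."""
    out = sublayer(vec)
    return layer_norm([x + y for x, y in zip(vec, out)])

def toy_sublayer(vec):
    # Hypothetical placeholder for an association module or fully connected layer.
    return [0.5 * x + 1.0 for x in vec]

embedding = [1.0, 2.0, 3.0, 4.0]
normalized = residual_block(embedding, toy_sublayer)
print([round(x, 3) for x in normalized])
```

Because the input is added back before normalization, the sublayer only has to learn a correction to the embedding rather than a completely new vector.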

From the final embedding vectors of the last layer, the probabilities of the masked output words are then predicted. Since only the embedding vector of the masked word is used as input to predict the word probabilities, the model is forced to encode as much information about this word as possible in the vector. BERT is capable of using not only words preceding the masked word as context but also those following it. This results in a highly informative context-dependent embedding vector for the respective masked word.
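As a rough sketch of this prediction step, the final embedding of the masked position can be compared with one output vector per vocabulary word, and the resulting scores turned into probabilities with a softmax. The three-word vocabulary, the vectors, and the function name `predict_masked_word` are invented for illustration. A real model learns these vectors and uses a vocabulary of tens of thousands of entries.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_masked_word(embedding, output_vectors):
    """Score every vocabulary word against the masked position's embedding."""
    vocab = list(output_vectors)
    scores = [
        sum(e * w for e, w in zip(embedding, output_vectors[word]))
        for word in vocab
    ]
    return dict(zip(vocab, softmax(scores)))

# Hypothetical toy values, chosen by hand for the example.
output_vectors = {
    "green": [1.0, 0.2, 0.0],
    "blue": [0.1, 1.0, 0.0],
    "loud": [0.0, 0.1, 1.0],
}
masked_embedding = [2.0, 0.5, -1.0]

probs = predict_masked_word(masked_embedding, output_vectors)
best = max(probs, key=probs.get)
loss = -math.log(probs["green"])  # small when the correct word is probable
print(best, round(loss, 3))
```

Training lowers this loss, which is the same as raising the probability assigned to the word that was actually masked.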

Properties of BERT

The BERT base model, with twelve layers, has a total of 110 million parameters. BERT-Large, with 24 layers, has 340 million parameters. In addition to predicting masked words, BERT was also trained to predict whether the next sentence is the actual subsequent sentence or a randomly selected one. However, this task had little impact on the model’s prediction capabilities.
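The parameter totals can be roughly reproduced with a little arithmetic. The sketch below assumes details from the published BERT configurations that are not stated in this post: a vocabulary of 30,522 entries, two segment types, a feed-forward width of four times the embedding length, an embedding length of 1,024 for BERT-Large, and a final pooling layer. It counts the encoder only and leaves out the prediction heads used during pretraining.

```python
def bert_encoder_parameters(hidden, layers, vocab=30522, max_positions=512, segments=2):
    """Count weights and biases of a BERT-style encoder (assumed layout)."""
    ffn = 4 * hidden          # width of the fully connected layer
    layer_norm = 2 * hidden   # one scale and one shift value per component

    # Word, position, and segment embeddings, followed by a layer normalization.
    embeddings = (vocab + max_positions + segments) * hidden + layer_norm
    # Query, key, value, and output projections of the association modules.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two fully connected transformations with biases.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm
    # Final pooling layer on top of the first token.
    pooler = hidden * hidden + hidden

    return embeddings + layers * (attention + feed_forward) + pooler

base = bert_encoder_parameters(hidden=768, layers=12)
large = bert_encoder_parameters(hidden=1024, layers=24)
print(f"{base:,}")
print(f"{large:,}")
```

Under these assumptions the counts come to about 109.5 million and 335.1 million, which is consistent with the rounded figures of 110 and 340 million.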

For BERT training, the English Wikipedia and a book corpus, together comprising 3.3 billion words, were used. The text was represented with a vocabulary of about 30,000 common words and word parts; rare words are composed of several word parts. No human annotations were required for the texts, so the training counts as unsupervised learning [German post link: https://lamarr-institute.org/de/blog/welche-arten-von-maschinellem-lernen-gibt-es/]. Sequences of up to 512 tokens (words and word parts) could be processed. The training used the gradient descent method [German post link: https://lamarr-institute.org/de/blog/optimierung-im-maschinellen-lernen/] and, for BERT-Large, took four days on 64 TPU chips, Google’s specialized processors for machine learning. The input embedding vectors for all words and word parts are learned during this training as well, because gradient descent adjusts them together with the other parameters.
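How a rare word is composed of word parts can be sketched with a greedy longest-match split. The toy vocabulary below is made up for the example, and the “##” prefix for parts that continue a word follows the convention of BERT’s published vocabularies, which this post does not describe. The real vocabulary was learned from the training corpus.

```python
def wordpiece(word, vocab, unknown="[UNK]"):
    """Split a word into the longest matching vocabulary entries, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shorten it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation of the word
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unknown]  # no vocabulary entry fits at this position
        pieces.append(piece)
        start = end
    return pieces

# Hypothetical toy vocabulary for illustration only.
toy_vocab = {"the", "play", "##ing", "##ed", "un", "##believ", "##able"}
print(wordpiece("the", toy_vocab))           # ['the']
print(wordpiece("playing", toy_vocab))       # ['play', '##ing']
print(wordpiece("unbelievable", toy_vocab))  # ['un', '##believ', '##able']
```

Each resulting piece has its own embedding vector, so even words that never appeared in the training text can be represented.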

BERT has shown excellent results in predicting masked words, and additional layers significantly increase accuracy. For example, when 15% of the words in the training data are masked, BERT predicts the original words (or word parts) with an accuracy of 45.9%, even though in many cases several different words would be plausible at a given position.

BERT Allows Representing the Strength of Associations Between Words

It is possible to graphically represent the strength of associations between individual words for the trained model. These associations naturally depend on the input words and the composition of the individual embedding vectors. Each association module considers different aspects and establishes relationships between different words.

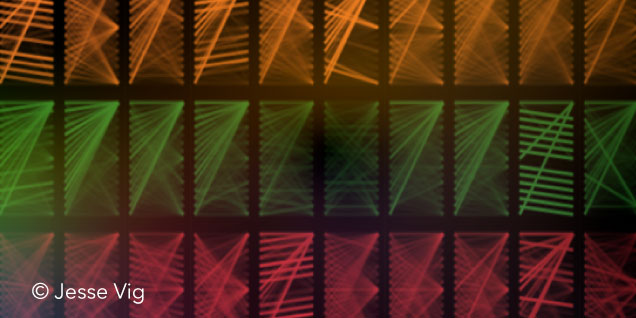

Representations of associations between words in different association modules.

The above graphic illustrates the associations of two modules in layer 8 for different texts. Here, “[CLS]” symbolizes the beginning of the text, and “[SEP]” indicates the end of a sentence. The two left graphics contain the association between a direct object and a verb (red lines). In the right graphics, the red lines represent the association of adjectives and articles with the noun. During training, it was observed that these association modules focused on representing syntactic relationships, while other association modules took on complementary tasks. The assignment of representation tasks to association modules emerges from randomly chosen initial parameters.
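The strengths drawn as lines in such graphics are the weights an association module assigns between pairs of words. A minimal sketch, assuming the usual scaled dot-product form with a softmax over each row, looks as follows. The query and key vectors here are hand-picked toy values, whereas in the trained model they are derived from the embedding vectors with learned parameters.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def association_weights(queries, keys):
    """Return one row per word with its association strength to every word."""
    scale = math.sqrt(len(keys[0]))
    return [
        softmax([sum(q * k for q, k in zip(query, key)) / scale for key in keys])
        for query in queries
    ]

tokens = ["the", "cat", "sat"]
# Hypothetical toy vectors, one query and one key per word.
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
keys = [[1.0, 0.0], [0.0, 2.0], [0.5, 0.5]]

weights = association_weights(queries, keys)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each row sums to 1, so a thick line in a visualization corresponds to a large share of one word’s total association weight.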

The following graphic shows, for the text “[CLS] the cat sat on the mat [SEP] the cat lay on the rug [SEP],” the structure of all association modules of the twelve layers. It is evident that the special symbols “[CLS]” and “[SEP]” play a significant role in many association modules. On the other hand, there are several associations with very different shapes that establish specific relationships between the words of the input text.

Overview of all associations of different association modules for the sentence from the third illustration

BERT Allows a Reliable Interpretation of Word Meanings

During training, BERT is tasked with completing sentences like “The leaves on the trees are [MASK].” Thus, in addition to the syntactic rules of language, BERT must also grasp substantive connections. It has been shown that the BERT model and its derivatives can acquire a wealth of knowledge about language and its meaning through unsupervised training. These models can then be adapted to specific semantic tasks through supervised fine-tuning with relatively little training data. The prediction accuracy achievable through this transfer learning is significantly higher than with earlier approaches, as the BERT model can interpret linguistic patterns much better. This is demonstrated in the series of articles on language understanding through transfer learning. The monograph by Paaß and Hecker (2020) provides a broad, easily understandable overview of transfer learning and deep neural networks.

Key Takeaways about BERT

The construction of the BERT model was based on the observation that the spelling of a word reveals little about its meaning. The meaning of words is therefore represented by embedding vectors. Because the meaning of a word depends on the surrounding words, context-dependent embeddings are required. They can be determined, for example, through a series of association modules.

The BERT model consists of a series of layers in which different association modules are calculated in parallel, producing meaningful embedding vectors. The parameters of these association modules are optimized so that masked words are predicted as accurately as possible. Because the entire text is evaluated for each prediction, the model captures many syntactic and semantic relationships in natural language. Through fine-tuning, many problems in the interpretation of natural language can be solved with this model. The model demonstrates that a bidirectionally trained language model can understand the meaning of words better than one that uses only the preceding words as context. The applications are diverse and will be presented in upcoming blog posts.

More information can be found in the corresponding publications:

Devlin, J. et al. 2018: BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, PDF.

Manning, C. 2019: Emergent linguistic structure in deep contextual neural word representations. Talk at the Workshop on Theory of Deep Learning: Where next?, Link.

Paaß, G. and Hecker, D. 2020: Künstliche Intelligenz: Was steckt hinter der Technologie der Zukunft? Springer Nature, Wiesbaden, Link [in German].

Rönnqvist, S. et al. 2019: Is multilingual BERT fluent in language generation? arXiv preprint arXiv:1910.03806, PDF.

Vig, J. 2019: BERTVIZ: A Tool for Visualizing Multihead Self-Attention in the BERT Model. ICLR 2019 Debugging Machine Learning Workshop, PDF.