Point clouds are among the most widely used 3D data formats, and point cloud recognition has become an area of increasing research focus. However, as deep learning models continue to improve, the growing complexity of their architectures raises concerns about their trustworthiness. This is especially true for data such as point clouds, which are often used in safety-critical applications like autonomous driving. To address this challenge, a previous blog post presented a surrogate model-based explanation method for point clouds. In this article, we present further research on the explainability of point clouds.

The Explainability of Point Clouds

The main goal of explainability research is to make the decisions of machine learning models more transparent and interpretable to humans, and different explainability methods offer different pathways to this goal. One example is LIME for point clouds, an attribution-based local explainability method that answers the question of which parts of an instance are critical for a specific decision. However, it is difficult to understand the prediction principles of deep neural networks from a single perspective. Attribution-based explanations lack ground truth, which makes their correctness hard to assess. Local explanations also provide no insight into how the model behaves on the entire dataset. To address these limitations, we propose two methods that reveal the decision basis of point cloud models from different perspectives: a global explainability method based on Activation Maximization and a non-deep learning approach based on fractal windows.

Global Representativeness

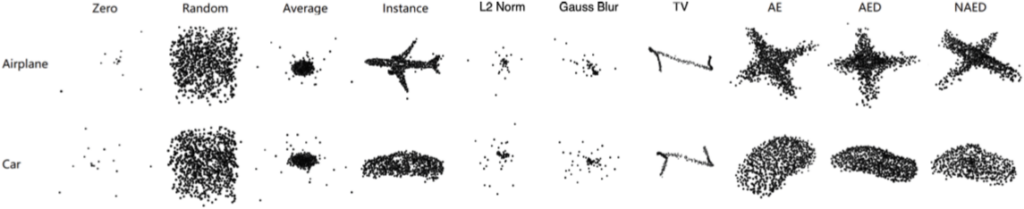

A fascinating question in deep learning is what a model considers to be the ideal input. A previous study proposed Activation Maximization (AM), an instance-based approach to visualizing global representations. The basic idea behind AM is to perform gradient ascent on the input to maximize a specific neuron in the highest layer of the model (the logits). The resulting input has the highest confidence and is considered the most globally representative instance. This technique has been applied extensively to images, where it can generate objects with clear outlines.
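To make the idea concrete, here is a minimal NumPy sketch of AM. It assumes a hypothetical toy classifier whose logits are a linear function of the flattened point cloud, so the gradient can be written by hand. A real point cloud network would need automatic differentiation. The L2 penalty `l2` is also an assumption, a common regularizer in AM that keeps the input from growing without bound. The function names are illustrative only.

```python
import numpy as np

def activation_maximization(weights, target_class, num_points=64,
                            steps=1000, lr=0.1, l2=0.1, seed=0):
    """Gradient ascent on the input of a hypothetical linear point cloud classifier.

    Toy model: logits = weights @ x.ravel() + bias, with x an (num_points, 3)
    point cloud. We maximize logits[target_class] - l2 * ||x||^2; the L2 term
    keeps the optimized input bounded.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(scale=0.01, size=(num_points, 3))  # random initial point cloud
    w_c = weights[target_class].reshape(num_points, 3)
    for _ in range(steps):
        # Analytic gradient of the objective with respect to the input points
        # (the bias does not depend on x, so it drops out).
        grad = w_c - 2.0 * l2 * x
        x = x + lr * grad  # ascent: move along the gradient
    return x

def logits_of(weights, bias, x):
    """Logits of the toy linear classifier for a point cloud x."""
    return weights @ x.ravel() + bias
```

For this linear toy model the iteration converges to `w_c / (2 * l2)`. With a real network, the same loop would take its gradients from automatic differentiation instead.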

However, using AM to visualize the global representations of point cloud models poses additional challenges, because point clouds are unordered, irregular sets of points rather than regular pixel grids. To create a point cloud-specific, global, instance-based explanation, we focus on three main aspects:

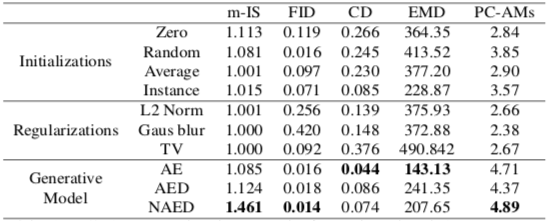

- Representativeness: the extent to which the explanation is globally representative. This depends on the activation level of the selected neurons; a higher confidence score indicates a more globally representative input.

- Perceptibility: how easily humans can perceive the explanation, which is crucial. Generally, the closer the explanation is to an existing object, the easier it is to perceive. We evaluate this quantitatively by measuring the explanation's similarity to samples of the same category in the dataset.

- Diversity: the variety of the explanations, which gives a more intuitive understanding of the model's internal mechanisms. A larger number of non-repetitive explanations contributes to a better understanding of the model's decision-making process.
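The three aspects above can be approximated with simple measurements, as in the sketch below. Several choices here are assumptions for illustration and not the metric proposed in the paper: the softmax probability stands in for representativeness, and the Chamfer distance is the similarity measure for both perceptibility and diversity. All function names are hypothetical.

```python
import numpy as np
from itertools import combinations

def softmax(z):
    z = z - np.max(z)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def representativeness(logits, target_class):
    """Confidence (softmax probability) the classifier assigns to the target class."""
    return float(softmax(np.asarray(logits, dtype=float))[target_class])

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3)."""
    d = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)  # (N, M) squared distances
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())

def perceptibility_distance(explanation, same_class_samples):
    """Distance to the closest same-class dataset sample (lower = more perceptible)."""
    return min(chamfer_distance(explanation, s) for s in same_class_samples)

def diversity(explanations):
    """Mean pairwise Chamfer distance between explanations (higher = more diverse)."""
    pairs = list(combinations(explanations, 2))
    if not pairs:
        return 0.0
    return float(np.mean([chamfer_distance(a, b) for a, b in pairs]))
```

How these three numbers are weighted into a single score is left open here.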

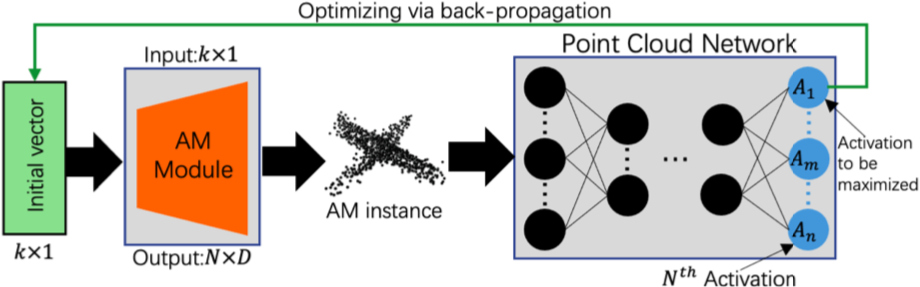

To address the challenges of applying Activation Maximization to point cloud models, we first restrict the search to the subset of the data that humans can easily recognize. Within this subset, we search for samples that are highly representative of the entire data distribution. Our approach to global explanation combines a generative model with a point cloud classifier (more on classification in machine learning in this blog post, in German).

The generative model is an autoencoder with a few key modifications:

- a discriminator that distinguishes generated samples from ground truth samples

- two latent distance losses that control the quality of the generated samples in the latent spaces

- Gaussian noise added to the encoder during training to stabilize generation

The generative model is connected in front of the classifier to be explained, and a specific neuron of the classifier is selected (normally a logit, which indicates a specific class). A random initial vector is then fed into the generator. Global explanation samples that are both perceptible and representative are produced by minimizing a loss function defined as the difference between the generation loss and the target activation value. We also add random noise during optimization to promote diversity and avoid repeatedly generating the same result.
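The loop below is a minimal sketch of this optimization. It assumes a hypothetical linear decoder and a linear classifier so that gradients can be written by hand. It also assumes that the latent vector is what gets optimized. The L2 prior `gen_weight * ||z||^2` is a stand-in for the actual generation loss (discriminator and latent distance losses), which is not reproduced here. The function and parameter names are illustrative.

```python
import numpy as np

def generate_global_explanation(decoder, clf_weights, target_class, latent_dim,
                                steps=300, lr=0.05, gen_weight=0.5,
                                noise_std=0.01, seed=None):
    """Optimize a latent vector so the decoded point cloud maximizes one logit.

    Hypothetical toy setup: `decoder` is a matrix mapping z (latent_dim,) to a
    flattened point cloud, and the classifier logit is linear in that output.
    The generation loss is replaced by the stand-in prior gen_weight * ||z||^2.
    """
    rng = np.random.default_rng(seed)
    z = rng.normal(size=latent_dim)  # random initial vector
    w_c = clf_weights[target_class]
    for _ in range(steps):
        # loss = generation loss - target activation
        #      = gen_weight * ||z||^2 - w_c . (decoder @ z)
        grad = 2.0 * gen_weight * z - decoder.T @ w_c
        z = z - lr * grad  # gradient descent on the loss
        z = z + rng.normal(scale=noise_std, size=latent_dim)  # noise for diversity
    return (decoder @ z).reshape(-1, 3), z
```

Running it with different seeds yields slightly different explanations for the same class, which is the role the injected noise plays in the text above.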

Because point clouds are structurally distinct from images, regular AM techniques developed for images cannot produce meaningful explanations for point clouds. In contrast, the proposed method generates highly perceptible and representative 3D structures for specific classes. To facilitate comparison, we propose a quantitative evaluation metric that incorporates all three essential elements: representativeness, perceptibility, and diversity.

Inherent Interpretability

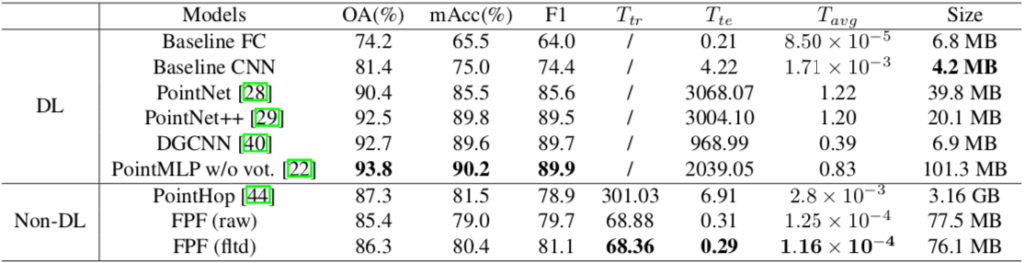

An alternative to post-hoc explainability methods is to increase the transparency of the model itself. This approach can reduce computational costs and avoid potential biases that may be introduced by explainability methods. However, explainable models often have lower performance compared to black-box ones. Most point cloud models are based on deep neural networks because they are capable of learning complex spatial features. Nonetheless, researchers have pointed out that it is feasible to achieve similar performance using an interpretable model that can extract effective features.

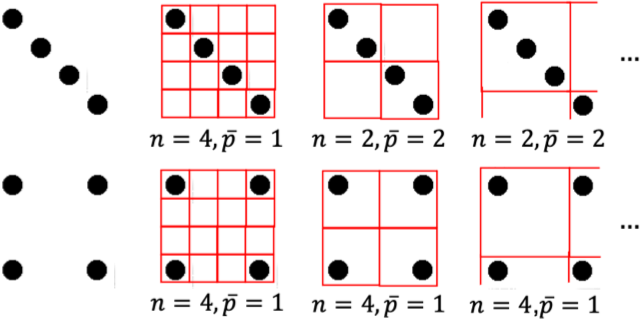

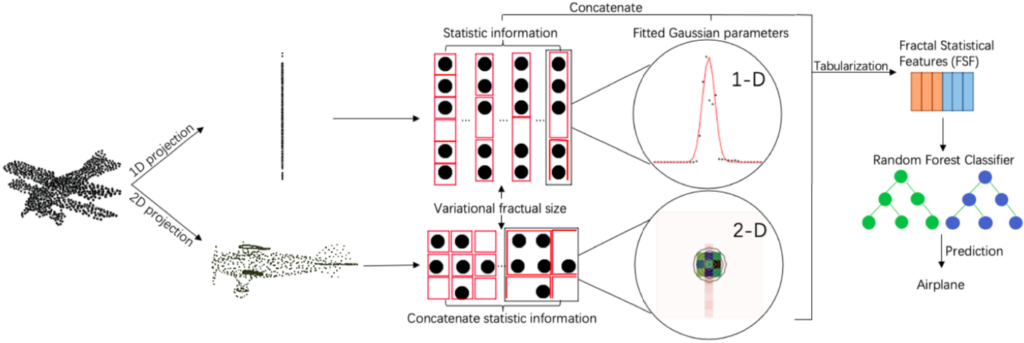

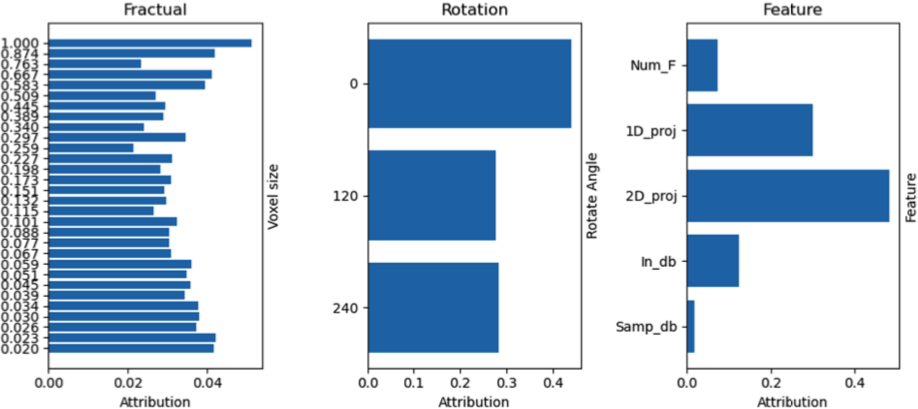

We present a feature extraction technique for point clouds called "fractal windows". This method samples points with different window sizes to generate a sequence of windows. It then extracts statistical features from each window to obtain tabular features. The statistical features form a freely customizable vector and can include, for example, the point count, mean, and variance of each window. With this approach, the intricate spatial structure of a point cloud is transformed into tabular data, which is better suited for interpretable models.
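The sketch below shows one plausible reading of this procedure. It assumes the windows are built by recursively halving the normalized range of a 1D projection, giving window sizes 1, 1/2, 1/4, and so on. It also assumes count, mean, and variance as the per-window statistics. The exact windowing scheme in the paper may differ, and the function name and `levels` parameter are hypothetical.

```python
import numpy as np

def fractal_window_features(coords, levels=3):
    """Statistical features of recursively halved windows over a 1D projection.

    coords: 1D array of point coordinates along one axis (a 1D projection).
    At level l the normalized range [0, 1] is split into 2**l equal windows;
    for every window we record the point count, mean, and variance.
    """
    coords = np.asarray(coords, dtype=float)
    lo, hi = coords.min(), coords.max()
    u = (coords - lo) / (hi - lo) if hi > lo else np.zeros_like(coords)
    features = []
    for level in range(levels):
        n_windows = 2 ** level
        # Window index of each point; the right edge (u == 1) goes to the last window.
        idx = np.minimum((u * n_windows).astype(int), n_windows - 1)
        for w in range(n_windows):
            inside = u[idx == w]
            if inside.size:
                features.extend([inside.size, inside.mean(), inside.var()])
            else:
                features.extend([0, 0.0, 0.0])  # empty window
    return np.array(features, dtype=float)
```

Applying the function to the X, Y, and Z projections of a point cloud and concatenating the results gives one row of a feature table, with `3 * (2**levels - 1)` values per axis.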

The classification process can be divided into four main steps. First, the input is augmented (e.g., rotated) and projected onto one- and two-dimensional spaces along the X, Y, and Z axes. Second, fractal windows are generated, and hand-crafted statistical features are extracted from them. Third, Gaussian fitting is used to estimate the distributions of the one- and two-dimensional fractal windows. Finally, all fractal features are concatenated and fed into a random forest to obtain predictions.
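Steps one and three can be sketched as follows. Several parts of this are assumptions for illustration. Rotation is about the Z axis only. Each Gaussian is fitted to the coarsest fractal window, which is the whole projection, for brevity; the same fit would be applied to each sub-window. A fitted Gaussian is summarized by its mean and (co)variance. All function names are hypothetical.

```python
import numpy as np

def rotate_z(points, angle):
    """Rotate an (N, 3) point cloud around the Z axis (augmentation step)."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T

def projections(points):
    """1D projections onto X, Y, Z and 2D projections onto the XY, XZ, YZ planes."""
    one_d = {axis: points[:, i] for i, axis in enumerate("xyz")}
    two_d = {"xy": points[:, [0, 1]], "xz": points[:, [0, 2]], "yz": points[:, [1, 2]]}
    return one_d, two_d

def gaussian_fit_1d(values):
    """Fit a 1D Gaussian: return (mean, standard deviation)."""
    return np.array([values.mean(), values.std()])

def gaussian_fit_2d(values):
    """Fit a 2D Gaussian: return the mean vector and the 3 unique covariance entries."""
    mean = values.mean(axis=0)
    cov = np.cov(values, rowvar=False)
    return np.concatenate([mean, cov[np.triu_indices(2)]])

def gaussian_features(points):
    """Concatenate Gaussian fits of all 1D and 2D projections (21 values)."""
    one_d, two_d = projections(points)
    parts = [gaussian_fit_1d(v) for v in one_d.values()]
    parts += [gaussian_fit_2d(v) for v in two_d.values()]
    return np.concatenate(parts)
```

Stacking such vectors for all samples, together with the fractal window statistics, would give the table passed to a random forest such as scikit-learn's `RandomForestClassifier`.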

Overview: the structure of the fractal window-based classifier. The image is from this paper: https://openaccess.thecvf.com/content/WACV2023/papers/Tan_Fractual_Projection_Forest_Fast_and_Explainable_Point_Cloud_Classifier_WACV_2023_paper.pdf

Our non-deep learning approach achieves performance comparable to that of deep learning models. It can also be trained and executed on a CPU with acceptable processing times, which reduces computational costs compared to deep learning methods.

Random forests are more transparent than black-box neural networks, although they are still not fully comprehensible models. Intrinsic interpretations can be derived from Gini impurity, with no additional post-hoc computation. Post-hoc explanations can also be used. Because training is much faster, the model can easily be retrained many times, which mitigates the out-of-distribution problem caused by perturbations (see ROAR, RemOve And Retrain).
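The quantity behind such Gini-based interpretations is the impurity decrease of a single split, shown in the sketch below. The helper names are hypothetical. In practice, scikit-learn's `RandomForestClassifier` aggregates these decreases automatically and exposes the result as the impurity-based `feature_importances_` attribute.

```python
import numpy as np

def gini_impurity(labels):
    """Gini impurity 1 - sum_k p_k^2 of a set of class labels."""
    labels = np.asarray(labels)
    if labels.size == 0:
        return 0.0
    _, counts = np.unique(labels, return_counts=True)
    p = counts / labels.size
    return float(1.0 - np.sum(p ** 2))

def impurity_decrease(feature, labels, threshold):
    """Weighted Gini decrease from splitting a node on feature <= threshold.

    Summing these decreases over every split that uses a feature (each weighted
    by the fraction of samples reaching that node) and averaging over all trees
    gives the mean-decrease-in-impurity importance of that feature.
    """
    feature, labels = np.asarray(feature), np.asarray(labels)
    left = labels[feature <= threshold]
    right = labels[feature > threshold]
    n = labels.size
    children = (left.size / n) * gini_impurity(left) + (right.size / n) * gini_impurity(right)
    return gini_impurity(labels) - children
```

A feature that cleanly separates the classes yields a large decrease, while an uninformative feature yields none. This is why the resulting importances can serve as an intrinsic explanation.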

Finally, the proposed classification approach is highly customizable, since any statistical feature can be concatenated. In the future, we plan to explore feature processing methods that could potentially outperform deep neural networks.

Toolkit – Explaining Point Cloud Models

Found this information useful and want to try it out yourself? The code is available on GitHub at https://github.com/Explain3D/PointCloudAM and https://github.com/Explain3D/FracProjForest.

Instructions for setting up the working environment can be found in the repositories. For more information on the proposed approaches and experiments, please refer to the corresponding papers:

- Visualizing Global Explanations of Point Cloud DNNs. Tan, Hanxiao. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2023.

- Fractual Projection Forest: Fast and Explainable Point Cloud Classifier. Tan, Hanxiao. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2023.