Using simple language brings clarity to everyday written language and opens the door to a broader audience, including children, language learners, and people with cognitive impairments. In our previous exploration of text simplification, we underscored the lack of diversity in available datasets. To address this gap, we created a dataset in German and Simple German comprising 708 aligned documents and over 10,000 matched sentences. Join us as we delve into the details of the creation process and point out a few intricacies of the data collection and of the evaluation of our sentence alignments.

A new aligned Simple German corpus

This year we published the first publicly accessible aligned Simple German dataset. What does „aligned“ mean? Our dataset is aligned on two levels. First, it pairs German articles with their counterparts in either Simple German or Plain German (we described the difference between these two forms of simplification in our last blog post). Second, beyond this article alignment, we analyze different methods for sentence alignment, a problem that is still under active research: for each sentence in a German article, we want to find all sentences in the Simple German article that would constitute its translation. In this way, our dataset is aligned on both the article and the sentence level. In this blog post, we explain these methods and our forms of evaluation in detail.

© Vanessa Toborek

Tracing the data trail: The five steps of our data creation process

Our dataset was created in the five-step process outlined in Figure 1. In step one, “web search”, we conducted an extensive web search for bilingual internet resources that cover diverse topics. In our case, „bilingual“ refers to German and some form of Simple German. With the help of internet crawlers, we automatically extracted the textual data from these websites. We had to discard a few of the bilingual resources we found because their highly inconsistent website structure made them impossible to crawl. This left us with eight internet resources covering a range of topics, from general health information and information about various social services to German news articles. To future-proof our dataset against the dynamic nature of the internet, in step two, “archiving”, we archived all articles with the Wayback Machine of the Internet Archive. In step three, “crawling”, we crawled the entire websites, which resulted in HTML files that still contain the original formatting of the articles. In step four, “parsing”, we parsed these HTML files to extract the raw text. In doing so, we ignored images, HTML tags, and the corresponding formatting metadata such as bold text and paragraph borders. Additionally, we transformed enumerations, which are frequent in simple language, into comma-separated text. The resulting article-aligned dataset forms the foundation for the sentence alignment in step five.
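To make step four more concrete, here is a minimal sketch of such a parser built on Python's standard-library `html.parser`. It is not the code we used for the corpus: the class name `ArticleTextExtractor`, the tags it treats as paragraphs and lists, and the choice to join list items with commas are illustrative assumptions. Images and inline formatting such as `<b>` carry no text data, so they simply drop out, while the items of `<ul>`/`<ol>` enumerations are flattened into one comma-separated block.

```python
from html.parser import HTMLParser


class ArticleTextExtractor(HTMLParser):
    """Sketch: collect raw text blocks, drop tags and images, flatten lists."""

    BLOCK_TAGS = {"p", "h1", "h2", "h3", "h4", "h5", "h6"}
    LIST_TAGS = {"ul", "ol"}
    SKIP_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.blocks = []    # extracted text blocks in document order
        self._buffer = []   # text of the paragraph or list item being read
        self._items = None  # list items collected while inside <ul>/<ol>
        self._skip = 0      # > 0 while inside <script> or <style>

    def _flush(self):
        # Join the buffered pieces and normalise all whitespace to single spaces.
        text = " ".join("".join(self._buffer).split())
        self._buffer = []
        return text

    def handle_starttag(self, tag, attrs):
        # <img>, <b>, <strong>, ... need no handling: they carry no text data.
        if tag in self.SKIP_TAGS:
            self._skip += 1
        elif tag in self.LIST_TAGS:
            self._items = []

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS:
            self._skip -= 1
        elif tag == "li" and self._items is not None:
            item = self._flush()
            if item:
                self._items.append(item)
        elif tag in self.LIST_TAGS and self._items is not None:
            if self._items:
                # The whole enumeration becomes one comma-separated text block.
                self.blocks.append(", ".join(self._items))
            self._items = None
        elif tag in self.BLOCK_TAGS and self._items is None:
            text = self._flush()
            if text:
                self.blocks.append(text)

    def handle_data(self, data):
        if self._skip == 0:
            self._buffer.append(data)


html = """
<html><body>
<h1>Gesund bleiben</h1>
<p>Das ist <b>wichtig</b>. <img src="bild.png" alt="Bild"></p>
<ul><li>Obst</li><li>Gemüse</li><li>Wasser</li></ul>
<script>var x = 1;</script>
</body></html>
"""
parser = ArticleTextExtractor()
parser.feed(html)
parser.close()
print(parser.blocks)  # ['Gesund bleiben', 'Das ist wichtig.', 'Obst, Gemüse, Wasser']
```

Real crawler output would need more care, for example with nested lists, `<br>` line breaks, or text placed directly inside `<div>` elements, none of which this sketch handles.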

Precision in sentence alignment: Unpacking step five

Automatic sentence alignment starts from a German article and its Simple German counterpart, which typically consist of different numbers of sentences. The goal is to create a list of sentence pairs, in which each pair consists of a German sentence and its (partial) simple version. Sentence alignment involves three stages:

- transforming the raw text into sentence lists and performing some light pre-processing,

- computing similarity scores, and, finally,

- employing a sentence matching algorithm.

We first split the articles into sentences with the Python library spaCy and then apply some light pre-processing. We remove all punctuation, in particular the hyphens between the parts of compound nouns in Simple German. We convert the text to lowercase only for the similarity measures based on TF-IDF vectors; for the measures based on pre-computed word vectors, we keep the original case, because these vectors differ for words such as „essen“ (to eat) and „Essen“ (food). Finally, we remove all gender-conscious suffixes from the German articles. By these, we mean word endings used in inclusive language to address women as well as other genders, not endings that turn a masculine noun into its feminine form. We remove these endings because they are not used in Simple German texts. This pre-processing only serves to facilitate the sentence alignment and does not affect our final corpus.

The next step is to compute similarity measures. To calculate and compare the similarity of sentences, we need a numerical representation of them. There are different approaches to transforming text into numbers, and the eight similarity measures we compared cover three of them: TF-IDF vectors, word embeddings, and sentence embeddings. Once the sentences are transformed into vectors, each similarity measure uses the cosine similarity in one way or another to compare them. The first group of similarity measures („4-gram“, „bow“) transforms the sentences into TF-IDF vector representations. These vectors weight each word in a sentence by how often it occurs in a given document (term frequency) and by how many articles in the entire corpus contain it (inverse document frequency: the fewer articles contain a word, the higher its weight). The second group („avg“, „cosine“, „bipartite“, „CWASA“, „max“) uses pre-trained language models that provide a vector representation, a word embedding, for each word. The measures in this group differ in how they combine the word representations into a single sentence representation.
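The pre-processing and the first group of similarity measures can be sketched in a few lines of plain Python. This is a hedged illustration, not our pipeline: it assumes the sentences have already been split (for example with spaCy), the regular expression for gender-conscious endings covers only a few common spellings and is itself an assumption, and so are the smoothed IDF formula and the choice to count term frequencies within each sentence. All function names are hypothetical.

```python
import math
import re
from collections import Counter

# Assumed set of gender-conscious endings ("Lehrer*innen", "Schüler:in",
# "LehrerInnen", ...); plain feminine forms like "Freundin" do not match.
GENDER_SUFFIX = re.compile(r"(?<=[a-zäöüß])(?:[*:_/]-?in(?:nen)?|In(?:nen)?)\b")
# Anything that is neither a word character nor whitespace, plus "_".
PUNCTUATION = re.compile(r"[^\w\s]|_")


def preprocess(sentence, lowercase, remove_gender_suffixes):
    """Return the tokens of one (already split) sentence."""
    text = sentence
    if remove_gender_suffixes:        # only applied to the German articles
        text = GENDER_SUFFIX.sub("", text)
    text = PUNCTUATION.sub("", text)  # "Bundes-Tag" -> "BundesTag"
    if lowercase:                     # only for the TF-IDF measures
        text = text.lower()
    return text.split()


def inverse_document_frequency(articles):
    """Smoothed IDF (an assumption) over a corpus given as one token list per article."""
    n = len(articles)
    df = Counter()
    for tokens in articles:
        df.update(set(tokens))
    return {w: math.log((1 + n) / (1 + c)) + 1 for w, c in df.items()}


def tfidf_vector(tokens, idf):
    """Sparse TF-IDF vector of one sentence; unseen words get weight 0."""
    tf = Counter(tokens)
    return {w: count * idf.get(w, 0.0) for w, count in tf.items()}


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(w, 0.0) for w, weight in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


german = preprocess("Die Lehrer*innen erklären den Bundestag.", True, True)
simple = preprocess("Die Lehrer erklären den Bundes-Tag.", True, False)
print(german)  # ['die', 'lehrer', 'erklären', 'den', 'bundestag']
idf = inverse_document_frequency([german, simple])
print(round(cosine(tfidf_vector(german, idf), tfidf_vector(simple, idf)), 3))  # 1.0
```

After pre-processing, the hyphenated Simple German compound and the gendered German noun collapse onto the same lowercase tokens, which is exactly why these steps help the TF-IDF measures.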

© Vanessa Toborek

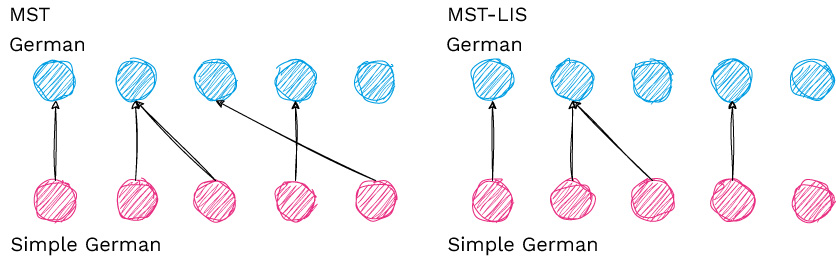
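To illustrate how measures in the second group can differ only in how they pool word vectors, the sketch below compares two aggregations over the same toy embeddings. The vectors are made up, `average_similarity` follows the common average-then-cosine idea, and `best_match_similarity` is a hypothetical alternative included for contrast; neither is claimed to reproduce the exact „avg“ or „max“ measures from our paper. Lookups are case-sensitive, as in our pre-processing for word vectors.

```python
import math

# Hypothetical 3-dimensional toy vectors; a real setup would load pre-trained
# embeddings. Lookups are case-sensitive: "essen" (to eat) != "Essen" (food).
WORD_VECTORS = {
    "Wir": [0.1, 0.3, 0.0],
    "essen": [0.9, 0.1, 0.2],
    "Essen": [0.2, 0.8, 0.1],
    "Brot": [0.3, 0.7, 0.2],
    "gern": [0.0, 0.2, 0.9],
}


def cosine(u, v):
    """Cosine similarity of two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def lookup(tokens):
    """Word vectors for all tokens that have one; unknown words are skipped."""
    return [WORD_VECTORS[t] for t in tokens if t in WORD_VECTORS]


def average_similarity(tokens_a, tokens_b):
    """Mean-pool the word vectors of each sentence, then compare the two means."""
    va, vb = lookup(tokens_a), lookup(tokens_b)
    if not va or not vb:
        return 0.0
    mean_a = [sum(dim) / len(va) for dim in zip(*va)]
    mean_b = [sum(dim) / len(vb) for dim in zip(*vb)]
    return cosine(mean_a, mean_b)


def best_match_similarity(tokens_a, tokens_b):
    """Hypothetical alternative: match each word in a to its closest word in b, then average."""
    va, vb = lookup(tokens_a), lookup(tokens_b)
    if not va or not vb:
        return 0.0
    return sum(max(cosine(x, y) for y in vb) for x in va) / len(va)


a = ["Wir", "essen", "gern", "Brot"]
b = ["Wir", "essen", "Brot"]
print(average_similarity(a, b), best_match_similarity(a, b))
```

Unlike the averaged variant, the best-match score is asymmetric, so swapping the two sentences can change the result.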

The third group consists of a single similarity measure, which uses the pre-trained language model Sentence-BERT (SBERT) to calculate sentence embeddings directly.

Lastly, we use the lists of sentences and their similarity scores to compute the final sentence matchings. For each similarity measure, we calculate the similarity between every sentence in the German article and every sentence in the Simple German article. We then compare two sentence matching algorithms: Most Similar Text (MST) and MST with Longest Increasing Sequence (MST-LIS). Both algorithms pick the most similar Simple German sentence for each German sentence. While MST does so naively, MST-LIS additionally considers the order of information in each article and only matches two sentences if the match does not violate the order of the previously matched sentences. With eight similarity measures and two matching algorithms, we arrive at 16 different ways to calculate our final sentence alignments. In the following, we show how we evaluated the quality of each approach.
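The two matching algorithms can be sketched on top of a similarity matrix `sim`, where `sim[i][j]` is the similarity between German sentence `i` and Simple German sentence `j`. `mst` simply takes the row-wise maximum. For `mst_lis`, we assume one common way of enforcing the order constraint: keep the longest subsequence of MST matches whose Simple German indices never decrease, so that several German sentences may still share one simple sentence. The function names, the non-strict ordering, and the tie-breaking between equally long subsequences are assumptions of this sketch.

```python
from bisect import bisect_right


def mst(sim):
    """Most Similar Text: pair each German sentence i with its most similar Simple German sentence j."""
    return [(i, max(range(len(row)), key=row.__getitem__))
            for i, row in enumerate(sim) if row]


def keep_order(pairs):
    """Longest subsequence of (i, j) pairs whose j values never decrease (patience sorting)."""
    tails = []                # tails[k]: smallest j that ends a kept run of length k + 1
    tail_pos = []             # position in `pairs` of that tail
    prev = [-1] * len(pairs)  # predecessor links for reconstructing the run
    for pos, (_, j) in enumerate(pairs):
        k = bisect_right(tails, j)
        if k > 0:
            prev[pos] = tail_pos[k - 1]
        if k == len(tails):
            tails.append(j)
            tail_pos.append(pos)
        else:
            tails[k] = j
            tail_pos[k] = pos
    kept, pos = [], (tail_pos[-1] if tail_pos else -1)
    while pos != -1:
        kept.append(pairs[pos])
        pos = prev[pos]
    return kept[::-1]


def mst_lis(sim):
    """MST followed by dropping matches that break the order of the articles."""
    return keep_order(mst(sim))


sim = [
    [0.9, 0.1, 0.0, 0.2],  # German 0 -> Simple 0
    [0.1, 0.2, 0.3, 0.6],  # German 1 -> Simple 3 (jumps ahead)
    [0.1, 0.8, 0.2, 0.0],  # German 2 -> Simple 1
    [0.0, 0.1, 0.7, 0.3],  # German 3 -> Simple 2
]
print(mst(sim))      # [(0, 0), (1, 3), (2, 1), (3, 2)]
print(mst_lis(sim))  # [(0, 0), (2, 1), (3, 2)]
```

In this toy example, MST keeps the out-of-order match of German sentence 1, while MST-LIS drops it; this trade of recall for precision is what makes MST-LIS the more restrictive algorithm.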

© Vanessa Toborek

Alignment Quality: A dual evaluation approach

We follow a two-fold approach to evaluate the different sentence alignment algorithms on our newly created dataset. First, we created gold-standard alignments for 39 articles and calculated the F1 score of each method against them. The F1 score is the harmonic mean of precision and recall, so it takes into account not only how many of the computed sentence matches are correct, but also how many potential sentence matches were missed. Complementary to this first evaluation, we sampled over 4,500 potential sentence matches; for each pair of sentences, an annotator made a yes/no decision on whether the two sentences are a (partial) match. While this kind of evaluation allows no conclusions about the number of missed matches (i.e., recall) or about the relation to explanatory sentences, we argue that it offers a different perspective on the quality of the computed alignments. For a more detailed discussion of the two manual evaluation methods, please refer to our publication „A New Aligned Simple German Corpus“.

Figure 2 shows the results for both evaluation methods. The results are similar, but let’s look at them more closely. In general, the more restrictive matching algorithm MST-LIS achieves higher accuracy and precision. Further, the methods using TF-IDF vector representations are among the lowest-performing methods. The similarity measures SBERT and max take turns performing best, depending on the evaluation method. Our final recommendation leans towards employing the max similarity measure in combination with MST-LIS, prioritizing precision over recall in our sentence alignments. Nonetheless, given the overall F1 scores, we recommend using these sentence alignments with caution, as we expect many misaligned sentence pairs. Figure 4 shows example alignments from our corpus.
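Both evaluation perspectives boil down to simple counts. The sketch below, with hypothetical function names and (German index, Simple German index) pairs as input, computes precision, recall, and F1 against a gold standard, as well as the share of sampled pairs that annotators accepted. The latter estimates precision but, as noted above, says nothing about recall.

```python
def precision_recall_f1(predicted, gold):
    """Compare predicted (german_idx, simple_idx) pairs with gold-standard pairs."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    return precision, recall, 2 * precision * recall / (precision + recall)


def sample_precision(judgments):
    """Share of sampled pairs that an annotator marked as a (partial) match (True)."""
    return sum(judgments) / len(judgments) if judgments else 0.0


predicted = [(0, 0), (2, 1), (3, 2)]
gold = [(0, 0), (1, 1), (2, 1), (3, 2), (3, 3)]
print(precision_recall_f1(predicted, gold))
print(sample_precision([True, True, False, True]))  # 0.75
```

Here every predicted pair is correct (precision 1.0), but two gold pairs are missed (recall 0.6), which pulls the F1 score down to about 0.75.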

© Vanessa Toborek

Closing Insights: Navigating the Final Corpus

In this blog post, we took you with us on our journey to create a new corpus in German and Simple German and showed you how to create a dataset from scratch using online resources. The machine learning models we train are only as good as the datasets they are trained on. For this reason, we always have to be aware of the potential biases inherent in our data, especially in data collected from the internet. We therefore release the dataset with an accompanying Datasheet, a structured document that addresses potential biases by answering questions about the motivation, the data acquisition, maintenance, and much more. Beyond its primary use for training machine learning models for text simplification, the dataset can also serve as a versatile monolingual corpus, with applications such as latent space disentanglement and the fine-tuning of pre-trained models.

If you want to delve deeper, read our publication „A New Aligned Simple German Corpus“.