As a form of computer vision data, point clouds are widely used for the navigation and localization of self-driving cars and robots because they represent 3D scenes so well. In these fields, safety and reliability are major concerns: Accidents caused by decisions of Artificial Intelligence (AI) systems must be avoided as far as possible. So far, however, both the diagnosis of models by AI practitioners and users' trust in AI decisions remain challenging. Most Machine Learning (ML) models are so opaque that one cannot fully retrace the basis on which they make decisions, which also makes it difficult to adjust a model according to the causes of its failures. Research on the trustworthiness of point cloud models, i.e., on their explainability, has therefore become urgent. Unfortunately, compared to images, very little research has been done to date on the explainability of models that process point clouds. Our work hence aims to adapt an existing explainability method, LIME, to point clouds and to improve the reliability of the generated explanations by exploiting the properties of point cloud networks.

Point cloud deep neural networks

Just as images are composed of pixels, point cloud instances consist of individual points as their fundamental unit. Typically, a point carries two types of information in six dimensions: The first three dimensions are spatial coordinates, representing the position along the X, Y and Z axes, and the last three contain color information, expressed as a conventional RGB value. Unlike a pixel, whose position is given implicitly by its index in the image, a point's position is expressed explicitly through its coordinates. This leads to the problem of disorder: The physical adjacency of the points in a point cloud instance is independent of the order in which they are stored in the data. Convolutional kernels, however, rely on neighboring inputs being stored at neighboring indices, so traditional CNNs cannot be applied directly. Fortunately, new point cloud neural networks have been proposed in recent years, and their performance has been improving steadily.
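To make the disorder problem concrete, here is a minimal NumPy sketch of a PointNet-style design: a shared per-point transformation followed by a symmetric max pooling. It is not a real point cloud network; the random weights and the function `toy_point_net` are hypothetical. Because the maximum ignores the order of the points, shuffling the rows of the (N, 6) array leaves the output unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)

# A point cloud with N points, each with 6 values: x, y, z, r, g, b.
points = rng.random((1024, 6))

# Hypothetical shared per-point layer: the same weights are applied to every point.
W = rng.standard_normal((6, 64))
b = rng.standard_normal(64)

def toy_point_net(pc: np.ndarray) -> np.ndarray:
    """Map an (N, 6) point cloud to a 64-dimensional global feature."""
    per_point = np.maximum(pc @ W + b, 0.0)  # shared linear layer + ReLU, shape (N, 64)
    return per_point.max(axis=0)             # symmetric max pooling over the N points

shuffled = points[rng.permutation(len(points))]
print(np.allclose(toy_point_net(points), toy_point_net(shuffled)))  # True
```

The same function also accepts a cloud with any number of points, since the pooling step collapses the point dimension regardless of N.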

Explainability methods

Due to safety concerns about AI applications, the explainability of Machine Learning models has been gaining attention as an emerging research area (related discussions can be found in: Why AI need to be explainable). Explainability methods can be broadly divided into global and local methods, depending on what is being explained. Global explanations are intended to give the user an overview, e.g., the general decision rationale of the model or the overall data distribution. Local explanations are dedicated to explaining the basis of the model's decision for an individual instance.

Popular local explainability methods fall into two categories: gradient-based and perturbation-based. Gradient-based approaches require access to the internal structure of a Machine Learning model and are therefore also known as white-box methods; examples are Layer-Wise Relevance Propagation (LRP), Taylor Decomposition and Integrated Gradients. Perturbation-based approaches infer feature importance solely from the relationship between the model's inputs and outputs and are therefore referred to as black-box methods; examples are KernelShap and LIME.

In the following, we focus on LIME (Local Interpretable Model-agnostic Explanations), a powerful explainability method that can, in principle, explain any black-box model. Given an instance, LIME first generates a large number of mutations around it by perturbation. These mutations are then fed into the model to be explained to obtain its predictions. Using the mutations and their predictions, each weighted by its proximity to the original instance, LIME trains a simple, interpretable linear surrogate model that mimics the decisions of the model to be explained as closely as possible in the vicinity of the given instance. Finally, the weights of the linear surrogate model indicate the feature importance underlying the original model's prediction.
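The four steps of LIME can be illustrated with a minimal NumPy sketch. It assumes the instance has already been split into `d` interpretable features (for example superpixels or superpoints), represents each perturbation as a binary mask over those features, and uses a hypothetical `toy_model` in place of a real network. The proximity measure (fraction of features switched off), the exponential kernel and the weighted least-squares fit are common choices, not necessarily those used in the toolkit.

```python
import numpy as np

def lime_weights(black_box, d, num_samples=1000, kernel_width=0.25, seed=0):
    """Fit a weighted linear surrogate around the unperturbed instance.

    black_box: maps an (n, d) array of binary masks to n prediction scores.
    Returns one weight per interpretable feature (its estimated importance).
    """
    rng = np.random.default_rng(seed)
    # 1. Sample perturbations: each row switches a random subset of features off.
    masks = rng.integers(0, 2, size=(num_samples, d))
    masks[0] = 1  # include the unperturbed instance itself
    # 2. Query the model to be explained.
    preds = black_box(masks)
    # 3. Weight samples by proximity: fraction of removed features -> exponential kernel.
    distance = 1.0 - masks.mean(axis=1)
    weights = np.exp(-(distance ** 2) / kernel_width ** 2)
    # 4. Weighted least squares with an intercept column.
    X = np.hstack([masks, np.ones((num_samples, 1))])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], preds * sw, rcond=None)
    return coef[:-1]  # drop the intercept

# Hypothetical black box: feature 2 matters most, feature 0 slightly, the rest not at all.
true_effect = np.array([0.1, 0.0, 0.8, 0.0, 0.0])
toy_model = lambda m: m @ true_effect
print(np.argsort(-lime_weights(toy_model, d=5))[:2])  # [2 0]
```

Because the toy model is exactly linear, the surrogate recovers its coefficients; for a real network, the weights only approximate its behavior near the instance.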

Graphical representation of the LIME method: Positive and negative examples (the Xs and Os) are sampled around the instance to be explained (the bold X) and used to train a locally explainable surrogate model (the dashed line).

LIME for point clouds

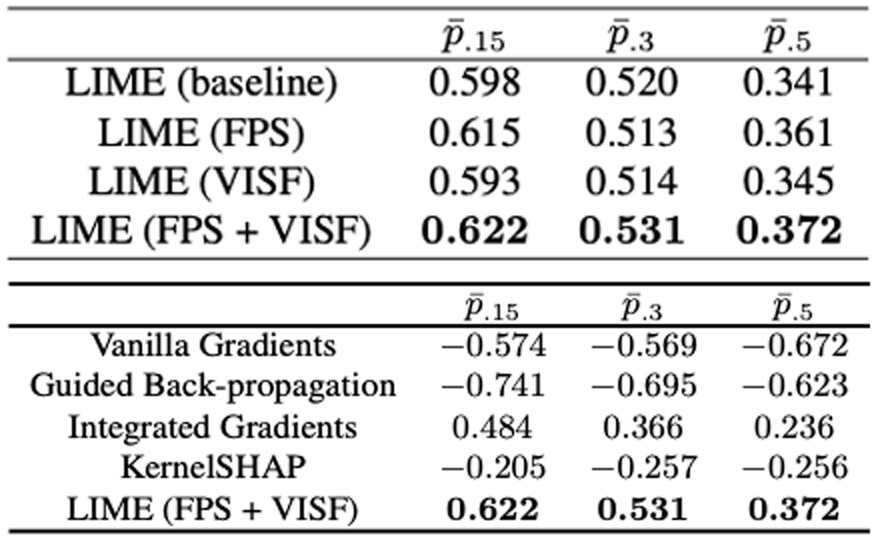

Although LIME can explain any model, the plausibility of its explanations can be further enhanced by strategically exploiting the characteristics of point cloud networks. In our work, we incorporate two main techniques to improve the performance of the surrogate models: Farthest Points Sampling and Variable Input Size Flipping.

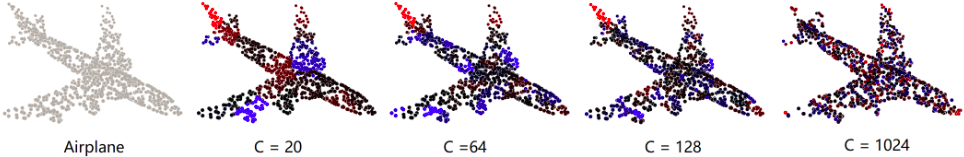

A demonstration of the input instance and its four explanations with different sizes of superpoints. The red and blue portions represent positive and negative attributions to the classification, respectively.

Farthest Points Sampling (FPS)

As with images, where treating each pixel as a separate input feature would greatly increase the computational complexity, treating each point as a feature is hardly feasible in practice; for high-resolution inputs in particular, the exploding processing time is not acceptable to the user. The existing solution for images is to group clustered pixels into superpixels and treat each superpixel as one feature; analogously, points can be grouped into superpoints. However, this raises the question: How does one cluster superpoints more sensibly?

In images, semantic segmentation can provide suitable superpixels, but this approach is unfortunately not applicable to point clouds. The most intuitive alternative is random segmentation. However, if the segmentation is not uniform, the main attributions will be concentrated on the large superpoints. Such explanations are of no use, since it is common sense that segments containing more points are more important. To address this issue, we use the K-means clustering algorithm initialized with FPS: A starting point is selected at random, and each subsequent point, the (k+1)-th, is the point farthest from the k points already selected. This simple and intuitive procedure forces the initial K-means centers to be uniformly distributed over the surface of the instance. In our experiments, this module improved the plausibility of the explanations.
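A minimal NumPy sketch of this idea, assuming only the xyz coordinates are used for clustering: `farthest_point_sampling` picks K well-spread seeds as described above, and a plain Lloyd-style K-means loop then refines them into superpoints. The function names, the fixed iteration count and the random test cloud are illustrative assumptions, not taken from the toolkit.

```python
import numpy as np

def farthest_point_sampling(xyz, k, seed=0):
    """Return indices of k points: a random start, then repeatedly the point
    farthest from all points chosen so far."""
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(xyz)))]
    # Distance from every point to its nearest already-chosen point.
    min_dist = np.linalg.norm(xyz - xyz[chosen[0]], axis=1)
    for _ in range(k - 1):
        nxt = int(np.argmax(min_dist))
        chosen.append(nxt)
        min_dist = np.minimum(min_dist, np.linalg.norm(xyz - xyz[nxt], axis=1))
    return np.array(chosen)

def superpoints_kmeans_fps(xyz, k, iters=10, seed=0):
    """K-means clustering of the points, initialized with FPS seeds.
    Returns one superpoint label in [0, k) per point."""
    centers = xyz[farthest_point_sampling(xyz, k, seed)].copy()
    for _ in range(iters):
        # Assign every point to its nearest center.
        d2 = ((xyz[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        for c in range(k):
            members = xyz[labels == c]
            if len(members):
                centers[c] = members.mean(axis=0)
    return labels

xyz = np.random.default_rng(1).random((2048, 3))
labels = superpoints_kmeans_fps(xyz, k=16)
print(labels.shape, len(np.unique(labels)))
```

Seeding K-means this way, rather than with uniformly random centers, spreads the initial clusters across the whole shape, which is what keeps superpoint sizes comparable.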

Variable Input Size Flipping (VISF)

When perturbing images, one often faces a difficult choice: What value should replace the perturbed features? Existing studies have proposed several options, such as zero values, randomly sampled numbers or the average of the instances. These seem intuitive, but they lack a rigorous theoretical basis, and choosing by intuition may seriously impair the plausibility of the explanation. We overcome this issue by exploiting the structural properties of point cloud networks. Since the points in a point cloud are not indexed, point cloud networks impose no requirement on the size of the input. Therefore, the points to be perturbed can simply be removed from the input point cloud, so there is no need to worry about residual information left behind by a substitution value.
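A minimal NumPy sketch of this removal-based perturbation, assuming superpoint labels such as those produced by a clustering step and a binary mask over superpoints as in LIME. The function name `visf_perturb` and the random labels are hypothetical; the resulting smaller cloud would be passed directly to a network such as PointNet, which accepts any number of points.

```python
import numpy as np

def visf_perturb(points, labels, mask):
    """Variable Input Size Flipping (sketch): instead of overwriting the points
    of switched-off superpoints with a substitute value, drop them entirely.

    points: (N, 6) array (xyz + rgb); labels: (N,) superpoint id per point;
    mask: (K,) binary array, 1 = keep superpoint, 0 = remove it.
    """
    keep = mask[labels].astype(bool)  # per-point keep flag looked up from its superpoint
    return points[keep]               # a smaller point cloud with no residual values

rng = np.random.default_rng(0)
points = rng.random((1000, 6))
labels = rng.integers(0, 4, size=1000)  # hypothetical superpoint assignment
mask = np.array([1, 0, 1, 1])           # remove superpoint 1
perturbed = visf_perturb(points, labels, mask)
print(perturbed.shape[0] == (labels != 1).sum())  # True
```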

Quantitative Evaluations

Our work uses two quantitative metrics for surrogate model-based explainability approaches: local fidelity and plausibility. Local fidelity assesses how closely the behavior of the surrogate model matches that of the model being explained. It is straightforward to measure: One simply monitors the difference between the prediction scores of the surrogate model and of the explained model on the perturbed training dataset. Plausibility examines how accurately the generated explanation reflects the basis of the prediction; it is rather difficult to determine, as no ground-truth explanation is available. The ablation test is an intuitive option: An explanation is deemed reliable if the model's confidence in its prediction drops significantly after the part with the largest attribution in the explanation has been perturbed. Analogously, we also use VISF in the point cloud ablation test, which makes the ablation more thorough because no residual information remains in the ablated examples.
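Both metrics can be sketched in a few lines of NumPy. The mean absolute difference used for local fidelity and the single-number confidence drop for the ablation test are assumptions about how the comparisons could be aggregated; the toy `model` below is a hypothetical stand-in for a classifier's confidence in its predicted class, and the ablated points are removed VISF-style.

```python
import numpy as np

def local_fidelity(surrogate_scores, model_scores):
    """Mean absolute difference between the surrogate's and the explained model's
    prediction scores on the perturbed training samples (lower = more faithful)."""
    return float(np.mean(np.abs(np.asarray(surrogate_scores) - np.asarray(model_scores))))

def ablation_drop(model, points, labels, attributions, n_remove=1):
    """Confidence drop after removing the n_remove superpoints with the largest
    attribution. model maps an (M, 6) cloud to a confidence score."""
    top = np.argsort(-np.asarray(attributions))[:n_remove]
    keep = ~np.isin(labels, top)
    return model(points) - model(points[keep])

rng = np.random.default_rng(0)
points = rng.random((1000, 6))
labels = (points[:, 0] > 0.5).astype(int)              # superpoint 1 = points with large x
model = lambda pc: float((pc[:, 0] > 0.5).mean())      # hypothetical confidence score
print(ablation_drop(model, points, labels, [-0.2, 0.9]) > 0)  # True: a plausible explanation
```

Removing the superpoint the explanation marks as most important should lower the confidence; swapping the attributions makes the confidence rise instead, which the ablation test would flag as an implausible explanation.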

Toolkit – Explaining Point Cloud Networks

Our toolkit of surrogate model-based explainability methods for point clouds is available on GitHub:

The whole project is built on PyTorch and has been tested on Python 3.6 with PyTorch 1.7.0. The default classification model is PointNet, but since the surrogate model-based explainability approach is model-agnostic, PointNet can be replaced with any other point cloud classifier. We also provide the evaluation tools described in the article; the entire evaluation is performed on the ModelNet40 dataset.

Further information can be found in the corresponding paper:

Surrogate Model-Based Explainability Methods for Point Cloud NNs

Hanxiao Tan, Helena Kotthaus. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2022.