Human-centred Machine Learning strives for a tight coupling of human and computer intelligence and a synergistic collaboration between ML models and human users. To take advantage of human intelligence in developing ML models, the ML paradigm known as interactive Machine Learning engages human users in a tight loop of iterative modifications of data and/or features aimed at improving model performance. To play an active role in model development, users need to understand what the machine is doing and how their inputs will affect the model’s behaviour. Understanding ML models is also critical for deciding whether they can be adopted for practical use. Whilst this problem is being addressed in the research field of Explainable AI (XAI), there is growing recognition that model “explainability” does not necessarily mean that the model is actually explained to and understood by humans. XAI methods often do not explain models in the terms and concepts that humans use in their reasoning; as a result, the explanations cannot be linked to human mental models. Another problem is that a model that is interpretable in theory may be incomprehensible in practice because of its size and complexity.

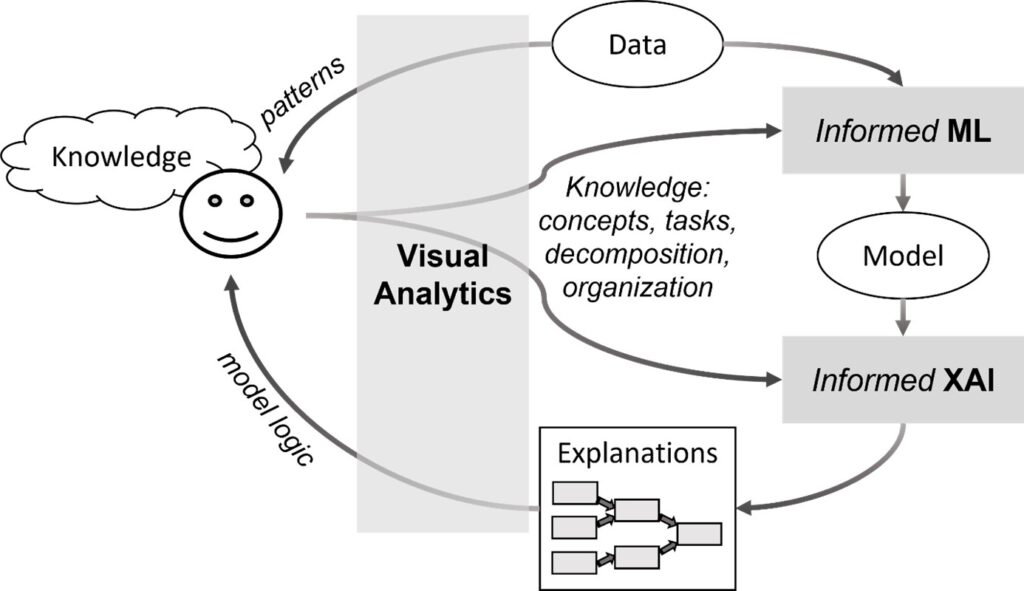

The grand challenge that human-centred ML aims to solve is to bridge the gap between ML methods and human minds, i.e., to develop approaches in which Machine Learning, on the one hand, adapts to human goals, concepts, values, and ways of thinking, and, on the other hand, takes advantage of the power of human perception and intelligence. Visual Analytics (VA) can contribute to solving this challenge by exploiting the power of visualization, which is not only an effective way of communicating information to humans, but also a powerful trigger for human abstractive perception and thinking.

Visual analytics for informed ML and XAI

Visual Analytics is a natural research partner of Machine Learning and Artificial Intelligence, both for involving users in ML processes and for explaining ML to users. Combining human and machine intelligence is the central idea of VA. While Machine Learning focuses more on the machine side and strives to make use of human intelligence to improve ML models, VA takes the human perspective and regards machine intelligence as an aid to human reasoning. In human-centred ML, Visual Analytics can facilitate communication between humans and computers in the development, use, and explanation of ML models.

Informed ML means involving prior knowledge in the process of deriving models from data. It is typically assumed that this knowledge is explicitly represented in a machine-processable form. Visual Analytics can support acquiring knowledge from a human expert, including the expert’s prior knowledge and new knowledge gained by the expert through interactive visual data analysis. Following the paradigms of interactive ML and informed ML, models are developed in close interaction between Machine Learning algorithms and humans, so that human knowledge and human-defined concepts are transferred to the algorithms and used to build computer models. This process is supported by interactive visual interfaces provided by Visual Analytics. The knowledge and concepts acquired from human experts are used not only to build the models but also to generate explanations of the models for users. The methods for doing this, which still need to be developed, can be called “informed XAI”, by analogy with informed ML.

Rule generalisation for structured representation of model logic

An ML model that is “transparent by design”, or a transparent mimic model intended to explain a black box, may consist of a very large number of components, such as rules or decision tree nodes. While each component alone can easily be understood by a human, the whole construct may be far beyond human comprehension. Therefore, the model logic needs to be presented to humans at a suitable level of abstraction that allows for an overview while keeping all details accessible on demand. Our idea of informed XAI envisages an XAI component that learns from a human expert how to organise model components into meaningful information granules at suitable levels of abstraction.

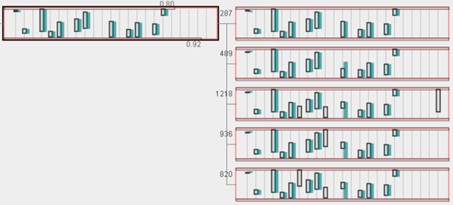

As a step towards this long-term goal, we have explored possibilities for abstracted representations of rule-based models. The idea is to represent groups of similar rules by more general union rules. A union rule not only replaces multiple original rules but also typically contains fewer logical conditions than each of the original rules. Thus, the resulting set of union rules, even when supplemented with possible exceptions, is more comprehensible. In this way, we construct a simplified descriptive model of the transparent but practically incomprehensible ML model. The achievable degree of simplification is not restricted by the requirement to preserve the prediction quality of the original model; this distinguishes our procedure from the multitude of known approaches for training more compact rule-based models. Union rules of the descriptive model can be explored in detail by tracing the hierarchy of more specific rules that were involved in their derivation.
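The following is a minimal sketch of union rules, not the procedure from the paper. It assumes that each rule is a conjunction of closed feature intervals with a single predicted class, that a union rule takes the per-feature interval hull of its member rules, and that an exception is an other-class rule whose region intersects the union rule. The class `Rule` and the functions `unite`, `overlaps`, and `exceptions` are hypothetical illustrations, as are the feature names and values.

```python
from __future__ import annotations

from dataclasses import dataclass, field

Interval = tuple[float, float]  # closed value interval [low, high]


@dataclass
class Rule:
    """IF every feature lies in its interval THEN predict `label`.

    Features missing from `conditions` are unconstrained.
    """
    conditions: dict[str, Interval]
    label: str
    children: list[Rule] = field(default_factory=list)  # rules this one generalises

    def leaves(self) -> list[Rule]:
        """Trace the hierarchy down to the original, most specific rules."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]


def unite(rules: list[Rule]) -> Rule:
    """Form a union rule whose region contains the regions of all `rules`.

    Each shared feature gets the hull [min low, max high] of its intervals.
    A feature that some member rule leaves unconstrained would get the hull
    (-inf, +inf), so its condition is dropped; the union rule therefore has
    no more conditions than the shortest member rule.
    """
    labels = {r.label for r in rules}
    if len(labels) != 1:
        raise ValueError("expected a non-empty group of rules with one label")
    shared = set.intersection(*(set(r.conditions) for r in rules))
    conditions = {
        f: (min(r.conditions[f][0] for r in rules),
            max(r.conditions[f][1] for r in rules))
        for f in sorted(shared)
    }
    return Rule(conditions, labels.pop(), children=list(rules))


def overlaps(a: dict[str, Interval], b: dict[str, Interval]) -> bool:
    """True if two rule regions intersect (a missing feature is unbounded)."""
    return all(a[f][0] <= b[f][1] and b[f][0] <= a[f][1] for f in set(a) & set(b))


def exceptions(union: Rule, rules: list[Rule]) -> list[Rule]:
    """Rules predicting another label inside the union rule's region."""
    return [r for r in rules
            if r.label != union.label and overlaps(union.conditions, r.conditions)]


# Hypothetical example rules
r1 = Rule({"age": (20, 30), "income": (10, 20), "debt": (0, 5)}, "approve")
r2 = Rule({"age": (25, 40), "income": (15, 30)}, "approve")
r3 = Rule({"age": (35, 45), "income": (12, 18), "debt": (2, 4)}, "approve")
r4 = Rule({"age": (40, 60), "income": (0, 12)}, "reject")
r5 = Rule({"age": (50, 70)}, "reject")

u = unite([r1, r2, r3])
print(u.conditions)  # {'age': (20, 45), 'income': (10, 30)}
print(len(u.leaves()))  # 3
print([r.conditions for r in exceptions(u, [r1, r2, r3, r4, r5])])
# [{'age': (40, 60), 'income': (0, 12)}]
```

Because the interval hull also covers gaps between the member rules, a union rule can claim regions where the original model predicts another class; listing such other-class rules as exceptions is one simple way to account for this. Calling `unite` on union rules builds the next level of the hierarchy, and `leaves()` recovers the original rules behind any union rule.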

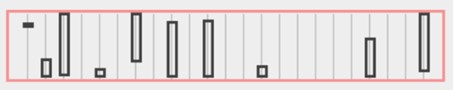

The idea of rule generalisation can be illustrated by representing each rule visually as a rectangular glyph containing multiple vertical axes, one for each feature involved in the model. Vertical bars on these axes represent the value intervals of the features used in the rule.
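To make the glyph encoding concrete, here is a small text-mode sketch. It assumes that each feature axis spans a known value range (for instance, the feature's minimum and maximum in the training data) and that a rule's interval is drawn as a bar at normalised positions along that axis. The functions `bar_extent` and `render_glyph`, the feature names, and the character-based drawing are hypothetical; an actual glyph would be drawn graphically.

```python
import math

Interval = tuple[float, float]


def bar_extent(interval: Interval, axis: Interval) -> tuple[float, float]:
    """Map a rule's value interval onto its feature axis, normalised to [0, 1].

    Unbounded ends (+/-math.inf) are clipped to the ends of the axis.
    Assumes axis[1] > axis[0].
    """
    lo_axis, hi_axis = axis
    span = hi_axis - lo_axis

    def norm(v: float) -> float:
        return min(1.0, max(0.0, (v - lo_axis) / span))

    return norm(interval[0]), norm(interval[1])


def render_glyph(conditions: dict[str, Interval],
                 axes: dict[str, Interval], height: int = 4) -> list[str]:
    """Draw a rule as text rows: one vertical axis per feature in `axes`.

    '#' marks cells covered by the rule's interval, '|' the bare axis;
    values grow upwards. A feature the rule does not use shows no bar.
    """
    extents = {f: bar_extent(conditions[f], axes[f]) for f in axes if f in conditions}
    rows = []
    for level in range(height - 1, -1, -1):  # top row first
        cell_lo, cell_hi = level / height, (level + 1) / height
        cells = []
        for f in axes:
            ext = extents.get(f)
            # a cell is filled if the bar overlaps it with positive length
            filled = ext is not None and ext[0] < cell_hi and ext[1] > cell_lo
            cells.append("#" if filled else "|")
        rows.append(" ".join(cells))
    rows.append(" ".join(f[0] for f in axes))  # first letter of each feature
    return rows


axes = {"age": (0, 80), "income": (0, 100), "debt": (0, 10)}
rule = {"age": (20, 40), "income": (50, math.inf)}  # income >= 50, debt unused
print("\n".join(render_glyph(rule, axes)))
# | # |
# | # |
# | |
# | | |
# a i d
```

Wait to read the printed rows correctly: they are `| # |`, `| # |`, `# | |`, `| | |` and `a i d`, i.e. the age bar covers the second quarter of its axis, the income bar the upper half, and the debt axis stays empty. Overlaying the bars of several rules on the same normalised axes is what makes similar rules visually recognisable.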

The accompanying image shows how a group of similar rules (represented by the glyphs on the right) is united to form a more general union rule (the glyph on the left). The cyan-shaded bars in the glyphs represent the feature value intervals of the union rule.
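The text does not specify how rule similarity is measured. One plausible choice, sketched here purely as an assumption, compares the bars of two rules axis by axis using the interval Jaccard index (overlap length over hull length, with an unused feature treated as spanning its whole axis), averages this over the features involved, and links same-label rules whose similarity reaches a threshold (single linkage). The names `interval_jaccard`, `rule_similarity`, `group_similar`, the threshold value, and the example rules are hypothetical.

```python
from itertools import combinations

Interval = tuple[float, float]
Rule = tuple[dict[str, Interval], str]  # (feature -> interval, predicted label)


def interval_jaccard(a: Interval, b: Interval) -> float:
    """Overlap length divided by the length of the hull of two intervals."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    hull = max(a[1], b[1]) - min(a[0], b[0])
    return 1.0 if hull == 0 else inter / hull


def rule_similarity(a: dict[str, Interval], b: dict[str, Interval],
                    axes: dict[str, Interval]) -> float:
    """Mean interval Jaccard over the features used by either rule.

    A feature a rule does not use is treated as spanning its whole axis.
    """
    features = set(a) | set(b)
    if not features:
        return 1.0
    return sum(interval_jaccard(a.get(f, axes[f]), b.get(f, axes[f]))
               for f in features) / len(features)


def group_similar(rules: list[Rule], axes: dict[str, Interval],
                  threshold: float = 0.5) -> list[list[int]]:
    """Single-linkage grouping: same-label rules with similarity >= threshold
    are linked, and linked rules (transitively) form one group of indices."""
    parent = list(range(len(rules)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in combinations(range(len(rules)), 2):
        (ci, li), (cj, lj) = rules[i], rules[j]
        if li == lj and rule_similarity(ci, cj, axes) >= threshold:
            parent[find(i)] = find(j)

    groups: dict[int, list[int]] = {}
    for i in range(len(rules)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


axes = {"age": (0, 80), "income": (0, 100)}
rules = [
    ({"age": (20, 40), "income": (10, 30)}, "approve"),
    ({"age": (20, 60), "income": (10, 30)}, "approve"),
    ({"age": (60, 80)}, "approve"),
    ({"age": (20, 40), "income": (10, 30)}, "reject"),
]
print(rule_similarity(rules[0][0], rules[1][0], axes))  # 0.75
print(group_similar(rules, axes))  # [[0, 1], [2], [3]]
```

Each resulting group is a candidate for replacement by one union rule. Single linkage can chain rules that are only indirectly similar into one group; requiring every pair in a group to pass the threshold is an equally reasonable, stricter design choice.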

As next steps, we want to develop approaches for involving a human expert in the process of building comprehensible descriptive models. A human expert can not only supervise the process of organising and abstracting model components but also organise low-level features used in the model into higher-level domain concepts that are more meaningful to users.

Our ideas are presented in more detail in the following paper:

Natalia Andrienko, Gennady Andrienko, Linara Adilova, and Stefan Wrobel: Visual Analytics for Human-Centered Machine Learning. IEEE Computer Graphics & Applications, 2022, vol. 42(1), pp. 123-133.