In the previous blog posts of the RL for robotics series, we explored the concept of Guided RL and discussed its key features using three different robotic tasks. In particular, we introduced the Guided RL taxonomy, a modular toolbox for integrating different sources of knowledge into the RL pipeline. This blog post explores the first practical deployment of Guided RL on a real-world robotics challenge: learning dynamic locomotion for a two-wheeled robot.

evoBOT Robot – A Research Platform for Highly Dynamic Motions

© Fraunhofer IML

The evoBOT, developed at Fraunhofer IML, is a dynamically stable system based on the principle of an inverted compound pendulum (see Fig. 1). Unlike conventional robots, it does not require an external counterweight to maintain its balance. Its design allows it to keep itself balanced at all times, so the robot can navigate varied and uneven surfaces, including slopes. Its compact and lightweight design further enhances its versatility and mobility, and its high agility and flexibility make it suitable for a wide range of applications, including collaborative tasks beyond the traditional logistics context. As a modular system, the evoBOT can be customized to meet specific requirements, and this adaptability makes it well suited for complex urban environments.

In contrast to existing solutions that are limited to simple operations such as pushing and pulling logistical goods, the evoBOT offers a wide range of functionalities that can be combined and upgraded as needed. These include handling and transporting objects, as well as turning them around. The system’s bio-inspired design also makes it more approachable, lowering the barrier to interaction between humans and robots. Given these advantages, the evoBOT can serve as a personal assistant for humans in a variety of settings, including environments where traditional robots may not be able to operate effectively. As a result, the evoBOT has the potential to change the way humans interact with technology and to open up new applications and use cases for robotics.

Guided RL Taxonomy – Selected Methods for the Application

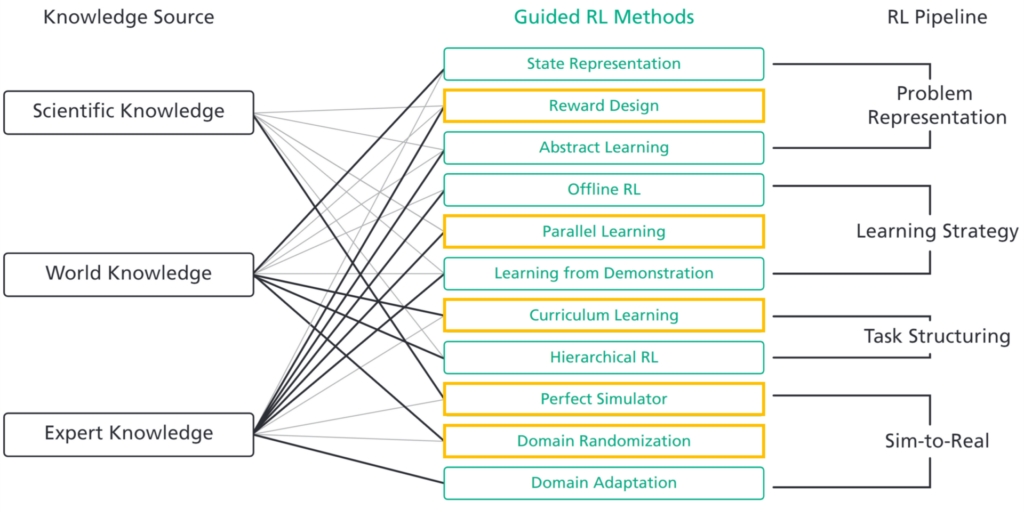

We explore learning-based control approaches for the task of dynamic locomotion of the evoBOT. In particular, the underlying neural network computes wheel velocities that realize the commanded linear and angular velocities of the robot. Following the Guided RL taxonomy (see previous blog post), we adopt a specific combination of Guided RL methods to accelerate training and improve the chances of success in the real-world application (see Fig. 2).
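Because the policy outputs wheel velocities rather than body velocities, it helps to recall how the two are related. The sketch below uses the standard differential-drive kinematics of a two-wheeled base. It ignores the pitch motion of the balancing body, which the learned policy has to handle on top of this relation, and the class name `DiffDrive` as well as the wheel radius and track width are hypothetical placeholders, not evoBOT parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiffDrive:
    """Differential-drive kinematics; parameter values are hypothetical placeholders."""
    wheel_radius: float  # r in m
    track_width: float   # b, distance between the wheel contact points in m

    def wheel_speeds(self, v: float, omega: float) -> tuple[float, float]:
        """Maps a body command (v in m/s, omega in rad/s) to (left, right) wheel speeds in rad/s."""
        half = omega * self.track_width / 2.0
        return (v - half) / self.wheel_radius, (v + half) / self.wheel_radius

    def body_velocity(self, left: float, right: float) -> tuple[float, float]:
        """Inverse mapping from (left, right) wheel speeds back to (v, omega)."""
        v = self.wheel_radius * (left + right) / 2.0
        omega = self.wheel_radius * (right - left) / self.track_width
        return v, omega
```

For example, `DiffDrive(0.1, 0.4).wheel_speeds(0.5, 1.0)` gives left and right wheel speeds of about 3 rad/s and 7 rad/s: a 0.5 m/s forward command combined with a 1 rad/s turn.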

- Reward Design: To encode the desired behavior of the learned locomotion controller, we use a dense reward function. It combines multiple optimization criteria: rewards for linear and angular velocity tracking, as well as penalties for falling over, fast changes in actions, and energy consumption.

- Parallel Learning: To train control policies in simulation, we use Isaac Gym, a recent GPU-based physics simulation framework for robot learning. Thanks to Isaac Gym’s efficient parallelization scheme, we collect experience from a total of 4096 robot instances that are trained simultaneously.

- Curriculum Learning: To further accelerate training, we learn the task step by step. In particular, we increase the range of velocity commands the robot has to achieve linearly over the course of training. This decomposes the locomotion task into first learning to balance the robot and then gradually moving with ever-increasing linear and angular velocities.
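To make the reward design and the curriculum more concrete, here is a minimal plain-Python sketch. It assumes an exponential kernel for velocity tracking (a common choice in RL locomotion work, not necessarily the one used for evoBOT), uses mechanical motor power as an energy proxy, and ramps the sampled command range linearly from zero (pure balancing) to 1 m/s and 1 rad/s. All weights, scales, and function names (`reward`, `command_limits`, `sample_command`) are hypothetical. In practice, such terms would be evaluated in batch for all 4096 parallel environments on the GPU.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical weights and scales, for illustration only."""
    lin_vel: float = 1.0
    ang_vel: float = 0.5
    fall: float = -10.0
    action_rate: float = -0.01
    energy: float = -0.001
    sigma: float = 0.25  # width of the exponential tracking kernel


def tracking(cmd: float, actual: float, sigma: float) -> float:
    """1.0 for perfect tracking, decaying toward 0.0 as the error grows."""
    return math.exp(-((cmd - actual) ** 2) / sigma)


def reward(cmd_v, cmd_w, v, w, action, prev_action, torques, wheel_vels,
           fallen, wts=RewardWeights()):
    """Dense per-step reward combining the criteria listed above."""
    r = wts.lin_vel * tracking(cmd_v, v, wts.sigma)
    r += wts.ang_vel * tracking(cmd_w, w, wts.sigma)
    # Penalize fast changes between consecutive actions (squared action rate).
    r += wts.action_rate * sum((a - p) ** 2 for a, p in zip(action, prev_action))
    # Penalize mechanical motor power |torque * speed| as an energy proxy.
    r += wts.energy * sum(abs(t * q) for t, q in zip(torques, wheel_vels))
    # Large penalty on the step at which the robot falls over.
    if fallen:
        r += wts.fall
    return r


def command_limits(iteration, ramp_iterations=1000, max_lin=1.0, max_ang=1.0):
    """Linear curriculum: command range grows from 0 (pure balancing) to its maximum."""
    progress = min(max(iteration / ramp_iterations, 0.0), 1.0)
    return progress * max_lin, progress * max_ang


def sample_command(iteration, rng):
    """Samples a (v, omega) command uniformly within the current curriculum limits."""
    lin_lim, ang_lim = command_limits(iteration)
    return rng.uniform(-lin_lim, lin_lim), rng.uniform(-ang_lim, ang_lim)
```

With perfect tracking, no change in actions, and zero motor power, this sketch returns 1.5, the sum of both tracking weights. At iteration 0, every sampled command is zero, so early training episodes reduce to pure balancing.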

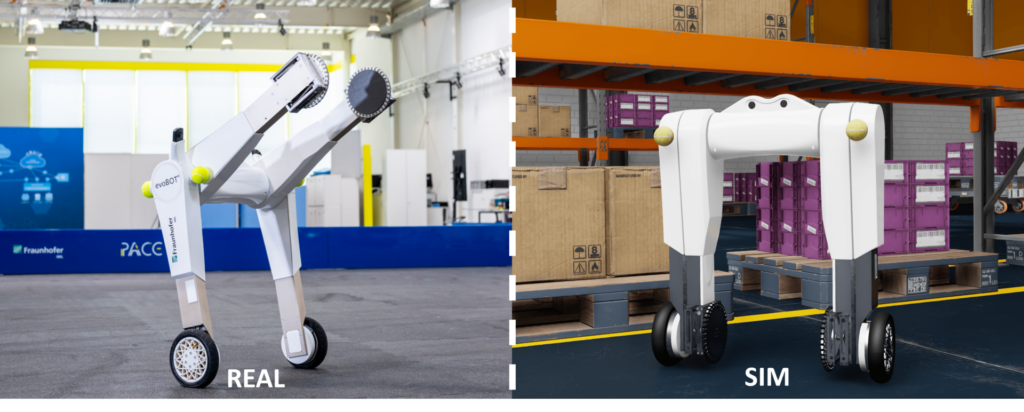

- Perfect Simulator: To reduce the Sim-to-Real Gap, we build a physically optimized simulation model of the evoBOT robot (see Fig. 1). To this end, masses and inertia tensors are recomputed from an accurate CAD model containing more than 300 separate parts with corresponding physical properties. Moreover, physical joint limits are incorporated into the simulation model based on measurements on the real robot.

- Domain Randomization: To account for remaining uncertainties in the simulation model, we randomize the simulation environments. In particular, this randomization covers the robot dynamics (e.g. link masses and motor parameters), delays in the communication pipeline, and sensor noise estimated from real-world measurements.
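The two simulator-side measures above can be sketched as follows. `composite_inertia` lumps CAD parts into a single rigid link using the parallel-axis theorem, assuming each part's inertia tensor is given about its own center of mass and already expressed in the link's axes. The remaining helpers sample per-episode dynamics parameters, delay actions by a few control steps, and add Gaussian sensor noise. All names and ranges are hypothetical placeholders rather than the values identified for evoBOT.

```python
import random
from collections import deque
from dataclasses import dataclass


def composite_inertia(parts):
    """Lumps parts (mass, com_xyz, 3x3 inertia about own COM, link axes) into one body."""
    total = sum(m for m, _, _ in parts)
    com = [sum(m * p[k] for m, p, _ in parts) / total for k in range(3)]
    inertia = [[0.0] * 3 for _ in range(3)]
    for m, p, i_part in parts:
        d = [p[k] - com[k] for k in range(3)]
        d2 = sum(x * x for x in d)
        for r in range(3):
            for c in range(3):
                # Parallel-axis theorem: I += I_part + m * (|d|^2 * E - d d^T)
                shift = m * ((d2 if r == c else 0.0) - d[r] * d[c])
                inertia[r][c] += i_part[r][c] + shift
    return total, com, inertia


@dataclass(frozen=True)
class RandomizationRanges:
    """Hypothetical randomization ranges, not the ones used for evoBOT."""
    mass_scale: tuple[float, float] = (0.9, 1.1)        # factor on each link mass
    motor_gain_scale: tuple[float, float] = (0.8, 1.2)  # factor on a motor parameter
    delay_steps: tuple[int, int] = (0, 3)               # delay in control steps
    sensor_noise_std: float = 0.01                      # std of additive sensor noise


@dataclass
class EpisodeParams:
    link_masses: list[float]
    motor_gain_scale: float
    delay_steps: int


def sample_episode_params(nominal_masses, ranges, rng):
    """Draws one set of dynamics parameters, e.g. at every episode reset."""
    return EpisodeParams(
        link_masses=[m * rng.uniform(*ranges.mass_scale) for m in nominal_masses],
        motor_gain_scale=rng.uniform(*ranges.motor_gain_scale),
        delay_steps=rng.randint(*ranges.delay_steps),
    )


class DelayedActuation:
    """Applies each action `delay` control steps late to mimic pipeline latency."""

    def __init__(self, delay, initial_action):
        self.buffer = deque([initial_action] * (delay + 1), maxlen=delay + 1)

    def step(self, action):
        self.buffer.append(action)
        return self.buffer[0]


def add_sensor_noise(observation, std, rng):
    """Adds zero-mean Gaussian noise to every observation entry."""
    return [x + rng.gauss(0.0, std) for x in observation]
```

Resampling these parameters at every episode reset exposes each of the parallel robot instances to a slightly different plant. This is intended to make the learned policy robust to the residual mismatch between simulation and the real robot.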

© Fraunhofer IML

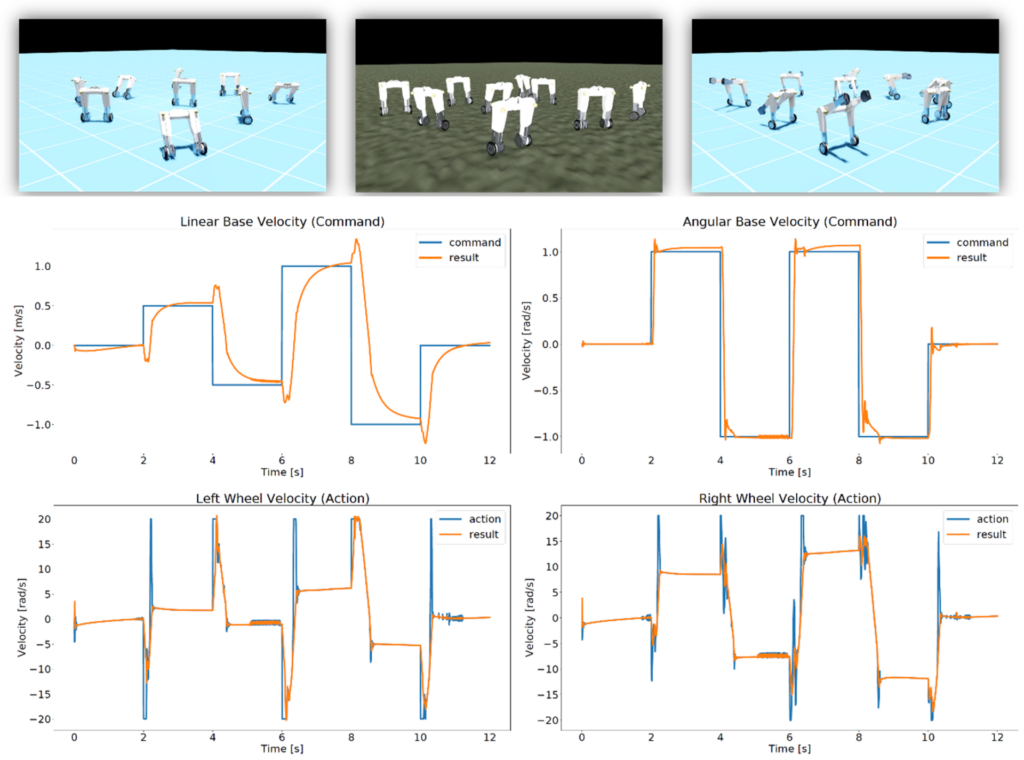

Experimental Results – Performance of Dynamic Locomotion

We train a single control policy for both robust balancing and dynamic locomotion of the evoBOT at speeds of up to 1 m/s. Fig. 3 shows the resulting tracking performance of a trained policy for combined balancing and dynamic locomotion. The experiment consists of several phases with increasing levels of dynamics. In Phase I (0-2 s), the policy has to balance the robot from a difficult initial pitch angle of up to 30 degrees. Phase II (2-6 s) consists of alternating linear velocity commands of ±0.5 m/s combined with requested turn rates of up to ±1 rad/s. In Phase III (6-10 s), both linear and angular velocity commands change instantaneously before the robot returns to dynamic balancing. The results indicate that the policy responds to the given velocity targets in a highly dynamic manner while operating at the physical limits of the system. On the one hand, to realize such rapid changes in linear velocity, the robot first has to accelerate in the opposite direction to create the necessary tilting moment. On the other hand, the policy learns to exploit the limits imposed by the motor dynamics, reaching both the maximum speed and the acceleration limits.
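Per-phase tracking quality in an experiment like this can be summarized with a root-mean-square error. The sketch below is an assumption about how such an evaluation could be scripted, not the evaluation code behind Fig. 3. It takes time-stamped commanded and measured values for one velocity channel (linear or angular) and applies the phase boundaries described above. The function name `phase_rmse` is hypothetical.

```python
import math

# Phase boundaries in seconds, as described for the experiment above.
PHASES = {"I": (0.0, 2.0), "II": (2.0, 6.0), "III": (6.0, 10.0)}


def phase_rmse(times, commanded, measured):
    """Root-mean-square tracking error of one velocity channel per experiment phase."""
    result = {}
    for name, (start, end) in PHASES.items():
        errors = [c - m for t, c, m in zip(times, commanded, measured) if start <= t < end]
        if errors:
            result[name] = math.sqrt(sum(e * e for e in errors) / len(errors))
        else:
            result[name] = float("nan")  # no samples logged in this phase
    return result
```

Evaluating the linear and angular channels separately keeps the two error measures in their own units (m/s and rad/s).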


Summary

This blog post covered the application of Guided RL to the task of learning dynamic locomotion for evoBOT, a novel robot platform for research on highly dynamic locomotion. In particular, we reviewed the selected Guided RL methods for this use case and discussed the overall performance of the learning-based controller.

If you are interested in the robot platform, you can check out the evoBOT simulation model (available as open source) or contact our development team directly. For more details on the methodological approach and a comprehensive sim-to-real benchmark, see the related publication, which was presented at the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS):

P. Klokowski et al., “evoBOT – Design and Learning-Based Control of a Two-Wheeled Compound Inverted Pendulum Robot,” 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 2023, pp. 10425-10432, doi: 10.1109/IROS55552.2023.10342128.