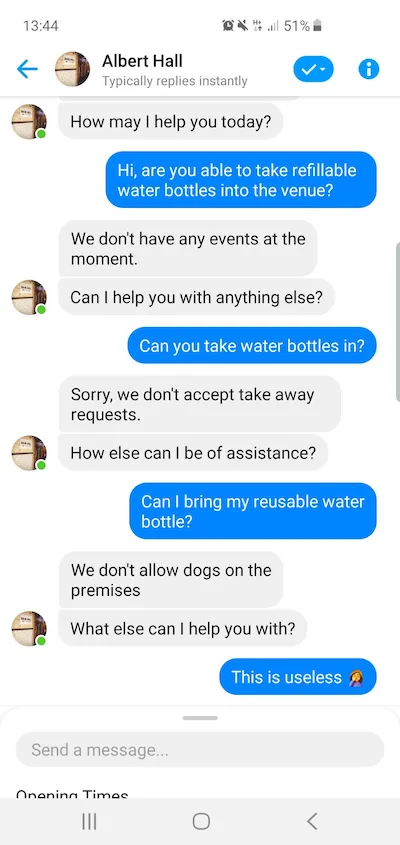

Our previous article briefly introduced chatbots and their automatic evaluation using language models like GPT2, BERT, and XLNet. There, we discussed the importance of open-domain dialogue systems and showed that the probabilities inferred by a language model (LM) correlate with human evaluation scores, which makes them suitable for estimating dialogue quality.

As discussed earlier, we judge a conversation along various criteria. For example, a response can be perfectly fluent but completely incoherent, i.e., unrelated to the preceding context. LM-based evaluation is practical since it does not need supervision. However, it has one major downside: it produces a single score for the overall quality and gives no insight into individual criteria like fluency or coherence of the text.

Fear not, we have a solution to this challenge! However, before we get to it, we need to cover some groundwork. Benchmarks show up almost everywhere in AI and ML, regardless of the subfield. In natural language processing, one popular benchmark drove a lot of the research and development of language models like BERT, XLNet, and many others: the General Language Understanding Evaluation (GLUE) benchmark. It provides resources for training, evaluating, and analyzing natural language understanding systems, and it contains nine tasks that aim to assess a system’s language understanding abilities. The most famous example is sentiment analysis: systems have to flag sentences as positive or negative. Another example is duplicate question detection: systems have to decide whether two questions are semantically equivalent.

How does GLUE help us evaluate dialogue quality? By now, you have probably guessed that a machine learning model performing well on the Corpus of Linguistic Acceptability (CoLA) can infer the fluency of a sentence. Similarly, we use sentence-pair tasks like Recognizing Textual Entailment (RTE) or the Semantic Textual Similarity Benchmark (STSB) to check whether a response is coherent with its preceding dialogue context. All of the sentence-pair tasks look for semantic relations that can model dialogues as well. We do not use Winograd-NLI (WNLI) and Multi-Genre Natural Language Inference (MNLI) since they cannot be easily matched to the problem of dialogue evaluation.

First, we need models that have been trained on the GLUE tasks. We took a shortcut by re-using fine-tuned BERT instances that are part of the TextAttack framework and available on the HuggingFace ModelHub. We use BERT because it is an established approach. However, we think one should be able to use any neural architecture. To validate the idea, we ran experiments on data that involve knowledge-based conversations evaluated by humans. The assessment was based on six criteria intended to capture how (1) understandable, (2) natural, (3) contextually relevant, (4) interesting, (5) knowledge-based, and (6) overall high-quality the text was. Subsequently, we compared the probabilities predicted by the GLUE models with the human annotations.
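As a rough illustration of this step, the sketch below scores a response with a fine-tuned checkpoint from the Hub. The model ids follow the TextAttack naming pattern but are assumptions here, as are the helper names `softmax` and `proxy_score` and the choice of `positive_index=1`; label order can differ between checkpoints, so it should be checked on the model card. This is not the exact pipeline from the paper.

```python
import math

# Hub ids are assumptions based on the TextAttack naming pattern;
# verify them (and their label order) on the model cards.
PROXY_MODELS = {
    "cola": "textattack/bert-base-uncased-CoLA",
    "mrpc": "textattack/bert-base-uncased-MRPC",
}


def softmax(logits):
    """Turn raw classifier logits into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def proxy_score(task, response, context=None, positive_index=1):
    """Probability of the 'positive' class for one response.

    Single-sentence tasks (e.g. CoLA) receive only the response;
    sentence-pair tasks receive (context, response).
    positive_index=1 is an assumption about the checkpoint's label order.
    """
    # Imported lazily so that softmax() works without these packages.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    name = PROXY_MODELS[task]
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForSequenceClassification.from_pretrained(name)
    model.eval()

    if context is None:
        inputs = tokenizer(response, return_tensors="pt", truncation=True)
    else:
        inputs = tokenizer(context, response, return_tensors="pt", truncation=True)

    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()
    return softmax(logits)[positive_index]
```

Calling `proxy_score("cola", "I am fine, thanks.")` would download the checkpoint on first use and return a fluency-like probability. Regression-style tasks such as STSB typically expose a single output instead of class logits, so they would need the raw value rather than a softmax.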

As expected, we obtain Pearson’s and Spearman’s correlation coefficients of up to 0.7. For example, for the criterion knowledge-based, STSB reaches correlations of 0.7329 and 0.7173, respectively. With STSB, we also obtain correlations of 0.3620 and 0.3463 for the criterion contextually relevant. But this is not all!
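To make the comparison concrete, here is a minimal sketch of how both coefficients can be computed for a list of proxy scores and the matching human ratings. The function names and the example numbers are made up for illustration and are not taken from our experiments; in practice a library such as SciPy would do the same job.

```python
import math


def pearson(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            result[order[k]] = average_rank
        start = end + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Made-up example: model probabilities vs. human ratings for five responses.
proxy_scores = [0.10, 0.40, 0.35, 0.80, 0.90]
human_ratings = [1, 2, 3, 4, 5]
print(pearson(proxy_scores, human_ratings))
print(spearman(proxy_scores, human_ratings))
```

Pearson measures how well a straight line describes the relationship, while Spearman only asks whether the two rankings agree, which is why we report both.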

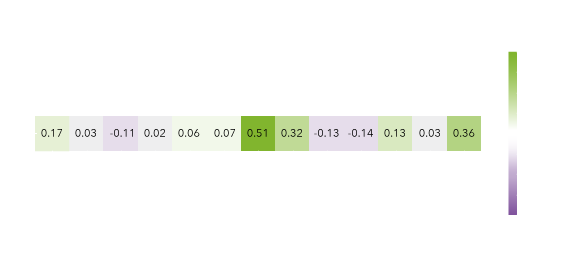

Now we have quality indicators for each of the criteria. It would also be great to have a mixture of them that indicates the overall quality, since the single indicators did not correlate that well with it. We ran a linear regression using the scores of the single GLUE tasks as independent variables and the overall quality criterion as the target. The composite indicator has correlation coefficients of almost 0.5. In contrast, the best single GLUE task reaches only 0.4.
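The idea of the composite indicator can be sketched as an ordinary least-squares fit: each row holds the proxy scores of one response, and the target is the human overall rating. The code below is a small self-contained illustration with made-up numbers and hypothetical helper names (`solve`, `fit_linear`, `predict`); it is not the training code from the paper, and a library such as scikit-learn would normally replace the hand-written solver.

```python
def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def fit_linear(features, targets):
    """Ordinary least squares via the normal equations.

    Returns [intercept, w1, w2, ...], one weight per proxy indicator.
    """
    rows = [[1.0] + list(f) for f in features]  # leading 1.0 is the intercept
    dim = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(dim)] for i in range(dim)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(dim)]
    return solve(xtx, xty)


def predict(weights, feature_row):
    """Composite score for one response."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], feature_row))


# Made-up data: two proxy scores per response and a human overall rating
# generated from the rule 1 + 2*a + 3*b, so the fit can be checked by eye.
features = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
overall = [1.0, 3.0, 4.0, 6.0, 8.0]
weights = fit_linear(features, overall)
print(weights)
```

The learned weights are what tell us how much each GLUE task contributes to the composite score.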

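All these correlation coefficients describe how strong the relationship between the variables is, not how steep it is. In the last example, a value of 0.5 indicates a moderate positive association: dialogues that evaluators rate higher on the Overall criterion tend to receive higher scores from the linear regression as well. Only when both variables are standardized does Pearson’s coefficient translate into a slope: if the evaluator’s score increases by one standard deviation, the model’s score is expected to increase by about half a standard deviation.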

In the figure below, we visualized the learned weights from the linear regression:

Conclusion

We see that many of the tasks have an influence, and some of them are quite strong. Weights with a “fact” prefix belong to scores computed between the conversation’s knowledge base and the target response. Semantic overlap tasks like the Microsoft Research Paraphrase Corpus (MRPC) and STSB play the strongest role. This observation holds both when the tasks are applied to the dialogue context and when they are applied to the knowledge base.

In summary, while the correlation coefficients are decent, there is still room for improvement. In the future, we will look into other benchmarks of this kind that could provide additional improvements.

More in the related publication:

Proxy Indicators for the Quality of Open-domain Dialogues

Rostislav Nedelchev, Jens Lehmann, Ricardo Usbeck, Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 2021

Link to code: https://github.com/SmartDataAnalytics/proxy_indicators

This was a guest post from the SDA Blog.