Large language models can capture the meaning of content such as text and images and generate new text and images themselves. Because of their wide range of applications, these models are also referred to as foundation models. Given how important moving images are in our daily lives, it makes sense to extend these approaches to video, which opens up a variety of use cases. In this post, we show how videos can be generated from text using large language models.

Foundation models can comprehend texts and images

Since the introduction of GPT-3 two years ago, generative AI has made tremendous progress. Large language models are now capable of completing a starting text in a fluid and almost error-free manner.

The starting point is the technique of context-sensitive embeddings introduced in the deep neural network BERT (blog post in German). The meaning of a token is described by a long numerical vector, and depending on the context, different context-sensitive embeddings are generated (more on this in the German blog post: “Die Bedeutung von Worten durch Vektoren erfassen”).

It has been shown that images can also be broken down into a limited number of tokens consisting of small pixel areas, for example, 14×14 pixels. The Vision Transformer (Dosovitskiy et al. 2020) assigns embeddings to these pixel areas and then uses the BERT algorithm to derive context-sensitive embeddings for these tokens. The model can then be fine-tuned, for example, on an image classification task. On the ImageNet test set, the Vision Transformer achieved 88.5% accuracy, surpassing all previous models. This demonstrates that, with extensive training data, transformer models are advantageous for image processing tasks.
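The tokenization step described above can be sketched in a few lines. This is a minimal illustration, not the Vision Transformer implementation: the function name `split_into_patches`, the grayscale list-of-lists image format, and the 224×224 image size are assumptions chosen for the example, and the learned embedding of each patch is omitted.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def split_into_patches(image: Image, patch_size: int = 14) -> List[List[float]]:
    """Cut an image into non-overlapping square patches and flatten each one."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be a multiple of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten the patch row by row into one vector of pixel values.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Hypothetical 224x224 image: (224 / 14) ** 2 = 256 patch tokens of 196 pixels each.
image = [[float((r + c) % 256) for c in range(224)] for r in range(224)]
patches = split_into_patches(image)
```

Each flattened patch plays the role that a word token plays in a text model; a learned projection would then map it to an embedding vector.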

Videos can also be represented by tokens

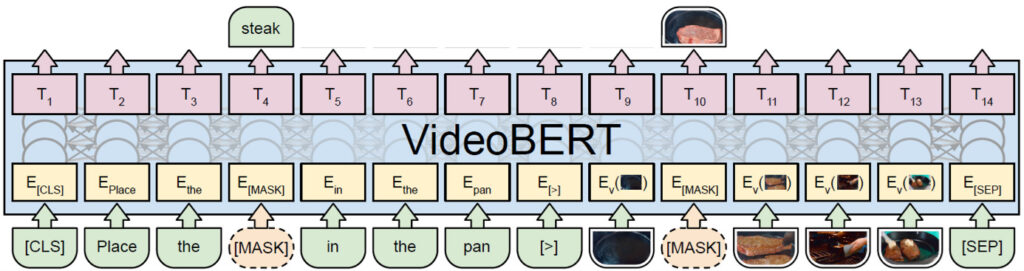

Videos consist not of a single image but of many images in a temporal sequence, so a large amount of data has to be processed. VideoBERT (Sun et al. 2019) applies the BERT model to a joint representation of text and video images through tokens. The video is divided into clips of 30 images (1.5 seconds each), from which a temporal convolutional network generates embedding vectors of length 1,024. The clip embeddings are grouped into 20,736 clusters through k-means clustering, and each cluster is represented by a “video token.” The spoken language in the video is transcribed into text by speech recognition and divided into sentences. The text is then converted into tokens using a tokenization algorithm based on a vocabulary of 30,000 tokens. The video tokens that fall within the time span of a sentence are collected in a video token sequence. As shown in Fig. 1, the video tokens are appended to the respective text tokens and separated by special tokens.

Figure 1: VideoBERT extends text tokens with video tokens and predicts masked tokens in pretraining. This way, the relationship between text and image/video information is learned.
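The two steps described above, mapping a clip embedding to its nearest cluster and appending the resulting video tokens to the text tokens, can be sketched as follows. This is a toy illustration under stated assumptions: the helper names, the `vid_` token prefix, and the special token strings `[CLS]`, `[>]`, and `[SEP]` are chosen for the example, and the data uses 3 centroids of dimension 2 instead of 20,736 centroids of dimension 1,024.

```python
from typing import List, Sequence


def nearest_centroid(embedding: Sequence[float],
                     centroids: Sequence[Sequence[float]]) -> int:
    """Return the index of the centroid closest to the embedding."""
    def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(centroids)),
               key=lambda i: squared_distance(embedding, centroids[i]))


def build_joint_sequence(text_tokens: List[str],
                         video_token_ids: List[int]) -> List[str]:
    """Append video tokens to text tokens, separated by special tokens.

    The special token names are assumptions made for this sketch.
    """
    video_tokens = [f"vid_{i}" for i in video_token_ids]
    return ["[CLS]"] + text_tokens + ["[>]"] + video_tokens + ["[SEP]"]


# Toy cluster centres, as k-means would produce them.
centroids = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
# One embedding per video clip that overlaps the sentence.
clip_embeddings = [[0.9, 1.2], [4.8, 5.1], [0.1, -0.2]]

video_token_ids = [nearest_centroid(e, centroids) for e in clip_embeddings]
sequence = build_joint_sequence(["cut", "the", "onion"], video_token_ids)
```

During pretraining, some positions of such a sequence would be replaced by `[MASK]`, and the model would be trained to predict the original token from the remaining text and video tokens.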

The model was pretrained with a video dataset of 312,000 cooking videos with a total duration of 966 days. Given a video and a text consisting of many [MASK] tokens, VideoBERT fills in the masked positions and thus describes the video in text. The quality of this video captioning is better than in previous approaches.

Meanwhile, there are various systems for processing videos along with other media. NÜWA (Wu et al. 2022) uses a joint token representation for text, images, and videos and, in pretraining, learns the connection between tokens of different media. After appropriate fine-tuning, it can perform eight different tasks (see Fig. 2), including generating a video from text, where it outperformed previous models. However, the resolution of the videos is still low.

Figure 2: The NÜWA model can perform eight different tasks.

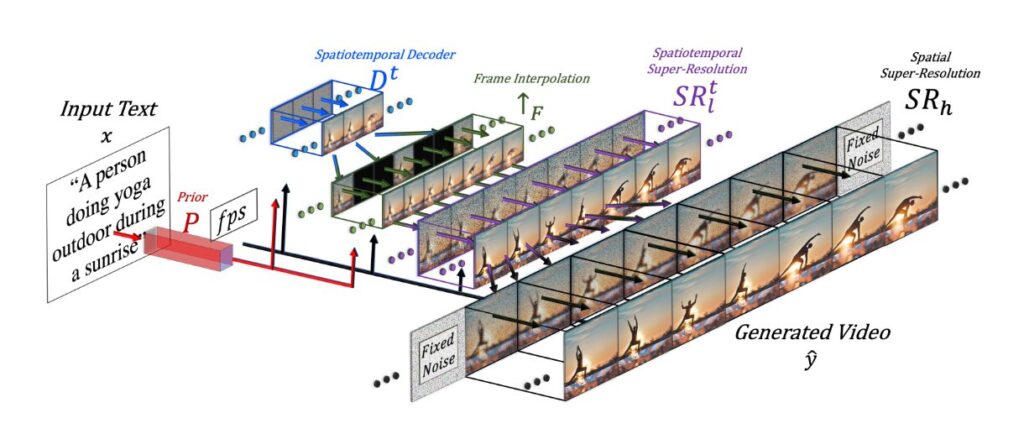

Make-a-Video generates high-resolution videos from textual descriptions

In the past year, models have been developed that can generate high-resolution videos from text. Make-a-Video (Singer et al. 2022) builds on text-image models and extends them with novel spatial-temporal modules. This accelerates the training of the text-video model, because visual and multimodal representations do not have to be learned from scratch, and it does not require paired text-video data. The generated videos inherit the characteristics of the text-image model and its knowledge of diverse image types.

But how does it work exactly? A text-to-image base model generates images for texts and is trained on text-image pairs. A prior network [latex]P[/latex] generates image embeddings for the given text tokens (see Fig. 3). From these, a spatial-temporal decoder network [latex]D^t[/latex] produces a series of 16 video frames with a resolution of 64×64 pixels. A frame interpolation network [latex]F[/latex] then increases the temporal resolution by inserting intermediate frames. Subsequently, a spatial-temporal super-resolution network [latex]SR_{l}[/latex] increases the resolution of the frames to 256×256 pixels. Finally, the super-resolution network [latex]SR_{h}[/latex] generates the output video in a final resolution of 768×768 pixels (see Fig. 3).

Figure 3: Architecture of the Make-A-Video model. This website shows a series of generated example videos with a maximum length of 5 seconds.
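To make the data flow through the stages concrete, the following sketch traces the shape of the video after each step. It only does bookkeeping on shapes and contains no neural network. The text does not state how many frames the interpolation produces, so `interpolation_factor` is a hypothetical parameter, and the rule that interpolation fills the gaps between neighbouring frames is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class VideoShape:
    frames: int
    height: int
    width: int


def trace_pipeline(interpolation_factor: int) -> List[Tuple[str, VideoShape]]:
    """List the video shape after each stage named in the text."""
    decoded = VideoShape(frames=16, height=64, width=64)  # decoder D^t
    # Assumption: each of the 15 gaps between neighbouring frames is
    # subdivided into `interpolation_factor` steps.
    frames = (decoded.frames - 1) * interpolation_factor + 1
    interpolated = VideoShape(frames, 64, 64)             # interpolation F
    low_sr = VideoShape(frames, 256, 256)                 # SR_l
    high_sr = VideoShape(frames, 768, 768)                # SR_h
    return [
        ("decoder", decoded),
        ("interpolation", interpolated),
        ("super-resolution low", low_sr),
        ("super-resolution high", high_sr),
    ]


stages = trace_pipeline(interpolation_factor=2)
for name, shape in stages:
    print(f"{name}: {shape.frames} frames at {shape.width}x{shape.height}")
```

The trace shows that the two super-resolution steps scale each side by factors of 4 (64 to 256) and 3 (256 to 768), so the final frames contain 144 times as many pixels as the decoder output.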

Imagen-Video uses diffusion models to increase resolution

Imagen-Video (Ho et al. 2022) is a similar model for generating high-resolution videos. It encodes the input text with a T5 text encoder and, from this encoding, generates a series of 16 video frames with a resolution of 40×24 pixels. Subsequently, a series of 6 models gradually increases the temporal and spatial resolution to 128 video frames with 1280×768 pixels.
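The overall upsampling amounts to a factor of 8 in time (16 to 128 frames) and a factor of 32 along each spatial axis (40×24 to 1280×768). The sketch below shows one way six stages could combine to these factors. Only the start shape, the end shape, and the number of stages come from the text; the order and the individual factors in `ASSUMED_STAGES` are assumptions made for illustration.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (frames, first spatial size, second spatial size)

# Assumed schedule: "time" multiplies the frame count,
# "space" multiplies both spatial sizes.
ASSUMED_STAGES: List[Tuple[str, int]] = [
    ("space", 2), ("time", 2), ("space", 4),
    ("time", 2), ("time", 2), ("space", 4),
]


def apply_cascade(start: Shape, stages: List[Tuple[str, int]]) -> List[Shape]:
    """Return the shape before the first stage and after every stage."""
    shapes = [start]
    for kind, factor in stages:
        frames, size_a, size_b = shapes[-1]
        if kind == "time":
            shapes.append((frames * factor, size_a, size_b))
        elif kind == "space":
            shapes.append((frames, size_a * factor, size_b * factor))
        else:
            raise ValueError(f"unknown stage kind: {kind}")
    return shapes


shapes = apply_cascade((16, 40, 24), ASSUMED_STAGES)
for frames, size_a, size_b in shapes:
    print(f"{frames} frames at {size_a}x{size_b}")
```

Splitting the work across several stages means that each model only has to bridge a small step in resolution, which is the motivation for using a cascade.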

Diffusion models are used to increase the resolution. A diffusion model describes the process of systematic and slow destruction of pixel images by gradually changing the pixel values through independent small perturbations. This results in a series of images [latex]x^{[0]}, … , x^{[T]}[/latex] whose final image [latex]x^{[T]}[/latex] approximately follows a normal distribution. This process can now be reversed, i.e., step by step, a less noisy image [latex]x^{[t-1]}[/latex] can be generated from a noisy image [latex]x^{[t]}[/latex]. The associated diffusion model can be learned by reconstructing gradually perturbed images. It turns out that with this technique, small blurry images can be expanded with high reliability into larger, detailed images. This approach is successfully used in many current text-image models, such as DALL-E, Stable Diffusion, and Imagen.
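The destruction process can be simulated directly. The following sketch uses a common Gaussian formulation in which each step slightly shrinks the pixel values and adds independent noise; the constant noise level `betas`, the number of steps, and the toy "image" of 1,000 identical pixels are assumptions chosen for the example. The learned reverse process, which requires a trained neural network, is not shown.

```python
import math
import random
from typing import List


def forward_diffusion(x0: List[float], betas: List[float],
                      rng: random.Random) -> List[List[float]]:
    """Return the series x^[0], ..., x^[T] of increasingly noisy images."""
    trajectory = [list(x0)]
    for beta in betas:
        previous = trajectory[-1]
        # Shrink the signal slightly and add independent Gaussian noise
        # with variance beta to every pixel.
        current = [
            math.sqrt(1.0 - beta) * value + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for value in previous
        ]
        trajectory.append(current)
    return trajectory


def signal_fraction(betas: List[float]) -> float:
    """Factor by which the original pixel values are scaled in x^[T]."""
    return math.sqrt(math.prod(1.0 - beta for beta in betas))


rng = random.Random(0)
x0 = [1.0] * 1000      # toy image: 1,000 pixels, all with value 1
betas = [0.02] * 500   # hypothetical constant noise schedule, T = 500

trajectory = forward_diffusion(x0, betas, rng)
x_T = trajectory[-1]
mean_T = sum(x_T) / len(x_T)
var_T = sum((value - mean_T) ** 2 for value in x_T) / len(x_T)
print(f"signal left: {signal_fraction(betas):.4f}, mean: {mean_T:.3f}, variance: {var_T:.3f}")
```

After enough steps almost nothing of the original image remains, and the pixel values have a mean close to 0 and a variance close to 1, which is the normal distribution mentioned above. A diffusion model is trained to undo one such step at a time.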

Outlook

The inclusion of images and especially videos allows for connecting the meaning of words and sentences with processes in the real world. Foundation models can thus be considered as autonomous agents capable of establishing the connection between the tokens of a text and the sensory perceptions of the external world. In this sense, they can solve the symbol grounding problem, which has been a concern in Artificial Intelligence for many decades (Bommasani et al. 2021, p.42). This allows such models to acquire additional essential dimensions of the meaning of concepts.

Currently, advanced foundation models for videos are not available in Europe, as the European research landscape lacks the structures and computational capacity to train such large-scale models with billions of parameters. The LEAM Initiative (Large European AI Models) has set the goal of initiating such an infrastructure for foundation models in Germany. It aims to enable the training of, and research on, large language models for the European language area. Generating high-quality videos for given texts has been chosen as one of the most important research goals.

References

On the opportunities and risks of foundation models.

Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., … & Liang, P. (2021). arXiv preprint

An image is worth 16×16 words: Transformers for image recognition at scale.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., … & Houlsby, N. (2020). arXiv preprint

Imagen video: High definition video generation with diffusion models.

Ho, J., Chan, W., Saharia, C., Whang, J., Gao, R., Gritsenko, A., … & Salimans, T. (2022). arXiv preprint

Make-a-video: Text-to-video generation without text-video data.

Singer, U., Polyak, A., Hayes, T., Yin, X., An, J., Zhang, S., … & Taigman, Y. (2022). arXiv preprint

Videobert: A joint model for video and language representation learning.

Sun, C., Myers, A., Vondrick, C., Murphy, K., & Schmid, C. (2019). In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 7464-7473).

Nüwa: Visual synthesis pre-training for neural visual world creation.

Wu, C., Liang, J., Ji, L., Yang, F., Fang, Y., Jiang, D., & Duan, N. (2022). In European Conference on Computer Vision (pp. 720-736). Springer, Cham.