Edge AI refers to the deployment of AI algorithms and models directly on local edge devices. As the number of Internet of Things (IoT) devices continues to increase, so does the demand for efficient data processing. However, optimizing these models for resource-constrained environments remains a challenge. In this blog post, we explore Splitting Stump Forests (SSF), a powerful compression technique for tree ensemble models that enables efficient Edge AI deployment.

How IoT is Transforming Everyday Life

Have you ever wondered how a smart thermostat adjusts the temperature in your house? Or how your smartwatch monitors your physical activity and even analyzes your sleep patterns? Or how a security camera recognizes your face?

These capabilities are all made possible by innovations in the Internet of Things (IoT). This technology relies on sensors that collect data, which is then typically sent to cloud servers, where algorithms process it to predict and respond to your needs. As our surroundings become increasingly “smart”, the number of IoT devices worldwide is estimated to exceed 40 billion by 2030. As this number grows, so does the need for faster, more efficient data processing. This is where Edge AI comes in.

What is Edge AI?

Edge AI is the deployment of AI algorithms and models directly on local edge devices.

Many applications require real-time decisions: smart cameras performing instant threat detection, autonomous driving systems adjusting their path, and industrial sensors triggering immediate anomaly alerts. Sending data to a cloud server and waiting for a response adds latency that such applications often cannot afford.

This need for fast, local decisions is driving the adoption of Edge AI. By processing data locally, Edge AI reduces or even eliminates data transmission, addressing concerns such as connectivity, communication costs, network latency, and privacy. While edge deployment improves responsiveness, achieving high predictive accuracy often requires ensemble models.

Ensemble Models on IoT Devices: What’s the challenge?

Ensemble models often outperform individual classifiers across a wide range of machine learning tasks. A random forest, for instance, reduces the variance of its predictions compared to a single decision tree, which improves overall performance. However, a random forest consists of many trees, and storing and evaluating all of them is costly on resource-constrained devices. Using only a few small trees limits predictive performance, while larger, higher-performing forests often do not fit into the limited flash memory of such devices. This raises the following question:

Can we reduce model size without compromising predictive performance?

To address this, we present Splitting Stump Forests (SSF), a compression scheme designed for tree ensemble models, specifically random forests. In the following section, we will explain the method in depth.

Splitting Stump Forests (SSF)

To achieve efficient compression, our approach (1) extracts a subset of test nodes from a trained random forest to build a lightweight ensemble of splitting stumps that requires only a few kilobytes of storage. Next, (2) the input data is transformed into a multi-hot encoding that records which split conditions each input satisfies, and (3) a linear classifier maps the transformed data to the target domain.

Figure 1 shows the pipeline of the three primary steps described above.

First step: Selecting Informative Splitting Nodes

Splitting Stump Forest’s first step selects a subset of informative splits from a trained random forest.

To achieve this, we propose a selection criterion that favors split conditions leading to balanced subtrees. Specifically, we score each node of every decision tree in the forest based on how evenly its training samples are divided between its left and right branches.
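The post does not spell out the exact scoring formula, so the following is a minimal sketch under an assumption of our own: a node's score is the number of training samples in its smaller branch divided by the number of samples at the tree's root. This rewards splits that send many samples to both sides and penalizes splits that cut off only a few samples. It uses scikit-learn's `tree_` arrays (`children_left`, `children_right`, `n_node_samples`, `feature`, `threshold`); the helper names `balance_score` and `collect_scored_splits` and the breast cancer dataset are illustrative choices, not the authors' code.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier


def balance_score(tree, node):
    """Hypothetical balance score (an assumption, not the paper's exact formula):
    samples in the node's smaller branch divided by samples at the tree's root.
    A perfectly balanced root split scores 0.5; splits that cut off only a few
    samples score close to 0."""
    t = tree.tree_
    n_left = t.n_node_samples[t.children_left[node]]
    n_right = t.n_node_samples[t.children_right[node]]
    return min(n_left, n_right) / t.n_node_samples[0]


def collect_scored_splits(forest):
    """Return (score, feature, threshold) for every internal node in the forest."""
    splits = []
    for est in forest.estimators_:
        t = est.tree_
        for node in range(t.node_count):
            if t.children_left[node] == -1:  # leaf node: no split condition
                continue
            splits.append((balance_score(est, node), int(t.feature[node]), float(t.threshold[node])))
    return splits


X, y = load_breast_cancer(return_X_y=True)
forest = RandomForestClassifier(n_estimators=50, max_depth=6, random_state=0).fit(X, y)
splits = collect_scored_splits(forest)
top_k = sorted(splits, key=lambda s: s[0], reverse=True)[:20]  # the 20 most balanced splits
print(len(splits), top_k[:3])
```

Normalizing by the root count (rather than by the node's own count) is a deliberate choice in this sketch: a 1-vs-1 split deep in a tree is perfectly balanced locally, but it is exactly the kind of small cut-off that the balance criterion is meant to avoid.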

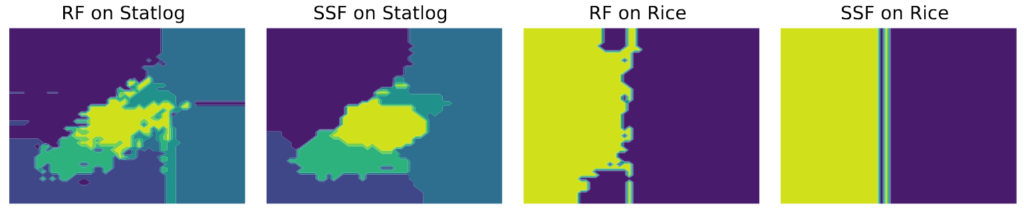

To understand why we select balanced nodes, let’s take a closer look at Figure 2. It shows the decision boundaries of random forests and splitting stump forests on two-dimensional projections of the Statlog and Rice datasets. For the random forests, you might notice small, isolated regions. They arise when a split condition cuts off a relatively small portion of the training data in which all samples share the same label. Such splits can hurt generalization: they lead to overfitting and make the model more sensitive to noise and outliers.

Figure 2: Example decision boundaries of random forests (RF) and splitting stump forests (SSF) on two-dimensional projections of two datasets.

But here is the surprising part: splitting stump forests achieve almost the same accuracy while using only 0.2% of the nodes employed by the random forest.

Impressive, right? Now, let’s see what happens after selecting these balanced nodes.

Second step: Transforming Nodes to Stumps

The previous step yields a set of isolated nodes, each consisting of a feature and a split value, but they do not yet form a structured model. To address this, we attach two leaves to each node, turning it into the root of a one-level decision tree, a so-called splitting stump. Each input can then be mapped to a new binary feature vector with one entry per stump: an entry is ‘1’ if the input satisfies the split condition of that stump’s root node and ‘0’ otherwise. Because several entries can be ‘1’ at the same time, this is a multi-hot encoding.
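The mapping to a multi-hot vector can be sketched in a few lines of NumPy. This is an illustration, not the authors' implementation: it assumes each stump is stored as a (feature index, threshold) pair and that the split condition is `x[feature] <= threshold`, following scikit-learn's convention of sending such samples to the left child. The stumps and inputs below are hypothetical.

```python
import numpy as np


def to_multi_hot(X, features, thresholds):
    """Map each row of X to a binary vector with one entry per stump.
    Entry j is 1 if X[i, features[j]] <= thresholds[j] (the stump's split
    condition, assumed to follow scikit-learn's 'go left if <=' rule), else 0."""
    X = np.asarray(X, dtype=float)
    return (X[:, features] <= thresholds).astype(np.uint8)


# Three hypothetical stumps on a 2-feature input
features = np.array([0, 1, 0])
thresholds = np.array([0.5, 2.0, 3.0])
X = np.array([[0.2, 5.0],
              [4.0, 1.0]])
Z = to_multi_hot(X, features, thresholds)
print(Z)
# [[1 0 1]
#  [0 1 0]]
```

Since every entry is a single bit, a device could also pack these vectors into bit fields; the `uint8` array here simply keeps the example readable.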

Third step: Lightweight Training of Splitting Stump Forests

To enable deployment on devices with limited resources, we use a linear model to combine the individual predictions of the stumps. Using logistic regression, we model the relationship between the transformed feature vectors and the target variable. The result is a model that is not only resource-efficient but also easy to interpret.
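Putting the three steps together, a minimal end-to-end sketch with scikit-learn might look like this. It is not the authors' code: the balance score is the same assumption as in the step-one sketch, and the dataset, forest size, and number of stumps `k` are arbitrary illustrative choices. The printed accuracies depend on these choices and are not results from the paper.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
forest = RandomForestClassifier(n_estimators=100, max_depth=8, random_state=0)
forest.fit(X_train, y_train)

# Step 1: score every internal node (assumed balance score) and keep the top k.
candidates = []
for est in forest.estimators_:
    t = est.tree_
    for node in np.flatnonzero(t.children_left != -1):  # internal nodes only
        n_l = t.n_node_samples[t.children_left[node]]
        n_r = t.n_node_samples[t.children_right[node]]
        candidates.append((min(n_l, n_r) / t.n_node_samples[0], t.feature[node], t.threshold[node]))
candidates.sort(key=lambda c: c[0], reverse=True)
k = 30
features = np.array([c[1] for c in candidates[:k]])
thresholds = np.array([c[2] for c in candidates[:k]])


# Step 2: multi-hot encoding, one binary column per splitting stump (1 = condition satisfied).
def encode(A):
    return (A[:, features] <= thresholds).astype(np.float64)


# Step 3: a logistic regression combines the stump outputs.
ssf_like = LogisticRegression(max_iter=1000).fit(encode(X_train), y_train)

print(f"random forest accuracy: {forest.score(X_test, y_test):.3f}")
print(f"stumps + logistic regression ({k} stumps): {ssf_like.score(encode(X_test), y_test):.3f}")
```

The deployed model only needs `features`, `thresholds`, and the logistic regression's coefficients and intercept; the random forest is used only at training time.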

Exploring Splitting Stump Forest Performance

To evaluate the performance of Splitting Stump Forests (SSF), we ran experiments on 13 benchmark classification datasets, mostly from the UCI repository. Their varying properties allowed us to evaluate SSF across problems of different complexity. We trained random forests using Gini impurity reduction as the splitting criterion, varying both the maximum depth of the individual decision trees and the number of trees.

In terms of predictive performance, SSF outperformed the original random forests and state-of-the-art methods in most runs. Even more impressively, SSF reduced model size by two to three orders of magnitude compared to the other methods, and its inference was faster than that of the best-performing models of competing methods. Furthermore, the results confirm that the selected test nodes are informative rather than accidental.

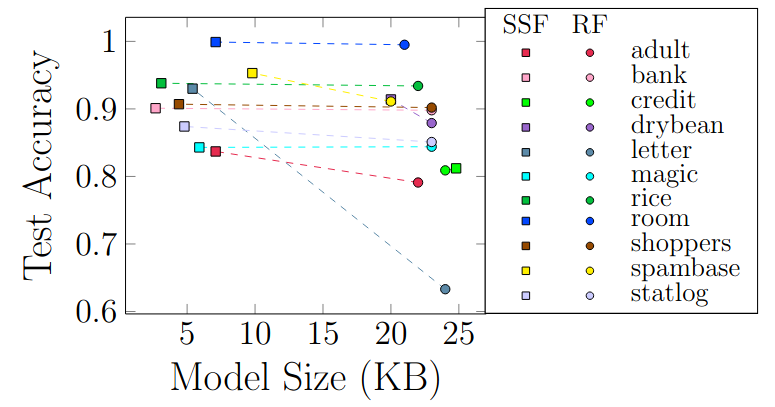

Analyzing Predictive Performance on a Space Budget

Driven by the space constraints of small smart devices, we analyzed the performance of Splitting Stump Forests under a strict space budget. To accommodate devices with limited storage, we selected the best models that fit within just 32 KB or 16 KB of memory, which makes them suitable for deployment on microcontrollers such as the Arduino Uno and the ATmega169P. As shown in Figure 3, SSF models outperformed random forest (RF) models across all datasets, with a remarkable improvement of over 3% on five datasets. This shows that you don’t have to sacrifice performance for efficiency!

Figure 3: The plot shows the best model attained by RF and SSF with a final model size below 32 KB.
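To see why an SSF fits such budgets so easily, it helps to estimate model sizes. The sketch below assumes a hypothetical on-device layout of our own (2-byte feature indices and child pointers, 4-byte float thresholds and weights, 1-byte leaf labels, 1 KB = 1024 bytes); the post does not specify the storage format, and the example model shapes are made up for illustration.

```python
KB = 1024

# Hypothetical on-device layout (an assumption, not the paper's storage format)
FEATURE_INDEX_BYTES = 2   # uint16 feature index
THRESHOLD_BYTES = 4       # float32 split value
WEIGHT_BYTES = 4          # float32 logistic-regression weight or bias
CHILD_INDEX_BYTES = 2     # uint16 index of a child node
LEAF_LABEL_BYTES = 1      # uint8 class label


def ssf_size_bytes(n_stumps, n_classes):
    # One (feature, threshold) pair per stump ...
    stumps = n_stumps * (FEATURE_INDEX_BYTES + THRESHOLD_BYTES)
    # ... plus one weight per stump and one bias for each output of the linear model
    n_outputs = 1 if n_classes == 2 else n_classes
    linear = n_outputs * (n_stumps + 1) * WEIGHT_BYTES
    return stumps + linear


def rf_size_bytes(n_internal, n_leaves):
    # Internal nodes store a feature, a threshold, and two child pointers; leaves store a label
    internal = n_internal * (FEATURE_INDEX_BYTES + THRESHOLD_BYTES + 2 * CHILD_INDEX_BYTES)
    return internal + n_leaves * LEAF_LABEL_BYTES


def fits(size_bytes, budget_kb):
    return size_bytes <= budget_kb * KB


ssf = ssf_size_bytes(n_stumps=200, n_classes=2)
# 100 complete binary trees of depth 8: 255 internal nodes and 256 leaves each
rf = rf_size_bytes(n_internal=100 * 255, n_leaves=100 * 256)
print(ssf, rf)                        # 2004 280600
print(fits(ssf, 16), fits(rf, 32))    # True False
print(f"size ratio SSF/RF: {ssf / rf:.4f}")
```

Under these assumptions, even a few hundred stumps stay far below 16 KB, whereas a moderately sized forest overshoots a 32 KB flash budget by a wide margin.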

As a complementary experiment, we pushed the limits of compression by allowing a slight drop in accuracy: we searched for the smallest SSF model whose accuracy stays within 2% of the random forest’s. The results were impressive: Splitting Stump Forests achieved compression values of 0.012, 0.02, 0.017, 0.025, 0.037, and 0.005 on the Spambase, Shoppers, Adult, Room, Rice, and Bank datasets, respectively.
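The selection rule behind this experiment can be sketched as follows. Two points are assumptions on our part: the 2% margin is treated as an absolute difference in accuracy (0.02), and the compression value is read as the SSF model size divided by the random forest size, so lower means stronger compression. The candidate sizes and accuracies are made-up numbers for illustration only, not results from the paper.

```python
def smallest_within_margin(candidates, rf_accuracy, margin=0.02):
    """candidates: list of (size_bytes, accuracy) for trained SSF variants.
    Returns the smallest candidate whose accuracy is at least rf_accuracy - margin,
    or None if no candidate qualifies. The margin is treated as absolute (assumption)."""
    eligible = [c for c in candidates if c[1] >= rf_accuracy - margin]
    return min(eligible, key=lambda c: c[0]) if eligible else None


# Made-up illustration values, not results from the paper
rf_size, rf_acc = 500_000, 0.91
ssf_candidates = [(1_500, 0.86), (4_800, 0.895), (12_000, 0.905), (30_000, 0.912)]

best = smallest_within_margin(ssf_candidates, rf_acc)
compression = best[0] / rf_size  # assumed definition: SSF size relative to RF size
print(best, compression)  # (4800, 0.895) 0.0096
```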

Are Selected Nodes Truly Informative?

To validate the informativeness of the selected nodes, we compared their predictive performance with that of a random sample of nodes of the same size. For a given dataset, we recorded the accuracy and the number $n$ of high-scoring nodes. We then randomly sampled $n$ nodes from the entire set of nodes, transformed them into splitting stumps, and trained a linear model on their data representation.

We found that score-based stumps outperformed their randomly chosen counterparts on most datasets. Furthermore, these high-scoring stumps also outperformed an equally sized set of lower-scoring nodes, confirming that nodes with higher splitting power provide significantly more useful information.
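The comparison can be reproduced in spirit with the sketch below, which trains the same stump-plus-logistic-regression model on the top-$n$ scored nodes, on $n$ randomly sampled nodes, and on the $n$ lowest-scoring nodes. As before, the balance score, the dataset, and the hypothetical helper `stump_accuracy` are our own illustrative assumptions, and a single run on one dataset says nothing general; the outcome can differ from the paper's findings.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)
forest = RandomForestClassifier(n_estimators=100, max_depth=8, random_state=0).fit(X_tr, y_tr)

# All internal nodes as (score, feature, threshold), using the assumed balance score
nodes = []
for est in forest.estimators_:
    t = est.tree_
    for v in np.flatnonzero(t.children_left != -1):
        n_l = t.n_node_samples[t.children_left[v]]
        n_r = t.n_node_samples[t.children_right[v]]
        nodes.append((min(n_l, n_r) / t.n_node_samples[0], t.feature[v], t.threshold[v]))


def stump_accuracy(selected):
    """Hypothetical helper: turn nodes into stumps, encode multi-hot, fit and score a linear model."""
    feats = np.array([s[1] for s in selected])
    thrs = np.array([s[2] for s in selected])

    def encode(A):
        return (A[:, feats] <= thrs).astype(np.float64)

    clf = LogisticRegression(max_iter=1000).fit(encode(X_tr), y_tr)
    return clf.score(encode(X_te), y_te)


n = 20
ranked = sorted(nodes, key=lambda s: s[0], reverse=True)
rng = np.random.default_rng(0)
random_pick = [nodes[i] for i in rng.choice(len(nodes), size=n, replace=False)]

acc_top = stump_accuracy(ranked[:n])
acc_random = stump_accuracy(random_pick)
acc_low = stump_accuracy(ranked[-n:])
print(f"top-{n}: {acc_top:.3f}  random-{n}: {acc_random:.3f}  lowest-{n}: {acc_low:.3f}")
```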

Final Thoughts

To sum up, Splitting Stump Forests (SSF) compress a large random forest into a compact model with little or no loss in accuracy, making them well suited for resource-constrained edge devices.

These results also open interesting questions for future research on practical deployment strategies for edge devices. As machine learning models are increasingly integrated into edge devices, such strategies will be crucial to realize the full benefits of model compression in real-world applications.

Do you want the full story? You can read the full paper:

Alkhoury, F., Welke, P. (2025). Splitting Stump Forests: Tree Ensemble Compression for Edge Devices. In: Pedreschi, D., Monreale, A., Guidotti, R., Pellungrini, R., Naretto, F. (eds) Discovery Science. DS 2024. Lecture Notes in Computer Science (LNAI), vol 15244. Springer, Cham. https://doi.org/10.1007/978-3-031-78980-9_1