Every year, several zettabytes of data are generated. However, these raw data are often unusable for Machine Learning algorithms. The challenge in Machine Learning is no longer just obtaining access to data but quickly acquiring labeled data of high quality. Let’s take the example of object recognition using CAPTCHA – a test to determine whether a human or a computer is operating a program: When users are asked to select images containing traffic lights, the label “traffic light” or “no traffic light” is assigned to the images they click or don’t click.

Through the process of labeling, labeled data is created, allowing a Machine Learning algorithm to build a statistical model to classify previously unseen images. In this case, the classes “traffic light” and “no traffic light” are assigned. See the blog post “What are the types of Machine Learning?” for more information on classification.

Challenge: Some labels can only be assigned by experts

In the context of object recognition, we may also want to recognize stop signs, children, or potholes, so the set of possible labels depends on the task. Depending on the type of classification task, the labeling process can also become complicated. Basic knowledge is sufficient to identify a traffic light. Classification tasks that require complex background knowledge are more challenging, for example determining from an ultrasound image whether a tumor is present. This task requires expert knowledge, and the labeling process is complicated because only a few individuals are qualified to label. Since large amounts of data are typically required, labeling is correspondingly tedious and time-consuming.

Active learning relieves annotators

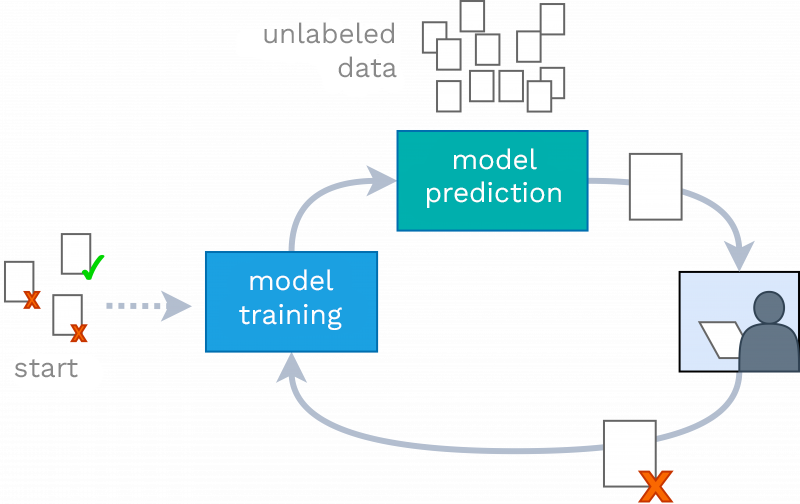

One way to reduce the effort is to use active learning, a subcategory of supervised learning. In active learning, the learning algorithm selectively chooses which data points from a set of unlabeled data points should be labeled next. Labeling can be done by a human or, for evaluation purposes, by the algorithm itself. Which data points are chosen depends on a selection strategy. A commonly chosen selection strategy is uncertainty sampling, which selects the data point with the lowest confidence, that is, the data point whose class the algorithm is least certain about. The basic idea is that a model trained on fewer, well-chosen data points achieves the same or higher classification accuracy (e.g., 90% correctly recognized) as a model trained on all data points. A stopping criterion defines when the learning process ends.
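To make uncertainty sampling concrete, here is a minimal sketch of the "lowest confidence" rule. The function name `least_confidence_index` and the probability values are made up for illustration; in practice the probabilities would come from whatever model is being trained.

```python
def least_confidence_index(probabilities):
    """Return the index of the data point whose most likely class
    has the lowest predicted probability (least-confidence sampling)."""
    confidences = [max(p) for p in probabilities]
    return min(range(len(confidences)), key=confidences.__getitem__)


# Hypothetical model outputs per image:
# [P("no traffic light"), P("traffic light")]
predicted = [
    [0.95, 0.05],  # confident: no traffic light
    [0.52, 0.48],  # nearly undecided
    [0.10, 0.90],  # confident: traffic light
]

# The second image (index 1) is the one the model is least sure about,
# so it would be shown to the annotator next.
query_index = least_confidence_index(predicted)
```

Other uncertainty measures exist (for example, the margin between the two most likely classes); least confidence is simply the one described in the text.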

The process in detail

Below, we describe the process of active learning using object recognition and uncertainty sampling as the selection strategy. At the beginning, we have a set of image data and a person who is supposed to label them.

1. A small part of the data is labeled to ensure that we have examples of images with traffic lights and images without traffic lights.

2. The model is trained.

3. The model predicts the class of all unlabeled images.

4. The image with the lowest confidence value, i.e., the greatest uncertainty, is selected and shown to the person for labeling.

5. The person indicates whether a traffic light is visible in the image or not, and the information is stored.

6. If the stopping criterion is not reached, the process returns to step 2 (model training).
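The steps above can be sketched as a small loop. This is a toy illustration under strong assumptions, not a production setup: each "image" is reduced to a single made-up feature value between 0 and 1, the model is a simple nearest-centroid classifier, the human annotator is simulated by a hypothetical `oracle` function, and the stopping criterion is a fixed labeling budget.

```python
import math
import random


def train(labeled):
    """Toy model: the mean feature value (centroid) of each class."""
    centroids = {}
    for cls in (0, 1):
        values = [x for x, y in labeled if y == cls]
        centroids[cls] = sum(values) / len(values)
    return centroids


def predict_proba(model, x):
    """P(class 1), from a softmax over negative distances to the centroids."""
    d0, d1 = abs(x - model[0]), abs(x - model[1])
    return 1.0 / (1.0 + math.exp(d1 - d0))


def oracle(x):
    """Stand-in for the human annotator (assumption: the true rule is x > 0.5)."""
    return int(x > 0.5)


rng = random.Random(0)
pool = [rng.random() for _ in range(200)]  # unlabeled "images"

# Step 1: a small seed set with at least one example of each class.
labeled = [(0.1, 0), (0.9, 1)]
budget = 20  # stopping criterion: number of labels we can afford

for _ in range(budget):  # step 6: repeat until the budget is used up
    model = train(labeled)  # step 2: train the model
    # Step 3: predict every unlabeled point; confidence = top class probability.
    confidences = [max(p, 1 - p) for p in (predict_proba(model, x) for x in pool)]
    # Step 4: pick the point with the lowest confidence.
    idx = min(range(len(pool)), key=confidences.__getitem__)
    x = pool.pop(idx)
    # Step 5: ask the annotator and store the answer.
    labeled.append((x, oracle(x)))

# Train once more on everything labeled so far and check the rest of the pool.
model = train(labeled)
accuracy = sum(int(predict_proba(model, x) > 0.5) == oracle(x) for x in pool) / len(pool)
```

With a real image model, only `train`, `predict_proba`, and `oracle` would change; the structure of the loop stays the same.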

It is also possible to present several images to the person at once in step 4. The advantage is that the model does not have to be retrained after every single image, which can take a lot of time depending on model complexity. As a rule of thumb, a batch of about ten data points is an amount a person can still process comfortably; a Google search results page, for example, is limited to ten links. How to define the stopping criterion depends heavily on the application scenario and on the available resources.
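Both ideas, batch selection and a stopping criterion, can be sketched in a few lines. The function names, the default budget of 500 labels, and the plateau thresholds below are illustrative assumptions rather than recommended values.

```python
def select_batch(probabilities, batch_size=10):
    """Return the indices of the `batch_size` least confident predictions,
    most uncertain first."""
    confidences = [max(p) for p in probabilities]
    ranked = sorted(range(len(confidences)), key=confidences.__getitem__)
    return ranked[:batch_size]


def should_stop(num_labeled, accuracy_history, budget=500, patience=3, min_gain=0.005):
    """Stop when the labeling budget is used up, or when validation accuracy
    has improved by less than `min_gain` over the last `patience` rounds."""
    if num_labeled >= budget:
        return True
    if len(accuracy_history) > patience:
        recent_gain = accuracy_history[-1] - accuracy_history[-1 - patience]
        return recent_gain < min_gain
    return False


# Hypothetical class probabilities for four unlabeled images.
example = [[0.90, 0.10], [0.55, 0.45], [0.60, 0.40], [0.99, 0.01]]
batch = select_batch(example, batch_size=2)  # the two most uncertain images
```

A budget-based rule reflects limited annotator time, while the plateau rule reflects diminishing returns; which one fits depends on the application scenario.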

Application scenario: Active learning in autonomous driving

A major player in data generation is the automotive industry. It is estimated that the sensors in an autonomous vehicle produce at least 5 terabytes of data per day. Labeling such volumes of data by hand is almost impossible. This is precisely where active learning comes into play. In this case, the algorithm selects video frames that are difficult to classify, for example because they contain objects that have never been seen before. A team at Nvidia, the graphics processor and chip developer, found that in the detection of pedestrians and cyclists, accuracy is higher when the training data is selected by active learning than when it is selected by humans.

Active learning is interesting when (1) a large amount of unlabeled data is available and labeling is expensive and time-consuming, and (2) a selection bias is expected in manual data selection. For example, a selection bias was evident in Nvidia’s study: Humans typically chose shots from a single driving session, while the active learning algorithm selected images from many different driving sessions. Active learning is still underused in practice, but it is gaining relevance through recent developments. Especially given the need for trustworthy AI, the approach will become more important because active learning involves a human in the learning process.