The most common way to identify users on websites and devices is through passwords. They have been used for decades for identification, are familiar to users and developers, and are easy to use. However, on their own, passwords are not particularly secure. Users tend to reuse the same password on multiple sites and to choose simple, short passwords consisting of common character strings and dictionary words. As a result, people often exhibit recognizable patterns when creating passwords. Studying these patterns and using the identified regularities to generate new, plausible passwords is the focus of the research area known as password guessing.

In collaboration with the Federal Criminal Police Office in Wiesbaden, researchers from ML2R (now the Lamarr Institute) have investigated how deep learning algorithms can be applied in the field of password guessing.

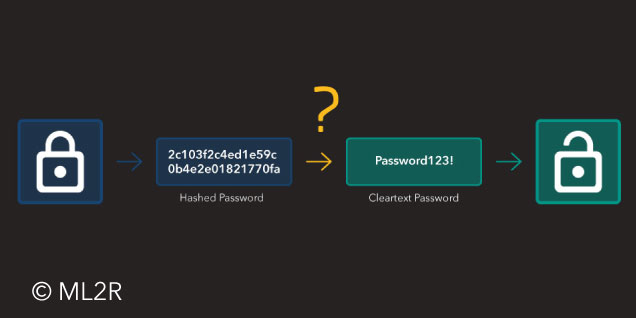

Password-guessing algorithms attempt to reconstruct passwords to test the security of systems.

Password guessing: Analyzing password datasets

Password guessing is an active research field that seeks to identify statistical patterns in known password datasets and use them to efficiently generate new passwords. The field has seen significant breakthroughs with deep learning in recent years. Our research therefore examines a broad collection of deep learning and probabilistic models for generating new, realistic password candidates. These candidates can then be used to test IT security in companies (penetration testing): if one of the generated passwords grants access to the system, the passwords in use were too weak. Users can also use these algorithms to test the strength of their own passwords: if the algorithm can derive their password from known datasets, a stronger password should be chosen. In theory, the password of a system protected only by a password can be guessed by trying all possible passwords (brute forcing). In practice, this method fails because the number of possible combinations grows exponentially with password length and becomes unmanageable even for fairly short passwords. Algorithms are therefore used to narrow the search to the character combinations that are most likely to be used as passwords.
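To make the scale of brute forcing concrete, the following minimal sketch computes the size of the search space for a given password length. The alphabet (the 94 printable ASCII characters without the space) and the guessing rate are illustrative assumptions, not figures from our experiments.

```python
import string

# Assumption: passwords are drawn from the 94 printable ASCII characters
# (letters, digits, punctuation), excluding the space character.
ALPHABET_SIZE = len(string.ascii_letters + string.digits + string.punctuation)

# Assumption: a hypothetical attacker testing ten billion candidates per second.
GUESSES_PER_SECOND = 10_000_000_000
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def keyspace(length: int, alphabet_size: int = ALPHABET_SIZE) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def years_to_exhaust(length: int) -> float:
    """Worst-case time in years to try every password of the given length."""
    return keyspace(length) / GUESSES_PER_SECOND / SECONDS_PER_YEAR


if __name__ == "__main__":
    for n in (6, 8, 10, 12):
        print(f"length {n:2d}: {keyspace(n):.3e} candidates, "
              f"{years_to_exhaust(n):.3e} years")
```

Each additional character multiplies the number of candidates by the alphabet size, which is why guessing algorithms concentrate on likely candidates instead of enumerating all of them.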

Password-guessing algorithms mainly rely on statistical analysis and the application of rules. On one hand, frequently occurring character strings in the dataset are counted and recombined, while on the other hand, rules can be created to generate new passwords from known ones. Examples include adjusting capitalization or adding numbers and letters.
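Both ideas can be illustrated with a small sketch: counting frequent character sequences in a dataset, and deriving variants of a known password through simple rules. The function names, the suffix list, and the example passwords are hypothetical and chosen only for illustration; established tools use far larger rule sets.

```python
from collections import Counter
from typing import Iterable, Iterator, List, Tuple


def frequent_substrings(passwords: Iterable[str], n: int = 4,
                        top: int = 3) -> List[Tuple[str, int]]:
    """Count all character n-grams and return the most common ones."""
    counts = Counter()
    for pw in passwords:
        for i in range(len(pw) - n + 1):
            counts[pw[i:i + n]] += 1
    return counts.most_common(top)


def apply_rules(word: str,
                suffixes: Iterable[str] = ("1", "123", "!")) -> Iterator[str]:
    """Yield case variants of `word`, each with and without a suffix."""
    suffixes = tuple(suffixes)
    seen = set()
    for base in (word, word.capitalize(), word.upper()):
        for candidate in [base, *(base + s for s in suffixes)]:
            if candidate not in seen:  # skip duplicates such as "LOVE" twice
                seen.add(candidate)
                yield candidate


if __name__ == "__main__":
    data = ["iloveyou", "love123", "mylove1", "password"]
    print(frequent_substrings(data, n=4, top=1))
    print(list(apply_rules("love")))
```

The counting step finds building blocks that occur in many passwords, and the rule step expands each known password into a family of closely related candidates.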

How deep learning advances password analysis

The advantages of deep learning models in analyzing passwords are clear: machine learning algorithms have repeatedly proven that they can recognize patterns in unstructured data that humans would be unlikely to find. Additionally, they do not have to follow rigid rules for text generation and are very flexible in their capabilities.

In our research, we develop novel generative deep learning models that independently analyze password datasets and generate new passwords whose quality can match or even surpass established methods. The architectures also allow targeted generation of passwords according to desired patterns or example passwords.

We conduct a thorough empirical analysis in a controlled framework using known datasets (RockYou, LinkedIn, Youku, Zomato, Pwnd). These datasets have become standard in research and consist solely of passwords (i.e., without associated usernames/email addresses). Our results not only identify the most promising models powered by deep neural networks but also illustrate the strengths of the different approaches in terms of generation variability and sample uniqueness. We examined three approaches:

Auto-encoder: An auto-encoder consists of an encoder and a decoder that first compress and then restore a data point (in this case, a single password) from the compressed version. The goal is to learn a compact version of the password that contains all the necessary information to restore it as accurately as possible. To generate new data points, a compressed data point is created (by sampling from a probability distribution) and passed to the decoder, which generates a password from it. An interesting property is the nature of the space of all compressed data points: similar data points are encoded into similar compressed data points. Sampling from the vicinity of an encoded data point generates new passwords that also look similar. Thus, existing knowledge about target passwords can be included in the generation process.

Generative Adversarial Networks (GANs): GANs represent the state of the art in the artificial creation of images, though they are harder to apply to text. A GAN consists of two independent networks: a generator network that creates new data points from random input (e.g., samples from a probability distribution) and a discriminator network that receives these artificial data points along with real data and decides which are real and which are artificially generated. Through training, the discriminator becomes better at distinguishing artificial from real data (passwords), and the generator learns to create realistic artificial passwords from random input.
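The interplay between the two networks can be written as a single objective. The formula below is the standard formulation from the original GAN literature, given here as an illustration; the symbols are conventional notation, and the models in our experiments may use a different variant of this loss. Here $x$ is a real password, $z$ is random input, $G$ is the generator, and $D(\cdot)$ is the discriminator's estimated probability that its input is real.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The discriminator maximizes this value by assigning high probabilities to real passwords and low probabilities to generated ones, while the generator minimizes it by producing passwords that the discriminator rates as real.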

Transformer-based language models: Transformers have revolutionized the field of natural language processing in recent years. A well-known transformer-based architecture is GPT-2, a so-called language model. Language models read text and, at each position, try to predict the next word. The model is trained by comparing its prediction with the actual next word. For training, the passwords must therefore be combined into one long continuous text of passwords in random order. To generate new text, the model is given the beginning of a sentence and continues it with the most probable words. To generate new passwords, a “sentence” can be started with a random character string, and the model then produces a long text of passwords.
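The training objective and the generation loop can be sketched without a neural network. The example below is not a transformer: it swaps in a simple character n-gram count model as a stand-in, and it treats single characters rather than words as the units to predict. The separator character, the function names, and the context length `order` are assumptions made for this illustration.

```python
import random
from collections import Counter, defaultdict

SEP = "\n"  # assumption: a newline marks the boundary between passwords


def train(passwords, order=2):
    """Count which character follows each context of `order` characters."""
    # Join all passwords into one continuous text, padded with separators
    # so that the start of every password shares the same context.
    text = "".join(SEP * order + pw for pw in passwords) + SEP
    model = defaultdict(Counter)
    for i in range(order, len(text)):
        model[text[i - order:i]][text[i]] += 1
    return model


def generate(model, order=2, max_len=16, rng=None):
    """Sample one password character by character until a separator appears."""
    rng = rng or random.Random()
    context, out = SEP * order, []
    while len(out) < max_len:
        counts = model.get(context)
        if not counts:  # context never seen during training
            break
        chars, weights = zip(*counts.items())
        nxt = rng.choices(chars, weights=weights)[0]
        if nxt == SEP:
            break
        out.append(nxt)
        context = (context + nxt)[-order:]
    return "".join(out)


if __name__ == "__main__":
    model = train(["love12", "dove99"])
    rng = random.Random(0)
    print([generate(model, rng=rng) for _ in range(5)])
```

Even this toy model can recombine what it has seen: trained on "love12" and "dove99", it can also produce "love99" or "dove12". A transformer follows the same predict-and-sample loop but conditions on much longer contexts with learned representations.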

An interesting variant of language model training is pretraining and fine-tuning. The model is first trained on a large corpus of English text and then fine-tuned on password data. Developers make available pretrained models that have already been trained on large amounts of data with significant computational resources and expensive hardware. In practice, this type of training shows advantages when the password dataset is relatively small. Training on ordinary language seems to give the models general knowledge about language that can then be transferred to password data (for more information on how transformer-based language models work, using BERT as an example, see our articles: Capturing the meaning of words through vectors and BERT: How do vectors accurately describe the meaning of words?).

Auto-encoders can exploit the structure of compressed data points to generate passwords with specific properties. Here: All passwords contain the word ‘love’.

Deep learning models as flexible password generators

All of the models mentioned have shown the ability to generate realistic passwords that are not already part of the training dataset. Many of these new passwords are found in other password datasets, meaning the generated strings are not random but have actually been used by humans as passwords. The models have thus learned what passwords look like and how they are structured simply by reading a dataset, and they can independently create completely new passwords based on what they have learned. Additionally, the trained models can read passwords and compare them with the training data, allowing users to be warned about insecure passwords. Auto-encoder-based models additionally make it possible to specify properties that the generated passwords should have.
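The kind of comparison described above can be sketched as a small evaluation routine: it measures how many generated passwords are unique, how many are new relative to the training data, and how many appear in a separate held-out dataset. The metric names and the example data are assumptions for illustration and do not necessarily match the definitions used in the preprint.

```python
def evaluate(generated, train_set, test_set):
    """Summarize generated guesses against training and held-out passwords."""
    if not generated or not test_set:
        raise ValueError("generated and test_set must be non-empty")
    unique = set(generated)
    novel = unique - set(train_set)   # guesses not seen during training
    held_out = set(test_set)
    hits = novel & held_out           # new guesses that humans really used
    return {
        "uniqueness": len(unique) / len(generated),
        "novelty": len(novel) / len(unique),
        "test_matches": len(hits),
        "test_coverage": len(hits) / len(held_out),
    }


if __name__ == "__main__":
    generated = ["love12", "love12", "dove99", "qwerty1", "abc"]
    train_set = ["love12", "password"]
    test_set = ["dove99", "letmein", "abc", "zzz"]
    print(evaluate(generated, train_set, test_set))
```

A high number of matches in the held-out set indicates that the model has learned realistic password structure instead of memorizing the training data or emitting random strings.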

We see that deep learning-based models can not only keep up with rule-based approaches that require laborious manual tuning but also enable functions that were hardly feasible with these conventional methods. Future research will focus on applying insights from the wide range of existing password-guessing algorithms to enhance the performance of the models described here. Details on the models used, training, results, and comparisons with existing algorithms are summarized in a preprint written in collaboration with the Federal Criminal Police Office:

Generative Deep Learning Techniques for Password Generation. David Biesner, Kostadin Cvejoski, Bogdan Georgiev, Rafet Sifa, Erik Krupicka, 2020, arXiv.

An extended version was published as a conference paper at ICANN 2021:

Advances in Password Recovery using Generative Deep Learning Techniques. David Biesner, Kostadin Cvejoski, Bogdan Georgiev, Rafet Sifa, Erik Krupicka, Artificial Neural Networks and Machine Learning – ICANN, 2021.