In many cases, Machine Learning models, especially deep neural networks, achieve quality comparable to human work, or even exceed it, on narrowly defined tasks. Nevertheless, they are not perfect systems: errors, for example in predictions, do occur. In some cases, when the given data does not allow for perfect predictions, errors are even inevitable. For instance, the electricity consumption of a household in the next hour depends heavily on individual decisions made by the residents, which can at best be inferred indirectly from previous consumption patterns. A perfect prediction is not possible in this case.

In many instances, such errors are not critical, especially when they can be easily detected and the process can be repeated at will. For example, a request to a speech recognition or voice control system can simply be repeated if it is not understood. On the other hand, there are critical applications where these conditions are not, or only partially, met. Here, faulty predictions from the AI application can lead to personal injury or financial losses (such as property damage or opportunity costs). In these cases, a well-calibrated estimate of output uncertainty can help (i) identify problematic outputs, which avoids errors and increases reliability, and (ii) enable human intervention or other mitigation strategies.

In this contribution, we first explain the relevance of uncertainty estimation with two practical examples. This is followed by a brief theoretical overview of the different types of uncertainty in data-driven modeling and the requirements that should be placed on an uncertainty estimator.

Uncertainty estimation in practice

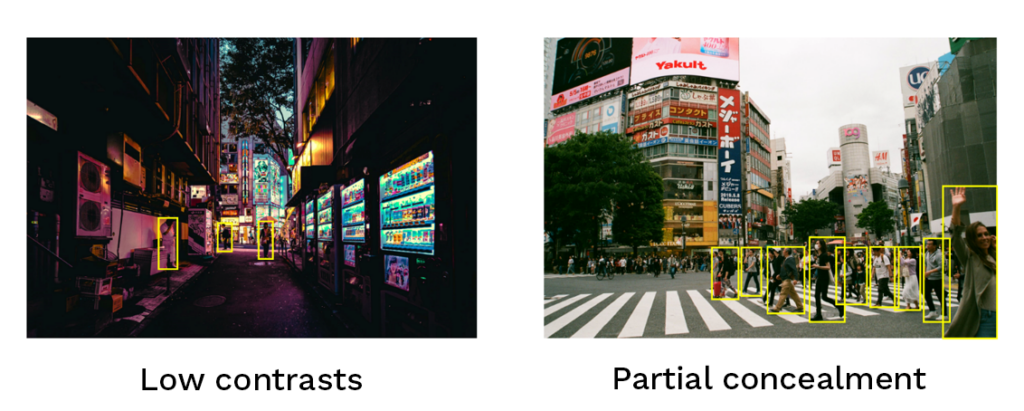

A typical example, due to its high demands and complexity, is autonomous driving. Here, it is particularly important to ensure the detection of vulnerable road users (VRUs, such as pedestrians). On the one hand, misjudgments or uncertainties regarding the position and trajectory of road users, which can arise from partial occlusion, for example, should be detected early and factored into the planning. A pedestrian may, for instance, be largely obscured by other pedestrians or vehicles and therefore be only partially detectable (see Figure 1, right image). On the other hand, unreliable detection of the “object” type also poses a potential risk, for example, if a highly dynamic VRU, such as a cyclist, is mistakenly identified as a much slower VRU, like a pedestrian. Here, an uncertainty estimate can help by prompting the planner to treat this road user with extra caution.

However, even outside of protecting human life, uncertainty estimation makes relevant contributions. In a production line, for instance, Automated Optical Inspection (AOI) can be used for quality assurance of a serially manufactured product. A faulty prediction can lead (at best) to unnecessary waste as high-quality products are discarded, or (at worst) to the further processing or sale of a defective product. A well-calibrated uncertainty estimate may not directly increase the performance of the AI application, but it can indicate which products would benefit from further review (for example, by a human). This would reduce waste and minimize quality losses (assuming that the human and the AI check independently).
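The review step described above can be sketched as a simple routing rule. This is a minimal illustration under stated assumptions, not a production recipe: the function names `route_for_review` and `error_rate`, the threshold of 0.9, and the example values are all hypothetical, and the sketch assumes the model reports one confidence value per inspected product.

```python
from typing import List, Tuple


def route_for_review(
    confidences: List[float], threshold: float = 0.9
) -> Tuple[List[int], List[int]]:
    """Split product indices into auto-accepted and human-review groups.

    The threshold is a hypothetical choice; in practice it would be tuned
    to the cost of review versus the cost of a wrong decision.
    """
    auto_accept, human_review = [], []
    for index, confidence in enumerate(confidences):
        if confidence >= threshold:
            auto_accept.append(index)
        else:
            human_review.append(index)
    return auto_accept, human_review


def error_rate(indices: List[int], correct: List[bool]) -> float:
    """Fraction of wrong model decisions among the given indices."""
    if not indices:
        return 0.0
    return sum(1 for i in indices if not correct[i]) / len(indices)


# Made-up example: model confidence per product and whether the model was right.
confidences = [0.99, 0.97, 0.62, 0.95, 0.55, 0.98, 0.71, 0.93]
correct = [True, True, False, True, True, True, False, True]

auto_accept, human_review = route_for_review(confidences)
overall = error_rate(list(range(len(correct))), correct)
remaining = error_rate(auto_accept, correct)

print(f"sent to human review: {human_review}")
print(f"model error rate on all products:       {overall:.2f}")
print(f"model error rate on auto-accepted only: {remaining:.2f}")
```

In this made-up data the low-confidence products include both model errors, so the auto-accepted group is error-free. That only happens when the uncertainty estimate actually correlates with the errors; with a poorly calibrated estimator, the same rule would send the wrong products to review.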

While the two examples mentioned come from the field of computer vision, uncertainty arises in nearly all areas of Machine Learning and affects all common problem types, be it classification (e.g., AOI) or regression (e.g., electricity consumption). Being able to reliably estimate it is therefore one of the key directions for trustworthy AI (see safety methods for AI).

Figure 1: Uncertainty in Machine Learning comes in many forms and differs from data type to data type. Some cases can be illustrated particularly well for image data: For example, low contrasts (e.g. in night shots, left image) make processing by an ML model just as difficult as the (partial) overlapping (occlusion) of people or objects (right image).

What are the sources of uncertainty?

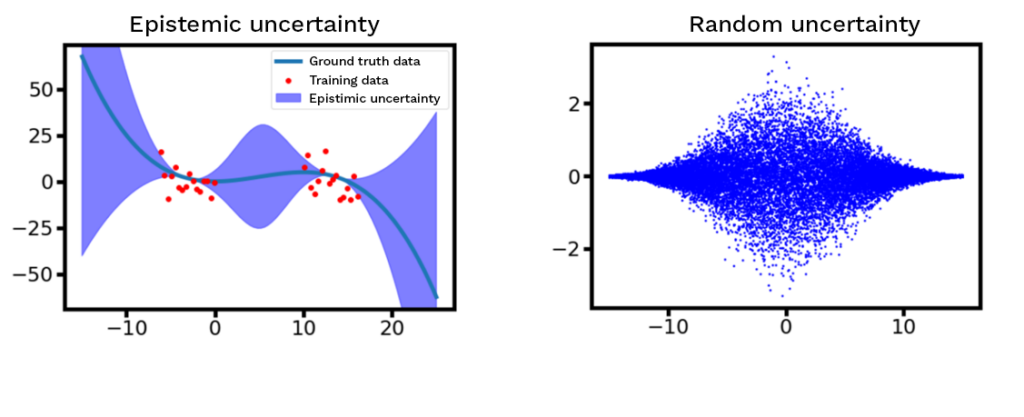

Uncertainties in model predictions can stem from various sources. Aside from minor model weaknesses that are difficult to avoid, the literature often distinguishes between two fundamental types or causes: epistemic and aleatoric uncertainty (see Figure 2).

In the first case, the data on which the ML model has been trained does not cover the entire range of possible input data, and the model must interpolate between the seen data points or extrapolate beyond them. In these areas, it is often impossible to make a fully reliable statement about the expected outcome (i.e., the “true” course of the data in the diagram). This form of uncertainty is common in problems with low data density relative to their complexity, where strong generalization between data points is required. It is, therefore, also a standard problem for AI applications used in an “open world context,” where not all possible input data can be known in advance. This applies especially to AI applications with open interfaces, such as those freely accessible on the internet or in public spaces (e.g., camera systems of autonomous vehicles), where interactions or inputs are not always predictable. In the case of pedestrian detection for autonomous driving, for example, a pedestrian in a lion costume, as might be encountered around carnival season, could present a previously unseen challenge to the application. If the AI application does not extrapolate appropriately in this case, an epistemic uncertainty estimator should at least flag the person as a new “object.”
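One common way to make epistemic uncertainty visible, though not necessarily the approach used in the projects discussed here, is to train several models on resampled versions of the training data and look at how much their predictions disagree. The sketch below assumes a toy one-dimensional regression problem with training inputs between 0 and 1; all function names and numbers are hypothetical. The models agree closely inside the training range and diverge far outside it.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def bootstrap_ensemble(xs, ys, n_models=50, seed=0):
    """Fit one line per bootstrap resample of the training data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    while len(models) < n_models:
        idx = [rng.randrange(n) for _ in range(n)]
        sample_x = [xs[i] for i in idx]
        if len(set(sample_x)) < 2:
            continue  # degenerate resample: a line cannot be fitted
        models.append(fit_line(sample_x, [ys[i] for i in idx]))
    return models


def ensemble_spread(models, x):
    """Standard deviation of the ensemble predictions at input x."""
    return statistics.pstdev(a + b * x for a, b in models)


# Toy training data: inputs only cover the interval [0, 1].
data_rng = random.Random(42)
train_x = [i / 29 for i in range(30)]
train_y = [2.0 * x + data_rng.gauss(0.0, 0.1) for x in train_x]

models = bootstrap_ensemble(train_x, train_y)
inside = ensemble_spread(models, 0.5)    # within the training range
outside = ensemble_spread(models, 10.0)  # far outside the training range

print(f"ensemble spread inside the training range: {inside:.3f}")
print(f"ensemble spread far outside the range:     {outside:.3f}")
```

The spread is only a proxy: it reflects disagreement among models of one fixed family, so it can still underestimate the uncertainty when all ensemble members share the same wrong assumption about the data.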

The second type of uncertainty, aleatoric uncertainty, refers to inherent uncertainties in the data. Prototypical examples are noisy datasets, such as the aforementioned electricity consumption of a household. Generally, this concerns problems where the data does not allow for a clear decision. This can apply to complex tasks beyond the regression example. For instance, in segmentation (i.e., pixel-accurate object detection), human experts may disagree on the exact object boundaries. This occurs, for example, in the medical field when distinguishing between healthy and diseased tissue. To make results from AI applications for such tasks more meaningful, it can be helpful to represent these discrepancies as (aleatoric) output uncertainty.
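A small simulation can illustrate why aleatoric uncertainty is a property of the data rather than of the model. The sketch below assumes a hypothetical noise model in which the spread of the target grows with the input (`noise_std`, `measure`, and all constants are invented for illustration). Repeating the measurement more often makes the estimate of the spread more precise, but it does not make the spread itself smaller.

```python
import random
import statistics


def noise_std(x: float) -> float:
    """Hypothetical noise model: the spread of y grows with x."""
    return 0.1 + 0.5 * x


def measure(x: float, n: int, rng: random.Random) -> list:
    """Draw n noisy observations of the (made-up) relationship y = 2x."""
    return [2.0 * x + rng.gauss(0.0, noise_std(x)) for _ in range(n)]


rng = random.Random(0)
estimates = {}
for n in (50, 5000):
    low = statistics.stdev(measure(0.1, n, rng))
    high = statistics.stdev(measure(0.9, n, rng))
    estimates[n] = (low, high)
    print(f"n={n:5d}  spread at x=0.1: {low:.3f}  spread at x=0.9: {high:.3f}")

# With many samples the estimates settle near the true noise levels
# (0.15 and 0.55 under the assumed noise model) instead of shrinking to zero.
spread_low, spread_high = estimates[5000]
```

This is the input-dependent width of the data distribution that an aleatoric uncertainty estimator is meant to report.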

Especially in complex problems, both types of uncertainty usually arise, with varying degrees of significance. To operate AI applications reliably in these environments, it is often helpful if the reliability of their statements can be assessed, or their uncertainty estimated, whether the task is classification or the prediction of continuous numerical results.

Figure 2: Schematic representation of two types of uncertainty. Epistemic uncertainty (left) is particularly pronounced in areas where the model has not seen any data. Aleatoric uncertainty (right) is a property of the data that does not reveal a deterministic relationship between x and y values. Here, as shown, the uncertainty, i.e. the width of the data distribution, can depend on the specific x-value.

Requirements for uncertainty estimators

Especially in applications with an “open world context,” the estimation of epistemic uncertainty can be used to detect structurally new data and thus protect the model from output errors. In addition to this capability, which is also applied in areas like active learning, there are two central requirements for uncertainty estimators to be useful. The first is calibration, which is often discussed in the literature and was already mentioned above: If an AI algorithm makes a classification statement, such as about the suitability of a tested product, with 99% confidence, the expectation is that only about one out of 100 products evaluated in this way is unsuitable. Similar statements apply in regression to predicted target intervals, within which the true value is expected to lie with 99% (or any other) probability. Calibration is thus a “performance measure” not for the actual AI application but for the quality of the associated uncertainty estimation. It is often measured using the “Expected Calibration Error” (ECE). The second requirement, besides this (global) calibration, is a sufficient correlation between the uncertainty estimate and the actual model quality for a given input. This correlation is crucial for initiating further steps, such as mitigation strategies like human review in the AOI example above, and it necessitates an input-dependent uncertainty estimation.
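Both calibration checks described above can be sketched in a few lines. The code below is a minimal illustration that assumes the widely used binned form of the ECE with equal-width confidence bins; the function names, the number of bins, and the example data are hypothetical choices, and other binning schemes exist. The second function computes the empirical coverage of prediction intervals for the regression case.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted average of |mean confidence - accuracy| per bin.

    Bins are equal-width intervals [k / n_bins, (k + 1) / n_bins); a
    confidence of exactly 1.0 is assigned to the last bin.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for confidence, is_correct in zip(confidences, correct):
        k = min(int(confidence * n_bins), n_bins - 1)
        bins[k].append((confidence, is_correct))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / n * abs(mean_conf - accuracy)
    return ece


def interval_coverage(lower, upper, targets):
    """Fraction of true values that fall inside their predicted interval."""
    hits = sum(1 for lo, hi, y in zip(lower, upper, targets) if lo <= y <= hi)
    return hits / len(targets)


# Made-up classifier outputs: 15 of 20 predictions are correct in both cases.
outcomes = [True] * 15 + [False] * 5
calibrated = expected_calibration_error([0.75] * 20, outcomes)
overconfident = expected_calibration_error([0.95] * 20, outcomes)
print(f"ECE, stated confidence 0.75: {calibrated:.2f}")
print(f"ECE, stated confidence 0.95: {overconfident:.2f}")

# Made-up regression intervals: three of four true values are covered.
coverage = interval_coverage([0, 0, 0, 0], [1, 1, 1, 1], [0.5, 0.2, 1.5, 0.9])
print(f"empirical interval coverage: {coverage:.2f}")
```

For a calibrated regression model, the empirical coverage should be close to the nominal level of the intervals, for example close to 0.99 for 99% intervals. Note that both quantities are global averages: a low ECE does not by itself guarantee that the uncertainty estimate tracks model quality for each individual input.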

Conclusion

Despite their high performance, AI models rarely operate completely error-free. Especially in safety-critical applications, it is important to detect faulty predictions as early as possible to prevent damage. A well-calibrated uncertainty estimation can contribute to this as a form of “self-control.” For this reason, Fraunhofer IAIS is working on solutions and technologies for reliable AI. More information on related projects can be found here.