Neural networks are well suited to image classification and are already widely used, delivering outstanding results on benchmark datasets such as ImageNet (about 90% accuracy). They are a popular means of solving various image processing tasks, including the analysis of medical images such as MRI scans, the identification of plant species from photos (study in German), and the classification of traffic signs in autonomous driving. Hostile attacks on these Machine Learning prediction models, for instance in traffic scenarios, could lead to significant harm, including harm to people.

Three learning phases of a neural network classifier

Neural networks (NN) can assign properties, for example those extracted from training images, to individual classes. For this purpose, the classes of the training examples must be known in advance. From the existing image features, the network learns to distinguish the classes. The result is a discrimination function that sorts images into one of the known classes (note: some applications add a rejection class for inputs that match none of the known classes). This function is also called a classifier. The article on deep learning gives a more in-depth explanation of how neural networks function.

The training of an NN classifier consists of several phases, each of which uses different data. Here we consider supervised learning, which involves specific feedback loops.

Training phase: The network extracts certain properties from training data, which are then used to form the classification function.

Validation phase: Subsequently, the classification performance is determined with other images. Based on the result, the learned distinction of the classes is adjusted so that the network delivers better results in the future.

With this improved classification function, another training phase is run, and its results are validated again. This process is repeated until a set number of iterations has been reached.

Test phase: At the end of the training, the final classification performance is determined with a test set that is unknown to the network so far.
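The interplay of the three phases can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not the setup used for the traffic sign model: a tiny logistic regression stands in for the neural network, the two-dimensional data is synthetic, and using the validation score to keep the best weights is only one common form of the feedback described above. All names (`make_data`, `train_epoch`, `accuracy`) are hypothetical.

```python
import math
import random

def make_data(n, rng):
    """Two noisy 2-D clusters standing in for image features of two classes."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 1.5 if label == 1 else -1.5
        data.append(([rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)], label))
    return data

def predict(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    z = w[0] * x[0] + w[1] * x[1] + b
    return 1.0 / (1.0 + math.exp(-z))

def accuracy(w, b, data):
    hits = sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return hits / len(data)

def train_epoch(w, b, data, lr=0.1):
    """One pass of stochastic gradient descent over the training set."""
    for x, y in data:
        err = predict(w, b, x) - y
        w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
        b -= lr * err
    return w, b

rng = random.Random(0)
# Three separately drawn sets, so no sample is shared between phases.
train, val, test = make_data(200, rng), make_data(50, rng), make_data(50, rng)

w, b = [0.0, 0.0], 0.0
best = (accuracy(w, b, val), w, b)
for epoch in range(10):
    w, b = train_epoch(w, b, train)      # training phase
    val_acc = accuracy(w, b, val)        # validation phase
    if val_acc >= best[0]:               # feedback: keep the better classifier
        best = (val_acc, w, b)

_, w, b = best
test_acc = accuracy(w, b, test)          # test phase, on data not seen so far
print(f"validation accuracy: {best[0]:.2f}, test accuracy: {test_acc:.2f}")
```

A large gap between the validation and test accuracy printed at the end would be a hint that the classifier has adapted too closely to the data it already knows.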

It is important that the different sets do not contain the same elements; otherwise the network may simply memorize the given images instead of analyzing their properties. It can also happen that very good results are achieved in the validation phase while the final test shows poor classification. This phenomenon is called overfitting: the classifier has adapted too closely to the known training and validation data.

Division of the data set into training set, validation set and test set
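A disjoint division of this kind could look as follows. The sketch assumes the dataset is a simple list of labelled samples; the function name `split_dataset`, the 70/15/15 ratio, and the placeholder image IDs are illustrative choices, not values from our experiment.

```python
import random

def split_dataset(samples, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle once, then cut the data into three disjoint parts."""
    if not 0 < val_fraction + test_fraction < 1:
        raise ValueError("fractions must leave room for training data")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    n_test = round(len(samples) * test_fraction)
    n_val = round(len(samples) * val_fraction)
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]
    # Every index appears in exactly one slice, so the sets cannot overlap.
    return ([samples[i] for i in train_idx],
            [samples[i] for i in val_idx],
            [samples[i] for i in test_idx])

# Hypothetical stand-in for labelled images: (image_id, class_label) pairs.
dataset = [(f"img_{i:03d}", i % 4) for i in range(100)]
train_set, val_set, test_set = split_dataset(dataset)
print(len(train_set), len(val_set), len(test_set))
```

Fixing the seed keeps the split reproducible, so the test set stays unknown to the network across repeated training runs.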

The attack surface of a network can be defined in terms of the information and capabilities an attacker possesses. The more information an attacker has about a Machine Learning model, the more options are available for an attack, and the more precisely attacks can be tailored to the model. A model can be corrupted in any of the three phases of the learning process described above.

Most commonly, trained and tested models are attacked through so-called exploratory attacks, which try to provoke false classifications or to obtain information about the model. One example is spam emails that, thanks to clever alterations of the content such as deliberate spelling mistakes, are not recognized and filtered out by spam filters. In this case, the attacker has no access to the training process or the structure of the model and can only pass data to the trained model. These attacks therefore fall into the testing phase, in which the model itself is not influenced.
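The spam example can be made concrete with a deliberately naive filter. The keyword list, the threshold of two hits, and the messages below are invented for illustration; real spam filters are considerably more sophisticated, but the principle of probing a fixed model with altered inputs is the same.

```python
# Hypothetical blocklist of a toy spam filter.
SPAM_KEYWORDS = {"lottery", "winner", "prize"}

def is_spam(message: str) -> bool:
    """Flag a message if it contains at least two blocklisted words."""
    words = {word.strip(".,!?:;").lower() for word in message.split()}
    return len(words & SPAM_KEYWORDS) >= 2

original = "Congratulations winner! Claim your lottery prize now."
# Deliberate spelling mistakes keep the text readable for a human,
# but none of the altered words matches the blocklist any more.
evasive = "Congratulations w1nner! Claim your lotterry pr1ze now."

print(is_spam(original), is_spam(evasive))
```

The attacker never touches the filter itself; only the input is altered until the output changes.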

White-box and black-box attacks

In principle, attacks on the testing phase can be divided according to the information the attacker has about the model. White-box and black-box attacks can be distinguished.

In a white-box attack, the attacker has knowledge of the network’s structure, the type of training procedure, and the data with which the network is trained. This allows them to analyze the model and its weaknesses and deliberately introduce small deviations into data to create misclassifications.
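To illustrate how knowledge of the model helps, the following sketch applies one step of the fast gradient sign method (FGSM), a well-known white-box technique that is not part of the experiment described in this article. It assumes a tiny logistic regression whose weights are known to the attacker; the weights, the input, and the step size `epsilon` are made-up values.

```python
import math

# Hypothetical, already trained model. In a white-box setting the
# attacker can read these parameters directly.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.2

def prob_class1(x):
    """Model output: probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(x, true_label, epsilon):
    """Shift every feature by +/- epsilon in the direction that
    increases the cross-entropy loss for the true label."""
    # For logistic regression: d(loss)/d(x_i) = (p - y) * w_i
    err = prob_class1(x) - true_label
    sign = lambda v: (v > 0) - (v < 0)
    return [xi + epsilon * sign(err * w) for xi, w in zip(x, WEIGHTS)]

x = [0.4, 0.3, 0.2]                       # classified as class 1
x_adv = fgsm(x, true_label=1, epsilon=0.2)

print(prob_class1(x) > 0.5, prob_class1(x_adv) > 0.5)
```

Because the gradient is computed from the known weights, a single small step per feature is enough here to push the input across the decision boundary.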

In a black-box scenario, an attacker does not have this knowledge and only sees the input data and the results that the network outputs. During an attack, for example, various manipulated images are given to the network, and the attacker infers from the output where possible weaknesses in the neural network might be. Basic information about manipulated inputs can be found in our article on so-called adversarial examples.

Both kinds of attack pursue the same goal: to cause a misclassification by the network while making as few visible changes as possible to the original data. This way, a human cannot tell that an attack has been carried out. The induced misclassification can have massive consequences in practice. For example, it could prevent an autonomous vehicle from correctly recognizing a traffic sign and therefore from behaving accordingly.

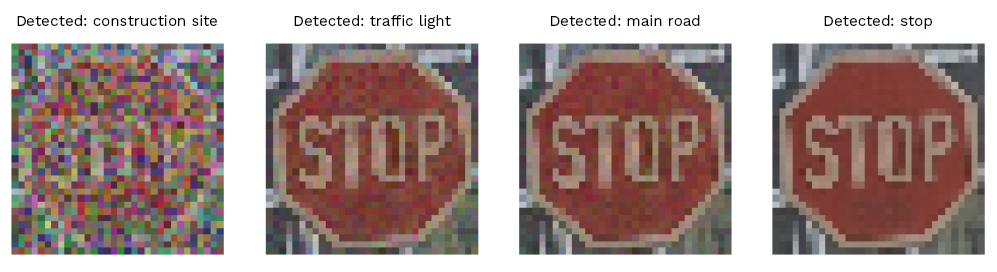

Application of a black-box attack

We demonstrate how easily the classification of a Machine Learning procedure can be broken in the following example:

We work with the German Traffic Sign Recognition Benchmark (GTSRB), a dataset of German traffic signs from Ruhr University Bochum. A classification model is trained on this data. Various attacks can be executed using the Adversarial Robustness Toolbox, an open-source library started by IBM and now hosted by the LF AI & Data Foundation. Here, for example, we use the Hop-Skip-Jump attack. This black-box attack iteratively searches for good adversarial examples, that is, examples that change the original image minimally while still effectively attacking the ML model. Ultimately, the best example found is used for the attack.

From left to right: Iterative search for a good adversarial example, i.e. one that changes the image as little as possible, using the Hop-Skip-Jump method with simultaneous classification by the model. Far right: the original image for comparison. The attack generates a marginal change in the pixel values so that the resulting image is no longer recognized by the network as a stop sign, but as a sign for a priority road.
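The core idea of such a decision-based search can be sketched without any library. The code below is a strongly simplified illustration, not the Hop-Skip-Jump implementation from the Adversarial Robustness Toolbox: it only performs a binary search towards the decision boundary, whereas the full method also estimates a gradient direction from further queries. The two-feature `classify` function is a hypothetical stand-in for the traffic sign model.

```python
def classify(x):
    """Hypothetical black-box model: only the predicted label is visible."""
    return "stop" if x[0] + x[1] < 1.0 else "priority"

def boundary_search(original, start, steps=20):
    """Binary search on the line between `start` (already misclassified)
    and `original` for the misclassified point closest to `original`."""
    true_label = classify(original)
    if classify(start) == true_label:
        raise ValueError("start must receive a different label")
    lo, hi = 0.0, 1.0   # fraction of the way from original (0) to start (1)
    for _ in range(steps):
        mid = (lo + hi) / 2
        candidate = [o + mid * (s - o) for o, s in zip(original, start)]
        if classify(candidate) != true_label:
            hi = mid    # still misclassified: move closer to the original
        else:
            lo = mid    # back on the original side: step away again
    return [o + hi * (s - o) for o, s in zip(original, start)]

original = [0.3, 0.4]   # labelled "stop"
start = [0.9, 0.9]      # any input that the model labels differently
adversarial = boundary_search(original, start)

print(classify(original), classify(adversarial), adversarial)
```

Each loop iteration costs exactly one query to the model, which is all a black-box attacker needs: no weights, gradients, or training data are used.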

Defense against a black-box attack

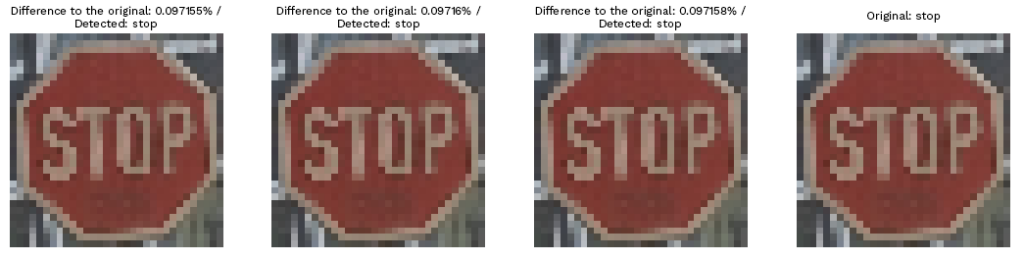

Our example illustrates the dangers of hostile attacks on Machine Learning prediction models. In the specific use case, we countered the attack by including adversarial examples in the training data. The ML model thus learned manipulated data to some extent, which makes its classification harder to break. We used DeepFool for this purpose, a generator for adversarial examples that is specifically designed for deep neural networks and is also included in the Adversarial Robustness Toolbox. Because the network already learns certain manipulation patterns during training, attacks via the Hop-Skip-Jump method must become more specific. In our use case, once this defense was in place, the manipulation produced by the attack was no longer sufficient to cause the model to misclassify.

The iterative search in the Hop-Skip-Jump method adapts to the more robust model and refines the manipulation. The manipulation is visible only in the difference from the original image. The changes are no longer sufficient to falsify the classification.
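The principle of training on adversarial examples can be sketched as follows. Note the assumptions: instead of a deep network and DeepFool, the sketch uses a simple perceptron and a bounded sign step as a stand-in generator, and the eight data points and the value of `epsilon` are invented. It shows the mechanism only and says nothing about the robustness achieved on GTSRB.

```python
def score(w, b, x):
    return w[0] * x[0] + w[1] * x[1] + b

def train_perceptron(data, epochs=200):
    """Plain perceptron; labels are +1 or -1."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            if y * score(w, b, x) <= 0:
                w = [w[0] + y * x[0], w[1] + y * x[1]]
                b += y
                mistakes += 1
        if mistakes == 0:        # every sample is classified correctly
            break
    return w, b

def sign_step(w, x, y, epsilon):
    """Stand-in generator: shift each feature by epsilon in the
    direction that lowers the score for the true label y."""
    sgn = lambda v: (v > 0) - (v < 0)
    return [xi - epsilon * y * sgn(wi) for xi, wi in zip(x, w)]

def is_correct(model, x, y):
    w, b = model
    return y * score(w, b, x) > 0

# Hypothetical two-class toy data.
clean = [([1, 1], 1), ([2, 1], 1), ([1, 2], 1), ([2, 2], 1),
         ([-1, -1], -1), ([-2, -1], -1), ([-1, -2], -1), ([-2, -2], -1)]

baseline = train_perceptron(clean)
# Attack the baseline model, but keep the TRUE labels for retraining.
adversarial = [(sign_step(baseline[0], x, y, 0.75), y) for x, y in clean]
robust = train_perceptron(clean + adversarial)

fooled_before = sum(not is_correct(baseline, x, y) for x, y in adversarial)
fooled_after = sum(not is_correct(robust, x, y) for x, y in adversarial)
print(fooled_before, fooled_after)
```

The retrained model handles the manipulated inputs that were generated against the baseline. An attacker who targets the retrained model directly may still find new adversarial examples, which is why the defense raises the effort required rather than ruling attacks out.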

The countermeasures developed by the research community are as diverse as the forms of attacks on Machine Learning models. Robustness against attacks should therefore always be considered when developing industrial and production systems with Artificial Intelligence. Where necessary, existing defense mechanisms can be used to protect Machine Learning procedures and the resulting models to a certain extent. An overview of various defense strategies against adversarial examples is provided in the article by our colleague Linara Adilova.