In recent decades, digitalization has become increasingly important and has transformed nearly every aspect of our lives. One area where it has enabled significant advances is Machine Learning (ML): with ever-larger datasets and more computational power available, complex algorithms can now be applied. However, larger datasets also bring challenges, particularly in processing. Data with many features, or dimensions, is hard to handle, and a central technique for addressing this is dimensionality reduction. One method for dimensionality reduction is Linear Discriminant Analysis (LDA). Below, you will learn how LDA can be used to reduce the dimensionality of large, high-dimensional data and why dimensionality reduction is a key part of Machine Learning for overcoming the curse of dimensionality.

What is dimensionality reduction?

Dimensionality reduction is a process in which the number of features, also known as the dimensions of a dataset, is reduced. The goal is to decrease the dataset’s complexity, making it easier to analyze and interpret. One important reason for applying dimensionality reduction is the “curse of dimensionality”: the more dimensions a dataset has, the more data points are required to make reliable statements about patterns and relationships in the data, and this requirement can grow exponentially with the number of dimensions.
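The effect can be made tangible with a small experiment. The following Python sketch, which assumes NumPy is available, measures distance concentration, one well-known symptom of the curse of dimensionality: for a fixed number of random points, the nearest and the farthest neighbor of a query point become almost equally far away as the number of dimensions grows, so distance-based patterns become harder to detect. The function name `distance_contrast` and all parameter values are hypothetical choices for this illustration.

```python
import numpy as np

def distance_contrast(n_points: int, n_dims: int, rng: np.random.Generator) -> float:
    """Relative gap between the farthest and the nearest neighbor of one query point.

    Points and query are drawn uniformly from the unit hypercube [0, 1]^n_dims.
    Values close to 0 mean all points are roughly equally far away.
    """
    points = rng.random((n_points, n_dims))
    query = rng.random(n_dims)
    dists = np.linalg.norm(points - query, axis=1)
    return float((dists.max() - dists.min()) / dists.min())

rng = np.random.default_rng(seed=0)
for d in (2, 10, 100, 1000):
    # Same number of points in every case; only the dimensionality changes.
    print(f"{d:>4} dims: contrast = {distance_contrast(500, d, rng):.3f}")

# Splitting every feature into 10 bins yields 10**d cells that data must populate.
for d in (1, 2, 3, 10):
    print(f"{d:>2} features -> {10**d:,} grid cells")
```

With 500 points, the contrast shrinks sharply from 2 to 1000 dimensions, while the number of grid cells to fill explodes.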

By applying dimensionality reduction techniques such as Principal Component Analysis (PCA) or Linear Discriminant Analysis (LDA), the number of dimensions in a dataset can be reduced. These techniques create new features as combinations of the original ones and keep only the most important information in them, so that the reduced data still represents the original data as accurately as possible (learn more about how much data Artificial Intelligence really needs in this related blog post).

Dimensionality reduction is thus an essential part of Machine Learning: it helps to overcome the curse of dimensionality and enables complex datasets to be analyzed and interpreted more effectively. Which features of the data count as important is determined by the chosen algorithm. For example, an algorithm might aim to identify the features in which the data differ the most. Which algorithms are suitable depends both on the type of data being analyzed and on the specific goals of the dimensionality reduction.

A distinction is made between classifiable and non-classifiable data. Non-classifiable data refers to datasets whose data points are not assigned to categories or classes. For such data, dimensionality reduction techniques often aim to analyze the structure or composition of the dataset. Classifiable datasets, on the other hand, contain data points that are assigned to specific classes. Two data points in such datasets can therefore be compared not only based on their features but also based on their labels, which indicate the assigned classes. A typical goal of an algorithm for classifiable data is to separate data points from different classes (read more about classification in the blog post “Classification in Machine Learning”).
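To illustrate the difference, the following Python sketch compares a method for non-classifiable data that only looks at where the data vary the most, here PCA, with LDA, which also uses the class labels. It assumes NumPy; the data are synthetic and the helper `separation` is a hypothetical name. Both classes are stretched along the first feature but differ only along the second, so the direction of largest variance is useless for separating them.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 200
# Two synthetic classes: wide spread along feature 0, separated only along feature 1.
X = np.vstack([
    rng.normal(loc=[0.0, -1.0], scale=[5.0, 0.5], size=(n, 2)),
    rng.normal(loc=[0.0, 1.0], scale=[5.0, 0.5], size=(n, 2)),
])
y = np.array([0] * n + [1] * n)  # class labels, used only by LDA

# PCA (labels ignored): eigenvector of the covariance matrix with the largest eigenvalue.
eigvals, eigvecs = np.linalg.eigh(np.cov(X, rowvar=False))
pca_dir = eigvecs[:, np.argmax(eigvals)]

# Two-class LDA (labels used): w is proportional to S_W^{-1} (mean_1 - mean_0).
X0, X1 = X[y == 0], X[y == 1]
mean0, mean1 = X0.mean(axis=0), X1.mean(axis=0)
S_W = (X0 - mean0).T @ (X0 - mean0) + (X1 - mean1).T @ (X1 - mean1)
lda_dir = np.linalg.solve(S_W, mean1 - mean0)
lda_dir /= np.linalg.norm(lda_dir)

def separation(direction):
    """Gap between projected class means in units of the pooled within-class std."""
    z0, z1 = X0 @ direction, X1 @ direction
    pooled_std = np.sqrt((z0.var() + z1.var()) / 2)
    return abs(z1.mean() - z0.mean()) / pooled_std

print("PCA direction:", np.round(pca_dir, 3), "separation:", round(separation(pca_dir), 2))
print("LDA direction:", np.round(lda_dir, 3), "separation:", round(separation(lda_dir), 2))
```

PCA picks the first feature because that is where the data differ the most, whereas LDA picks the second feature because that is where the classes differ.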

Selecting or combining features?

Dimensionality reduction procedures fall into two types, which differ in what the resulting features look like.

In feature selection, the reduction is carried out by omitting unimportant features. To do this, an algorithm must first evaluate the features so that the less important ones can be left out of the reduced dataset. Feature projection algorithms, by contrast, reduce the dataset by combining several features into new ones. LDA belongs to this second group: its aim is to combine the features in such a way that the reduced data points of different classes can be separated as well as possible by a straight line, that is, linearly.
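The difference between the two approaches can be seen directly in code. In this minimal NumPy sketch, feature selection simply keeps one column, while feature projection computes a weighted sum of all columns. The three rows are illustrative measurements, and the weight vector `w` is a hypothetical placeholder; LDA would compute such weights from labeled data rather than fix them by hand.

```python
import numpy as np

# Columns: petal length, sepal length (illustrative values in cm).
X = np.array([
    [1.4, 5.1],
    [4.7, 7.0],
    [6.0, 6.3],
])

# Feature selection: keep the "petal length" column, drop the other one.
X_selected = X[:, [0]]

# Feature projection: combine both columns with weights (hypothetical values here).
w = np.array([[0.8], [-0.6]])
X_projected = X @ w

print(X_selected.ravel())   # original petal lengths, unchanged
print(X_projected.ravel())  # new values that are no longer measured lengths
```

Both results have one column, but only the selected feature keeps its original meaning and units.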

Figure 1: The difference between a feature selection algorithm and feature projection (LDA) is shown using the example of a dataset on irises, a plant genus within the iris family.

Figure 1 shows a dataset with data points from three different iris species, together with the results of dimensionality reduction through feature selection and through feature projection. Here, the data points are described by two of the Iris dataset’s four features, petal length and sepal length, and therefore lie in a two-dimensional space.

In feature selection, we choose the feature “petal length.” Information about sepal length is lost, and our data is described using only one feature, so it now lies on a one-dimensional line. The choice of feature matters: in the Iris data, the three species overlap far less in petal length than in sepal length, so selecting “sepal length” instead would separate the classes noticeably worse.

In the case of feature projection (LDA), the data also lies on a one-dimensional line. Unlike in feature selection, however, information from both features is incorporated into the reduction, and the individual classes are clearly better separated than with feature selection. The price is interpretability: the values of the reduced feature differ significantly from the original values, because each one is a weighted combination of petal length and sepal length rather than a measured length.
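One way to make “better separated” measurable is the ratio of between-class to within-class variance along a one-dimensional axis (Fisher’s ratio). The NumPy sketch below computes it for each single feature (feature selection) and for the first LDA axis (feature projection). It uses synthetic clusters with illustrative means rather than the real Iris data, and the helper names `fisher_ratio` and `lda_direction` are hypothetical. Because the first LDA axis is the direction that maximizes exactly this ratio, its score cannot fall below that of any single feature.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
# Synthetic 2-D data for three classes. Columns: petal length, sepal length.
# The means are illustrative values, not estimates from the real Iris data.
means = [(1.5, 5.0), (4.3, 5.9), (5.5, 6.6)]
cov = [[0.20, 0.15], [0.15, 0.30]]
X = np.vstack([rng.multivariate_normal(m, cov, size=50) for m in means])
y = np.repeat([0, 1, 2], 50)

def fisher_ratio(z, y):
    """Between-class variance divided by within-class variance of a 1-D projection z."""
    overall = z.mean()
    between = sum((y == c).sum() * (z[y == c].mean() - overall) ** 2 for c in np.unique(y))
    within = sum(((z[y == c] - z[y == c].mean()) ** 2).sum() for c in np.unique(y))
    return between / within

def lda_direction(X, y):
    """Leading eigenvector of S_W^{-1} S_B, i.e. the first LDA axis."""
    overall = X.mean(axis=0)
    S_W = np.zeros((X.shape[1], X.shape[1]))
    S_B = np.zeros_like(S_W)
    for c in np.unique(y):
        X_c = X[y == c]
        d = X_c - X_c.mean(axis=0)
        S_W += d.T @ d
        m = (X_c.mean(axis=0) - overall)[:, None]
        S_B += len(X_c) * (m @ m.T)
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    return eigvecs[:, np.argmax(eigvals.real)].real

single_scores = [fisher_ratio(X[:, j], y) for j in range(X.shape[1])]
lda_score = fisher_ratio(X @ lda_direction(X, y), y)
print("petal length only:", round(single_scores[0], 2))
print("sepal length only:", round(single_scores[1], 2))
print("LDA projection:   ", round(lda_score, 2))
```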

Linear Discriminant Analysis (LDA)

LDA is a feature projection algorithm for dimensionality reduction that works with classifiable data. In other words, it projects the original features of a dataset onto a smaller number of new features and uses the class labels to do so. Its goal is to choose new features that enable the best possible separation between the different classes in the data. With C classes, LDA can produce at most C − 1 such features; for the three iris species, that is at most two.

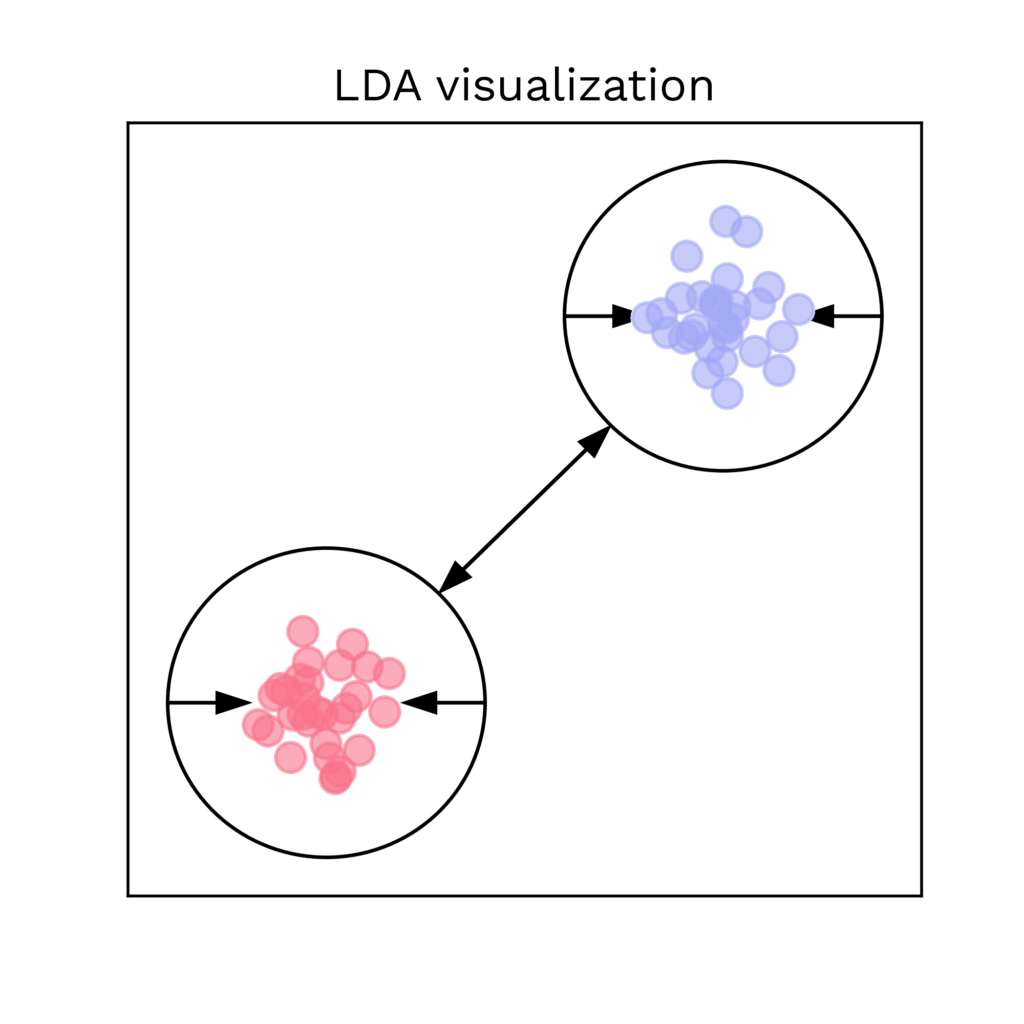

LDA achieves this by considering the variances within the dataset. It aims to maximize the variance between classes (inter-class variance) while minimizing the variance within each class (intra-class variance); in practice, it maximizes the ratio of the two. In other words, it seeks a view of the data in which data points from different classes are as far apart as possible, while data points from the same class are as close together as possible.

Figure 2: Illustration of how an LDA algorithm works.
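A common way to formalize this trade-off is Fisher’s criterion, written here in standard textbook notation rather than notation taken from Figure 2. With μ_c the mean and n_c the number of points of class c, D_c the set of points in class c and μ the overall mean, the within-class scatter S_W, the between-class scatter S_B and the quantity J(w) that LDA maximizes for a projection vector w are:

```latex
S_W = \sum_{c=1}^{C} \sum_{x_i \in D_c} (x_i - \mu_c)(x_i - \mu_c)^\top,
\qquad
S_B = \sum_{c=1}^{C} n_c \, (\mu_c - \mu)(\mu_c - \mu)^\top

J(w) = \frac{w^\top S_B \, w}{w^\top S_W \, w},
\qquad
\max_w J(w) \;\Longrightarrow\; S_B \, w = \lambda \, S_W \, w
```

Assuming S_W is invertible, the best projection directions are the eigenvectors of S_W^{-1} S_B with the largest eigenvalues λ. Because the weighted deviations n_c (μ_c − μ) add up to zero, S_B has rank at most C − 1, which is why LDA yields at most C − 1 new features.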

By combining the maximization of inter-class variance and the minimization of intra-class variance, the new variables are chosen to allow the best possible separation between the classes in the dataset (see Figure 2).
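The following NumPy sketch turns this description into code: it builds both scatter matrices, solves the eigenvalue problem and keeps the leading eigenvectors as the new features. It is a minimal illustration on synthetic data, assuming the within-class scatter matrix is invertible; it is not the implementation behind the figures, and the names `lda_fit` and `lda_transform` are our own. Libraries such as scikit-learn’s `LinearDiscriminantAnalysis` offer more robust solvers and regularization options.

```python
import numpy as np

def lda_fit(X, y, n_components=None):
    """Return the projection matrix W (n_features x k) and all eigenvalues, sorted descending."""
    classes = np.unique(y)
    n_features = X.shape[1]
    overall_mean = X.mean(axis=0)
    S_W = np.zeros((n_features, n_features))  # within-class scatter
    S_B = np.zeros((n_features, n_features))  # between-class scatter
    for c in classes:
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        S_W += (X_c - mean_c).T @ (X_c - mean_c)
        diff = (mean_c - overall_mean)[:, None]
        S_B += len(X_c) * (diff @ diff.T)
    # Eigenvectors of S_W^{-1} S_B satisfy S_B w = lambda * S_W w.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    eigvals, eigvecs = eigvals.real, eigvecs.real  # discard round-off imaginary parts
    order = np.argsort(eigvals)[::-1]
    max_k = min(len(classes) - 1, n_features)  # S_B has rank at most C - 1
    k = max_k if n_components is None else min(n_components, max_k)
    return eigvecs[:, order[:k]], eigvals[order]

def lda_transform(X, W):
    """Project the data onto the LDA axes."""
    return X @ W

# Synthetic example: 3 classes, 4 features, 50 points per class.
rng = np.random.default_rng(seed=0)
class_means = np.array([[0, 0, 0, 0], [3, 1, 0, 0], [0, 3, 1, 0]], dtype=float)
X = np.vstack([rng.normal(m, 1.0, size=(50, 4)) for m in class_means])
y = np.repeat([0, 1, 2], 50)

W, eigvals = lda_fit(X, y)
Z = lda_transform(X, W)
print("reduced shape:", Z.shape)
print("eigenvalues:", np.round(eigvals, 4))
```

For three classes, the printed eigenvalues show two clearly positive values followed by numerically zero ones, matching the limit of C − 1 new features.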

Limits and restrictions

While dimensionality reduction is a useful method for analyzing and visualizing complex data, the following limits and restrictions must be considered:

- Reducing data almost always involves some loss of information, which can lead to incomplete or inaccurate analyses. Reduction is most effective when the original dataset can naturally be described by fewer features or dimensions.

- The accuracy of Linear Discriminant Analysis also depends on factors such as the number of data points and the presence of outliers in the dataset. Too few data points can lead to overfitting, meaning the reduced features are too closely tailored to the sample and do not generalize well to new data. Outliers, in turn, can distort the results considerably, so it is crucial to identify and remove them before performing dimensionality reduction.

- Most importantly, LDA does not work well if the centers of different classes overlap or their data points are heavily mixed, because it separates the classes based on the differences between their means. In Figure 3, the original data is so mixed that dimensionality reduction using LDA does not allow for class separation. There are cases where dimensionality reduction is not advisable, as it could distort the properties of the data and lead to worse results for ML algorithms.

Figure 3: The original data is so heavily mixed here that dimensionality reduction with LDA does not separate the classes. The properties of the data are distorted, and the ML algorithm delivers poorer results.
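The dependence on class centers can be reproduced on synthetic data. In the NumPy sketch below, one pair of classes has different means, while the other pair shares the same center and differs only in its spread. Because LDA relies on differences between class means, the Fisher ratio along its best direction is large in the first case and close to zero in the second, even though the two concentric classes clearly follow different distributions. The data, parameters and the helper name `best_lda_ratio` are hypothetical.

```python
import numpy as np

def best_lda_ratio(X0, X1):
    """Fisher ratio along the two-class LDA direction w ~ S_W^{-1} (mean_1 - mean_0)."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    S_W = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(S_W, m1 - m0)
    z0, z1 = X0 @ w, X1 @ w
    z_all = np.concatenate([z0, z1])
    between = len(z0) * (z0.mean() - z_all.mean()) ** 2 + len(z1) * (z1.mean() - z_all.mean()) ** 2
    within = ((z0 - z0.mean()) ** 2).sum() + ((z1 - z1.mean()) ** 2).sum()
    return between / within

rng = np.random.default_rng(seed=7)
n = 500

# Case 1: class centers 4 units apart, same spread.
sep_a = rng.normal([0.0, 0.0], 1.0, size=(n, 2))
sep_b = rng.normal([4.0, 0.0], 1.0, size=(n, 2))

# Case 2: same center, different spread (a tight cluster inside a wide cloud).
mix_a = rng.normal([0.0, 0.0], 0.5, size=(n, 2))
mix_b = rng.normal([0.0, 0.0], 3.0, size=(n, 2))

print("separated centers:", round(best_lda_ratio(sep_a, sep_b), 3))
print("shared center:    ", round(best_lda_ratio(mix_a, mix_b), 3))
```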

Dimensionality reduction as preprocessing for ML algorithms

In conclusion, there are various dimensionality reduction techniques for different problems and objectives. Feature selection and feature projection algorithms can be applied to non-classifiable or classifiable data and reduce the data load for subsequent ML algorithms. LDA, in particular, uses the class labels of classifiable data to find a projection in which the classes can be separated linearly as well as possible. However, the limitations and constraints of LDA must always be considered, as not every technique is suitable for every dataset.
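As a closing illustration of LDA as a preprocessing step, the NumPy sketch below fits the projection on a training split only, applies it to held-out data, and then classifies the reduced points with a simple nearest-class-mean rule. The synthetic data, the 70/30 split and the choice of downstream classifier are assumptions made for this example; in practice, the reduced features would be passed to whichever ML algorithm is in use.

```python
import numpy as np

def lda_fit(X, y):
    """Projection onto the C - 1 leading eigenvectors of S_W^{-1} S_B."""
    classes = np.unique(y)
    overall = X.mean(axis=0)
    S_W = np.zeros((X.shape[1], X.shape[1]))
    S_B = np.zeros_like(S_W)
    for c in classes:
        X_c = X[y == c]
        d = X_c - X_c.mean(axis=0)
        S_W += d.T @ d
        m = (X_c.mean(axis=0) - overall)[:, None]
        S_B += len(X_c) * (m @ m.T)
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_W, S_B))
    order = np.argsort(eigvals.real)[::-1]
    return eigvecs[:, order[: len(classes) - 1]].real

rng = np.random.default_rng(seed=3)
n_per_class, n_features = 100, 10
means = np.zeros((3, n_features))
means[1, 0], means[2, 1] = 6.0, 6.0  # classes differ in only two of ten features
X = np.vstack([rng.normal(m, 1.0, size=(n_per_class, n_features)) for m in means])
y = np.repeat([0, 1, 2], n_per_class)

# Shuffle and split 70/30 so the projection is learned without the test data.
idx = rng.permutation(len(y))
train, test = idx[:210], idx[210:]
W = lda_fit(X[train], y[train])
Z_train, Z_test = X[train] @ W, X[test] @ W

# Simple downstream model: assign each test point to the nearest class mean in LDA space.
classes = np.unique(y)
centroids = np.array([Z_train[y[train] == c].mean(axis=0) for c in classes])
dists = np.linalg.norm(Z_test[:, None, :] - centroids[None, :, :], axis=2)
y_pred = classes[dists.argmin(axis=1)]
accuracy = (y_pred == y[test]).mean()
print(f"{n_features} features reduced to {W.shape[1]}; test accuracy: {accuracy:.2f}")
```

Fitting the projection on the training split alone mirrors how a preprocessing step should be used, so that the test data does not influence the learned features.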