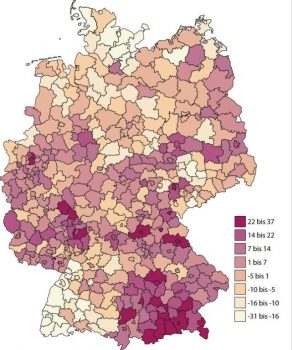

In criminal law, the severity of the sanctions imposed in a judgment depends on a variety of criteria, such as the gravity of the offense or the offender's criminal record. However, analyses by researchers at the Max Planck Institute for the Study of Crime, Security, and Law (MPI-CSL) revealed another pattern: the punishment imposed for the same offense can vary considerably between regions of Germany. For instance, judgments in Bavarian regions are, on average, about 37% harsher than the nationwide average.

Results of an MPI-CSL study (in German): for each court district, the map shows the percentage by which the severity of sanctions in judgments deviates from the federal average.

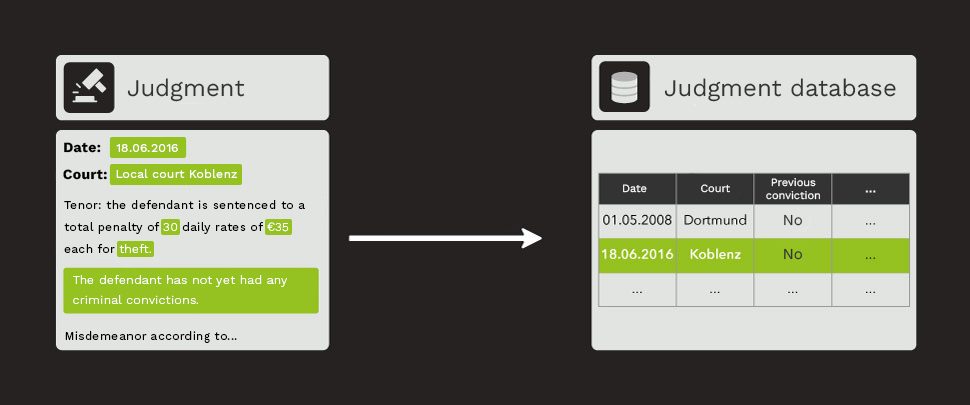

Investigating this further requires a more in-depth analysis of judgment texts, which presents several challenges. First, information about the course of events and the sentencing is not available in structured form; it has to be inferred, case by case, from the written reasons for the judgment. Second, around 800,000 judgments are rendered in Germany every year, far too many to process manually. This is where information extraction comes into play: it covers methods for automatically recognizing information in text, with the goal of converting unstructured information into structured information.

Document classification and information extraction: From text to table entry

Since 2018, Prof. Dr. Dr. Frauke Rostalski, Chair of Criminal Law, Criminal Procedure Law, Legal Philosophy, and Comparative Law, and her team at the University of Cologne have been exploring how information can be extracted from court judgments. Since 2019, we have been working with them within the framework of ML2R (now the Lamarr Institute) on applying and evaluating technologies that automate this process.

In automatic processing, we aim to extract two types of information, as depicted in Figure 2:

- Some information types, such as the type of conviction (acquittal, fine, custodial sentence) or whether the accused has a previous conviction (yes / no), can only take one of a fixed set of values. For these information types, the task is to decide automatically, for each document, which of these values applies. We refer to this as document classification.

- For other information types, such as the date of the judgment or the amount of the penalty, there is no such restriction; in principle, they can take any value. To extract this information, the passage that contains it must be identified in the text: each word is assigned a class indicating whether or not it is part of the information sought.
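The two task types above can be sketched as follows: a document-level label drawn from a fixed set, and word-level labels that are decoded into text spans. The sketch assumes the common BIO tagging convention ("B-" begins a span, "I-" continues it, "O" is outside); the text does not say which tag scheme the project used, and the type names `DATE` and `PENALTY` as well as the example sentence are invented for illustration.

```python
from enum import Enum


class Conviction(Enum):
    """Document-level information type: exactly one value from a fixed set."""
    ACQUITTAL = "acquittal"
    FINE = "fine"
    CUSTODIAL_SENTENCE = "custodial sentence"


def decode_bio(tokens: list[str], tags: list[str]) -> dict[str, list[str]]:
    """Group word-level tags into text spans, one list of spans per information type.

    Assumed BIO convention: "B-X" starts a span of type X, "I-X" continues it,
    and "O" marks a word that carries none of the sought information.
    """
    spans: dict[str, list[str]] = {}
    label, buffer = None, []
    # A trailing "O" sentinel flushes a span that runs to the end of the text.
    for token, tag in zip(tokens + [""], tags + ["O"]):
        if tag.startswith("I-") and tag[2:] == label:
            buffer.append(token)  # continue the currently open span
            continue
        if label is not None:  # close the open span before starting anything new
            spans.setdefault(label, []).append(" ".join(buffer))
        if tag == "O":
            label, buffer = None, []
        else:  # "B-X", or an "I-X" that does not continue the open span
            label, buffer = tag[2:], [token]
    return spans


# Invented example sentence ("Sentenced on 12 March 2019 to 90 daily rates").
tokens = ["Verurteilt", "am", "12.", "März", "2019", "zu", "90", "Tagessätzen"]
tags = ["O", "O", "B-DATE", "I-DATE", "I-DATE", "O", "B-PENALTY", "I-PENALTY"]
record = {"conviction": Conviction.FINE, **decode_bio(tokens, tags)}
print(record)
```

The resulting dictionary is one "table entry": the classified value plus the extracted spans, keyed by information type.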

Extraction of structured information from judgment texts.

There may also be dependencies between these types of information. For example, a judgment that imposes a prison sentence does not contain any information on the amount of a fine. It is also known in this application domain that offenders with a criminal record are more likely to receive a prison sentence than offenders without one. This background knowledge should also be taken into account. Machine Learning methods that integrate such knowledge are referred to as Informed Machine Learning.
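As a minimal sketch, hard dependencies of this kind can be written as predicates over an extracted record and used to flag inconsistent outputs. The `JudgmentRecord` fields and the `RULES` table are hypothetical names chosen for illustration, not the project's data model. The two rules are the ones named in this article (no fine amount alongside a custodial sentence, probation only with a custodial sentence).

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class JudgmentRecord:
    # Hypothetical fields for one extracted judgment.
    conviction: str              # "acquittal", "fine" or "custodial sentence"
    fine_amount: Optional[str]   # extracted text span, or None if absent
    probation: bool
    previous_conviction: bool


# Each rule returns True when the record satisfies it.
RULES: dict[str, Callable[[JudgmentRecord], bool]] = {
    "no fine amount when a custodial sentence is imposed":
        lambda r: not (r.conviction == "custodial sentence" and r.fine_amount is not None),
    "probation only together with a custodial sentence":
        lambda r: not r.probation or r.conviction == "custodial sentence",
}


def violated_rules(record: JudgmentRecord) -> list[str]:
    """Return the names of all background-knowledge rules the record breaks."""
    return [name for name, holds in RULES.items() if not holds(record)]


print(violated_rules(JudgmentRecord("custodial sentence", "90 Tagessätze", False, True)))
```

Note that the statistical tendency mentioned above (offenders with a record more often receive prison sentences) cannot be expressed as such a crisp pass/fail check; it calls for weighted, soft rules instead.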

In the following, we will describe how we use classification methods to automatically identify information in judgment texts and how we ensure that existing background knowledge is considered.

Finding the right content with Machine Learning

To extract all information from a document, two kinds of decisions need to be made: at the document level (for example, whether the judgment mentions a criminal record) and at the word level (for example, whether a word is part of one of the sought-after pieces of information, such as the amount of the penalty). Various supervised Machine Learning methods can be used to automate these classification decisions. These methods require training data: annotated judgment texts that indicate which classes were assigned to the documents and words. From these examples, the model learns to reproduce the annotated decisions as accurately as possible and to generalize to unseen documents.
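The supervised loop (fit on annotated examples, predict on unseen documents) can be made concrete with a deliberately simple stand-in. The sketch below uses multinomial Naive Bayes with add-one smoothing for the document-level question "previous conviction: yes / no"; this is not the model family the project uses (the next section describes pre-trained neural language models), and the four training sentences are invented.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes with add-one smoothing (illustrative stand-in)."""

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)      # documents per class
        self.word_counts = defaultdict(Counter)  # word frequencies per class
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, doc: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n_docs / total_docs)  # log prior
            for word in tokenize(doc):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented annotated examples: does the text report a previous conviction?
train = [
    ("der angeklagte ist bereits mehrfach vorbestraft", "yes"),
    ("er ist wegen diebstahls vorbestraft", "yes"),
    ("der angeklagte ist nicht vorbestraft", "no"),
    ("er ist strafrechtlich bisher nicht in erscheinung getreten", "no"),
]
model = NaiveBayes().fit([d for d, _ in train], [y for _, y in train])
print(model.predict("die angeklagte ist bisher nicht vorbestraft"))
```

A bag-of-words model like this ignores word order, which is one reason the project turns to neural representations learned from large corpora.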

Benefiting from transfer learning and language models

To learn from annotated texts how to solve the underlying decision problems, we first need a vector representation of the text. To obtain it, each word in the document is mapped to a numerical vector, known as an embedding vector. Thanks to their complex, layered structure, many neural-network methods are very good at first learning such a representation for words or sentences and then learning to solve the classification problem on top of it. One disadvantage of these methods is that they require a large amount of training data, which is rarely available to us in practice.
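A minimal sketch of this pipeline, under strong simplifications: words are looked up in a hypothetical 3-dimensional embedding table, averaged into one document vector, and scored by a logistic-regression layer with made-up weights. Real pre-trained language models compute contextual vectors with hundreds of dimensions rather than using a static lookup.

```python
import math

# Hypothetical word vectors; a pre-trained model would compute these.
EMBEDDINGS = {
    "freiheitsstrafe": [0.9, 0.1, 0.0],  # custodial sentence
    "geldstrafe": [0.1, 0.9, 0.0],       # fine
    "bewährung": [0.8, 0.0, 0.3],        # probation
}
UNKNOWN = [0.0, 0.0, 0.0]  # vector for words outside the table


def embed(tokens: list[str]) -> list[list[float]]:
    """Map every word to its embedding vector."""
    return [EMBEDDINGS.get(t.lower(), UNKNOWN) for t in tokens]


def mean_pool(vectors: list[list[float]]) -> list[float]:
    """Average word vectors into one fixed-size vector for the whole text."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def custodial_probability(tokens, weights=(2.0, -2.0, 1.0), bias=-0.5) -> float:
    """Logistic-regression layer on the pooled vector (weights are made up, not learned)."""
    x = mean_pool(embed(tokens))
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


print(custodial_probability(["Freiheitsstrafe", "Bewährung"]))
```

In a trained system, both the vectors and the classifier weights are learned; only the weights of the final layer would typically be fitted on the small annotated set.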

This is why we use transfer learning: we first pre-train the model on a problem for which plenty of training data is available and then fine-tune it on the target problem. Specifically, we use a model that has been pre-trained on a very large German-language text corpus to obtain the text representation. The algorithm learns in a self-supervised way: the training data is generated directly from unlabeled texts by masking out individual passages, which the model then has to reconstruct.
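The self-supervised objective can be sketched as follows: unlabeled text is turned into training pairs by hiding tokens that the model must predict. Assumptions: the 15% masking rate and the `[MASK]` symbol follow the BERT convention, which the text does not confirm for the model used here, and BERT's extra step of sometimes substituting a random or unchanged token is omitted.

```python
import random

MASK = "[MASK]"


def make_masked_example(tokens: list[str], mask_prob: float = 0.15, seed: int = 0):
    """Turn an unlabeled token sequence into a (masked input, targets) training pair.

    `targets` maps each masked position to the original token the model must reconstruct.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    masked, targets = [], {}
    for i, token in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = token
        else:
            masked.append(token)
    return masked, targets


sentence = "das gericht verhängte eine geldstrafe von neunzig tagessätzen".split()
masked, targets = make_masked_example(sentence, mask_prob=0.3, seed=1)
print(masked, targets)
```

No human annotation is involved: the labels (`targets`) come from the text itself, which is why such pre-training can use very large corpora.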

Data alone is not enough: Achieving the optimal outcome with logic

The methods described above can be used to learn a model that identifies every piece of information in a judgment text. However, the individual classification decisions are made independently of one another. To find a global solution for each judgment document, that is, a set of mutually consistent decisions, we use a method from the field of statistical relational learning: probabilistic soft logic (PSL). PSL makes it possible to describe a probabilistic graphical model using logical rules. We use it to integrate background knowledge about dependencies into the model in an understandable way. For example, we ensure that no fine is expected in a judgment that imposes a custodial sentence, and that probation is only expected in connection with a custodial sentence. In addition, the uncertainty of each individual class prediction is taken into account, so that the resulting global solution is as consistent as possible.
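The idea behind PSL's MAP inference can be illustrated on a single hypothetical document. Under Lukasiewicz logic, each rule's "distance to satisfaction" is a hinge function of truth values in [0, 1], and inference minimizes a weighted sum of these (here squared) hinge penalties. This is a hand-written toy, not the API of the PSL software: the classifier scores and the rule weight are made-up values, and plain projected gradient descent stands in for the dedicated solvers PSL provides.

```python
# Independent classifier confidences for one document (illustrative values).
SCORES = {"custodial": 0.8, "fine": 0.6, "probation": 0.7}
RULE_WEIGHT = 5.0  # weight of the background-knowledge rules (assumed)


def hinge(x: float) -> float:
    return max(0.0, x)


def energy(y: dict[str, float]) -> float:
    """Weighted squared hinge-loss energy; lower means more consistent."""
    # Evidence: keep each truth value close to its classifier score.
    e = sum((y[k] - SCORES[k]) ** 2 for k in SCORES)
    # Custodial & Fine -> false: distance to satisfaction is max(0, c + f - 1).
    e += RULE_WEIGHT * hinge(y["custodial"] + y["fine"] - 1.0) ** 2
    # Probation -> Custodial: distance to satisfaction is max(0, p - c).
    e += RULE_WEIGHT * hinge(y["probation"] - y["custodial"]) ** 2
    return e


def gradient(y: dict[str, float]) -> dict[str, float]:
    """Partial derivatives of energy() with respect to each truth value."""
    g = {k: 2.0 * (y[k] - SCORES[k]) for k in SCORES}
    s = hinge(y["custodial"] + y["fine"] - 1.0)
    g["custodial"] += 2 * RULE_WEIGHT * s
    g["fine"] += 2 * RULE_WEIGHT * s
    t = hinge(y["probation"] - y["custodial"])
    g["probation"] += 2 * RULE_WEIGHT * t
    g["custodial"] -= 2 * RULE_WEIGHT * t
    return g


def map_inference(lr: float = 0.02, steps: int = 2000) -> dict[str, float]:
    y = dict(SCORES)  # start from the independent predictions
    for _ in range(steps):
        g = gradient(y)
        # Gradient step, then project back onto the interval [0, 1].
        y = {k: min(1.0, max(0.0, y[k] - lr * g[k])) for k in y}
    return y


soft = map_inference()
print({k: round(v, 3) for k, v in soft.items()})
print({k: v >= 0.5 for k, v in soft.items()})
```

Thresholding the raw scores at 0.5 would predict both a fine and a custodial sentence. After inference the values settle at roughly 0.64 (custodial), 0.40 (fine) and 0.65 (probation), so the thresholded result is the consistent combination "custodial sentence with probation, no fine". Because the rules are weighted rather than absolute, a sufficiently confident classifier could still override them, which is how prediction uncertainty enters the global solution.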

Beyond data-driven learning: Achieving the best outcome with background knowledge

As shown, combining these methods allows us to transform initially unstructured data into structured data. The project thus lays the foundation for a long-term collaboration with the domain experts at the University of Cologne and is an important first step towards a more transparent sentencing process in Germany. Because our methods do not rely exclusively on data, we can integrate existing expert knowledge directly into the Machine Learning process. Methods for information extraction are relevant not only in the legal domain but also in many others, such as processing or summarizing patient information or analyzing contracts in the financial sector.

For more information, refer to the accompanying paper:

Using Probabilistic Soft Logic to Improve Information Extraction in the Legal Domain. Birgit Kirsch, Sven Giesselbach, Timothée Schmude, Malte Völkening, Frauke Rostalski, Stefan Rüping: LWDA, 2020.