In the first part of our blog post series on Guided Reinforcement Learning (RL), we saw the potential of RL for the robotics domain and how it is emerging as a powerful tool for learning robot control. However, as a mostly data-driven approach, RL struggles to collect and use training data efficiently and to train policies that are effective in real-world robotics deployment. One potential way to overcome these challenges is to integrate additional knowledge, combining the strengths of data-driven and knowledge-driven approaches (read more in our blog post Informed Machine Learning – Learning from Data and Prior Knowledge). This blog post explores the idea behind Guided Reinforcement Learning and provides insights into the overall pipeline, the novel taxonomy, and the results of the evaluation study.

Pipeline – Integrating Knowledge into the Training

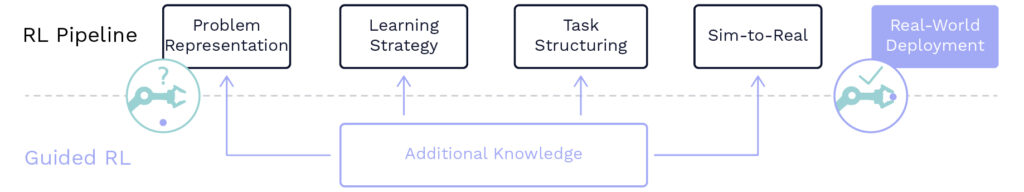

The knowledge itself can be integrated at various levels of the RL pipeline (compare Figure 1). For instance, it can be integrated at the core of the learning problem formulation, namely the representation of the agent’s observation or action spaces. Beyond this, additional knowledge can also be integrated into the learning strategy itself, or used to provide additional context and structure to the learning task. Lastly, if a simulation environment is used to generate synthetic training data, the trained policies are likely to face the sim-to-real gap. Here, additional knowledge can be integrated to reduce this gap so that the trained RL policies can be deployed in the real world.
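
To make the last pipeline level more concrete, here is a minimal sketch of domain randomization, one common way of using prior knowledge about plausible physical parameters to narrow the sim-to-real gap. The robot, the parameter names (`mass_kg`, `friction`, `motor_delay_s`), and the ranges are purely illustrative assumptions and are not taken from the study.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    """Physical parameters of a hypothetical simulated robot."""
    mass_kg: float
    friction: float
    motor_delay_s: float


# Assumed (low, high) ranges; in practice these would come from
# measurements or expert estimates of the real system.
PARAM_RANGES = {
    "mass_kg": (4.0, 6.0),
    "friction": (0.5, 1.2),
    "motor_delay_s": (0.0, 0.03),
}


def sample_sim_params(rng: random.Random) -> SimParams:
    """Draw one parameter set uniformly from the assumed ranges."""
    values = {name: rng.uniform(low, high)
              for name, (low, high) in PARAM_RANGES.items()}
    return SimParams(**values)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
for episode in range(3):
    params = sample_sim_params(rng)
    # A real training loop would reset the simulator with `params` here.
    print(episode, params)
```

Because every episode sees slightly different physics, the policy cannot overfit to one particular simulator configuration, which is the intuition behind using this technique for sim-to-real transfer.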

© Fraunhofer IML

Taxonomy – A Modular Toolbox for Reinforcement Learning

With the idea of Guided RL in mind, one could ask how to apply the integration of additional knowledge in practice when training intelligent behavior for a real-world robot. To this end, a novel taxonomy (see Figure 2) has been developed that provides a structured overview of which types of knowledge can be helpful (Knowledge Sources), which specific methods can be used (Guided RL Methods), and where these can be integrated into the learning pipeline (RL Pipeline). Three types of prior knowledge form the basis for most Guided RL methods, namely scientific knowledge (e.g., algebraic equations), world knowledge (e.g., facts from everyday life), and expert knowledge (e.g., hyperparameter tuning).

These knowledge sources can be integrated into the RL pipeline by means of different Guided RL methods, as sketched in the following. The formulation of the learning problem can benefit to a large extent from expert knowledge, e.g., through careful selection of the observation space, the formulation of a dense reward function, or the provision of abstracted action spaces for the RL agent. In the same vein, the algorithmic formulation of the learning strategy can benefit from expert intuition, e.g., by selecting an offline training set, designing parallelized learning schemes, or integrating demonstration data into the learning process. World knowledge, in turn, is typically closely tied to structuring the learning task, e.g., by learning increasingly complex tasks or by splitting one large task into several subtasks that are learned sequentially. Lastly, in order to bridge the sim-to-real gap, diverse knowledge sources can be useful for improving the system models, randomizing the environments, or designing adaptation models that increase the realism of the simulated training environments.

By providing a structured overview, this taxonomy can be seen as a modular toolbox that will hopefully help researchers select appropriate methods for their specific learning problems.
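
The "modular toolbox" idea can be sketched as a simple lookup table from pipeline level to candidate methods. The method lists are paraphrased from the description above; the dictionary keys, the labels, and the helper `methods_for` are our own shorthand for illustration and not official names from the taxonomy.

```python
# Pipeline level -> Guided RL methods, paraphrased from the text.
# Keys and labels are illustrative shorthand, not official taxonomy names.
GUIDED_RL_TOOLBOX = {
    "problem_formulation": [
        "observation space selection",
        "dense reward function",
        "abstracted action space",
    ],
    "learning_strategy": [
        "offline training set",
        "parallelized learning",
        "demonstration data",
    ],
    "task_structure": [
        "increasingly complex tasks",
        "sequential subtasks",
    ],
    "sim_to_real": [
        "improved system models",
        "environment randomization",
        "adaptation models",
    ],
}


def methods_for(levels):
    """Collect candidate methods for the given pipeline levels, in order."""
    selected = []
    for level in levels:
        if level not in GUIDED_RL_TOOLBOX:
            raise KeyError(f"unknown pipeline level: {level!r}")
        selected.extend(GUIDED_RL_TOOLBOX[level])
    return selected


# Example: which methods might help with task structure and sim-to-real?
print(methods_for(["task_structure", "sim_to_real"]))
```

A flat table like this is deliberately simple: it mirrors how the taxonomy lets you pick methods level by level, without implying anything about how well they combine.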

Evaluation – When to Select Which Methods

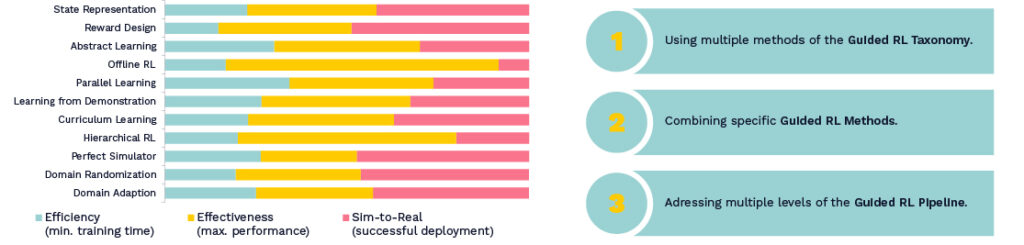

Motivated by the goal of simultaneously improving the efficiency, effectiveness, and sim-to-real transfer of the learning process, an evaluation study covering more than 100 recent research papers has been conducted to analyze the effect of the corresponding Guided RL methods quantitatively. Based on the introduced taxonomy, all presented Guided RL methods have been analyzed and normalized to show their relative contributions (see Figure 3) to reducing the training time (efficiency), improving the policy performance (effectiveness), and successfully transferring a policy to the real robot (sim-to-real). Overall, the results of this evaluation study can be summarized in three main insights. First, using multiple methods of the Guided RL taxonomy can help to simultaneously improve efficiency, effectiveness, and sim-to-real transfer. Second, not only the number of adopted methods matters: specific combinations also amplify each other’s effect. For instance, positive correlations can be seen between domain adaptation and domain randomization for sim-to-real transfer, and between parallel learning and domain randomization for data-driven scalability. Lastly, exploiting multiple levels of the Guided RL pipeline can yield more efficient and effective training for real-world robotics deployment.
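
The exact normalization used in the study is not described in this post. As a rough illustration only, one simple reading of "relative contribution" is each method's share of all counted occurrences. The counts below are made-up placeholders, not results from the evaluation, and the function name `relative_contributions` is hypothetical.

```python
def relative_contributions(counts):
    """Normalize raw counts so that the resulting shares sum to 1."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot normalize: all counts are zero")
    return {method: count / total for method, count in counts.items()}


# Placeholder numbers for illustration only (NOT data from the study).
toy_counts = {
    "domain randomization": 6,
    "parallel learning": 3,
    "dense reward function": 1,
}

shares = relative_contributions(toy_counts)
for method, share in shares.items():
    print(f"{method}: {share:.0%}")
```

Normalizing in this way makes methods comparable across criteria even when the underlying number of papers per criterion differs.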

Summary

To wrap up, Guided RL describes the idea of integrating additional knowledge into the training process as a path towards data-efficient and effective robot learning. The introduced taxonomy of Guided RL can be seen as a modular toolbox for integrating different sources of knowledge into the training pipeline. Lastly, we have seen that by adopting multiple Guided RL methods, combining specific methods, and exploiting multiple levels of the Guided RL pipeline, one can simultaneously improve the efficiency and effectiveness of training RL policies for deployment in the real world. In the next blog post, we are going to explore how Guided RL can be applied in practice by discussing the first real-world robotics challenge: learning dynamic locomotion for a two-wheeled robot! Stay tuned …

For more details on the methodological approach, a comprehensive state-of-the-art review, and future challenges and directions, see the related open-access journal publication in the IEEE Robotics and Automation Magazine (IEEE-RAM), available on IEEE Xplore: Guided Reinforcement Learning: A Review and Evaluation for Efficient and Effective Real-World Robotics.