Forgetting is a fundamental part of our daily lives. We forget appointments, forget the PIN of our bank card, or forget Kevin home alone when we go on vacation. These can all have catastrophic consequences. In the following, we briefly explain the purpose of this seemingly faulty function of our memory. We then consider forgetting in the context of Machine Learning and explain why it could become a new fundamental requirement for models in practice.

Why do people forget?

To answer this question, we need to clarify which processes in our brain are responsible for forgetting and what purpose they serve. Researchers in psychology, neurobiology, and psychopharmacology have been studying this for over 100 years. One of the most widely accepted explanations for the cause of forgetting is the interference theory. This describes the process of forgetting as the competition between existing memories and recently acquired information. Forming new memories can be hindered or even prevented by already existing associations (proactive interference). More relevant, however, is retroactive interference, where recalling older information is hindered by recently acquired information. For example, it is more difficult to recall necessary knowledge during an exam if you recently learned something about a different subject. The recently learned material retroactively interferes with the exam-relevant information.

Closely related to interference theory is the concept of consolidation. It describes how new memories or information need time to solidify after being acquired. The degree of consolidation largely determines how easily a memory can be recalled later. How well information is consolidated depends on various physical and mental factors, as well as on retroactive interference from other stimuli. For example, information is likely to be less well consolidated if it is acquired while drunk or tired.

As annoying as forgetting may be for most of us, it is not a malfunction of our memory but an essential core function. It helps us process the large number of stimuli we encounter every second, strip unnecessary details from specific information, and thus develop general concepts. Forgetting is therefore a crucial factor in how well we can adapt to new situations. From a Machine Learning perspective, this phenomenon is closely related to the generalization ability and overfitting of models.

Forgetting in Machine Learning

The concept of forgetting is still underexplored in the field of Machine Learning. Most publications address what is known as catastrophic forgetting or catastrophic interference. As the name suggests, forgetting is treated as a negative attribute here: a model loses knowledge about previously processed data when it is trained on new data. This phenomenon is particularly problematic for models that learn to solve several tasks one after another (continual learning). Intuitively, catastrophic forgetting can also be seen as a failed consolidation of knowledge due to retroactive interference.
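The effect can be reproduced in a deliberately tiny setting. The following is a minimal sketch, not taken from any of the publications mentioned: a one-parameter linear model is trained with plain SGD on a hypothetical task A and then on a conflicting task B. The tasks, the learning rate, and the function names are assumptions chosen purely for illustration.

```python
def sgd(w, data, lr=0.1, epochs=50):
    """Plain SGD on squared error for the one-parameter model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)**2 w.r.t. w
            w -= lr * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = (-1.0, -0.5, 0.5, 1.0)
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -2.0 * x) for x in xs]  # hypothetical task B: y = -2x

w = sgd(0.0, task_a)                  # learn task A first
loss_a_before = mse(w, task_a)        # close to zero

w = sgd(w, task_b)                    # then learn task B with the same parameter
loss_a_after = mse(w, task_a)         # much larger: task A has been overwritten

print(loss_a_before, loss_a_after)
```

Because both tasks share the single parameter `w`, training on task B overwrites what was learned for task A. Real networks have many more parameters, but the same sharing is what makes sequential training fragile.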

Few existing scientific publications address how forgetting can be intentionally used in the Machine Learning process to remove already learned information. But why would we want to intentionally forget something? Let’s consider a small example.

In 2018, the General Data Protection Regulation (GDPR) became applicable in the EU. Among other things, it formulates the right to be forgotten (Article 17, the right to erasure). Similar regulations exist in countries like the USA and Japan. Simply put, organizations that collect personal data must also delete it upon request. With a legal basis like the GDPR, forgetting information becomes a fundamental requirement for models in practice.

It has already been shown that attacks on trained Machine Learning models can infer training data, even after that data has been deleted from the underlying dataset. Simply deleting the collected data is therefore not enough to truly forget personal information. An obvious solution would be to retrain the model regularly, excluding the deleted data from the training process. This ensures that the model no longer contains any information about the deleted data. However, since this approach is very costly in practice, there is a need for a method that removes the data from the model without retraining it. In other words, we need a methodology in Machine Learning that allows us to intentionally forget specific information.

Strategies for intentional forgetting

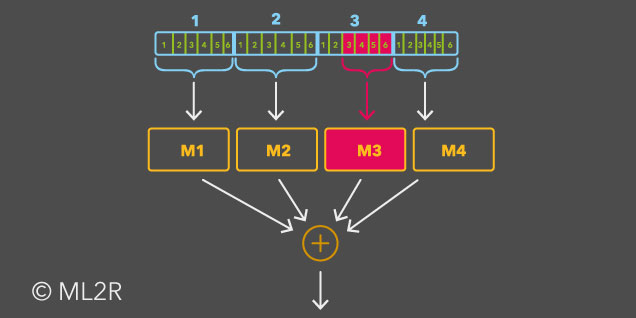

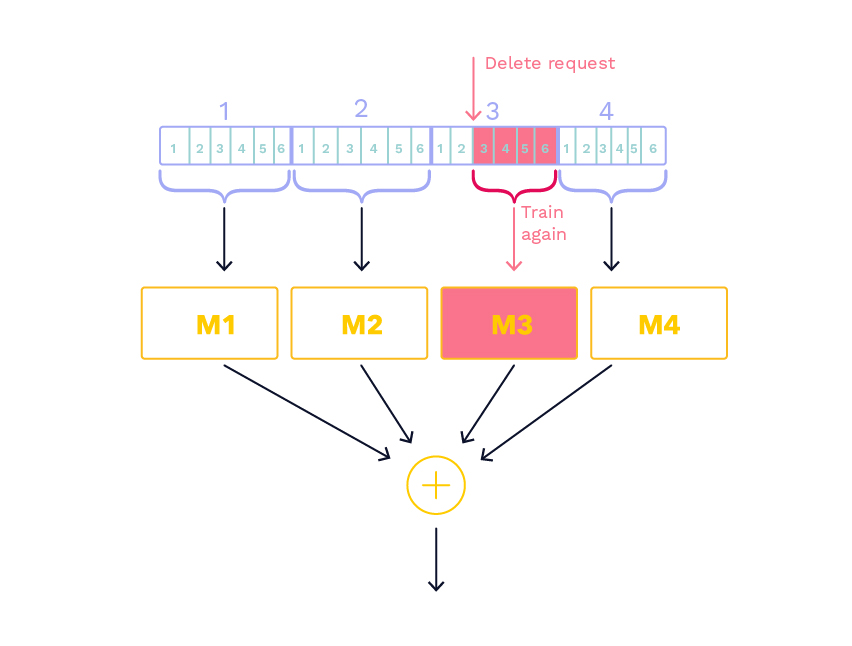

The first and probably most intuitive strategy for intentional forgetting is to drastically reduce the effort of retraining. A specific implementation of this strategy is SISA (Sharded, Isolated, Sliced, Aggregated) training. Here, the data is divided into approximately equal parts (sharded), and each part is used to train its own copy of the model. Each of these submodels is trained independently of the others (isolated). Each shard is further divided into smaller parts (sliced), which are added to the training process one after another, with the model state saved before each new slice is added. Finally, the results of the individual submodels are combined into a joint prediction (aggregated). When a deletion request is made, only the submodel that saw the corresponding data during training is retrained. Its training can resume from the saved state just before the slice containing the relevant data point was added.

Example illustration for SISA (Sharded, Isolated, Sliced, Aggregated) training. If there is a deletion request for a data point in the third shard and third slice (marked in red here), only M3 needs to be retrained. Training is resumed at the point where the third slice is added. Slices 1 and 2 do not need to be retrained.
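The procedure can be sketched in a few lines of Python. This is a minimal illustration under strong assumptions, not the reference implementation of SISA: `CentroidModel` is a hypothetical toy classifier standing in for any model that can be trained incrementally, the class and method names are made up for this example, and the aggregation is a simple majority vote.

```python
from collections import Counter


class CentroidModel:
    """Toy stand-in for any model that can be trained incrementally."""

    def __init__(self, sums=None, counts=None):
        self.sums = dict(sums or {})      # per-class sum of feature values
        self.counts = dict(counts or {})  # per-class number of points seen

    def copy(self):
        return CentroidModel(self.sums, self.counts)

    def fit_increment(self, points):
        for x, label in points:
            self.sums[label] = self.sums.get(label, 0.0) + x
            self.counts[label] = self.counts.get(label, 0) + 1

    def predict(self, x):
        # Return the class whose mean feature value is closest to x.
        return min(self.counts, key=lambda c: abs(x - self.sums[c] / self.counts[c]))


class SisaEnsemble:
    def __init__(self, data, n_shards, n_slices):
        # Sharded and sliced: slices[s][k] holds the points of slice k in shard s.
        shards = [data[s::n_shards] for s in range(n_shards)]
        self.slices = [[shard[k::n_slices] for k in range(n_slices)] for shard in shards]
        self.checkpoints = [[] for _ in range(n_shards)]
        self.models = [None] * n_shards
        self.slice_steps = 0  # number of slice-level training steps run so far
        for s in range(n_shards):
            self._train_shard(s, start_slice=0)  # isolated: one model per shard

    def _train_shard(self, s, start_slice):
        ckpts = self.checkpoints[s]
        # Resume from the state saved just before `start_slice` was added.
        model = ckpts[start_slice].copy() if start_slice < len(ckpts) else CentroidModel()
        del ckpts[start_slice:]
        for k in range(start_slice, len(self.slices[s])):
            ckpts.append(model.copy())  # checkpoint before adding slice k
            model.fit_increment(self.slices[s][k])
            self.slice_steps += 1
        self.models[s] = model

    def forget(self, point):
        """Delete a point and retrain only the affected shard from its slice."""
        for s, shard in enumerate(self.slices):
            for k, points in enumerate(shard):
                if point in points:
                    points.remove(point)
                    self._train_shard(s, start_slice=k)
                    return s, k
        raise ValueError("point not found in any shard")

    def predict(self, x):
        # Aggregated: majority vote over the submodels.
        votes = Counter(model.predict(x) for model in self.models)
        return votes.most_common(1)[0][0]


data = [(float(i), "low") for i in range(9)] + [(float(i), "high") for i in range(20, 29)]
ensemble = SisaEnsemble(data, n_shards=3, n_slices=3)
location = ensemble.forget((28.0, "high"))  # zero-based (shard index, slice index)
```

In this toy run the deleted point lies in the third shard and third slice, so only one slice-level training step is repeated instead of all nine. The round-robin split used for sharding and slicing is an arbitrary choice for the example.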

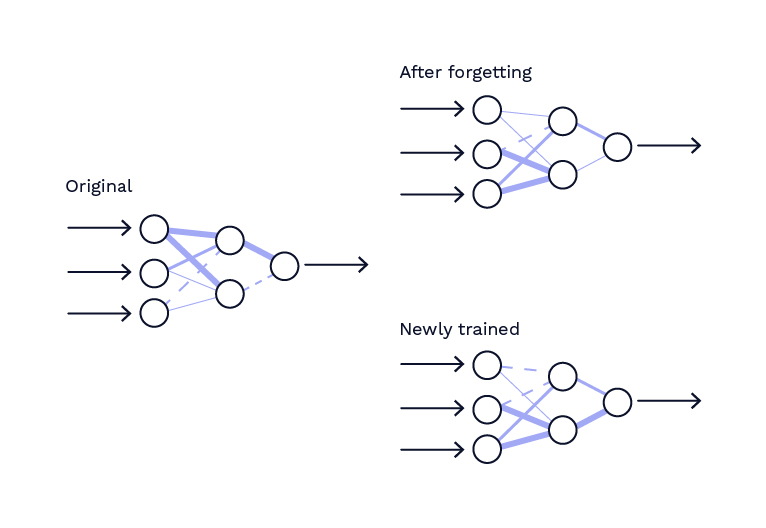

The second strategy is based on the concept of differential privacy. After forgetting, the model should be indistinguishable from a model that has never seen the data to be forgotten. At the same time, the model should not lose any information about the remaining data.
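One way to make "indistinguishable" precise follows the pattern of differential privacy. The notation below is introduced here for illustration and is an assumption, not something fixed by this article: $A$ is the (randomized) training algorithm, $D$ the training set, $x \in D$ the data point to be forgotten, $M$ the forgetting mechanism, and $T$ any set of possible models.

```latex
e^{-\epsilon}
\;\le\;
\frac{P\big(M(A(D), D, x) \in T\big)}{P\big(A(D \setminus \{x\}) \in T\big)}
\;\le\;
e^{\epsilon}
```

The smaller $\epsilon$ is, the harder it is to tell whether a model was obtained by forgetting $x$ or by never training on $x$ in the first place.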

The goal of forgetting is a model that differs little or not at all from a model that was trained without the sensitive data. The similarity of the models is measured by their parameters, represented here by the strength of the edges.

How the model parameters need to be adjusted to meet both conditions is determined using the remaining data. This strategy avoids retraining altogether by adjusting the parameters in a single update. However, such an update usually does not achieve a perfect result: there remains a residual risk that the information to be forgotten can still be recovered from the model. Two well-known approaches that follow this strategy are Certified Data Removal and Fisher Forgetting.
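To give a feeling for such a one-time parameter adjustment, here is a minimal sketch in the spirit of Newton-step removal. It is not the published Certified Data Removal or Fisher Forgetting algorithm. It assumes a one-dimensional ridge regression with loss ½ Σ (w·x − y)² + ½ λ w², and the function names, the data, and the value of `lam` are hypothetical. Because this loss is quadratic, a single Newton step on the remaining data reproduces the retrained model exactly. For non-quadratic losses the same step is only an approximation, which is where the residual risk comes from.

```python
def fit_ridge(data, lam):
    """Closed-form minimizer of 0.5 * sum((w*x - y)**2) + 0.5 * lam * w**2."""
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + lam)


def newton_forget(w, remaining, removed, lam):
    """Adjust w once so that it fits only the remaining data."""
    x_n, y_n = removed
    # At the trained optimum the full gradient is zero, so the gradient of the
    # loss on the remaining data is minus the removed point's contribution.
    grad_remaining = -(w * x_n - y_n) * x_n
    # Second derivative (Hessian) of the loss on the remaining data.
    hess_remaining = sum(x * x for x, _ in remaining) + lam
    return w - grad_remaining / hess_remaining


lam = 0.1  # hypothetical regularization strength
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 20.0)]
removed = data[-1]
remaining = data[:-1]

w_full = fit_ridge(data, lam)                # model that has seen all points
w_forgot = newton_forget(w_full, remaining, removed, lam)
w_retrained = fit_ridge(remaining, lam)      # baseline: retrain from scratch

print(w_full, w_forgot, w_retrained)
```

Note that the update needs only the removed point and the remaining data, matching the description above. No training loop is run again.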

Conclusion and Outlook

We have seen that forgetting is an important fundamental function that allows living beings to abstract from their experiences and apply them better to new situations. In Machine Learning, targeted forgetting is currently considered almost exclusively from the viewpoint of data protection and privacy, not least due to legal frameworks like the GDPR. As an alternative to full retraining, we have explored two basic strategies for forgetting that reduce or even eliminate the retraining effort. However, if we reconnect Machine Learning with neuroscience, it quickly becomes clear that forgetting could play a much more significant role. In the future, biologically inspired forgetting methods could be developed that allow us to apply models even better in highly dynamic scenarios. In reinforcement learning and robotics, solutions could be found for problems in which the set of executable actions is suddenly restricted. Lastly, forgetting could also play an important role in the further development of more general learning models, such as GPT-3.