Is Artificial Intelligence (AI) a “post-theoretical science”?

In a highly regarded essay, Laura Spinney asks whether we are approaching a “post-theoretical science.” Facebook’s models predict user preferences better than many psychologically grounded market research projects, and AlphaFold, a neural network developed by DeepMind, accurately predicts the three-dimensional structure of proteins from their amino acid sequences. In May 2020, OpenAI introduced the language model GPT-3, which at 175 billion parameters was the largest neural network created up to that point. In 2022, a “generalist AI” called Gato was introduced, which can not only hold conversations with humans but also play computer games and control a robotic arm to stack building blocks as instructed. However, none of these models reveals how it arrives at its results. Spinney notes that so much data has now accumulated, and computers are already so much better than humans at recognizing complex correlations, that our theories in the form of formulas and equations would oversimplify reality.

Against this backdrop, a new research area has emerged in AI that systematically addresses this opacity (for more on this topic, refer to our posts in German on AI fairness and explainable ML). One specific approach to making fully automatically generated texts more transparent follows the familiar practice of scientific discourse: claims are substantiated by citing the works of other scholars. Combining language models with search engines allows an AI system to substantiate its generated texts in the same way.

Do language models “understand” what they have learned?

In the summer of 2021, Donald Metzler made the following assessment:

“Pre-trained language models, by contrast, are capable of directly generating prose that may be responsive to an information need, but […] they do not have a true understanding of the world, […] and crucially they are incapable of justifying their utterances by referring to supporting documents in the corpus they were trained over.”

A significant drawback is that AI models provide no justifications or explanations for the texts they produce. The texts arise from the interaction of often billions of parameters, which capture correlations between numerically encoded linguistic terms. No substantive interpretation is created in this process. Generating texts that are not only meaningful but also factually accurate requires more than the language model itself. Experts are therefore working on combining language models with search engines.

Language models can find and evaluate relevant texts

DeepMind introduced the Retro model, which repeatedly searches a database of 5,000 billion words as generation proceeds, based on the given starting text and the output generated so far. This is approximately as much text as the entire Library of Congress in the United States contains.

Retro operates differently from most internet search engines. It does not perform keyword searches but rather searches by comparing numerical vectors that encode the meaning of a list of words. This allows the model to find suitable texts with different formulations, achieving more precise search results.
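The idea of searching by comparing vectors rather than keywords can be illustrated with a minimal sketch. The three-dimensional vectors below are hand-assigned, hypothetical values chosen for illustration; a real system would obtain them from a trained encoder, and nothing here reflects Retro's actual implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean 'similar'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical "meaning" vectors, assigned by hand for this example only.
PASSAGES = {
    "A container ship ran aground and blocked the canal.": [0.9, 0.1, 0.0],
    "The recipe calls for two cups of flour.": [0.0, 0.1, 0.9],
    "A stranded freighter obstructed the waterway for days.": [0.8, 0.2, 0.1],
}


def nearest_passages(query_vector, passages, k=2):
    """Return the k passages whose vectors are most similar to the query vector."""
    ranked = sorted(
        passages.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Hypothetical vector for the query "Why was the Suez Canal blocked?"
query_vector = [0.85, 0.15, 0.05]
top = nearest_passages(query_vector, PASSAGES)
print(top)
```

The second canal passage shares almost no words with the first, yet both are retrieved because their vectors point in a similar direction. A keyword search for "container ship" would have missed the "stranded freighter" formulation.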

The model analyzes these search results together with the text generated so far. Retro comprises only 7 billion parameters, making it much more cost-effective to train than GPT-3. Nevertheless, Retro achieves top performance in most benchmarks.

Retro has two crucial advantages. First, it can draw on a much larger pool of information at the text generation stage without significantly increasing computational costs. This makes it possible to retrieve information selectively during text generation and use it to reduce errors and inaccuracies. Second, the information base can be kept up to date, as with a web search engine: new and current information can be added continuously without retraining the model.
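The second advantage, updating the information base without retraining, can be shown with a toy sketch. Everything in it is a hypothetical stand-in: `DocumentStore` plays the role of the external database, `frozen_model` is a placeholder for a trained language model, and simple word overlap replaces the vector comparison only to keep the example short.

```python
class DocumentStore:
    """External text database that lives outside the model's parameters."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        # Adding a document changes what can be retrieved, not the model.
        self.documents.append(text)

    def retrieve(self, query):
        """Return the document sharing the most words with the query, or None."""
        query_words = set(query.lower().split())

        def overlap(doc):
            return len(query_words & set(doc.lower().split()))

        best = max(self.documents, key=overlap, default=None)
        if best is None or overlap(best) == 0:
            return None
        return best


def frozen_model(prompt, evidence):
    """Placeholder for a language model whose weights never change in this sketch."""
    if evidence is None:
        return "No supporting passage found."
    return f"According to the database: {evidence}"


store = DocumentStore()
store.add("The Library of Congress is located in Washington.")

question = "canal blocked"
before = frozen_model(question, store.retrieve(question))

# New information arrives; only the database is updated.
store.add("The canal was blocked by a grounded container ship.")
after = frozen_model(question, store.retrieve(question))

print(before)
print(after)
```

The function `frozen_model` is identical in both calls. Only the contents of the store differ, which is why the second answer can draw on information that did not exist when the model was "trained."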

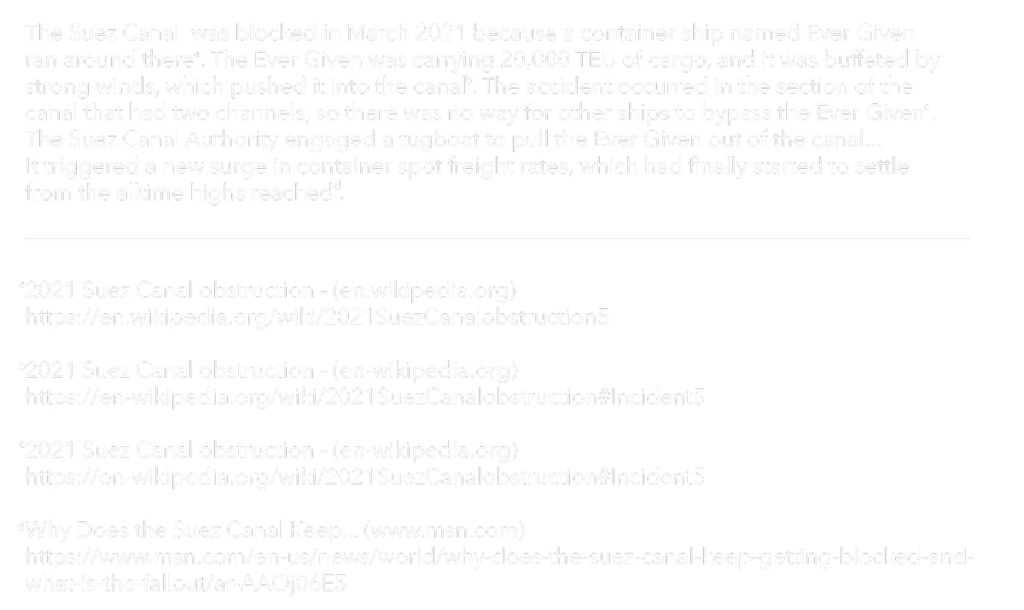

Figure 1: For the question “Why was the Suez Canal blocked in March 2021?”, the WebGPT model performs a series of queries to the Bing search engine. From the question and the answers returned, WebGPT generates an answer that evaluates the text passages found. In addition, references to the documents are inserted, which serve as evidence for the statements and explain them.

Citations supporting facts

OpenAI’s WebGPT pursues a similar approach. The system aims to mimic the way a person searches the web to answer a question. The developers start with the pre-trained GPT-3 model and fine-tune it to issue commands to the Bing search engine, for example to run a web search for a given query. The model’s input includes the question, the information compiled so far, the web page currently being viewed, and some additional details.
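To make the composition of this input more concrete, here is a minimal sketch of how such a state could be represented and turned into text for a language model. The class name `SearchState`, its fields, and the prompt layout are assumptions made for illustration and are not taken from WebGPT itself.

```python
from dataclasses import dataclass, field


@dataclass
class SearchState:
    """Hypothetical container for what the model sees at each search step."""

    question: str
    collected_quotes: list = field(default_factory=list)
    current_page: str = ""

    def to_prompt(self):
        """Render the state as plain text, numbering the quotes collected so far."""
        quotes = "\n".join(
            f"[{i}] {quote}"
            for i, quote in enumerate(self.collected_quotes, start=1)
        )
        return (
            f"Question: {self.question}\n"
            f"Quotes so far:\n{quotes or '(none)'}\n"
            f"Current page:\n{self.current_page or '(none)'}"
        )


state = SearchState(question="Why was the Suez Canal blocked in March 2021?")
state.collected_quotes.append("A container ship ran aground in the canal.")
state.current_page = "Search results for: Suez Canal blockage 2021"

prompt = state.to_prompt()
print(prompt)
```

Numbering the quotes as they are collected is one simple way to let the final answer point back to its sources with markers such as [1].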

For this task, GPT-3 is adapted through further training both to imitate closely the search steps demonstrated by human instructors and to maximize the probability that the instructors rate the resulting answers highly. In the application phase, several such search sequences are run automatically for each query, and the best result is selected automatically.
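Selecting the best of several candidate answers can be sketched as follows. The scoring function here is a deliberately crude, hypothetical stand-in that only counts citation markers; in a real system the score would come from a model trained on human ratings. The candidate answers are invented examples.

```python
import re


def score_answer(answer):
    """Hypothetical rating function: counts citation markers such as [1] or [2]."""
    return len(re.findall(r"\[\d+\]", answer))


def select_best(candidates, score=score_answer):
    """Return the candidate with the highest score."""
    return max(candidates, key=score)


# Invented candidate answers, standing in for the results of several search runs.
candidates = [
    "The canal was blocked.",
    "A container ship ran aground and blocked the canal [1].",
    "A container ship ran aground in high winds [1] and blocked the canal for six days [2].",
]

best = select_best(candidates)
print(best)
```

Because the scoring function is passed in as a parameter, the crude citation count could be swapped for a learned rating model without changing the selection logic.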

The data used for this task consists of questions and answers from the ELI5 (Explain Like I’m Five) benchmark, which requires answering complex questions with detailed texts and explanations. When the model’s responses are compared with the highest-rated answers from the ELI5 test dataset, human readers prefer the model’s responses in 69% of cases.

Figure 1 shows an answer generated by WebGPT to the question “Why was the Suez Canal blocked in March 2021?”.

Vision: The digital text assistant

In summary, the models described here combine long-established search engines with modern language models. Jacob Hilton, one of the developers of WebGPT, envisions a “truthful text assistant” that answers users’ questions at the level of human experts. However, referencing external sources does not solve all problems. What makes an internet document reliable? Which statements in a text need to be supported, and which count as common knowledge? In these respects, current language models are still in their early stages, but there are ways to improve them. For instance, a “Web of Trust” already exists on the internet, which derives the reliability of websites from user reviews, among other things.