For millennia, the night sky has sparked the imagination and curiosity of humanity. Astronomy is the systematic observation of the sky, with the goal of better understanding our galaxy and the universe around it. Cosmic radiation, especially gamma radiation released during violent events such as the explosion of a star in a supernova, provides important insights into cosmic processes. When a gamma ray reaches the Earth’s atmosphere, it interacts with the atoms there and triggers a cascade of particles that rains down toward the surface like a shower. The charged particles in this air shower emit faint, bluish Cherenkov light in flashes lasting only a few nanoseconds, far too brief and dim for the naked eye. Ground-based gamma-ray astronomy uses telescopes on remote mountains to record this light and thus measure the interactions between high-energy electromagnetic radiation and the Earth’s atmosphere.

Physicists and computer scientists have long worked together in modern gamma-ray astronomy, and this collaboration has repeatedly led to groundbreaking results. Astrophysics is an exciting field of application for computer science, because large amounts of data must be processed and analyzed quickly. Computer scientists can solve specific problems for physicists and later generalize these solutions to other challenges.

On the Path to Intelligent Gamma Ray Classification

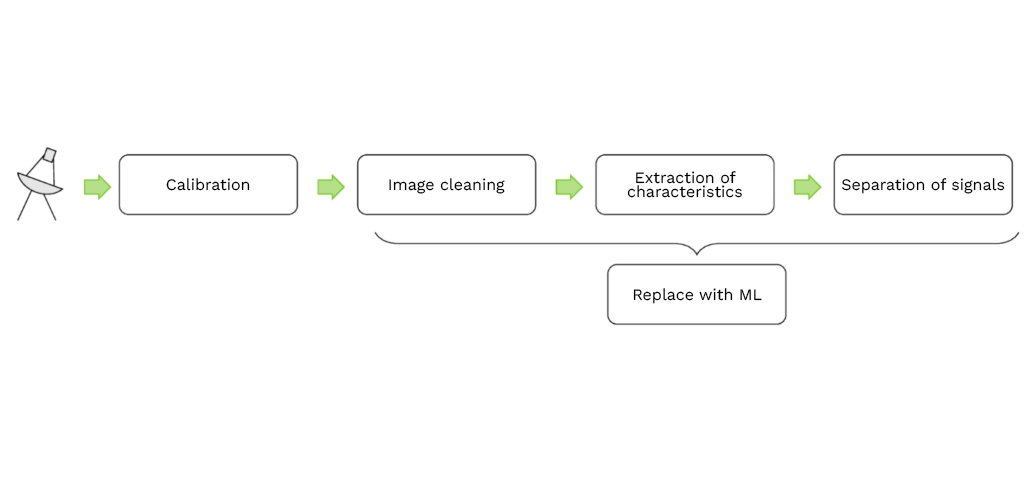

The research groups of the Chair of Artificial Intelligence and the Chair of Particle Physics at TU Dortmund have been working together for years within the FACT project. The “First G-APD Cherenkov Telescope” (FACT) is located on the Roque de los Muchachos on La Palma (Spain) and measures gamma radiation. The telescope has a mirror area of about 9.5 square meters and a camera with 1,440 light sensors, and it observes the sky continuously. It records about 60 measurements per second, resulting in a data rate of about 180 MB per second, or approximately 5 TB of raw data per night. Before physicists can examine the data, it must first be preprocessed. This preprocessing chain was developed and refined through interdisciplinary collaboration between physicists and computer scientists. In general, such a chain consists of four subtasks, which within the FACT project are further divided into a total of 80 substeps:

- Calibration: The 1,440 sensors of the telescope are sensitive to environmental changes such as temperature fluctuations. They must therefore be adjusted to yield consistent measurements under different conditions.

- Image Cleaning: Measurement errors, such as those caused by damaged sensors or noisy readings, are corrected or, if necessary, removed to ensure a minimum quality of the measurement data.

- Feature Extraction: After calibration and cleaning, the data can be represented as images. Physical characteristics, such as the size or duration of a shower, are then extracted from these images for the subsequent classification.

- Classification: Once the physical properties have been extracted, the recording can be evaluated. The first question is whether the shower was caused by a gamma ray or by the far more common background of hadronic cosmic rays, such as protons. This task is particularly challenging because only about one in 10,000 recordings is actually a gamma-ray shower.

The classic processing chain for measurement data in FACT. The last three steps are to be replaced by a single model.
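The figures quoted for FACT hang together, as a short back-of-the-envelope calculation shows. The sketch derives the average size of one recording from the stated rates and, assuming about 8 hours of observation per night (an assumption, not a figure from the project), reproduces the roughly 5 TB per night. It also shows why the 1-in-10,000 ratio makes classification hard: a classifier that always answers “background” would already be 99.99% accurate while finding no gamma rays at all. The function names are hypothetical.

```python
# Back-of-the-envelope check of the FACT figures quoted in the text.
# Assumption (not from the source): about 8 hours of observation per night.

EVENTS_PER_SECOND = 60        # recordings per second
DATA_RATE_MB_S = 180          # raw data rate in MB/s
GAMMA_FRACTION = 1 / 10_000   # roughly one recording in 10,000 is a gamma shower
NIGHT_HOURS = 8               # assumed observation time per night


def mb_per_recording(rate_mb_s: float, events_per_s: float) -> float:
    """Average raw size of one recording in MB."""
    return rate_mb_s / events_per_s


def tb_per_night(rate_mb_s: float, hours: float) -> float:
    """Raw data volume of one night in TB (using 1 TB = 1,000,000 MB)."""
    return rate_mb_s * hours * 3600 / 1_000_000


def always_background_accuracy(gamma_fraction: float) -> float:
    """Accuracy of a useless classifier that labels every recording as background."""
    return 1 - gamma_fraction


recordings = EVENTS_PER_SECOND * NIGHT_HOURS * 3600
print(f"{mb_per_recording(DATA_RATE_MB_S, EVENTS_PER_SECOND):.1f} MB per recording")  # 3.0
print(f"{tb_per_night(DATA_RATE_MB_S, NIGHT_HOURS):.2f} TB per night")  # 5.18
print(f"{recordings:,} recordings, about {recordings * GAMMA_FRACTION:.0f} gamma showers")
print(f"{always_background_accuracy(GAMMA_FRACTION):.2%} accuracy without finding a single gamma")
```

Because plain accuracy is so misleading under this imbalance, gamma/hadron classifiers are usually judged by how many gamma showers they keep relative to how much background they let through.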

Overcoming Challenging Infrastructure

Like most telescopes, FACT is located on a remote mountain, far from city lights, to provide an unobstructed view of the universe. As a result, both the power and the space available for telescope hardware are limited, and a stable communication link to the data center is rarely available. In fact, some telescopes store their raw data on hard drives on site, which are then collected by hand every few weeks. This delays the data analysis and makes it hard to point the telescope at interesting events in the sky in time, since such events may only be discovered in the data weeks later. The researchers at TU Dortmund therefore aim to bring data analysis closer to the telescope and, ideally, to perform a (pre)analysis of the data in real time directly at the telescope. To this end, they decided to bypass the lengthy preprocessing chain as far as possible and replace it with a single classification model. The method must therefore handle real, possibly noisy measurements without preprocessing, consume little energy, and keep pace with the high data rate of the FACT telescope.
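What “keeping pace” means can be put into numbers. At about 60 recordings per second, an on-site classifier has on average only about 16.7 ms per recording; if it needs longer, a backlog builds up. The helper below is a hypothetical sanity check, not part of the FACT software, and the latency values in the example are made up for illustration.

```python
# Rough real-time budget for on-site classification (a sketch).
# The event rate is taken from the text; the latencies below are hypothetical.

EVENTS_PER_SECOND = 60  # recordings per second at FACT


def time_budget_ms(events_per_s: float) -> float:
    """Average time available per recording if the analysis must keep pace."""
    return 1000.0 / events_per_s


def keeps_pace(latency_ms: float, events_per_s: float, units: int = 1) -> bool:
    """Check whether `units` identical processing units, each needing
    `latency_ms` per recording, keep up on average.

    Assumes recordings can be spread perfectly across the units and ignores
    bursts, so this is only a first sanity check, not a guarantee.
    """
    return latency_ms / units <= time_budget_ms(events_per_s)


print(f"budget: {time_budget_ms(EVENTS_PER_SECOND):.1f} ms per recording")  # 16.7
print(keeps_pace(5.0, EVENTS_PER_SECOND))            # True: fast model, one unit
print(keeps_pace(50.0, EVENTS_PER_SECOND))           # False: slow model, one unit
print(keeps_pace(50.0, EVENTS_PER_SECOND, units=4))  # True: slow model, four units
```

The last case hints at why parallel hardware is attractive: several slower processing units working side by side can still meet the average budget.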

Gamma Ray Classification with Small Devices

The researchers first looked for a model that could process the high data rate at the telescope and was robust against measurement errors. They chose Binarized Convolutional Neural Networks, a form of neural network for image recognition that is tailored to small, resource-efficient devices. Classical neural networks use real-valued weights, usually stored as floating-point numbers, so classifying a single image requires a large number of floating-point operations. These operations cost considerable computation time and therefore energy, which makes them ill-suited to the constrained hardware at FACT. Binarized neural networks, on the other hand, use only two weight values, “-1” and “+1”, each of which can be stored as a single bit. They therefore largely avoid floating-point operations, which significantly reduces computation time and energy consumption. In a series of over 1,100 experiments, the researchers found a suitable model that is fast enough for use at FACT and at the same time robust against measurement errors. On both simulated data and real data from the Crab Nebula, this model performs better than the classical approach with its lengthy preprocessing, and it is many orders of magnitude faster.
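Why single-bit weights remove the need for floating-point arithmetic can be made concrete. Assuming that both weights and activations are restricted to -1 and +1 (the text only mentions weights; binarizing the activations too is an assumption here), each value fits in one bit, and a dot product, the core operation of a convolution, reduces to an XNOR followed by counting the set bits. The following plain-Python sketch uses hypothetical helper names and illustrates this general trick; it is not the researchers’ implementation.

```python
# Sketch: a binarized dot product computed with bit operations only.
# Assumption: weights AND activations are binarized to {-1, +1}.
# Helper names are hypothetical, chosen for illustration.


def binarize(values: list[float]) -> list[int]:
    """Map real values to {-1, +1} with the sign function (0 maps to +1)."""
    return [1 if v >= 0 else -1 for v in values]


def pack(signs: list[int]) -> int:
    """Store a {-1, +1} vector in one integer, one bit per entry (+1 -> 1, -1 -> 0)."""
    bits = 0
    for i, s in enumerate(signs):
        if s == 1:
            bits |= 1 << i
    return bits


def binary_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two packed {-1, +1} vectors of length n.

    XNOR is 1 where the signs agree (product +1) and 0 where they differ
    (product -1), so: dot = agreements - disagreements = 2 * popcount - n.
    """
    mask = (1 << n) - 1                # keep only the n valid bits
    agree = ~(a_bits ^ b_bits) & mask  # XNOR
    return 2 * bin(agree).count("1") - n


def binary_neuron(x_bits: int, w_bits: int, n: int) -> int:
    """Binarized neuron: the output is again +1 or -1 (sign of the dot product)."""
    return 1 if binary_dot(x_bits, w_bits, n) >= 0 else -1


weights = binarize([0.7, -0.2, 0.1, -1.3, 0.05])    # [1, -1, 1, -1, 1]
inputs = binarize([-0.4, -0.9, 0.3, 0.8, 0.2])      # [-1, -1, 1, 1, 1]
print(binary_dot(pack(inputs), pack(weights), 5))   # 1
print(sum(w * x for w, x in zip(weights, inputs)))  # 1, same result via multiplication
```

In hardware, XNOR and bit counting need only a few logic gates per bit, whereas a floating-point multiply-accumulate needs far more circuitry; this is the efficiency argument above expressed in code.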

To deploy the resulting model at the FACT telescope, the researchers developed a tool that generates a hardware description for such models. A hardware description specifies the structure of a computer chip built specifically to run the model. The description is first simulated and tested in software and then used to build the corresponding hardware. For the implementation, the researchers use Field Programmable Gate Arrays (FPGAs), chips that can be configured to implement essentially any hardware description, within the limits of their resources. FPGAs can easily be integrated into the camera of the telescope and programmed later (“in the field”) with the appropriate description. This makes it possible to embed a significant part of the data analysis directly in the camera, while retaining the flexibility to replace it later with new descriptions. The analysis can thus run in real time, in parallel with the actual measurement.
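The idea of generating a hardware description from a trained model can be illustrated with a toy generator. The sketch below turns the fixed -1/+1 weights of a single binarized neuron into a Verilog module: the weights become a constant, XNOR marks agreeing bits, the bits are summed, and the neuron outputs 1 if at least half of them agree, which is equivalent to a non-negative -1/+1 dot product. The function name and module layout are hypothetical, and Verilog is chosen only for brevity; the text does not say which hardware description language the researchers’ tool emits.

```python
# Toy generator: turn the fixed weights of ONE binarized neuron into Verilog.
# Hypothetical sketch, not the researchers' tool; Verilog chosen for brevity.


def neuron_to_verilog(name: str, weights: list[int]) -> str:
    """Return a Verilog module for a binarized neuron with fixed {-1, +1} weights.

    Input bit i encodes activation i (1 -> +1, 0 -> -1). The output is 1 if the
    -1/+1 dot product is >= 0, i.e. if at least half of the bits agree with W.
    """
    n = len(weights)
    w_bits = "".join("1" if w == 1 else "0" for w in reversed(weights))  # MSB first
    count_width = n.bit_length()  # wide enough to hold values 0..n
    threshold = (n + 1) // 2      # 2 * count - n >= 0  <=>  count >= ceil(n / 2)
    terms = " + ".join(f"agree[{i}]" for i in range(n))
    return (
        f"module {name} (\n"
        f"    input  wire [{n - 1}:0] x,\n"
        f"    output wire y\n"
        f");\n"
        f"    localparam [{n - 1}:0] W = {n}'b{w_bits};  // weights baked in as constants\n"
        f"    wire [{n - 1}:0] agree = ~(x ^ W);  // XNOR: 1 where signs agree\n"
        f"    wire [{count_width - 1}:0] count = {terms};  // popcount\n"
        f"    assign y = (count >= {threshold});\n"
        f"endmodule\n"
    )


print(neuron_to_verilog("bnn_neuron", [1, -1, 1, 1]))
```

Because `W` is a constant, a synthesis tool can reduce each XNOR to a plain wire or an inverter, so the weights are folded into the circuit itself. A real network consists of many such neurons across several layers, so this only illustrates the principle.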

This work provides an important building block for the analysis of astronomical data and, consequently, for our understanding of the universe. With the shortened analysis chain, telescopes can be pointed at events in real time, and physicists can analyze the data immediately. The work is also relevant beyond physics: the underlying technology is largely independent of telescope data and can be applied, for example, to medical data such as X-ray images or magnetic resonance imaging (MRI) scans, giving patients immediate feedback on their examination results.

More information can be found in the corresponding paper:

On-Site Gamma-Hadron Separation with Deep Learning on FPGAs

Sebastian Buschjäger, Lukas Pfahler, Jens Buss, Katharina Morik & Wolfgang Rhode. European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), 2020 (publication still pending).