The field of Computer Vision deals with the task of extracting information from digital images and videos and understanding their content. It is often referred to as image recognition. In industry, there are numerous applications for Computer Vision, especially for automating time-consuming tasks. Examples include sorting shipments by destination addresses, inspecting produced goods for damage, and autonomous driving.

Machine Learning techniques, such as Support Vector Machines (SVMs), have long been used in Computer Vision. In recent years, deep learning-based methods have been increasingly applied in many areas and achieve outstanding performance on tasks such as:

- Image Classification: Assigning an image to one or more categories to make decisions about the image as a whole, for example determining whether a workpiece is damaged or identifying the type of vehicle in the image.

- Object Detection: Recognizing and locating (multiple) objects and people in an image by enclosing each of them in a rectangular “bounding box.” This allows the positions of objects in the image to be mapped to real-world positions and objects to be counted.

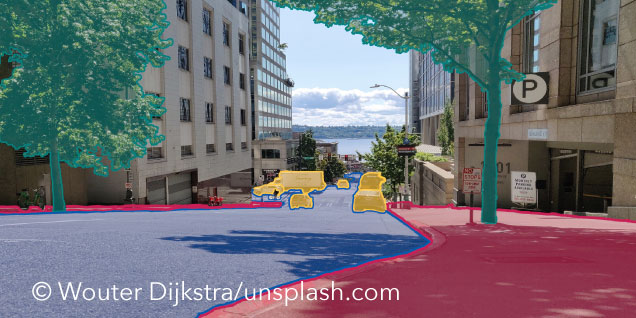

- Image Segmentation: Assigning pixel-accurate areas of the image to specific categories, enabling the recognition of entire areas and polygons in the image, such as where a road runs and where the sidewalk begins.
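The three tasks above differ mainly in the shape of their output. The following sketch uses hand-written, hypothetical predictions (not the output of any real model) to show what each result might look like; the `BoundingBox` class, the labels, and all numbers are assumptions chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical format)."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def center(self):
        """Return the (x, y) center of the box in pixel coordinates."""
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


# Image classification: one decision about the image as a whole.
classification = {"damaged": 0.92, "intact": 0.08}
predicted_class = max(classification, key=classification.get)

# Object detection: one box per object, so objects can be located and counted.
detections = [
    BoundingBox("car", 10, 20, 110, 80),
    BoundingBox("car", 150, 30, 240, 90),
    BoundingBox("person", 120, 40, 140, 100),
]
objects_per_class = Counter(box.label for box in detections)

# Image segmentation: one category per pixel (here 0 = road, 1 = sidewalk).
segmentation_mask = [
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
pixels_per_class = Counter(value for row in segmentation_mask for value in row)
```

Counting the detections per label gives the number of objects, while counting pixels per category in the mask gives the area each category covers.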

How do humans see the world?

Human vision is very powerful, effortlessly recognizing various objects, shapes, movements, and faces. While the structure of the human eye and the process of light capture are well-researched, the interpretation of these sensory perceptions primarily occurs unconsciously. The brain processes many non-linear and relative relationships.

Figure 2: An example of human image processing ability using an optical illusion: Although fields A and B have exactly the same brightness, the human brain perceives field B as significantly brighter (relative correlation).

Figure 2 illustrates such a relative relationship and highlights a challenge for computer-based image processing programs. Fields A and B have the same brightness and thus, for a computer, the same pixel values. However, the human brain perceives field B as brighter because it focuses more on the comparison with neighboring fields than on absolute values. Image processing programs do not need to model such effects explicitly to achieve good results. The same holds for other effects such as the Gestalt laws, which describe rules in the perception of related elements and are especially important in UI/UX design. However, these effects can serve as inspiration for developing new methods and for better understanding and analyzing existing procedures when aiming to replicate the performance of human vision.
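The difference between absolute and relative brightness can be sketched numerically. The example below is a toy illustration, not a model of human perception: the two 3x3 patches and the `relative_brightness` helper are assumptions made up for this purpose. Both center pixels have the same absolute value, but they differ strongly once compared with their neighbors.

```python
import numpy as np

# Two hypothetical 3x3 grayscale patches. The center pixel plays the role of
# field A or field B; both centers have the identical absolute value 120.
patch_a = np.array([[200, 200, 200],
                    [200, 120, 200],
                    [200, 200, 200]])  # field A: bright surroundings
patch_b = np.array([[60, 60, 60],
                    [60, 120, 60],
                    [60, 60, 60]])     # field B: dark (shadowed) surroundings


def relative_brightness(patch):
    """Center value minus the mean of its eight neighbors."""
    center = float(patch[1, 1])
    neighbor_mean = (float(patch.sum()) - center) / 8.0
    return center - neighbor_mean


contrast_a = relative_brightness(patch_a)  # negative: darker than surroundings
contrast_b = relative_brightness(patch_b)  # positive: brighter than surroundings
```

A program that only compares `patch_a[1, 1]` with `patch_b[1, 1]` sees no difference, whereas the neighbor-relative measure separates the two fields.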

Machine image processing

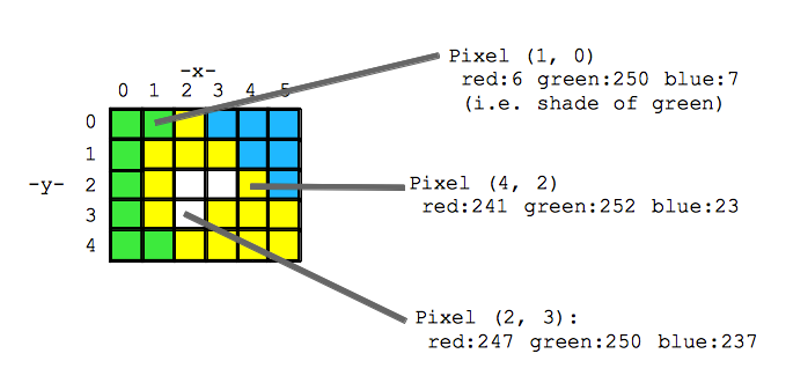

To process images with computer programs, matrix-like data structures are primarily used. A color image in the RGB color space, for example, is stored as a three-dimensional array with the dimensions W, H, and C. W and H index the position of a pixel along the width and height of the image, and C indexes the color channel, so each entry holds the intensity of red, green, or blue at that pixel.

Figure 3: Example of the representation of a 5×6 pixel RGB image in the computer with the RGB values of the pixels at different positions.
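As a minimal sketch of this representation, the following NumPy snippet creates a small RGB image. It assumes the image is 5 pixels wide and 6 pixels high, and the pixel colors are made up. Note that NumPy conventionally orders the axes as (H, W, C), i.e., rows first.

```python
import numpy as np

height, width, channels = 6, 5, 3  # assumption: 5 px wide, 6 px high, RGB

# NumPy indexes rows first, so the array shape is (H, W, C).
image = np.zeros((height, width, channels), dtype=np.uint8)

image[0, 0] = (255, 0, 0)      # top-left pixel: pure red
image[2, 4] = (0, 255, 0)      # row 2, column 4: pure green
image[5, 4] = (255, 255, 255)  # bottom-right pixel: white

# Slicing out one channel yields an ordinary H x W matrix of intensities.
red_channel = image[:, :, 0]
```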

The individual pixel values are usually represented with 8 bits (taking integer values from 0 to 255) and are often converted to other value ranges, such as floating-point values between 0 and 1, for better computational properties. To extract useful information from these pixel values, filters can be applied. These consist of so-called kernels, or masks, which are moved step by step across the image, usually performing calculations on neighboring pixels via convolution. The result is a new image, or more precisely, a new matrix.
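The conversion of 8-bit pixel values to other value ranges might look like the following sketch. The tiny 2x2 example image is made up, and the two conversions shown (scaling to [0, 1] and standardizing to zero mean and unit variance) are common choices, not the only ones.

```python
import numpy as np

# Hypothetical 2x2 grayscale image with 8-bit integer values.
pixels = np.array([[0, 64], [128, 255]], dtype=np.uint8)

# Scale to floating-point values in the range [0, 1].
scaled = pixels.astype(np.float32) / 255.0

# Alternatively, shift and scale to zero mean and unit variance.
standardized = (scaled - scaled.mean()) / scaled.std()
```

Converting to floating point before doing arithmetic also avoids the wrap-around that occurs when 8-bit integers overflow.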

To illustrate this, consider a filter that detects edges, i.e., strong differences in intensity between neighboring pixels: the Prewitt filter.

Figure 5: Convolution of a small image section with a Prewitt filter. The individual elements are multiplied position by position and then summed. To ensure that the result retains the same dimensions as the original image, additional zeros have been inserted around the image section; this is known as “zero-padding”. The result is then squared so that the filter response no longer depends on the sign of the intensity change, i.e., on the direction of the transition from dark to bright.
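The procedure can be sketched in a few lines of NumPy. This is a minimal illustration under some assumptions: the function names `filter2d` and `prewitt_edges` are hypothetical, the test image is made up, and the squared responses of a horizontal and a vertical Prewitt kernel are summed, which is one common way to combine them. The kernel is applied without flipping (strictly speaking a cross-correlation); for the Prewitt kernels, flipping only changes the sign, which the squaring removes anyway.

```python
import numpy as np

PREWITT_X = np.array([[-1, 0, 1],
                      [-1, 0, 1],
                      [-1, 0, 1]], dtype=np.float64)  # responds to vertical edges
PREWITT_Y = PREWITT_X.T                               # responds to horizontal edges


def filter2d(image, kernel):
    """Slide the kernel over a zero-padded image: multiply position-wise, then sum."""
    kh, kw = kernel.shape
    padded = np.pad(image.astype(np.float64),
                    ((kh // 2, kh // 2), (kw // 2, kw // 2)))  # pads with zeros
    out = np.zeros(image.shape, dtype=np.float64)
    for row in range(image.shape[0]):
        for col in range(image.shape[1]):
            window = padded[row:row + kh, col:col + kw]
            out[row, col] = np.sum(window * kernel)
    return out


def prewitt_edges(image):
    """Sum of squared horizontal and vertical Prewitt responses."""
    gx = filter2d(image, PREWITT_X)
    gy = filter2d(image, PREWITT_Y)
    return gx ** 2 + gy ** 2


# Test image: dark left half, bright right half, i.e., one vertical edge.
image = np.zeros((5, 6))
image[:, 3:] = 1.0
edges = prewitt_edges(image)
```

In the interior rows, `edges` is large in the two columns next to the dark-to-bright transition and zero in the flat regions. Zero-padding also produces a response at the bright image border, because the padded zeros look like a dark neighbor.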

Starting from these “edge images,” various shapes can be detected, for instance through geometric calculations. Besides simple filters, more complex methods exist that calculate so-called image or feature descriptors, which describe local features, i.e., features of small image sections. Examples of such methods are SIFT and HOG.
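To give a rough idea of what such a descriptor computes, the sketch below builds a histogram of gradient orientations for a single image patch. It is a strongly simplified illustration in the spirit of HOG, not an implementation of HOG or SIFT: the real HOG method additionally divides the image into cells and normalizes over blocks, and SIFT adds keypoint detection as well as scale and rotation handling. The function name and the bin count are assumptions.

```python
import numpy as np


def orientation_histogram(patch, bins=8):
    """Histogram of gradient orientations, weighted by gradient magnitude."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy, gx = np.gradient(patch.astype(np.float64))
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180) degrees.
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    hist, _ = np.histogram(angle, bins=bins, range=(0.0, 180.0),
                           weights=magnitude)
    total = hist.sum()
    return hist / total if total > 0 else hist


# A patch whose brightness increases from left to right ...
horizontal_ramp = np.tile(np.arange(8.0), (8, 1))
# ... and one whose brightness increases from top to bottom.
vertical_ramp = horizontal_ramp.T

descriptor_h = orientation_histogram(horizontal_ramp)
descriptor_v = orientation_histogram(vertical_ramp)
```

The two patches produce histograms with their mass in different bins, so the resulting vector summarizes the dominant edge direction of the patch in a compact form.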

Choosing the right filters, image descriptors, and further methods for a specific use case is crucial for good results. This is where deep learning shows its strength, as DL models can learn suitable filters and features from datasets on their own.

Image recognition with convolutional neural networks

A major advantage of deep learning methods is “end-to-end” learning. Here, a typically multi-step, manually selected pipeline consisting of data preprocessing, (potentially multiple) feature extraction stages, and a prediction method is replaced by a single model. From a (sufficiently large) dataset, a deep learning model can autonomously learn the preprocessing steps and features it needs to make predictions.

For image recognition, Convolutional Neural Networks (CNNs) are used. These have special convolutional layers that function similarly to the filters mentioned above. The individual parameters of the filter masks are not predetermined but are learned by the model during the training process. This allows the model to learn precisely the features it needs to optimize the target metric, such as classification accuracy. As a result, starting from initially simple features like edges and colors, increasingly complex features like faces or tires can be recognized step by step.
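The idea that filter parameters are learned rather than predetermined can be illustrated with a deliberately tiny example. The sketch below is not a CNN: it trains a single linear 3x3 filter with plain gradient descent on a mean squared error, using random data and a Prewitt kernel as a made-up target. Real CNNs stack many such filters with non-linearities and are usually trained with a deep learning framework. All names and hyperparameters are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# "Ground truth" filter the model should rediscover (only used to create targets).
target_kernel = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=np.float64)

# Training data: every 3x3 window of a random image and the desired response.
image = rng.standard_normal((32, 32))
windows = np.array([image[r:r + 3, c:c + 3].ravel()
                    for r in range(30) for c in range(30)])
targets = windows @ target_kernel.ravel()

# The learnable filter mask starts from small random values.
kernel = rng.standard_normal(9) * 0.01
learning_rate = 0.1

for step in range(300):
    predictions = windows @ kernel
    error = predictions - targets
    gradient = 2.0 * windows.T @ error / len(windows)  # d(MSE)/d(kernel)
    kernel -= learning_rate * gradient

learned_kernel = kernel.reshape(3, 3)
final_loss = float(np.mean((windows @ kernel - targets) ** 2))
```

After training, `learned_kernel` closely matches the target filter even though its values were never set by hand; only the examples and the error signal shaped it.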

Figure 6: Example of learned features in a popular CNN model. This visualization of the features produces images that roughly represent what the filter recognizes. In the first layers, very general features such as edges and textures are recognized. The later layers recognize increasingly complex objects, such as flowers and leaves or the heads of certain dog breeds, including the correct composition of ears, snout and eyes.

CNNs also scale well: very large models with over 100 convolutional layers now exist, and thanks to their capacity, such models can gain more predictive power from increasingly large datasets.

Conclusion

Deep learning and CNNs allow developers of image recognition systems to focus on collecting diverse datasets, selecting appropriate neural network architectures, and optimizing model parameters. Deep learning models can be developed, trained, and tested as a whole, giving them an advantage over traditional methods, where a pipeline of suitable feature extraction and prediction methods must be developed and tested step-by-step for each application. In many cases, deep learning models achieve better predictive performance than more traditional methods.

However, the use of deep learning also presents challenges: model decisions are difficult to analyze, which makes errors hard to detect. Moreover, it is often unclear whether a deep learning model can handle cases that are not covered by the collected data.

We must keep these limitations in mind when using deep learning for Computer Vision tasks. Overall, deep learning-based methods are an excellent first choice for solving diverse image recognition use cases.

Other sources:

CS231n: Convolutional Neural Networks for Visual Recognition

Feature Visualization. Olah et al., Distill, 2017.

Computer Vision: Algorithms and Applications (2nd ed.). Richard Szeliski, Springer Science & Business Media, 2022.

Digital Image Processing. Rafael Gonzalez, Richard Woods, Steven Eddins, Gatesmark Publishing, 2020.