Automatically detecting contradictions in text is a very challenging task, especially in languages other than English. To recognize contradictory statements, a Machine Learning (ML) system needs a substantial degree of human-like text understanding. Conversely, a system that solves this task well should find it easier to capture other aspects of language, such as causality and the semantic similarity of sentences. We examine this problem, also known as “Natural Language Inference,” for the German language.

An ML system that detects contradictions

There are already numerous applications for contradiction detection. For instance, one could identify fake news by comparing online posts with reliable news sources. In finance and auditing, such a model could be used to detect inconsistencies within an annual report or between multiple financial documents (see also “Towards Automated Auditing with Machine Learning”). It could also be used as an automatic evaluation tool for the output of other ML systems, for example, to assess the performance of a chatbot or a voice assistant application.

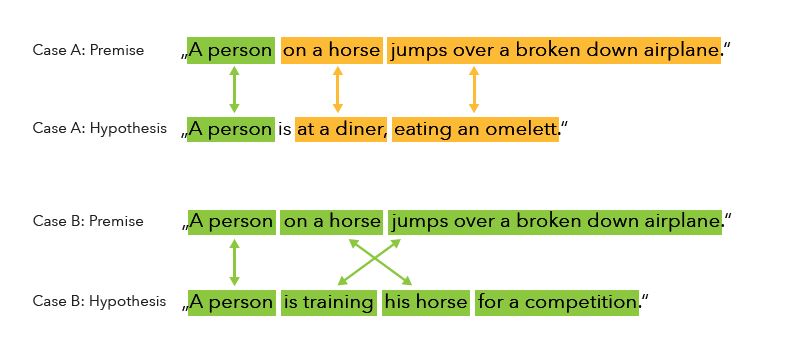

The goal of our research is to develop an ML system that can predict whether two given German sentences or paragraphs are contradictory. These two text snippets are usually referred to as “premise” and “hypothesis.” We want to determine which learning paradigm achieves the best performance for this task and whether the models can handle different types of data. Since we had limited training data in German, we machine-translated a large portion of English datasets (SNLI). In these datasets, the premise and hypothesis both relate to an image: the premise is a detailed description of the image, while the hypothesis was written by a commentator. Approximately one-third of the examples are labeled as contradictory, while the remaining two-thirds contain no contradiction.

Example of two premises and hypotheses
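
The data setup described above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline we used: SNLI ships three labels (entailment, neutral, contradiction), and collapsing them into the two classes of our task is an assumption made here. The names `SentencePair`, `SNLI_TO_BINARY`, and `to_binary_pair` are hypothetical, and the German sentences are invented for illustration.

```python
from dataclasses import dataclass

# SNLI ships three labels; collapsing them into two classes is an assumption
# made here to match the binary task described in the text.
SNLI_TO_BINARY = {
    "contradiction": "Contradiction",
    "entailment": "No Contradiction",
    "neutral": "No Contradiction",
}


@dataclass(frozen=True)
class SentencePair:
    premise: str
    hypothesis: str
    label: str  # "Contradiction" or "No Contradiction"


def to_binary_pair(premise: str, hypothesis: str, snli_label: str) -> SentencePair:
    """Wrap one translated example and map its SNLI label to the binary scheme."""
    return SentencePair(premise, hypothesis, SNLI_TO_BINARY[snli_label])


# Made-up German sentences for illustration only (not taken from the dataset).
premise = "Ein Mann spielt Gitarre auf einer Bühne."
pairs = [
    to_binary_pair(premise, "Ein Mann schläft in seinem Bett.", "contradiction"),
    to_binary_pair(premise, "Ein Mann macht Musik.", "entailment"),
    to_binary_pair(premise, "Der Mann ist ein berühmter Musiker.", "neutral"),
]

# Share of contradictory pairs in this toy sample.
contradiction_share = sum(p.label == "Contradiction" for p in pairs) / len(pairs)
```

With one example per original SNLI label, the toy sample reproduces the roughly one-third share of contradictions mentioned above.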

Vector representations for premises and hypotheses

A key problem in solving this task is finding good vector representations for premise and hypothesis that encode all the information a model needs to predict a contradiction. Whichever encoding is used, the final representation is passed to a classification model, which outputs a class prediction (“Contradiction” or “No Contradiction”). We compared two different paradigms for producing these representations:

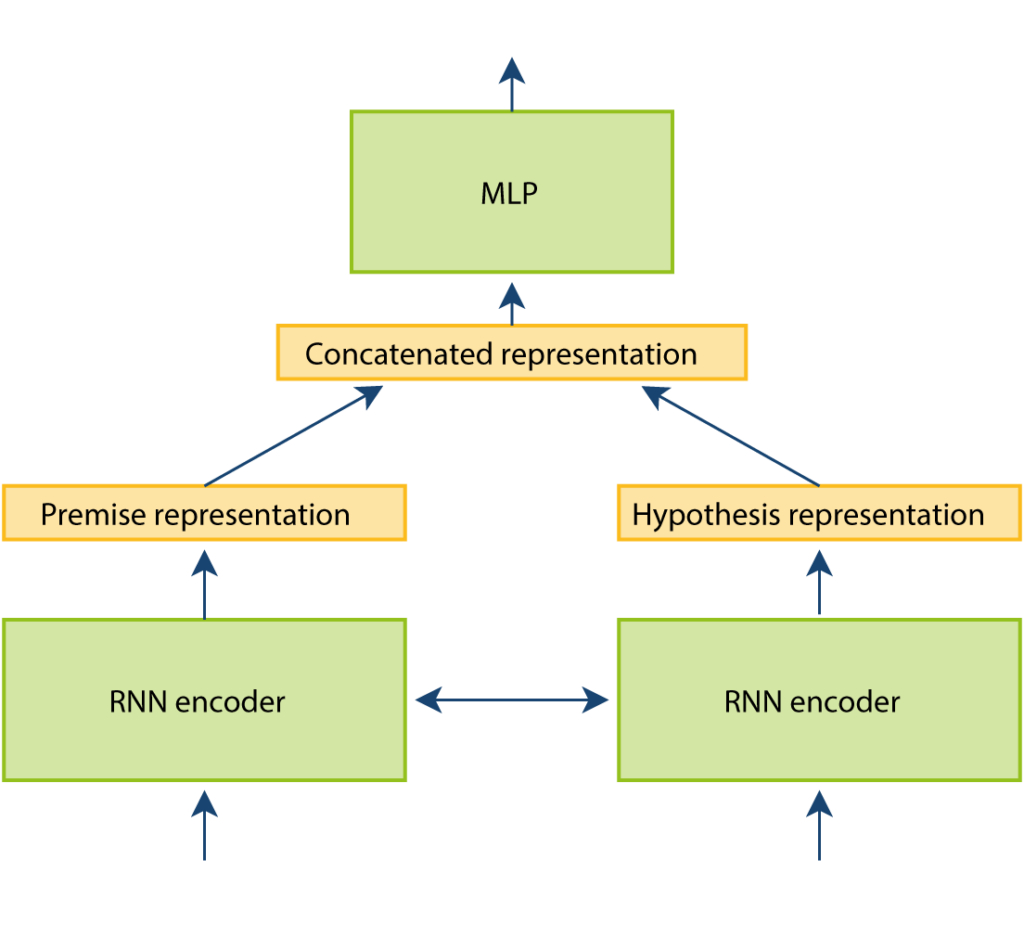

Model 1: Recurrent Neural Networks (RNNs) without attention

In this first approach, a recurrent neural network is trained to create vector representations for premise and hypothesis. The RNN reads the paragraph as a list of tokens (words) and updates its hidden state with each word it sees. Its final output is the last hidden state after reading the entire sequence, which can be interpreted as a representation of the paragraph. The representations for premise and hypothesis are calculated separately and then concatenated into one long vector. This concatenated vector is processed by a multilayer perceptron (MLP), a simple neural network without recurrent connections.

Simplified representation of the workflow for Model 1 (without attention)

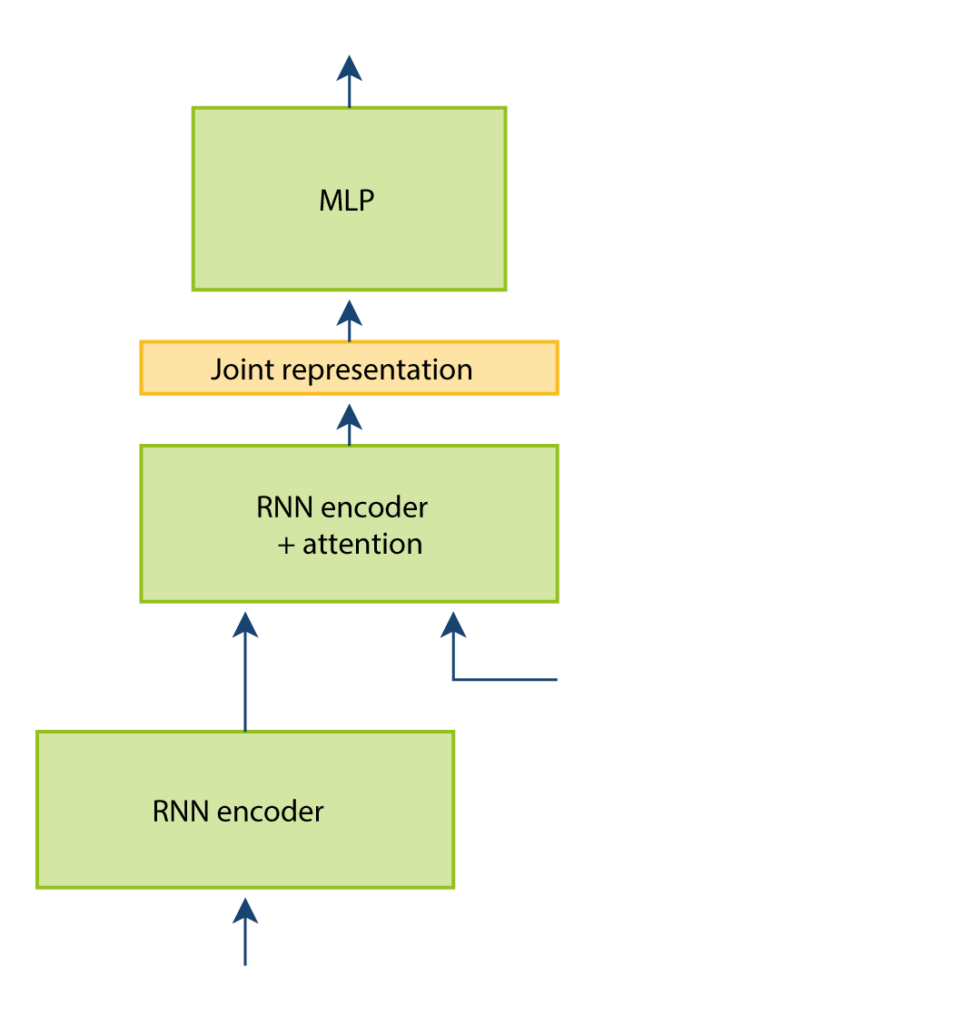
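
To make the data flow of Model 1 concrete, here is a minimal NumPy sketch of the forward pass. It is an illustration under several assumptions rather than our actual implementation: it uses a vanilla tanh RNN cell, shares one encoder between premise and hypothesis, replaces word embeddings with random vectors, and leaves all weights untrained. The dimensions and names (`rnn_last_state`, `classify`, `EMB_DIM`, and so on) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
EMB_DIM, HIDDEN_DIM, MLP_DIM = 8, 16, 32  # hypothetical sizes


def rnn_last_state(params, embeddings):
    """Run a vanilla tanh RNN over the token embeddings; return the last hidden state."""
    h = np.zeros(HIDDEN_DIM)
    for x in embeddings:
        h = np.tanh(params["W_x"] @ x + params["W_h"] @ h + params["b"])
    return h


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def classify(mlp, features):
    """One-hidden-layer MLP that returns probabilities for the two classes."""
    hidden = np.maximum(0.0, mlp["W1"] @ features + mlp["b1"])  # ReLU
    return softmax(mlp["W2"] @ hidden + mlp["b2"])


# Randomly initialised (untrained) parameters; sharing one encoder is an assumption.
encoder = {
    "W_x": rng.normal(0.0, 0.1, (HIDDEN_DIM, EMB_DIM)),
    "W_h": rng.normal(0.0, 0.1, (HIDDEN_DIM, HIDDEN_DIM)),
    "b": np.zeros(HIDDEN_DIM),
}
mlp = {
    "W1": rng.normal(0.0, 0.1, (MLP_DIM, 2 * HIDDEN_DIM)),
    "b1": np.zeros(MLP_DIM),
    "W2": rng.normal(0.0, 0.1, (2, MLP_DIM)),
    "b2": np.zeros(2),
}

# Stand-ins for word embeddings: one random vector per token.
premise_tokens = "ein mann spielt gitarre".split()
hypothesis_tokens = "ein mann schläft".split()
premise_emb = rng.normal(size=(len(premise_tokens), EMB_DIM))
hypothesis_emb = rng.normal(size=(len(hypothesis_tokens), EMB_DIM))

# Encode each text separately, concatenate, then classify.
premise_vec = rnn_last_state(encoder, premise_emb)
hypothesis_vec = rnn_last_state(encoder, hypothesis_emb)
pair_vec = np.concatenate([premise_vec, hypothesis_vec])  # shape (2 * HIDDEN_DIM,)
probs = classify(mlp, pair_vec)  # [P("Contradiction"), P("No Contradiction")]
```

Note that the two encodings never interact before the MLP: any comparison between premise and hypothesis has to be learned from the concatenated vector alone.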

Model 2: Recurrent Neural Networks (RNNs) with attention

The second approach chains two RNNs into a pipeline. The first RNN reads the premise and passes its last hidden state to the second RNN, which then reads the hypothesis. In addition, the model calculates so-called “attention” values between each word in the premise and each word in the hypothesis. These values indicate which words refer to the same entities in both sentences and allow subsequent computational steps to focus on them. The output of the second RNN is a joint representation of premise and hypothesis.

Simplified representation of the workflow for Model 2 (with attention)
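
The following NumPy sketch illustrates the idea behind Model 2. It is a simplified stand-in, not our exact architecture: the attention values are computed as softmax-normalised dot products between hidden states, and the joint representation is formed by concatenating the last hypothesis state with its attention-weighted premise context. Both choices are assumptions, as are the vanilla tanh cell, the random untrained weights, the random token embeddings, and all names and dimensions.

```python
import numpy as np

rng = np.random.default_rng(0)
EMB_DIM, HIDDEN_DIM = 8, 16  # hypothetical sizes


def init_rnn():
    """Randomly initialised (untrained) parameters of a vanilla tanh RNN."""
    return {
        "W_x": rng.normal(0.0, 0.1, (HIDDEN_DIM, EMB_DIM)),
        "W_h": rng.normal(0.0, 0.1, (HIDDEN_DIM, HIDDEN_DIM)),
        "b": np.zeros(HIDDEN_DIM),
    }


def rnn_all_states(params, embeddings, h0):
    """Run the RNN from initial state h0 and return the hidden state after every token."""
    states, h = [], h0
    for x in embeddings:
        h = np.tanh(params["W_x"] @ x + params["W_h"] @ h + params["b"])
        states.append(h)
    return np.stack(states)


def softmax_rows(scores):
    e = np.exp(scores - scores.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


premise_rnn, hypothesis_rnn = init_rnn(), init_rnn()

# Stand-ins for word embeddings: 5 premise tokens and 3 hypothesis tokens.
premise_emb = rng.normal(size=(5, EMB_DIM))
hypothesis_emb = rng.normal(size=(3, EMB_DIM))

# The first RNN reads the premise; its last state initialises the second RNN.
premise_states = rnn_all_states(premise_rnn, premise_emb, np.zeros(HIDDEN_DIM))
hypothesis_states = rnn_all_states(hypothesis_rnn, hypothesis_emb, premise_states[-1])

# One attention value per (hypothesis word, premise word) pair; each row sums to 1.
attention = softmax_rows(hypothesis_states @ premise_states.T)  # shape (3, 5)

# Attention-weighted summary of the premise for each hypothesis word.
context = attention @ premise_states  # shape (3, HIDDEN_DIM)

# Joint representation (assumed form): last hypothesis state plus its context.
joint_vec = np.concatenate([hypothesis_states[-1], context[-1]])
```

Each row of `attention` shows how strongly one hypothesis word attends to every premise word, which is the word-level alignment the classifier can then focus on.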

RNN-based models outperform other approaches

One of our main findings is that RNN-based models outperform all other data encoding methods. The results on the machine-translated datasets are also similar to those on the original data, suggesting that data quality has not suffered from the translation. Both models are competitive, so there is no clear “winner” in this comparison. There is still room for improvement, however, as a look at two prediction examples shows. The attention-based model can recognize contradictions linked to specific words (Example 1), but it also makes false predictions, for example when word alignment between the sentences is unhelpful or misleading (Example 2). Both paradigms struggle when deeper world understanding is required. In a practical application, one must therefore consider which type of contradiction is most typical for the text being analyzed before choosing a model.

Conclusion

We can conclude that we have created a well-functioning initial benchmark system for contradiction detection in German. It remains to be seen whether the models generalize to a broader range of data. In further work, we plan to investigate additional approaches and datasets, as well as some aspects of Informed Machine Learning.