Think about the last time you found yourself struggling with the meaning of a complex article or document. Many of us have faced this situation before, but there are many groups of people who struggle to understand even everyday written language. For this reason there exist simple versions of everyday language that aim to break down language barriers. In this blog post we give an introduction to the topic of text simplification: we explain why it is so important to make written language accessible, touch upon the connections between text simplification and readability assessment, and sketch the different approaches to automated text simplification.

Break down language barriers: text simplification and Machine Learning

There are many situations in which we are confronted with documents or texts that are difficult to understand. Think of legal documents or a patient's diagnosis, for example. Maybe there are some distant high school memories of reading Shakespeare, too. Now imagine you are a language learner, have reading difficulties or face cognitive impairment. In those cases, even everyday written language can be hard. With the use of simple language we can improve the accessibility of information for a wide range of people by accommodating the needs of different user groups. This is important because difficulty in understanding complex or even everyday text can be a barrier to education, healthcare, and access to critical services.

But what does the topic of text simplification actually have to do with Machine Learning?

The research field of text simplification is closely related to the field of readability assessment, which in turn makes use of Machine Learning (ML) methods. The aim of readability assessment is to automatically classify texts according to a predefined level of difficulty, which is where those ML methods come in. We would like to highlight two commonly used readability metrics: the Flesch-Kincaid Readability Test and the Gunning Fog Index.

The Flesch-Kincaid Readability Test calculates its score from two ratios: the average number of words per sentence and the average number of syllables per word. It provides a readability score that corresponds to the grade level required to understand the text. The lower the grade, the simpler the text. It was originally developed to assess the difficulty of technical manuals.
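To make this concrete, the grade-level variant of the test is commonly given as 0.39 × (words per sentence) + 11.8 × (syllables per word) - 15.59. The following is a minimal sketch that assumes the word, sentence, and syllable counts are already available. The function name is our own choice for illustration and does not come from any particular library.

```python
def flesch_kincaid_grade(n_words: int, n_sentences: int, n_syllables: int) -> float:
    """Estimate the U.S. school grade needed to understand a text.

    The counts are assumed to be computed beforehand; counting syllables
    reliably is a separate problem that this sketch does not address.
    """
    if n_words <= 0 or n_sentences <= 0:
        raise ValueError("the text must contain at least one word and one sentence")
    words_per_sentence = n_words / n_sentences
    syllables_per_word = n_syllables / n_words
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


# "The cat sat on the mat." has 6 words, 1 sentence, and 6 syllables.
grade = flesch_kincaid_grade(n_words=6, n_sentences=1, n_syllables=6)
print(round(grade, 2))
```

Very simple sentences can yield grades below zero, which is a reminder that the score is an estimate based purely on length and not a measure of actual comprehension.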

The Gunning Fog Index works similarly: it estimates the required grade of the reader by looking at the average number of words per sentence and the percentage of complex words, i.e. words with three or more syllables. Both metrics were developed for the English language and the American school system, but for the Flesch-Kincaid Test there exists a formula for the German language as well. Since these readability metrics only consider frequency- and length-based information, they only measure the difficulty of the text in these dimensions. However, it must be said that there are other dimensions and corresponding methods. They all have their own advantages and disadvantages and can be applied in different contexts, depending on the specific requirements and objectives of the text analysis.
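The Gunning Fog Index is usually stated as 0.4 × (words per sentence + 100 × complex words / words). Below is a rough sketch of that formula for English text. The syllable counter is a deliberately naive assumption (it counts groups of consecutive vowels), and the function names are hypothetical; a serious implementation would use a pronunciation dictionary and handle the exceptions the original index defines.

```python
import re


def count_syllables(word: str) -> int:
    """Naive heuristic: one syllable per group of consecutive vowels."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def gunning_fog(text: str) -> float:
    """Approximate the Gunning Fog Index of an English text."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("the text must contain at least one word and one sentence")
    # "Complex" words are those with three or more (estimated) syllables.
    complex_words = [w for w in words if count_syllables(w) >= 3]
    words_per_sentence = len(words) / len(sentences)
    percent_complex = 100 * len(complex_words) / len(words)
    return 0.4 * (words_per_sentence + percent_complex)


print(gunning_fog("The cat sat. The dog ran."))
print(gunning_fog("Simplification is important."))
```

The second sentence scores far higher than the first, even though it is short, because two of its three words count as complex. This illustrates how strongly the index reacts to word length alone.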

Keep it simple: what do we mean by text simplification?

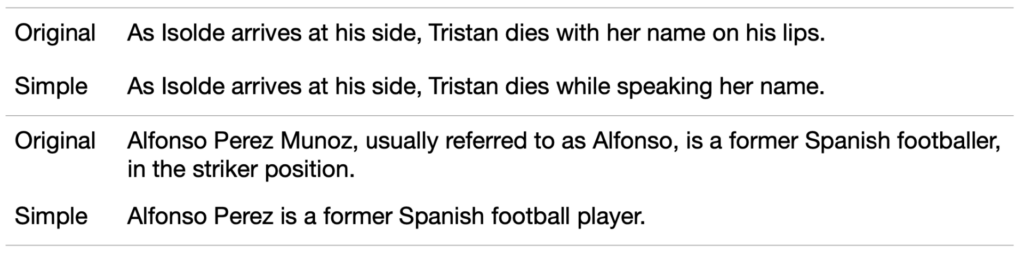

Text simplification describes the process of reducing the lexical and syntactic complexity of a written text. That means we want to use common words and a straightforward sentence structure. In detail, the translation from everyday language to simple language involves different operations like deletion, rewording, insertion, and reordering. Furthermore, to simplify matters, additional sentences are often added to provide context to a difficult concept. This strategy is used whenever the replacement of a difficult word is not sufficient to make its meaning clear. Think about the sentence “You can pay by card”. This cannot be simplified by replacing the words with synonyms or modifying the sentence structure. But if you’re unfamiliar with the concept of paying with a plastic card instead of cash, you will need some explanations. As a rule, human translators have the freedom to decide which words need to be replaced, can be omitted, or need to be supplemented with additional information. They will focus on conveying the main points of the original text. While this is fairly simple for human translators, it remains a challenge for automated text simplification to guarantee that the contents of the simplification match the original sentence.

Various forms of plain language: what’s the difference?

By now we have explained why text simplification is useful and what methods exist for assessing the level of difficulty of texts. But how do we manage to translate everyday language into simple language and are there different forms of simplification?

Simple language must meet certain parameters and guidelines in order to be counted as such.

In English, the most popular forms of simple language are “Basic English” and “Simple”/“Learning English”. Both forms target an audience of language learners. Basic English describes a heavily formalized language that suggests a simplified grammar and a vocabulary of only 850 core words. The latter uses a more extensive vocabulary and is quite common as it is used for the Simple English Wikipedia, another version of the online encyclopedia in simple language. Both certainly describe forms of simple language but are rather tailored towards language learners.

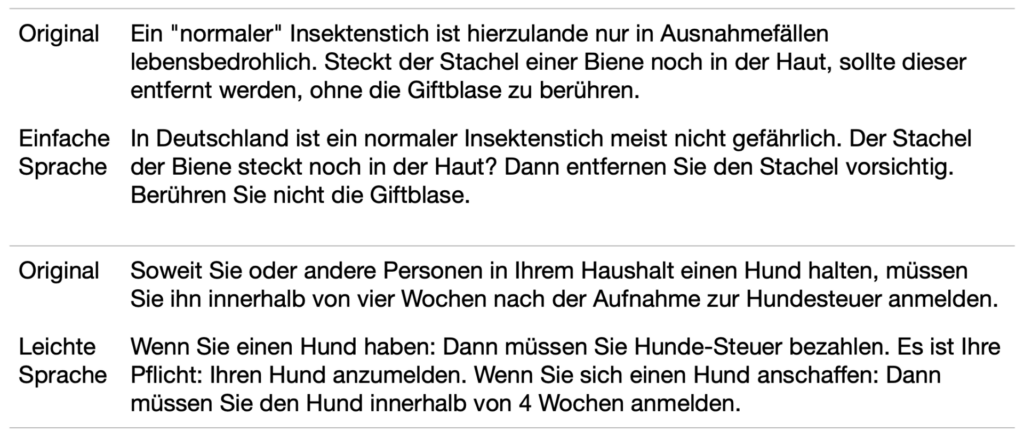

In German, the two most popular forms of simple language are “Einfache Sprache” and “Leichte Sprache”, plain and simple German, respectively. Analogously, the difference between the two is the degree to which the expressiveness of the language is restricted. Einfache Sprache is designed to make expert content available to lay people without necessarily catering towards the specific needs of people with dyslexia or cognitive impairments. Leichte Sprache, on the other hand, is heavily restricted and even implements special formatting to improve readability. This formatting includes the visual separation of composite nouns and single sentences, i.e. writing only one sentence per line. Further, Leichte Sprache usually contains extensive contextualization and explanations for different concepts. Even though we distinguish between the two forms of simple language in German, there are no binding rules for how to implement them. There are only a few sources of formalization; one valuable resource in German is Maaß 2020. Notably, the author describes the balancing act of formalization approaches between accessibility and acceptance. Looking at Leichte Sprache and Einfache Sprache, we can easily see that while the former is very accessible, it is only used by a rather small group of people, whereas the latter is easily readable for most of us, but not as accessible.

Back to Machine Learning and the question of how we can automate text simplification

In the area of Natural Language Processing (NLP), text simplification has been a long-standing research topic and there are various approaches to making complex text more accessible.

We can classify these approaches into three categories:

- Rule-based approaches belong to the earliest methods and rely on linguistic rules according to which sentences are simplified. Sentence splitting and substituting complex terms with simpler synonyms are commonly used. Not only were these rules hand-crafted, but they also relied on dictionary-based lookups for the lexical substitutions. One possible strategy for lexical simplification could be as follows:

  Given an input sentence, it is first explicitly tokenized (i.e., broken down into its individual components such as words and punctuation marks) and then tagged with part-of-speech tags (i.e., each word is assigned a grammatical class such as verb, adjective, or adverb). Based on the words and their tags, synonyms are looked up in a thesaurus and used to replace the original word if the synonym occurs more frequently in a large text corpus than the original word. In order to maintain grammatical correctness, some further details must be taken into account. Note that this strategy does not consider the syntactic complexity of the sentence.

- Machine Learning Models can be used for text simplification by treating the problem as a translation task. This requires a parallel dataset with sentences in everyday language and their simplified versions. Then different neural network architectures can be used to learn the translation from one sentence to another. The expectation is that the model learns all the rules and operations explicitly defined in the rule-based approaches simply by being shown pairs of sentences in everyday language and simplified language.

- Lastly, Transformer-based Large Language Models like GPT-3, which better capture intricate language nuances by considering the context of a sentence, effortlessly produce coherent sentences. This is one of the reasons why they have quickly gained such popularity. They can also be used for text simplification, although a well-crafted prompt is required to achieve the desired level of simplification. Moreover, users must take special care to ensure that the simplification reflects the original content of the input sentence, i.e. remains factually correct.
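The rule-based strategy described above (tokenize, tag, look up synonyms, keep the more frequent word) can be sketched in a few lines. Everything in this example is a toy assumption: the part-of-speech lexicon, the thesaurus, and the frequency counts are made-up stand-ins for a real tagger, a real thesaurus, and corpus statistics, and the function names are hypothetical.

```python
import re

# Toy resources (hypothetical): a real system would use a trained POS tagger,
# a full thesaurus, and word frequencies counted on a large corpus.
POS_LEXICON = {"purchase": "VERB", "commence": "VERB", "residence": "NOUN"}
THESAURUS = {
    ("purchase", "VERB"): ["buy", "acquire"],
    ("commence", "VERB"): ["begin", "start"],
    ("residence", "NOUN"): ["home", "dwelling"],
}
FREQUENCY = {
    "purchase": 40, "buy": 900, "acquire": 60,
    "commence": 5, "begin": 500, "start": 800,
    "residence": 30, "home": 1000, "dwelling": 8,
}


def tokenize(sentence: str) -> list[str]:
    """Split a sentence into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def simplify(sentence: str) -> str:
    """Replace each word by its most frequent synonym, if one is more frequent."""
    output = []
    for token in tokenize(sentence):
        word = token.lower()
        tag = POS_LEXICON.get(word)
        best = word
        for candidate in THESAURUS.get((word, tag), []):
            if FREQUENCY.get(candidate, 0) > FREQUENCY.get(best, 0):
                best = candidate
        if best == word:
            output.append(token)  # nothing better found: keep the original token
        else:
            # Carry over the capitalization of the replaced word.
            output.append(best.capitalize() if token[0].isupper() else best)
    # Re-join the tokens and remove the space in front of punctuation marks.
    return re.sub(r"\s+([^\w\s])", r"\1", " ".join(output))


print(simplify("You can purchase a ticket at your residence."))
```

As in the description, the sketch only swaps individual words. It neither adjusts inflection to keep the sentence grammatical nor touches the sentence structure, which is exactly where hand-crafted rules become laborious.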

Which ML method is the most suitable and where can I get a data set in plain German?

To conclude this introduction to text simplification, it is clear that linguistic accessibility is an important concern for many groups in our society. Despite the ubiquity of ChatGPT and other Large Language Models in science and media, there are several advantages to using more traditional neural models such as Recurrent Neural Networks (RNNs), including Long Short-Term Memory networks (LSTMs). For example, they are usually smaller and easier to train from scratch. As mentioned above, by treating text simplification as a translation task, we can use well-established ML techniques. Consequently, we need a parallel dataset with sentence pairs in everyday language and simple language. And since we recognize the need for inclusive solutions, we must also point out that the existing datasets focus predominantly on English. Other languages are often neglected. To make the dataset landscape more diverse, we are introducing a new, aligned dataset for Simple German. Curious? In the following blog post we will discuss its creation process and focus on the technical side of sentence alignments. So stay tuned!

Here you can find our aligned dataset for Simple German: https://github.com/mlai-bonn/Simple-German-Corpus