Data is crucial for Machine Learning. Because of their potential impact on society, big data technologies have stirred significant excitement. Metaphorically, big data is the fuel for the rocket of Machine Learning: a rocket cannot fly without fuel. But if a rocket has enough fuel, can it reach any destination?

The syllogism, the simplest form of symbolic logical inference, is a formal representation of reasoning. How neural networks can execute syllogisms precisely remains an open question in Artificial Intelligence and Machine Learning research. Surprisingly, Machine Learning systems that learn from training data cannot learn all types of syllogisms, regardless of how much training data is available. Essentially, training data is used to establish associations between input and output. In syllogisms, however, learning one type of association weakens another. One workaround is to avoid learning associative relationships altogether and instead learn a representation of what the inputs mean.

Learning syllogisms with training data

A syllogism is a form of logical inference that always consists of two premises leading to a conclusion. The underlying principle is illustrated by the following example: given the two premises (1) all Greeks are humans and (2) all humans are mortal, we conclude that all Greeks are mortal.

Every statement in a syllogism takes one of four formats: (1) all X are Y, (2) no X is Y, (3) some X are Y, (4) some X are not Y. Combining these four formats across the two premises and the conclusion, together with the possible arrangements of the terms, yields 24 valid syllogism types. The question here is: can a Machine Learning system learn these 24 simple reasoning patterns, given sufficient training data?
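The count of 24 can be checked mechanically. The Python sketch below uses the traditional letters A, E, I, O for the four formats, enumerates all 4 × 4 × 4 format combinations for the two premises and the conclusion across the four classical figures (the four possible positions of the middle term M), 256 forms in total, and tests each one by brute force over every way of populating the seven regions of a three-set Venn diagram. It assumes that the 24 types are the classically valid moods, which presuppose that every term (S, M, P) is non-empty; the names `holds`, `models` and `valid_syllogisms` are illustrative and not taken from the publication.

```python
from itertools import product

TERMS = ("S", "M", "P")  # subject, middle term, predicate

# The seven non-empty regions of a three-set Venn diagram,
# each given as the set of terms whose circles contain it.
REGIONS = [frozenset(t for t, bit in zip(TERMS, bits) if bit)
           for bits in product((0, 1), repeat=3) if any(bits)]

def holds(form, x, y, inhabited):
    """Truth of a statement in one model (the list of inhabited regions)."""
    if form == "A":  # all X are Y
        return not any(x in r and y not in r for r in inhabited)
    if form == "E":  # no X is Y
        return not any(x in r and y in r for r in inhabited)
    if form == "I":  # some X are Y
        return any(x in r and y in r for r in inhabited)
    if form == "O":  # some X are not Y
        return any(x in r and y not in r for r in inhabited)
    raise ValueError(f"unknown form: {form}")

# Term order of (major premise, minor premise) in the four figures;
# the conclusion always relates S to P.
FIGURES = {1: (("M", "P"), ("S", "M")),
           2: (("P", "M"), ("S", "M")),
           3: (("M", "P"), ("M", "S")),
           4: (("P", "M"), ("M", "S"))}

def models(existential_import=True):
    """Yield every model; optionally keep only those where S, M, P are non-empty."""
    for mask in product((False, True), repeat=len(REGIONS)):
        inhabited = [r for r, on in zip(REGIONS, mask) if on]
        if existential_import and not all(
                any(t in r for r in inhabited) for t in TERMS):
            continue
        yield inhabited

def valid_syllogisms(existential_import=True):
    """Return valid forms as strings like 'AAA-1' (major, minor, conclusion, figure)."""
    all_models = list(models(existential_import))
    valid = []
    for figure, ((a, b), (c, d)) in FIGURES.items():
        for major, minor, conclusion in product("AEIO", repeat=3):
            # Valid: the conclusion holds in every model where both premises hold.
            if all(holds(conclusion, "S", "P", m) for m in all_models
                   if holds(major, a, b, m) and holds(minor, c, d, m)):
                valid.append(f"{major}{minor}{conclusion}-{figure}")
    return valid

print(len(valid_syllogisms()))                          # 24
print(len(valid_syllogisms(existential_import=False)))  # 15
```

Under these assumptions, `"AAA-1"` is the Greeks example (all humans are mortal, all Greeks are humans, so all Greeks are mortal). Dropping the non-emptiness assumption leaves 15 valid forms, which shows how much of the count depends on that presupposition.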

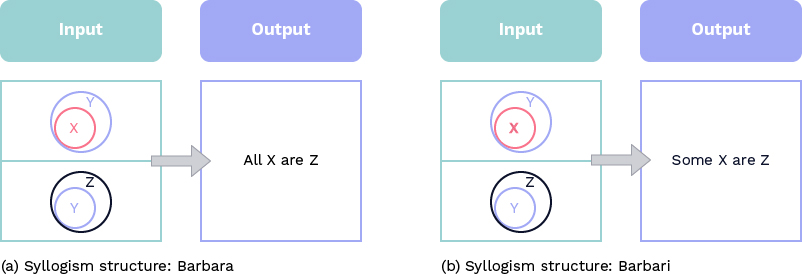

A supervised neural network with Euler diagrams as input and a symbolic vector as output.

To answer this question, researchers built a neural network and trained it on a large amount of data (see Abstract Diagrammatic Reasoning with Multiplex Graph Networks, Investigating diagrammatic reasoning with deep neural networks). In total, they trained the network on 88,000 syllogism tasks and achieved an accuracy of 99.8% on 8,000 new syllogism tasks. The image above shows the architecture of the network, which takes two Euler diagrams as input. The combinations of Euler diagrams are meant to exhaust the types of syllogisms and to associate each input with a logical inference. However, this method suffers from two limitations:

- Training data cannot associate an input with several consistent conclusions at the same time. Although the system knows that all Greeks are mortal, it remains unsure whether some Greeks are mortal.

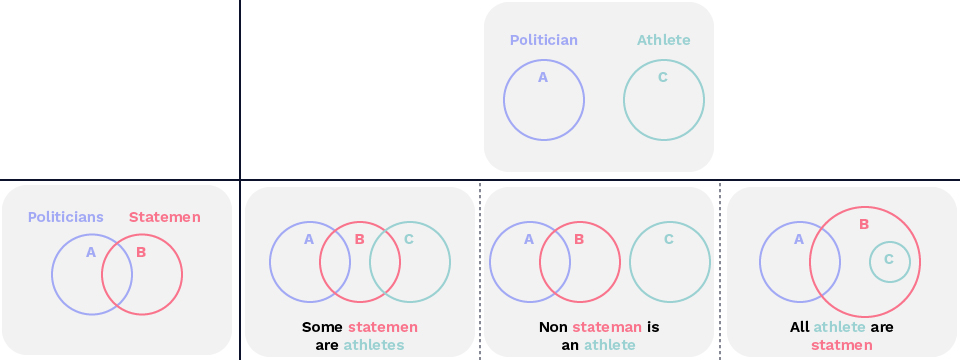

- A single combination of two Euler diagrams does not determine which assertion holds. Consider the following example: the graphical combination of “some politicians are statesmen” (overlap) and “no politicians are athletes” (separation) is compatible with three different assertions: (1) some statesmen are athletes, (2) no statesmen are athletes, (3) all athletes are statesmen. However, the correct syllogistic conclusion is: some statesmen are not athletes, because it holds in all three cases.

The graphical combination of “A overlaps B” and “A separates C” results in three possibilities between B and C: “B overlaps C,” “B separates C,” “C is part of B”.
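The politicians example can be replayed with ordinary Python sets. In this minimal sketch, the concrete members (plain integers) are hypothetical, chosen only so that each of the three Euler configurations from the figure is realized while both premises hold. A candidate conclusion survives only if it is true in every configuration. Checking three hand-picked configurations illustrates the argument; it is not a general validity proof.

```python
# Set-theoretic readings of the four statement formats.
def all_are(x, y):
    return x <= y          # every element of x is also in y

def none_are(x, y):
    return not (x & y)     # x and y share no element

def some_are(x, y):
    return bool(x & y)     # x and y share at least one element

def some_are_not(x, y):
    return bool(x - y)     # x has at least one element outside y

politicians = {1, 2}

# Hypothetical (statesmen, athletes) sets, one per Euler configuration.
configurations = {
    "statesmen overlap athletes": ({2, 3}, {3, 4}),
    "statesmen separate from athletes": ({2, 3}, {4, 5}),
    "athletes inside statesmen": ({2, 3, 4}, {4}),
}

candidates = {
    "some statesmen are athletes": lambda s, a: some_are(s, a),
    "no statesmen are athletes": lambda s, a: none_are(s, a),
    "all athletes are statesmen": lambda s, a: all_are(a, s),
    "some statesmen are not athletes": lambda s, a: some_are_not(s, a),
}

# Every configuration must satisfy both premises.
for statesmen, athletes in configurations.values():
    assert some_are(politicians, statesmen) and none_are(politicians, athletes)

# A conclusion is kept only if it holds in every configuration.
valid = [name for name, check in candidates.items()
         if all(check(s, a) for s, a in configurations.values())]
print(valid)  # ['some statesmen are not athletes']
```

Each of the first three candidates is true in one configuration and false in another, which is exactly why a single diagram combination cannot settle the conclusion.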

Learning syllogisms: training data alone is not enough

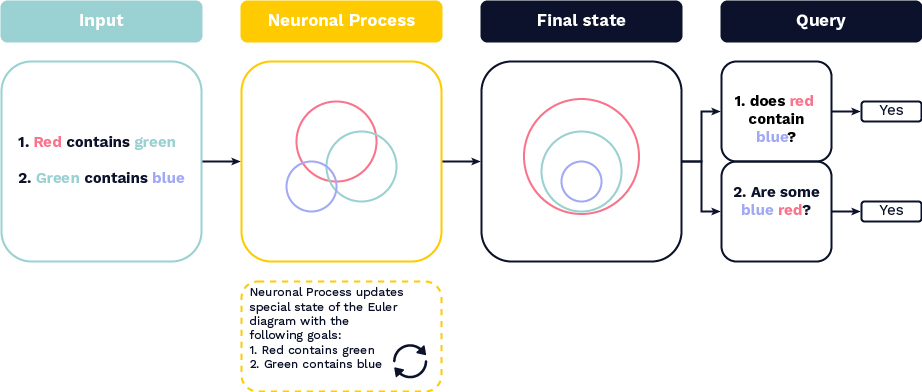

In contrast to the training data approach described above, we intuitively expect the two premises to contain enough information for the conclusion, so additional training data should not be necessary. To investigate this, we developed a new neural network that takes the two symbolic statements as input and constructs an Euler diagram for them. As the figure shows, the unsupervised network represents each entity in the statements as a sphere in high-dimensional space and learns an Euler diagram that accurately captures the meaning of the two inputs. The truth value of a conclusion is then determined by inspecting this Euler diagram. This leads to a novel Machine Learning method that embeds the meaning of the inputs instead of building associative relationships between input and output. In experiments, this neural network achieved 100% accuracy on 14,000 syllogism tasks covering all 24 types of syllogisms.

A new unsupervised neural network learns an Euler diagram as the representation of the meaning of inputs.
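The inspection step can be illustrated with balls instead of a trained network. Assuming each term is a ball with a center in n-dimensional space and a positive radius, the four statement formats reduce to two geometric tests: whether one ball lies inside another and whether two balls are disjoint. In the Python sketch below, the ball positions are chosen by hand in eight dimensions; in the approach described above they are learned by the unsupervised network, which this sketch does not attempt to reproduce. `Ball`, `inside`, `disjoint`, `shift` and `READINGS` are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Ball:
    """Hypothetical: one term as a ball with a center in R^n and a positive radius."""
    center: tuple
    radius: float

def inside(a, b):
    # a lies entirely within b: the farthest point of a from b's center is still in b
    return math.dist(a.center, b.center) + a.radius <= b.radius

def disjoint(a, b):
    # a and b share no interior point
    return math.dist(a.center, b.center) >= a.radius + b.radius

# The four statement formats, read off the ball configuration.
READINGS = {
    "all": lambda x, y: inside(x, y),
    "no": lambda x, y: disjoint(x, y),
    "some": lambda x, y: not disjoint(x, y),
    "some-not": lambda x, y: not inside(x, y),
}

def shift(point, axis, amount):
    """Move a point along one coordinate axis."""
    return tuple(v + amount if i == axis else v for i, v in enumerate(point))

dim = 8
origin = (0.0,) * dim
greeks = Ball(origin, 1.0)
humans = Ball(shift(origin, 0, 0.5), 2.0)
mortals = Ball(shift(origin, 0, 1.5), 4.0)

# Both premises are satisfied by this hand-made configuration ...
assert READINGS["all"](greeks, humans) and READINGS["all"](humans, mortals)

# ... and every candidate conclusion about Greeks and mortals is decided by inspection.
conclusions = {form: read(greeks, mortals) for form, read in READINGS.items()}
print(conclusions)  # {'all': True, 'no': False, 'some': True, 'some-not': False}
```

Because all conclusions are read off a single configuration, “all Greeks are mortal” and “some Greeks are mortal” are both true at once; neither weakens the other.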

But would a supervised neural network reach the same accuracy as our approach? The effectiveness of the method developed at the Lamarr Institute becomes evident when it is compared with other Machine Learning approaches to learning syllogisms. For instance, a supervised neural network developed by D. Wang, which relies on training data, achieved only 76% accuracy on the same 14,000 tasks. Error analysis shows that the training data cannot cover all 24 types of syllogisms. With such training data, the network can learn the rule “if all X are Y and all Y are Z, then all X are Z”, or it can learn the rule “if all X are Y and all Y are Z, then some X are Z”. Yet it cannot learn both conclusions simultaneously: the two associations weaken each other, as shown in the graphic below. Such conflicts do not occur in the unsupervised neural network developed in our study, because it can accurately represent all syllogistic structures.
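One way to see how two associations can weaken each other is a deliberately simplified model. Assume, hypothetically, that a supervised network outputs a single softmax distribution over candidate conclusions and is trained with cross-entropy; this is an assumption for illustration, not a description of D. Wang's architecture. If the same premise pair appears in the training data labelled sometimes with “all X are Z” and sometimes with “some X are Z”, the cross-entropy optimum for that input is the empirical label frequency. The Python sketch below runs plain gradient descent on the logits of that one input to show the effect.

```python
import math

# Hypothetical candidate conclusions for the premise pair "all X are Y, all Y are Z".
CONCLUSIONS = ["all X are Z", "some X are Z", "no X is Z", "some X are not Z"]

# Hypothetical single-label training data for that one input: half of the
# examples are labelled "all X are Z", the other half "some X are Z".
labels = [0, 1] * 50
targets = [labels.count(k) / len(labels) for k in range(len(CONCLUSIONS))]

def softmax(logits):
    m = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# For a single fixed input, the output reduces to one free logit per conclusion.
logits = [0.0] * len(CONCLUSIONS)
learning_rate = 0.5
for _ in range(2000):
    probs = softmax(logits)
    # Gradient of the mean cross-entropy with respect to the logits:
    # predicted probability minus empirical label frequency.
    logits = [z - learning_rate * (p - t) for z, p, t in zip(logits, probs, targets)]

probs = softmax(logits)
for name, p in zip(CONCLUSIONS, probs):
    print(f"{name}: {p:.2f}")
```

Both valid conclusions settle near 0.5 instead of 1.0, so neither can be asserted with confidence, even though both are valid whenever the terms are non-empty.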

We have seen that, regardless of how much training data is available, no supervised neural network can infer all 24 types of syllogisms. The proverbial fuel of Machine Learning alone therefore does not make every destination reachable. Instead, joint research suggests that unsupervised neural networks can precisely learn a semantic representation, provided that the representation has a spatial configuration. The unsupervised neural network we developed constructs a spatial structure for any syllogistic input, so the meaning of the inputs of all syllogisms can be learned. Here, Euler diagrams serve as the representation of meaning.

Correctly learned Euler diagrams guarantee correct syllogistic inference, and inaccurate diagrams explain the errors. Our experiments on syllogisms show that this unsupervised neural network nearly reaches the accuracy of symbolic logical inference. With this, we aim to offer a new perspective on trustworthy and explainable AI, creating structure where data alone is powerless.

For more information, refer to the associated publication:

T. Dong, C. Li, C. Bauckhage, J. Li, S. Wrobel, A. B. Cremers: Learning Syllogism with Euler Neural-Networks. 2020.