Deep neural networks are an essential component of autonomous driving, achieving unparalleled performance in a range of tasks, particularly image-based environmental perception. However, they are susceptible to random or intentional disturbances, which complicates their use in safety-critical applications. Such disturbances can be natural, such as overexposure, fog, or snow, or artificially and intentionally created, such as adversarial attacks.

Intentionally induced errors in predictions are usually obvious to human observers, because humans intuitively apply multiple rules based on their knowledge and experience to understand what is happening in a given scene. Neural networks in their current form aim to learn these rules implicitly from data. This requires collecting and annotating large amounts of data and carries the risk of learning spurious correlations. Integrating scene knowledge into the training or inference of neural networks appears to be a promising solution. However, this knowledge ranges from scene geometry to general knowledge and rules of human behavior, which makes its integration a complex challenge. There are not only several competing approaches to integrating knowledge but also multiple ways to represent the knowledge itself.

Probabilistic soft logic for knowledge representation

We investigate a method for representing knowledge in the form of relationships, expressed as logical rules over identified objects in a traffic scene, and analyze how these relationships can be used to enhance the robustness of data-driven environmental perception. While several existing lines of research use domain knowledge exclusively to build a model, our goal is to combine the data-driven approach with high-level knowledge within the inference process.

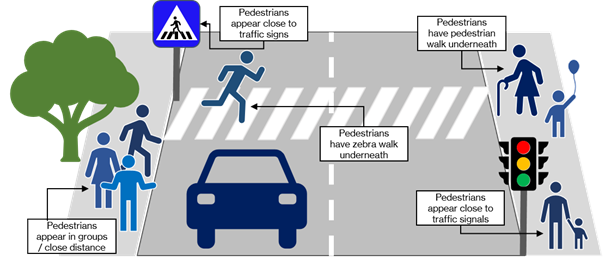

To understand and reason about traffic scenes, most people rely on relationships between objects. Assuming all objects are identified, shared knowledge describing both their behavior and their features can be expressed in the form of rules.

In various traffic scenes, humans typically use general and empirical knowledge about the behavior and features of objects to infer their types and relationships within the scene. This also holds for previously unseen scenarios. This general knowledge, covering different features and relationships of objects, can partly be encoded as a set of simple rules. However, the way humans think about their environment is not based on strict Boolean logic, in which rules are either true or false. An object with a crosswalk underneath it and near a traffic sign is highly likely to be a pedestrian, but it could also be another object, such as a dog or a construction-site obstacle. To integrate such high-level human knowledge into a neural network in the same "uncertain" manner, we need to define logical rules with "soft" truth values. For this purpose, we adapt the Probabilistic Soft Logic (PSL) framework to define and learn relationships between objects in the environment. We chose PSL as the framework for knowledge representation for two reasons:

- First, it allows the flexible use of soft truth values, suitable for defining both strict and loose rules.

- Second, its rule weights can be learned from data, which means that high-quality knowledge can be obtained directly from existing annotations.
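For reference, the standard PSL formulation (general background on the framework, not a detail reported for this study) relaxes logical connectives with Łukasiewicz logic, measures how far each weighted ground rule is from being satisfied, and combines these distances into a probability density over the unobserved atoms $\mathbf{y}$ (e.g., class atoms) given the observed atoms $\mathbf{x}$ (e.g., extracted predicates):

```latex
\begin{aligned}
a \,\tilde{\wedge}\, b &= \max(0,\; a + b - 1), \qquad
a \,\tilde{\vee}\, b = \min(1,\; a + b), \qquad
\tilde{\neg}\, a = 1 - a, \\
d_r(\mathbf{y}, \mathbf{x}) &= \max\bigl(0,\; I(\mathrm{body}_r) - I(\mathrm{head}_r)\bigr), \\
P(\mathbf{y} \mid \mathbf{x}) &= \frac{1}{Z(\mathbf{x})}
  \exp\Bigl(-\sum_{r} w_r \, d_r(\mathbf{y}, \mathbf{x})^{p_r}\Bigr),
  \qquad w_r \ge 0,\; p_r \in \{1, 2\}.
\end{aligned}
```

Here $I(\cdot) \in [0, 1]$ is the soft truth value of a formula, and the weights $w_r$ are what data-driven learning adjusts: a larger $w_r$ makes violations of rule $r$ less probable, while $p_r = 1$ or $p_r = 2$ selects linear or squared penalties.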

The overarching goal of using PSL for camera-based perception in autonomous driving is to utilize easily extractable parts of general knowledge about the traffic scene and represent them in a form that allows integration into neural network inference.

Steps for describing the environment as rules. An example of a rule for this frame with selected objects could be: "Stands_on_pedestrian_path ∧ Stands_near_traffic_lights → Class_is_pedestrian".
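To make the soft-logic reading of such a rule concrete, the following minimal Python sketch evaluates it under the Łukasiewicz semantics that PSL uses. The predicate values are invented, and the helper names `soft_and` and `distance_to_satisfaction` are hypothetical, not part of any PSL API.

```python
"""Evaluate one PSL-style ground rule with Lukasiewicz soft logic.

Hypothetical example: the predicate names mirror the rule in the text,
but all truth values below are made up for illustration.
"""


def soft_and(*values: float) -> float:
    """Lukasiewicz conjunction: max(0, sum(values) - (n - 1))."""
    return max(0.0, sum(values) - (len(values) - 1))


def distance_to_satisfaction(body: float, head: float) -> float:
    """A rule body -> head is satisfied when head >= body; the gap is the penalty."""
    return max(0.0, body - head)


# Soft observations for one object (hypothetical values in [0, 1]).
stands_on_pedestrian_path = 0.9
stands_near_traffic_lights = 0.8

body = soft_and(stands_on_pedestrian_path, stands_near_traffic_lights)

# Two candidate truth values for Class_is_pedestrian.
print(round(body, 2))                                 # 0.7
print(round(distance_to_satisfaction(body, 0.3), 2))  # 0.4 -> rule partly violated
print(distance_to_satisfaction(body, 0.9))            # 0.0 -> rule satisfied
```

Unlike Boolean logic, a weak observation (say, the object is only partly on the crosswalk) lowers the body's truth value and therefore lowers how strongly the rule pushes toward the pedestrian class.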

First, however, we need to identify the objects we want to reason about, as well as their relationships, which are encoded as selected predicates. Then, we define a set of rules containing additional relational knowledge about the domain of interest. This knowledge is characterized by not being covered by the annotated data, and it can thus be used to support and validate the perception task. When defining an initial set of rules, the objects to be connected must be chosen carefully: describing their behavior and extracting them from raw data should both be simple.

For our study, we selected pedestrians, traffic signs, and traffic signals as the three object types whose classes we want to infer. We use an oracle to extract object entities, thereby ignoring the additional challenges arising from unreliable object extraction. The oracle is emulated by the connected components of the ground-truth segmentation, with additional smoothing to prevent objects from being split apart by occlusions.
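One way such a connected-component oracle could look is sketched below in plain Python. The grid format, the `gap` parameter (same-class pixels closer than `gap` are treated as connected, a crude stand-in for the smoothing step), and the `min_size` filter are assumptions for illustration, not the study's actual implementation.

```python
"""Sketch of an object 'oracle' from a ground-truth segmentation map.

Assumptions (not from the original study): the map is a 2D list of integer
class IDs, and small gaps are bridged by treating same-class pixels within
`gap` pixels (Chebyshev distance) as connected, a crude stand-in for the
smoothing step mentioned in the text.
"""
from collections import deque


def extract_objects(seg, class_id, gap=1, min_size=1):
    """Return a list of objects, each a sorted list of (row, col) pixels."""
    rows, cols = len(seg), len(seg[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or seg[r0][c0] != class_id:
                continue
            # Breadth-first search over same-class pixels within the gap radius.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in range(-gap, gap + 1):
                    for dc in range(-gap, gap + 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and not seen[nr][nc] and seg[nr][nc] == class_id):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            if len(pixels) >= min_size:
                objects.append(sorted(pixels))
    return objects


PED = 1
seg = [
    [0, 1, 0, 0, 0],
    [0, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],   # one-pixel occlusion splits the first pedestrian
    [0, 1, 0, 0, 0],
]
print(len(extract_objects(seg, PED, gap=1)))  # 3: the occlusion splits an object
print(len(extract_objects(seg, PED, gap=2)))  # 2: the gap is bridged
```

A larger `gap` merges fragments more aggressively but also risks fusing two nearby objects of the same class, which is one reason the oracle is an idealization of real object extraction.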

We develop an initial set of rules whose weights (i.e., how likely each rule is to hold) we refine later. We base the logical inference of an object's class on semantic features, the object's surroundings, and its relationships to other objects. The surroundings are described by observations such as what is below or behind the object. We also integrate color as a semantic feature, as it is particularly important for traffic signs and signals; the rules using the color predicate are, for example, inspired by the officially established color combinations of common German traffic signs. Relationships between objects are expressed as distance values derived from depth measurements.
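The kinds of observations described above can be turned into soft predicate values in [0, 1]. The sketch below uses hypothetical helpers `stands_on`, `near`, and `red_fraction`, and assumes a linear distance falloff, 3D centroids already computed from depth, and illustrative hue thresholds; none of these choices are taken from the study.

```python
"""Hypothetical soft predicates for one object, in the spirit of the text.

Assumptions: segmentation is a 2D list of class IDs, an object is a list of
(row, col) pixels, 3D object centroids (metres) were already computed from
depth, and colours are RGB tuples in [0, 255]. Thresholds are illustrative.
"""
import colorsys
import math


def stands_on(seg, pixels, target_classes):
    """Fraction of the object's columns whose pixel just below it is a target class."""
    lowest = {}
    for r, c in pixels:
        lowest[c] = max(r, lowest.get(c, r))
    below = [seg[r + 1][c] for c, r in lowest.items() if r + 1 < len(seg)]
    if not below:
        return 0.0
    return sum(cls in target_classes for cls in below) / len(below)


def near(centroid_a, centroid_b, max_dist=10.0):
    """Soft closeness: 1 when co-located, decaying linearly to 0 at max_dist metres."""
    return max(0.0, 1.0 - math.dist(centroid_a, centroid_b) / max_dist)


def red_fraction(rgb_pixels, min_sat=0.5, min_val=0.3):
    """Fraction of pixels whose hue is red (hue wraps around 0) and saturated."""
    def is_red(rgb):
        h, s, v = colorsys.rgb_to_hsv(*(ch / 255.0 for ch in rgb))
        return (h < 0.05 or h > 0.95) and s >= min_sat and v >= min_val
    if not rgb_pixels:
        return 0.0
    return sum(is_red(p) for p in rgb_pixels) / len(rgb_pixels)


CROSSWALK, SIDEWALK = 2, 3
seg = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 2, 3, 0],
]
pedestrian = [(0, 1), (0, 2), (1, 1), (1, 2)]
print(stands_on(seg, pedestrian, {CROSSWALK, SIDEWALK}))  # 1.0
print(near((1.0, 0.0, 12.0), (4.0, 0.0, 16.0)))           # 0.5
print(red_fraction([(220, 20, 30), (255, 255, 255)]))     # 0.5
```

Returning fractions rather than yes/no decisions keeps the predicates compatible with soft truth values, so partial evidence (e.g., a sign that is only half red because of overexposure) still contributes proportionally.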

In the final stage, the knowledge obtained from the probabilistic rules must be combined with the predictions of the neural network, in this case semantic segmentation masks. For our conceptual demonstration, we follow a simplified approach in which the network's outputs are integrated as prior assumptions into the rule-based framework.
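A minimal sketch of this simplified integration for a single object follows. It assumes mutually exclusive classes, network scores entering through a hypothetical prior rule `NetPred(O, C) -> Class(O, C)`, squared hinge potentials, and a projected-gradient solver standing in for PSL's own optimizer; the weights and scores are invented.

```python
"""Hypothetical MAP inference for one object's class, combining network
priors with knowledge rules (PSL-style squared hinge potentials).

Assumptions: classes are mutually exclusive (the truth values form a
probability simplex), the network's scores enter through a prior rule
NetPred(O, C) -> Class(O, C), and projected gradient descent stands in
for PSL's own optimizer.
"""


def project_to_simplex(v):
    """Euclidean projection onto {y : y >= 0, sum(y) = 1} (sort-based method)."""
    u = sorted(v, reverse=True)
    cumsum, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        cumsum += ui
        t = (cumsum - 1.0) / i
        if ui - t > 0:
            theta = t
    return [max(0.0, vi - theta) for vi in v]


def map_inference(prior, rules, w_prior=1.0, lr=0.1, steps=500):
    """prior: {class: score}; rules: list of (weight, body_truth, head_class)."""
    classes = list(prior)
    y = [1.0 / len(classes)] * len(classes)
    for _ in range(steps):
        grad = [0.0] * len(classes)
        for k, c in enumerate(classes):
            # d/dy of w * max(0, b - y)^2 is -2 * w * max(0, b - y).
            grad[k] -= 2.0 * w_prior * max(0.0, prior[c] - y[k])
            for w, body, head in rules:
                if head == c:
                    grad[k] -= 2.0 * w * max(0.0, body - y[k])
        y = project_to_simplex([yk - lr * gk for yk, gk in zip(y, grad)])
    return dict(zip(classes, y))


# Hypothetical network scores, e.g. under fog, that favour "traffic_sign".
net = {"pedestrian": 0.3, "traffic_sign": 0.6, "traffic_light": 0.1}
# Body truth 0.7 from Stands_on_pedestrian_path AND Stands_near_traffic_lights.
rule = (2.0, 0.7, "pedestrian")

print({c: round(v, 2) for c, v in map_inference(net, []).items()})
# {'pedestrian': 0.3, 'traffic_sign': 0.6, 'traffic_light': 0.1}
print({c: round(v, 2) for c, v in map_inference(net, [rule]).items()})
# {'pedestrian': 0.6, 'traffic_sign': 0.4, 'traffic_light': 0.0}
```

Without the rule, the MAP state reproduces the network scores; with it, the soft scene evidence shifts the decision toward `pedestrian`. The relative weights of the prior rule and the knowledge rules control how far the rules may override the network.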

Overall, the practical approach can be described as follows:

- Identification of objects to infer about and extraction of these objects from sensor data (oracle using ground-truth semantic segmentation)

- Construction of the rule set using expert and general knowledge

- Preparation of required information from lower-level sensors for rule inference (depth, colors)

- Learning the weighting of the rules using a training set of recorded camera images

- Performing inference with the neural network and rules on the test set of images for validation, possibly using prior network outputs
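The weight-learning step in this list can be illustrated with a deliberately small example: one target atom per object, squared hinge potentials, and a perceptron-style update that approximates the likelihood gradient by comparing rule violations in the current MAP state with those in the annotation. All names, data, and hyperparameters are hypothetical, and PSL's own learners differ in detail.

```python
"""Hypothetical sketch of learning PSL-style rule weights from annotations.

Assumptions: each object has one target atom y = Class_is_pedestrian(O) in
[0, 1]; rule r contributes w_r * max(0, body_r - y)^2; a fixed-weight negative
prior contributes w_neg * y^2; and weights follow the perceptron-style update
w_r += lr * (phi_r(MAP state) - phi_r(ground truth)), an approximation of the
likelihood gradient. Rule names and data are invented for illustration.
"""


def phi(body, y):
    """Squared hinge distance to satisfaction of the rule body -> y."""
    return max(0.0, body - y) ** 2


def map_state(bodies, weights, w_neg=1.0, iters=60):
    """Minimise the 1-D convex energy by bisection on its non-decreasing slope."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        slope = 2 * w_neg * mid - 2 * sum(
            w * max(0.0, b - mid) for w, b in zip(weights, bodies))
        if slope > 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2


def learn_weights(data, n_rules, lr=0.5, epochs=50):
    """data: list of (rule_bodies, label); returns non-negative rule weights."""
    weights = [1.0] * n_rules
    for _ in range(epochs):
        for bodies, label in data:
            y_map = map_state(bodies, weights)
            for r, body in enumerate(bodies):
                step = lr * (phi(body, y_map) - phi(body, label))
                weights[r] = max(0.0, weights[r] + step)
    return weights


# Bodies of two hypothetical rules per object:
# [path_and_lights -> pedestrian, noisy_rule -> pedestrian], label in {0, 1}.
train = [([0.9, 0.1], 1), ([0.8, 0.7], 1), ([0.1, 0.8], 0), ([0.0, 0.6], 0)]
w_good, w_noisy = learn_weights(train, n_rules=2)
print(w_good > 1.0 > w_noisy)  # True: the informative rule gains weight
```

The update raises a rule's weight when the current model violates it more than the annotation does, and lowers it when the annotation itself violates the rule, which is how rules that do not match the training data lose influence.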

We evaluated the described setup on images with natural distortions. Initial experiments show the feasibility of the described pipeline and its positive impact on the robustness of segmentation predictions.
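One common way to obtain such distortions is to synthesize them. The sketch below assumes grey-value images stored as nested lists, the standard atmospheric-scattering fog model with depth-dependent transmission, and overexposure modeled as a gain followed by clipping; the function names and parameters are illustrative and are not the settings used in the evaluation.

```python
"""Hypothetical generators for two natural distortions (fog, overexposure).

Assumptions: images are 2D lists of grey values in [0, 255]; fog follows the
atmospheric-scattering model I = J*t + A*(1 - t) with transmission
t = exp(-beta * depth); overexposure is a gain followed by clipping. The
parameters are illustrative, not the settings used in the study.
"""
import math


def add_fog(image, depth, beta=0.05, airlight=255.0):
    """Blend each pixel toward the airlight, more strongly for distant pixels."""
    fogged = []
    for img_row, depth_row in zip(image, depth):
        row = []
        for j, d in zip(img_row, depth_row):
            t = math.exp(-beta * d)  # transmission falls off with distance
            row.append(j * t + airlight * (1.0 - t))
        fogged.append(row)
    return fogged


def overexpose(image, gain=2.0):
    """Scale intensities and clip to the valid range, saturating bright regions."""
    return [[min(255.0, p * gain) for p in row] for row in image]


image = [[100.0, 200.0]]
depth = [[0.0, 20.0]]  # metres
print([[round(p, 1) for p in row] for row in add_fog(image, depth)])  # [[100.0, 234.8]]
print(overexpose(image))  # [[200.0, 255.0]]
```

Because the fog model uses depth, distant objects such as traffic signs lose contrast first, which is precisely where scene rules based on position and surroundings can compensate for degraded appearance cues.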

Conclusion

By using human knowledge, we draw information from various sources: from sensor inputs, such as the color of an object, to scene properties, such as relationships and distances between objects. This approach allows us to incorporate additional information into the predictions of data-driven models and to improve their reliability through logical inference.

This study demonstrates the benefits that shared knowledge can have for the reliability of a machine learning system. At this early stage, we have neglected the tedious step of identifying the entities that the PSL framework reasons about. In practice, this information must be gathered from various, most likely not entirely reliable, sources, such as additional sensors (e.g., LiDAR) or alternative processing methods. Another important step is involving human experts in rule formulation. The rules used in this work are formulated from general knowledge about driving situations and environments, but both more specific rules and rules that adjust a particular aspect of recognition require several iterations during their construction.