Random numbers are essential for many applications in the field of Machine Learning (ML). They are needed for stochastic optimization procedures, to sample data points from the distributions of generative models, or simply to initialize the parameters of artificial neural networks. The initialization of such a network forms the starting point of parameter optimization and significantly impacts how long the model needs to be trained and how effective it will be afterward. Therefore, a reliable source of high-quality random numbers is an important tool, not only in the field of Artificial Intelligence but also in many other areas of natural sciences, such as encryption methods and simulations.

But what does “high-quality” mean here? Intuitively, random seems to be random: A series of numbers is random if you cannot predict which number will come next. However, one can make various statements about all previously seen numbers and thus make assumptions about the properties of the yet unseen numbers. If you flip a coin a million times, you will observe that about 500,000 flips show heads and about as many show tails. If you assign heads = 1 and tails = 0, the average of all flips will be very close to 0.5, and this value will get more accurate the more times you flip the coin. If, however, you observe that the average consistently lies at 0.4 – meaning tails appear significantly more often than heads – you can assume that the coin is biased, or that another factor influences the outcome, such as the height of the flip or the starting position of the hand. Statistics offer methods to quantify such deviations from an expected distribution, such as distance measures like the Kullback-Leibler divergence or the Hellinger distance, or through statistical tests like the Kolmogorov-Smirnov test.

Pseudorandom numbers

When trying to generate random numbers using a computer, one faces a problem: A computer is a deterministic machine that executes fixed sequences of commands. It is not possible to generate true random numbers with such a machine since these numbers are produced by a program and are therefore by definition not random but predetermined by the program. To still generate numbers that behave like random numbers, computers contain programs that use special algorithms to produce seemingly random numbers – so-called pseudorandom numbers. Starting with a number as the initial state – also known as a “seed” – these seemingly random numbers are derived from the current internal state through sequences of arithmetic and bit operations. Although they look like true random numbers and possess many of their properties, they can be deterministically reproduced with knowledge of the initial state of the program. In particular, pseudorandom number generators produce only a finite sequence of different numbers before the initial state is reached again, and they start to repeat. The length of the pseudorandom sequence generated by an algorithm before it repeats is an important characteristic of its quality and is called the “period.” Well-known and frequently used algorithms include Xorshift and Mersenne-Twister, the latter having a period of 2^19937 − 1, a number with 6001 digits.

Quantum random numbers

Quantum computers are currently in the spotlight of research. The ideas on how to leverage the effects of quantum mechanics for Machine Learning are creative and diverse. While many researchers attempt to embed entire ML models into quantum computers using the gate model, others are exploring alternative approaches to enhance classical ML methods with quantum effects.

The key property that distinguishes a qubit (quantum bit) from a classical bit is that a qubit can take on not only the states 0 and 1 (the so-called basis states) but also infinitely many mixed states. Such a mixed state |ψ〉 is called a superposition and can be described by the expression |ψ〉 = α|0〉 + β|1〉. Here, |0〉 and |1〉 denote the basis states, and α and β are complex numbers with the property |α|² + |β|² = 1. The so-called Born rule from quantum mechanics states that a qubit in state |ψ〉 will take on the basis state 0 with probability |α|² and the basis state 1 with probability |β|² = 1 − |α|². We see that measuring a quantum state behaves very similarly to a coin flip, only that the probability for heads or tails is variable over the parameters α and β. If we set α = β = 1/√2, then the quantum state corresponds exactly to a fair coin flip, and we observe both basis states with a probability of 50%. This state, also known as |+〉, is particularly easy to produce in the gate model of quantum computing since it arises from applying the Hadamard gate H – one of the fundamental gates – to a qubit in the basis state |0|.

By repeatedly putting a qubit into this state and measuring it, we obtain a series of binary random numbers, such as a sequence like 10011100110111010110. . . Since these numbers are generated by quantum mechanical effects that cannot be explained or simulated by classical physics, they can be used as a random source for the aforementioned applications, such as initializing neural networks. Scientists hope that the high quality of the random numbers will allow machine learning models to be better initialized and thus trained faster and to a higher quality.

Quantum randomness in practice

This hope seemed to be confirmed recently when researchers from Birmingham used quantum random numbers to initialize several models, including neural convolutional networks. They observed that the models achieved slightly higher accuracy on various classification datasets compared to initialization with pseudorandom numbers.

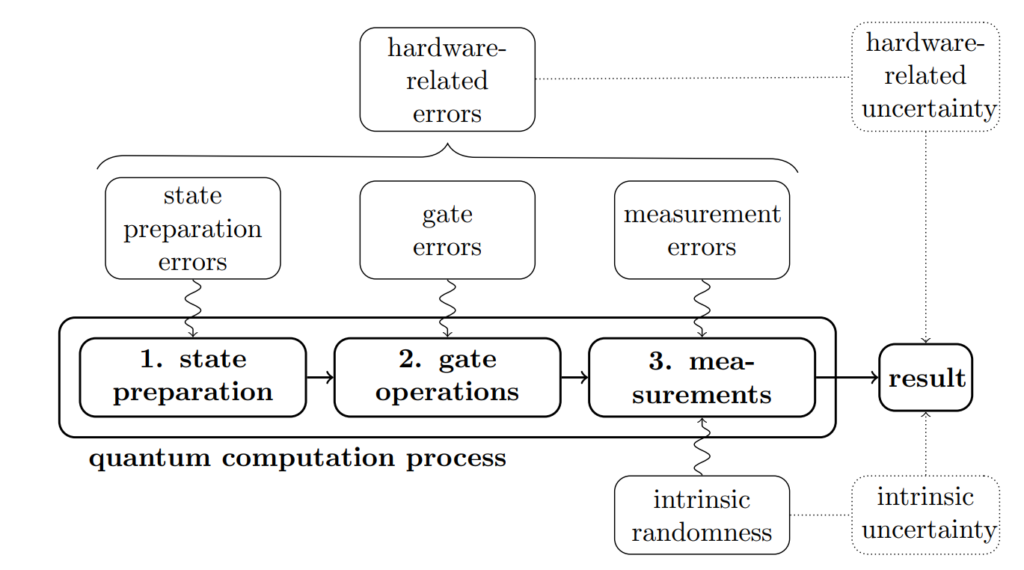

Although quantum computers are being intensely researched and developed, today’s devices do not perfectly replicate the previously described mathematical quantum computing model. Qubits are represented using various physical mechanisms, such as microwave radiation, superconducting materials, or photons. There are many sources of error that can distort the result [Fig. 1]. In their current research, scientists from ML2R (now the Lamarr Institute) investigated whether the results from Birmingham could be reproduced and statistically validated. To this end, they used an IBM quantum computer to generate a large number of quantum random numbers between 0 and 1 by applying the Hadamard gate and measuring many qubits.

Various sources of errors that can distort or falsify the expected result of an experiment on a quantum computer.

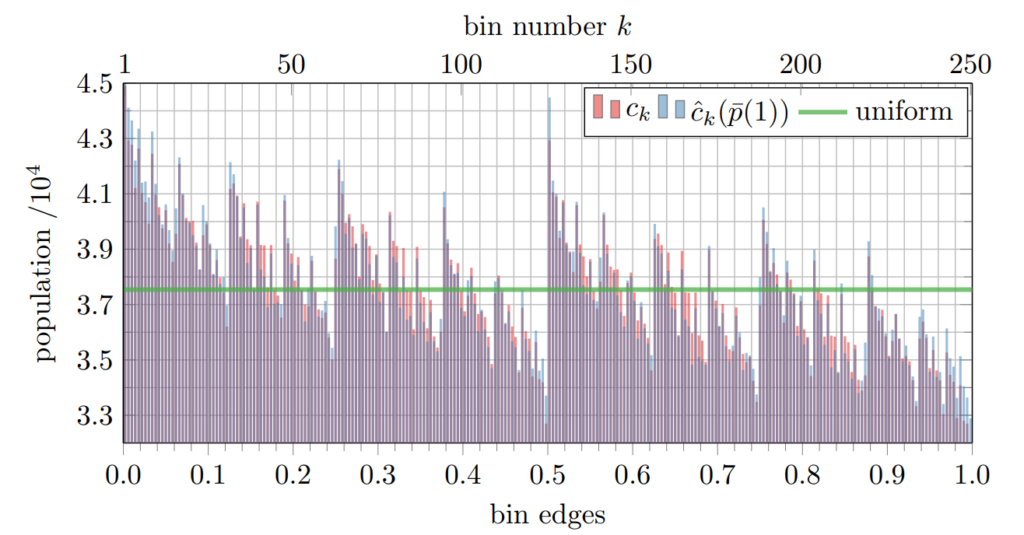

They found that the numbers generated in this way are not completely random but rather follow a specific distribution, as shown in [Fig. 2]. Clearly, there are numbers that occur much more frequently than others, which contradicts a uniform distribution one would expect from completely random numbers. This distribution arises when each bit does not have a 50% probability of being 1, but rather slightly less: An average probability of 48.88% was measured. It turns out that quantum random numbers – at least when naively generated according to the ideal mathematical model – are significantly less random than those generated by pseudorandom number generators.

Observed distribution of random numbers generated on an IBM quantum computer (red bars). The green line shows a uniform distribution, as would be expected for perfect random numbers. The blue bars show a simulation of the distorted quantum random numbers generated by pseudorandom numbers.

In addition to these self-generated random numbers, the researchers also used publicly available random numbers generated from an optical quantum computer, which are of significantly higher quality. Both with these and the self-generated quantum random numbers, no significant effect on the training of ML models was observed in direct comparison to pseudorandom numbers. Thus, the researchers were able to show that – at least for the model classes used – a quantum random source does not lead to the observed better performance in learning.

Conclusion

Potential applications of quantum effects for Machine Learning are diverse. Due to their inherently stochastic behavior, qubits can be used as a source of (true) random numbers. An initial hope that these numbers would lead to better results in training ML models was disproven. This is likely due to the high quality of modern pseudorandom number generators and the technical hurdles of quantum computing, which only allow a distorted realization of the theoretically perfectly random numbers.

There is still hope that these distorted distributions could lead to new insights: If it turns out that the initialization of certain model classes with specific distributions leads to significantly better performance, this could be used to replicate such distributions with pseudorandom numbers. The ML2R (now the Lamarr Institute) researchers have already shown that this is possible in principle by simulating the distribution from [Fig. 2] without quantum computing. However, as long as quantum computers are as error-prone in terms of the quality of their outputs as they are today, it cannot be argued that the “true physical randomness” of qubits alone has a measurable impact. The quest for a breakthrough in quantum computing in Machine Learning continues.

More information in the associated paper:

On the effects of biased quantum random numbers on the initialization of artificial neural networks

Raoul Heese, Moritz Wolter, Sascha Mücke, Lukas Franken, Nico Piatkowski, 2021, PDF