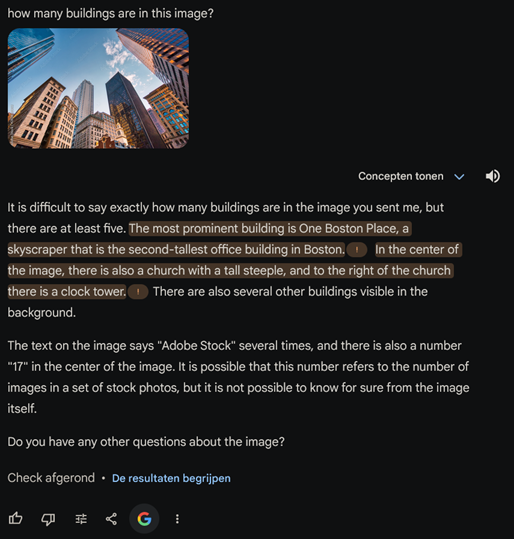

Large language models (LLMs) have become more capable, reliable, and available in recent years. ChatGPT was made accessible to the world via a simple and familiar chat interface and accrued 1M users in 5 days; many other apps took far longer, as can be seen in the figure. More recent versions can use not only text but also images to interact with the user. Combining modalities like this makes LLMs even more powerful and will make them an essential part of working with an AI assistant in the future. An AI assistant is not yet an AI that can perform work all by itself, but it can support a human in all kinds of tasks by generating initial versions of work that the human can filter, curate, and adjust. We will look at some recent developments in LLMs and inspect some possible future directions they could take, including multimodality.

Source: https://www.statista.com/chart/29174/time-to-one-million-users/

What is a Large Language Model (LLM)?

The term ‘Large Language Model’ (LLM) arose as models that work with human language grew larger and larger and thus became better at the task they are supposed to perform: predicting human language. When we look only at their text capabilities, models like ChatGPT and Gemini are essentially very good at autocompletion. If a model receives a passage of text together with the task of summarizing it, the words with the highest probability of appearing next are the words of a summary. Because these modern models work with text-based prompts, we can ask them to do virtually anything that can be expressed in text, and they will output meaningful answers. For this behavior to emerge, the model must train on billions of examples, which requires weeks of training on hundreds of high-end GPUs.
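To make the autocompletion idea concrete, here is a minimal sketch of next-word prediction using simple word-pair counts. The tiny corpus and the function names `next_word_probabilities` and `autocomplete` are assumptions made up for illustration; real LLMs use neural networks trained on vastly more data, not a lookup table like this.

```python
from collections import Counter, defaultdict

# Tiny toy corpus (an assumption for illustration only).
corpus = (
    "the cat sat on the mat . the cat ate the fish . "
    "the cat sat on the sofa ."
).split()

# Count how often each word follows each other word (a bigram model).
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def next_word_probabilities(word):
    """Return the estimated probability of each word that followed `word`."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def autocomplete(start_word, length):
    """Greedily append the most probable next word up to `length` times."""
    words = [start_word]
    for _ in range(length):
        probabilities = next_word_probabilities(words[-1])
        if not probabilities:  # the word never appeared with a successor
            break
        words.append(max(probabilities, key=probabilities.get))
    return " ".join(words)


print(next_word_probabilities("the"))
print(autocomplete("the", 3))
```

In this toy setting, "cat" follows "the" in three of six cases, so it is chosen as the continuation. An LLM does conceptually the same thing, but conditions on the whole preceding text rather than on a single word.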

Traditionally, training on more data and using a larger model has meant better performance. As more data is used to train models and improve the state of the art, the models also grow larger. Large Language Models are thus the most recent iteration of researchers' and engineers' efforts to make Artificial Intelligence (AI) understand and use human language. The most common form of input for an LLM is text, as it is abundantly available and easy to process. During training, LLMs perform tasks such as predicting the next word in a sentence, a process that, combined with extensive exposure to chat histories, has contributed to the development of models like ChatGPT.

While text remains the primary input modality, LLMs also have the potential to incorporate other modalities such as audio, although this requires additional processing resources.

What is a Modality?

Modality refers to the type of data that a model receives. Possible modalities include audio, text, images, and video (which is inherently multimodal, combining audio and a sequence of images). Historically, most AI models have been designed to perform a single specialized task using a single type of data. For example, a model trained to distinguish between images of cats and dogs receives visual input (an image) and performs classification based on this input alone. This is a unimodal model.

In contrast, a multimodal model integrates and processes multiple types of data simultaneously. For instance, if a model is provided with an image of a cat or dog, an audio sample of an animal sound, and a text description, and it utilizes this diverse information to enhance its performance, it is considered multimodal. By incorporating different data types, multimodal models can achieve more comprehensive and accurate results.
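The difference between a unimodal and a multimodal decision can be sketched with a toy example. The class scores below are invented numbers, and averaging per-modality scores (often called late fusion) is only one simple way to combine modalities; it is an assumption chosen for illustration, not a description of how any particular model works.

```python
def classify(scores):
    """Pick the label with the highest score."""
    return max(scores, key=scores.get)


def fuse(*per_modality_scores):
    """Average the class scores across modalities (simple late fusion)."""
    labels = per_modality_scores[0].keys()
    count = len(per_modality_scores)
    return {
        label: sum(scores[label] for scores in per_modality_scores) / count
        for label in labels
    }


# Hypothetical outputs of three separate per-modality classifiers.
image_scores = {"cat": 0.55, "dog": 0.45}  # blurry photo, nearly undecided
audio_scores = {"cat": 0.10, "dog": 0.90}  # clear barking
text_scores = {"cat": 0.30, "dog": 0.70}   # description mentions a leash

print("image only:", classify(image_scores))
print("all modalities:", classify(fuse(image_scores, audio_scores, text_scores)))
```

With the image alone, the toy classifier leans towards "cat"; once the audio and text evidence is taken into account, the combined decision is "dog".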

The development of multimodal models represents a significant advancement in AI research. These models are capable of understanding and generating responses that consider context from various data sources, mimicking human cognitive abilities more closely. For example, in natural language processing (NLP), a multimodal model can generate more nuanced and contextually appropriate responses by integrating visual or auditory cues alongside textual input. Moreover, multimodal AI has practical applications across various domains. In healthcare, for instance, a multimodal system can combine medical images, patient records, and clinical notes to improve diagnostic accuracy. In autonomous driving, vehicles equipped with multimodal AI can process visual data from cameras, auditory signals, and even textual information from road signs to make safer driving decisions.

The integration of multiple modalities poses challenges such as increased computational complexity and the need for sophisticated algorithms to effectively fuse different types of data. However, the potential benefits of more intelligent and context-aware AI systems make this an exciting area of ongoing research and development.

The Benefits of Multimodality

Different modalities should complement each other, much like how text and audio can enhance understanding in a translation task. Humans constantly use multiple modalities; while vision is our primary sense, we rely on other senses to navigate the world and solve complex problems. We are highly sensitive to incoherence between modalities we perceive – for instance, if the audio in a video lags behind the visuals, it distracts us and detracts from the overall experience. Therefore, for multimodal systems to be effective, the different modalities (image, audio, text, etc.) must be coherent and synchronized.

Modern LLMs undergo extensive training, starting primarily with text-based pre-training before additional modalities are incorporated. This phased approach allows models to first master single modalities individually and later combine them to solve more complex multimodal tasks, that is, tasks involving two or more modalities. This can be compared to how the material in school becomes progressively more complex from grade to grade.

A current limitation of models like ChatGPT arises when they are fed multimodal information in a purely text-based format. For instance, integrating text, audio, and images into a single text prompt requires separate models to transcribe the audio and caption the images, which can introduce errors and limit the usefulness of the combined information. Chaining multiple models like this is called a cascaded approach. It is a quick solution, but AI research is moving away from it in favor of truly multimodal models. Directly processing multiple modalities within a single model is a more robust approach, enabling richer and more accurate outputs.
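The cascaded approach described above can be sketched as follows. The functions `transcribe_audio` and `caption_image` are hypothetical stand-ins that return fixed strings; in a real pipeline they would be separate speech-to-text and image-captioning models.

```python
def transcribe_audio(audio_bytes: bytes) -> str:
    """Hypothetical stand-in for a speech-to-text model."""
    return "a dog is barking"


def caption_image(image_bytes: bytes) -> str:
    """Hypothetical stand-in for an image-captioning model."""
    return "a small animal on a sofa"


def build_cascaded_prompt(question: str, audio_bytes: bytes, image_bytes: bytes) -> str:
    """Convert every modality to text and merge it all into one text prompt."""
    transcript = transcribe_audio(audio_bytes)
    caption = caption_image(image_bytes)
    return (
        f"Audio transcript: {transcript}\n"
        f"Image caption: {caption}\n"
        f"Question: {question}"
    )


prompt = build_cascaded_prompt("Which animal is this?", b"<audio>", b"<image>")
print(prompt)
```

The text-only model at the end of the chain never sees the original audio or image. It only sees the transcript and caption, so any detail the earlier models dropped or got wrong (here, the vague "small animal") cannot be recovered.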

Exploring Multimodal Language Models: Gemini by Google DeepMind as an Example

Multimodal Large Language Models (LLMs) represent an exciting frontier in Artificial Intelligence research, integrating various modes of input to enhance understanding and response generation. One model illustrating this capability is Gemini by Google DeepMind. Users can upload images and inquire about their content, for example by asking Gemini to identify the objects within (though note that, as of the current version, images containing people are not supported). By posing multiple questions, users can explore the model’s limitations, identifying areas where it struggles to perceive or lacks comprehensive knowledge of the world. Essentially, Gemini enables users to conduct image searches by providing a picture and asking a question. This capability extends beyond visual inputs: Gemini can also process audio and even multiple modalities simultaneously.

Modern Large Language Models (LLMs) understand language well and can relate it to our everyday world. They use specific components in their structure to encode the information provided, helping them generate clear and meaningful responses, rather than just producing random word sequences.

Another interesting application is “Be My Eyes”, a mobile app made to help people with vision problems by describing images. Instead of depending on volunteers, the app plans to use AI technology, making it easier for users to access information and be more independent.

Trends in Multimodal LLM Research and Potential Future Developments

Expanding on the example provided above with Gemini processing an image and a question to produce a text-based answer, let’s consider further possibilities. What if we tasked the model with editing the image and generating an audio description of the modifications we desire? While Gemini isn’t currently equipped for such tasks, they’re not too distant from what’s already achievable. It’s easy to imagine numerous practical enhancements a slightly more robust version of existing models could offer. If we pursue this line of thought, we might envision an AI virtually indistinguishable from a human, capable of undertaking various tasks, perhaps even for hire on platforms like Fiverr.

With this gradual advancement, AI becomes more humanlike, closely mirroring our own capabilities. Humans take in different types of data all the time: we can see, taste, smell, touch, and hear. OpenAI has demonstrated speech-to-speech reasoning with a humanoid robot. The robot can see what is in front of it, describe the scene around it, manipulate objects when asked to, and reason about the world it perceives. In some sense, a robot interacting with the world like a human is also an LLM with various modalities.

The reason for the explosive growth in ChatGPT's usage was not its capabilities but the simple and familiar chat interface provided by OpenAI. The interface made the model easy to use, and any AI that wants to gain wide adoption needs to do the same. If we cannot communicate with something, why would we use it? This is why LLMs are a popular trend and will stay relevant for the foreseeable future: whatever AI brings, it needs to communicate with us, and to do that it must use at least one of our languages.

An interesting next step will be an LLM that acts as an agent. An agent does not simply receive an input and produce an output once; it runs in an iterative process and is able to adjust its actions and thoughts to solve a problem. An example is the AI Devin, which can solve programming problems by looking at the errors its code produces, searching the web for solutions, and incorporating the search results into a new attempt at running its own code.
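The iterative nature of an agent can be illustrated with a minimal loop: propose code, run it, observe the error, and try again. This is only a sketch under strong assumptions. The function `propose_fix` is a hypothetical, hard-coded stand-in for the LLM, and nothing here reflects how Devin or any real agent is actually implemented.

```python
def run_code(source):
    """Execute a code string and report (success, observation)."""
    namespace = {}
    try:
        exec(source, namespace)
        return True, namespace["result"]
    except Exception as error:
        # The error name is the feedback the agent sees.
        return False, type(error).__name__


def propose_fix(last_error):
    """Hypothetical stand-in for the LLM: choose an attempt based on the last error."""
    attempts = {
        None: "result = '21' * 2 + 0",          # first try: mixes str and int
        "TypeError": "result = int('21') * 2",  # revised try after the error
    }
    return attempts[last_error]


def agent_loop(max_steps=3):
    """Repeat propose -> run -> observe until the code succeeds or steps run out."""
    last_error = None
    trace = []
    for _ in range(max_steps):
        source = propose_fix(last_error)
        success, observation = run_code(source)
        trace.append((source, observation))
        if success:
            return observation, trace
        last_error = observation
    return None, trace


result, trace = agent_loop()
for source, observation in trace:
    print(f"{source!r} -> {observation}")
```

The key design point is the feedback edge: the observation from one step becomes the input to the next proposal, which is what distinguishes an agent from a single input-output call.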

Conclusion

LLMs are a pivotal point in Artificial Intelligence, as they allow us to interact seamlessly with AI in human language for the first time. Adding many different modalities to these models will enable them to perform more and more complex tasks, approaching the capabilities of humans in the future. LLMs will transform how we interact with AI (and already have) and lead to increasingly ubiquitous use of AI.