Introduction to Multilingual Instruction-Tuning

In the rapidly evolving landscape of large language models (LLMs), instruction-tuning on multilingual data has emerged as a key area of focus. This study was conducted within the OpenGPT-X project, which aims to develop a European multilingual LLM tailored to the needs of businesses and research. It explores the impact of multilingual instruction-tuning on polyglot model performance and addresses three main challenges:

- Data Availability: Where can we get multilingual instruction-tuning data?

- Data Composition: How to instruction-tune a multilingually pre-trained model?

- Model Evaluation: How to evaluate these models effectively?

This led us to the key problem statement:

Given a multilingual model, that is, a model whose pre-training data contained a large fraction of non-English documents, what language composition of conversations works best to enable the model to follow instructions across languages?

Creating Multilingual Datasets for Instruction-Tuning

With this central question in mind, the need for datasets in all targeted languages became evident. The Bactrian-X dataset offered a great quasi-parallel resource, built from GPT-3.5’s responses to translated instructions. However, the LIMA paper, which was highly discussed at that time, proposed the Superficial Alignment Hypothesis: a small but high-quality dataset suffices for a model to learn to follow instructions. The LIMA authors ensured high quality by curating the dataset manually. We therefore created and published Lima-X by translating the LIMA instructions and answers, obtaining a parallel dataset for English, German, Spanish, French, and Italian. For a fair comparison, we also down-sampled Bactrian-X, resulting in the dataset Bactrian-X-small.
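Down-sampling a quasi-parallel dataset only keeps it parallel if the same samples are kept in every language. The following is a minimal sketch of that idea, not the procedure actually used for Bactrian-X-small. It assumes each record carries an id shared across languages. The function name `downsample_parallel`, the toy data, and the seed are hypothetical; the target size of 1,030 is the Bactrian-X-small size reported in the experimental setup.

```python
import random


def downsample_parallel(dataset, k, seed=0):
    """Draw k sample ids once and keep exactly those ids in every language."""
    # Only ids present in all languages can stay aligned after sampling.
    shared_ids = set.intersection(*(set(records) for records in dataset.values()))
    rng = random.Random(seed)
    chosen = rng.sample(sorted(shared_ids), k)
    return {
        lang: {sample_id: records[sample_id] for sample_id in chosen}
        for lang, records in dataset.items()
    }


# Toy stand-in for a quasi-parallel dataset: language -> {sample id -> text}.
toy = {
    lang: {i: f"{lang}-instruction-{i}" for i in range(5000)}
    for lang in ["en", "de", "fr", "it", "es"]
}
small = downsample_parallel(toy, k=1030)
```

Drawing the ids once, rather than sampling per language, is what keeps the smaller dataset aligned across languages.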

Experimental Setup and Training Strategy

Given these parallel and semantically parallel datasets, we carefully designed an experimental setup to compare different dataset compositions for effectiveness in multilingual training. The selected datasets allow us to investigate different aspects:

- Impact of Dataset Nature: Comparing the manually curated dataset (Lima-X) with the synthetically generated dataset of the same size (Bactrian-X-small).

- Impact of Dataset Size: With Bactrian-X-small containing 1,030 samples and the full Bactrian-X dataset comprising 64,000 samples, we can assess how increasing the volume of data drawn from the same distribution affects cross-lingual performance.

- Impact of Language Composition: Assessing monolingual datasets versus multilingual, semantically parallel dataset compositions like ENDEFRITES (English (EN), German (DE), French (FR), Italian (IT), and Spanish (ES)) and semantically non-overlapping dataset compositions like ENDEFRITES-sampled, which ensure a fair comparison with monolingual datasets.

- Impact of Predominant Language (English): Investigating the influence of including or omitting English in the datasets (comparing ENDEFRITES with DEFRITES and ENDEFRITES-sampled with DEFRITES-sampled), as English is the dominant language during pre-training.

- Impact of Model Size: The experiments were conducted using two model checkpoints: a 7B parameter OpenGPT-X checkpoint trained on 1 trillion tokens, and the larger 8x7B Mixtral model.
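The language compositions named in the list above can be illustrated with a small sketch. It rests on assumptions: the parallel dataset is modeled as a mapping from language code to a list of samples aligned by index, and the "sampled" variants are built by assigning each sample to exactly one language in equal shares, so the mixture has the same size as a monolingual dataset. The exact sampling scheme used in the study is not described here, and the names `build_compositions` and `toy` are hypothetical.

```python
import random

LANGS = ["EN", "DE", "FR", "IT", "ES"]


def build_compositions(parallel, seed=0):
    """parallel: language code -> list of samples, aligned by index."""
    n = len(parallel["EN"])
    order = list(range(n))
    random.Random(seed).shuffle(order)

    # Monolingual compositions: one dataset per language.
    comps = {lang: list(parallel[lang]) for lang in LANGS}

    for name, langs in (("ENDEFRITES", LANGS), ("DEFRITES", LANGS[1:])):
        # Full mixture: every sample appears in every included language.
        comps[name] = [parallel[lang][i] for lang in langs for i in range(n)]
        # Sampled mixture (assumed scheme): every sample appears once,
        # with languages assigned round-robin over a shuffled order.
        comps[f"{name}-sampled"] = [
            parallel[langs[pos % len(langs)]][i] for pos, i in enumerate(order)
        ]
    return comps


# Toy parallel data: each sample is a (language, index) pair.
toy = {lang: [(lang, i) for i in range(20)] for lang in LANGS}
comps = build_compositions(toy)
```

Under these assumptions the full mixtures are four or five times the size of a monolingual dataset, while the sampled mixtures match it, which is what makes the comparison with monolingual tuning size-controlled.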

We trained each model on every combination of dataset and language composition listed above, except for Mixtral-8x7B on the full Bactrian-X dataset, because the available compute resources did not suffice to train the large model on the large dataset. This results in 45 different models to compare.
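The count of 45 models can be reproduced by enumerating the grid. The sketch below assumes nine language compositions (five monolingual, ENDEFRITES, DEFRITES, and their two sampled variants), three datasets, and two base models, with Mixtral-8x7B on the full Bactrian-X excluded. The labels are shorthand used for illustration only.

```python
from itertools import product

models = ["24EU-7B", "Mixtral-8x7B"]
datasets = ["Lima-X", "Bactrian-X-small", "Bactrian-X"]
compositions = [
    "EN", "DE", "FR", "IT", "ES",
    "ENDEFRITES", "DEFRITES",
    "ENDEFRITES-sampled", "DEFRITES-sampled",
]

# Every model/dataset/composition combination, minus the runs that
# would train the large model on the large dataset.
runs = [
    (model, dataset, composition)
    for model, dataset, composition in product(models, datasets, compositions)
    if not (model == "Mixtral-8x7B" and dataset == "Bactrian-X")
]

print(len(runs))  # 2 * 3 * 9 = 54 combinations, minus 9 excluded = 45
```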

Evaluation Methodology

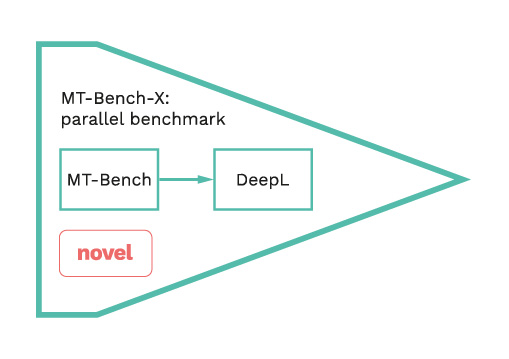

Addressing the challenge of effective evaluation in a multilingual context, we adopted the de facto standard at that time, MT-Bench, and extended it to create MT-Bench-X. The original dataset consists of 80 two-turn questions, with ten examples across eight categories:

- Math

- Coding

- Reasoning

- Extraction

- Writing

- Roleplay

- STEM

- Humanities

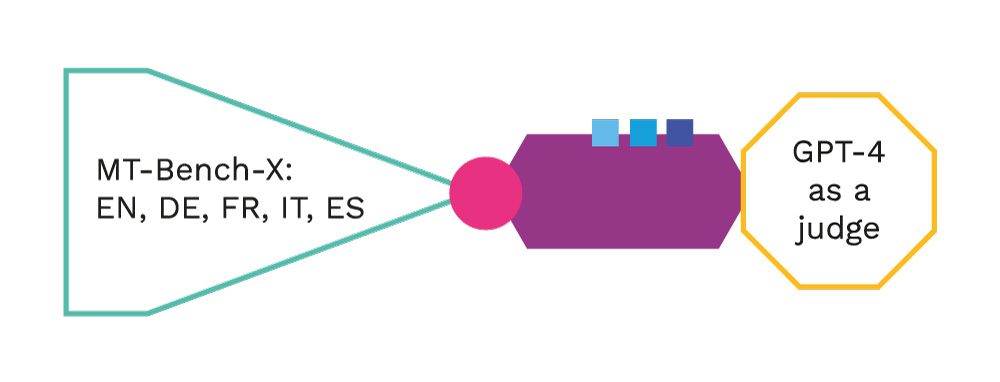

To extend this dataset to multiple languages, we translated the questions and references from English into German, French, Italian, and Spanish, adding 320 examples. This formed the new evaluation benchmark, MT-Bench-X, specifically designed for multilingual instruction-tuning. Given the importance of proper evaluation, and recognizing that human evaluation is the gold standard, we manually checked and curated the translations. Additionally, we conducted a human evaluation study for German to correlate the scores of MT-Bench-DE with human judgment scores.

The MT-Bench family of benchmarks uses the LLM-as-a-judge concept, i.e., a powerful LLM is asked to rate the open-ended answers generated by the model under evaluation. For complex questions, a reference answer is provided to the LLM-as-a-judge.
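To make the LLM-as-a-judge idea concrete, here is a minimal sketch of how a judge prompt might be assembled and how a numeric rating might be read back from the judge's reply. The prompt wording, the 1 to 10 scale, and the `[[rating]]` output convention are assumptions chosen for illustration, not the actual MT-Bench-X prompts. The helper names `build_judge_prompt` and `parse_rating` are hypothetical, and the call to the judge model itself is left out.

```python
import re


def build_judge_prompt(question, answer, reference=None):
    """Assemble a single-answer grading prompt (illustrative wording only)."""
    parts = [
        "Please act as an impartial judge and rate the assistant's answer "
        "on a scale from 1 to 10. End with the rating in the form [[rating]].",
        f"[Question]\n{question}",
    ]
    # A reference answer is only included for questions that need one,
    # for example math or coding questions.
    if reference is not None:
        parts.append(f"[Reference Answer]\n{reference}")
    parts.append(f"[Assistant's Answer]\n{answer}")
    return "\n\n".join(parts)


def parse_rating(judgment):
    """Extract the number inside [[...]]; return None if no rating is found."""
    match = re.search(r"\[\[(\d+(?:\.\d+)?)\]\]", judgment)
    return float(match.group(1)) if match else None


prompt = build_judge_prompt("Was ist 12 * 12?", "Das Ergebnis ist 144.", reference="144")
rating = parse_rating("The answer is correct and concise. Rating: [[9]]")
```

Returning `None` for replies without a parsable rating keeps such cases visible instead of silently counting them as a score.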

Results and Analysis

We evaluated all model variants trained on the different language mixtures in all languages supported by MT-Bench-X, which led to the following results.

Monolingual Evaluation

The results in the figures above show that models trained on monolingual datasets in languages like German (Bactrian-DE), Italian (Bactrian-IT), and Spanish (Bactrian-ES) underperform compared to the multilingual Bactrian-ENDEFRITES model. At the same time, Bactrian-EN stands out, likely due to the higher volume of English data in its pre-training. Interestingly, Bactrian-FR outperforms Bactrian-ENDEFRITES in French benchmarks. Overall, no clear advantage is observed for models tuned on the fully parallel mixed-language data in monolingual evaluation. Models tuned on Bactrian-X-small show similar trends, but at a lower overall level than those tuned on Bactrian-X.

Mixtral-8x7B models, being larger, consistently achieve higher scores than the 24EU-7B models stemming from the OpenGPT-X checkpoint. While instruction-tuning on cross-lingual datasets tends to improve monolingual performance for some models, this effect remains inconsistent across languages when using mixed-language fine-tuning strategies like DEFRITES or ENDEFRITES. Overall, there is no clear pattern in monolingual performance.

Cross-lingual Evaluation

When comparing model performance across evaluation languages by the average MT-Bench-X scores, a clear pattern emerges: cross-lingual instruction-tuning takes the lead. The following figure examines this trend more closely. It subtracts the monolingual performance of models trained on monolingual data compositions from the cross-lingual performance of models trained on multilingual data, and shows that cross-lingual tuning outperforms monolingual approaches in most cases.

The Figure compares the performance of models trained on parallel language mixtures versus monolingual datasets.

Each bar represents the percentage improvement of a multilingual instruction-tuned model ((EN)DEFRITES, (EN)DEFRITES-sampled) compared to a monolingual model (EN, DE, FR, IT, ES) on the dataset variant (BX: Bactrian-X, BXs: Bactrian-X-small, LX: Lima-X).
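The quantity behind each bar can be sketched as a simple difference of scores. The document equates 9.9% with 0.99 points, which is consistent with expressing the difference as a share of a 10-point scale; that reading is an assumption here, and the two input scores below are hypothetical values picked only so that their difference is 0.99.

```python
def improvement(multilingual_score, monolingual_score, scale_max=10.0):
    """Return the gain in points and as a percentage of the score scale."""
    points = multilingual_score - monolingual_score
    percent = 100.0 * points / scale_max
    return points, percent


# Hypothetical average scores for a multilingual and a monolingual model.
points, percent = improvement(6.24, 5.25)
print(f"{points:.2f} points = {percent:.1f}% of the scale")
# prints "0.99 points = 9.9% of the scale"
```

A negative result would correspond to a bar below zero, i.e. the monolingual model scoring higher.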

Key Observations

- Multilingual Tuning Enhances Performance: For 24EU-7B-based models, multilingual tuning with ENDEFRITES-sampled boosts the performance of Lima-X and Bactrian-X-small, but not Bactrian-X. Models trained on DEFRITES-sampled data perform worse than their monolingual counterparts, probably due to smaller sample sizes. Mixtral-8x7B models show consistent gains from parallel instruction-tuning, with few exceptions.

- Dataset Size Matters: Larger datasets, like full-sized ENDEFRITES and DEFRITES, improve performance across languages, particularly for Bactrian-X and Lima-X.

- Inclusion of English is Beneficial: Including the dominant pre-training language (ENDEFRITES vs. DEFRITES) enhances tuning outcomes. Down-sampled variants underperform, likely due to lower signal-to-noise ratios in Bactrian-X.

- Model Size Influences Results: Larger models like Mixtral-8x7B consistently achieve higher scores, with few exceptions.

Mixtral-8x7B’s larger size likely compensates for noise in smaller datasets like Lima-X, with maximum performance gains of up to 9.9% (0.99 points) for Lima-ENDEFRITES versus Lima-IT.

Overall, fine-tuning on parallel data improves multilingual instruction-following for mid- to large-sized models, outperforming monolingual training.

Synthetic vs. Curated Training Datasets

To isolate the impact of the dataset nature, we down-sampled the synthetic Bactrian-X datasets to match the size of the human-curated Lima-X datasets, creating Bactrian-X-small. As shown in Figure 10, the synthetic Bactrian-X datasets outperformed Lima-X at both dataset scales. A clear trend emerges when comparing models tuned on Lima-X with models tuned on Bactrian-X-small in Figure 9: cross-lingual performance improves with parallel, Bactrian-X-based instruction-tuning. These findings highlight the advantages of using synthetic, semantically parallel datasets for multilingual tuning.

Challenging the Superficial Alignment Hypothesis

We explored the Superficial Alignment Hypothesis, which suggests that only a few examples per task are needed to teach a model to follow instructions. However, our findings challenge this notion. The following Figure shows that:

- Mid-Size Models Require Extensive Data: Models of the same scale tuned on Bactrian-X-small consistently outperform those tuned on the curated Lima-X datasets.

- Larger Models Show Different Trends: With the larger Mixtral-8x7B model, we see high performance across both synthetic and curated data, implying that the Superficial Alignment Hypothesis may hold better for larger models or models with more advanced pre-training.

Human Evaluation Insights

We extended the findings of Zheng et al. (2023) for German and analyzed the similarities and differences between human evaluation and evaluation with GPT-4 as a judge. We identify disparities between human evaluations and those generated by GPT-4 in multilingual chat scenarios. More details can be found in our paper.

Key Takeaways

- Parallel Datasets Enhance Cross-Lingual Performance: Our findings highlight the benefits of instruction-tuning on parallel datasets, showing improvements of up to 9.9% in cross-lingual instruction-following ability compared to monolingual corpora.

- Synthetic Data Outperforms Curated Data: Even when down-sampled to the size of the curated Lima-X, the synthetic Bactrian-X datasets consistently outperformed Lima-X, highlighting the advantage of synthetic, semantically parallel datasets for improving cross-lingual performance in multilingual tuning.

- Superficial Alignment Hypothesis Challenged: Our results show that extensive instruction-tuning datasets are necessary for mid-sized multilingual models but not necessarily for larger models, thus challenging the generalizability of the Superficial Alignment Hypothesis.

- New Resources Published: We publish the novel multilingual training resource Lima-X and the multilingual evaluation resource MT-Bench-X, contributing valuable tools to the NLP community.

Future Work & Limitations

While our study provides valuable insights into multilingual instruction-tuning of large language models (LLMs), it has certain limitations. We focused on exploring multilingual instruction-tuning techniques rather than pushing for state-of-the-art performance. Additionally, our research was confined to Germanic and Italo-Western languages, leaving the generalizability to other language families untested.

Future work should aim to extend instruction-tuning methodologies across a broader range of languages to enhance the global performance of multilingual LLMs. Investigating multilingual multi-turn datasets could offer deeper insights into complex instruction-following capabilities. Moreover, improving the cost-efficiency and accuracy of automatic multilingual evaluation methods will significantly benefit the Natural Language Processing (NLP) community by making advanced evaluation more accessible.