Our world is home to more than 7,000 languages and an even greater number of dialects and accents, reflecting the extraordinary cultural and linguistic diversity of human societies. Yet this richness is unevenly distributed: a small number of languages are spoken by the vast majority of the global population, while most languages have far fewer speakers and many face the risk of extinction.

The map above shows that most South-East Asian countries and many African countries are rich in linguistic diversity. Greenberg’s well-known linguistic diversity index (LDI), the probability that two people selected at random from a population have different mother tongues, has been shifting on a global scale as more and more languages become extinct. But the picture is not so straightforward. Language classification can be subjective, and different scholars may group languages and dialects differently. For example, some consider Hindi and Urdu to be two separate languages, while others consider them to be dialects of a single language, Hindustani.
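To make the index mentioned above concrete, here is a minimal sketch of how Greenberg’s diversity index is commonly computed: one minus the sum of the squared population shares of each language. The speaker counts below are hypothetical and only serve as an illustration, and the sketch assumes each person has exactly one mother tongue.

```python
def greenberg_diversity_index(speaker_counts):
    """Return 1 - sum(p_i ** 2), where p_i is the share of speakers of language i.

    This is the probability that two people drawn at random (with replacement)
    from the population have different mother tongues.
    """
    total = sum(speaker_counts.values())
    if total <= 0:
        raise ValueError("speaker_counts must contain at least one speaker")
    return 1.0 - sum((count / total) ** 2 for count in speaker_counts.values())


# Hypothetical populations, for illustration only (not real census data).
monolingual_region = {"language_a": 1000}
diverse_region = {
    "language_a": 250,
    "language_b": 250,
    "language_c": 250,
    "language_d": 250,
}

print(greenberg_diversity_index(monolingual_region))  # 0.0
print(greenberg_diversity_index(diverse_region))      # 0.75
```

A value near 0 means almost everyone shares one mother tongue, while a value near 1 means two randomly chosen people almost never do. How languages and dialects are grouped changes the counts, which is one reason the index is sensitive to classification choices.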

Overall, while it’s difficult to provide an exact number, there are likely tens of thousands of languages, dialects, and accents spoken around the world, reflecting the rich linguistic diversity of human societies. More information and data representation about languages such as country-wise data, number of living vs. extinct languages and different language families in the world can be found here: Ethnologue.

Over the last three decades, Natural Language Processing (NLP) has shifted from rule-based methods to data-driven and deep learning approaches — yet most research and commercial tools focus on just 20 of the world’s 7,000 languages. The rest are classified as low-resource languages (LRLs), often hindered by limited datasets, scarce computational resources, and complex dialectal variations.

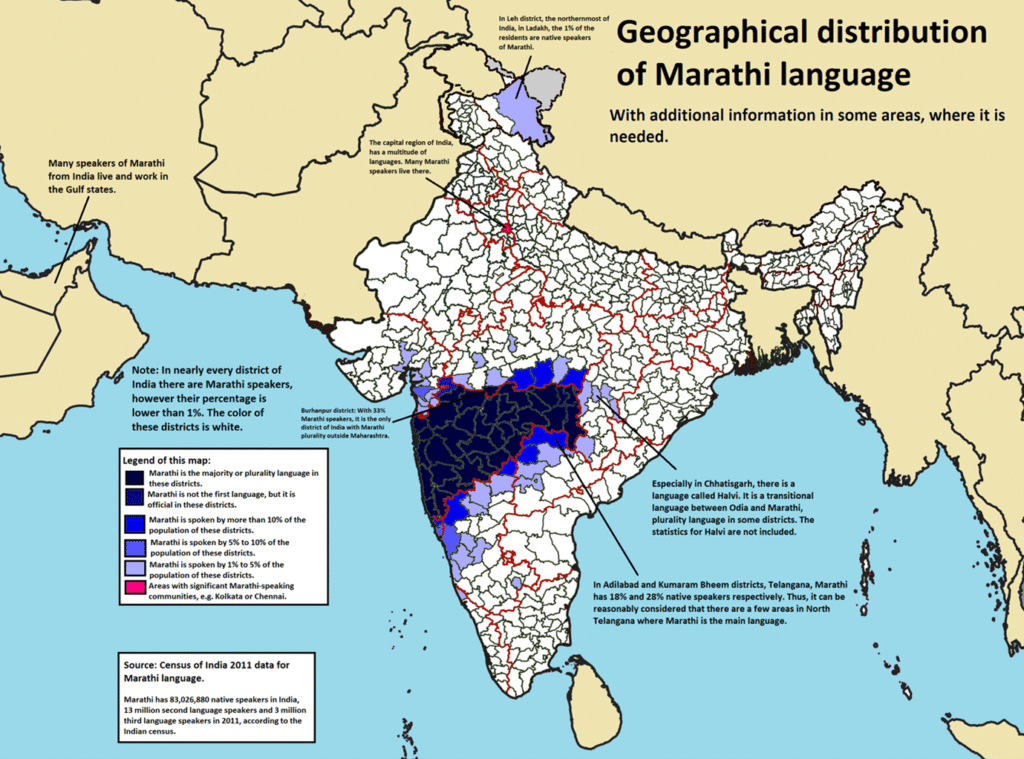

This blog post covers the challenges and opportunities of building ASR systems for low-resource languages, exploring linguistic diversity, data scarcity, and the role of model fine-tuning in bridging this gap. Using Marathi as a case study, we will discuss why supporting LRLs is both a technological challenge and a cultural responsibility.

A Personal Perspective: Growing Up Multilingual in a Low-Resource Language Context

I grew up in a small city in India, a country with diverse cultures and a long history of various civilizations. Today, with over 22 official (institutional) languages and about 424 living indigenous languages, a person growing up in such a place is highly likely to be bilingual or multilingual. My mother tongue is Marathi; however, I acquired proficiency in both English and Hindi through my education. English and/or Hindi can serve as common languages across many states and regions in the country. I have always enjoyed engaging with various languages and their colloquial nuances, and I am often intrigued by foreign scripts encountered during my travels.

This curiosity grew into a deeper interest in investigating the importance of low-resource languages in technology.

This post draws upon both my technical expertise and personal experience to address the development of more inclusive Automatic Speech Recognition (ASR) systems for globally underrepresented languages such as Marathi. It explores the implications of classifying a language as “low resource” and examines how this designation affects its representation in digital technologies. Marathi is one of several Indian languages frequently discussed in this context, alongside Assamese, Bengali, Gujarati, Kannada, Urdu, Malayalam, Punjabi, Tamil, Oriya, and Telugu. Nevertheless, linguistic research and computational development at leading Indian universities over the past decade, combined with increased funding from both governmental and private entities driven by the global expansion of Artificial Intelligence, have contributed to substantial enrichment of Natural Language Processing data for many of these languages (see papers & links: [1], [2], [3], [4], [5]).

However, as we transition to the broader technological context, the reality of low-resource languages surfaces when one compares their tooling with the state-of-the-art models and tools that work accurately for English, French, Spanish, and similar languages.

In Natural Language Processing (NLP), effective human-computer interaction depends on the availability of extensive digital data. Languages that lack extensive digital resources, such as large volumes of annotated ASR data including text and audio, are classified as Low Resource Languages (LRLs). This scarcity is typically due to historical and structural factors rather than the inherent characteristics of these languages. Within India’s digital landscape, Hindi and Tamil have substantial representation in speech tools, interfaces, and ASR datasets. Urdu and Bengali (Bangla), although they originated and developed over a long period in India, are also national languages of neighboring countries and have therefore expanded their digital footprint in the global market more quickly. Marathi, despite its rich literary history and more than 85 million speakers, often has limited data availability and limited support for digitizing its linguistic resources. Existing voice assistants and ASR systems continue to face challenges such as mispronunciations and inaccurate transcripts. Even advanced ASR models, like Whisper, trained on multilingual benchmarks, encounter difficulties with dialectal diversity and script-specific features. Consequently, interactions with technology in Marathi may not be as effective or seamless as those in English or Hindi.

Recap: What We Know About Fine-Tuning ASR

In the first part of this blog series, we looked at how fine-tuning ASR models works conceptually. We saw that pre-trained speech recognition models can be adapted with domain-specific data to particular domains, accents, languages, and environments, without the need to train from scratch. Using an analogy with the universe’s fine-tuned parameters, we emphasized the critical role this process plays in making speech technology effective for diverse and localized applications, from clinical environments to regional dialects.

As we have come to know, fine-tuning allows ASR models to be more adaptable and accurate, particularly in challenging scenarios, such as recognizing heavy accents or children’s speech. The blog post also highlighted key methods and the advantages of fine-tuning in creating cost-effective, domain-specific solutions.

Building on that foundation, this second part takes the discussion a step further.

We’ll dive deeper into the challenges that arise when fine-tuning ASR models, especially for low-resource languages. We’ll look at the unique difficulties posed by limited data and regional dialects and explore strategies to overcome these obstacles, making ASR technology more inclusive and effective in underrepresented languages. This leads to the critical question: how much is the real ‘data’ cost involved in this? Let’s find out…

Why ASR for Low-Resource Languages Matters: Access, Equity, and Everyday Use

Beyond its established use in live recognition and video captioning, Automatic Speech Recognition (ASR) is often regarded as a convenience: a way to speed up note-taking or enable hands-free communication. However, for numerous communities, particularly those in regions with low literacy rates or limited digital fluency, voice serves as an important interface for accessing technology. In other words, for many people, voice is the gateway to the digital world.

For example, millions of individuals in today’s “Digital India” depend on spoken instructions and regional voice assistants to utilize services related to healthcare, education, and emergency response.

When speech-based systems are unable to accurately interpret these languages, they risk becoming inaccessible and unusable for those who rely on them most. Within these contexts, the development of robust ASR for low-resource languages represents not only a significant technical undertaking but also a critical component of promoting fair access to digital services.

From this societal perspective, we now move to the technical core: how fine-tuning enables such inclusion.

A Technical Blueprint: Fine-Tuning in Context

As we already know, fine-tuning involves adapting a generic ASR model to perform better in specific, often narrowly defined, contexts. Rather than starting from scratch, we take a pre-trained model – like OpenAI’s Whisper – and retrain it on new, domain-specific or language-specific data.

The general ASR fine-tuning pipeline includes:

- Data Preparation: Sourcing and cleaning relevant audio-text pairs, normalizing transcripts, trimming or segmenting clips, augmenting if necessary.

- Data Preprocessing: Preparing training and validation batches, running them through feature extraction (log-Mel spectrograms) and tokenization, and returning training-ready inputs for the encoder-decoder model.

- Model Training: Choosing a pre-trained checkpoint, configuring hyperparameters, loading training and validation batches, using features like gradient clipping and learning rate scheduling with appropriate optimization method.

- Evaluation: Measuring accuracy via word error rate (WER), or custom domain metrics, and iteratively refining the model until the desired accuracy has been achieved.

This approach is efficient, scalable, and especially useful in low-resource settings where compute budgets and data availability are limited.
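As an illustration of the evaluation step in the pipeline above, here is a minimal sketch of word error rate (WER): the word-level edit distance between a reference transcript and the model output, divided by the number of reference words. The function name and the example sentences are my own choices for illustration; in practice, an established evaluation library would typically be used, and both transcripts would be normalized in the same way first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        curr = [i] + [0] * len(hyp_words)
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref_words)


# Illustrative Marathi example: one of three words differs (one substitution).
reference = "मी मराठी बोलतो"
hypothesis = "मी मराठी बोलते"
print(f"WER = {word_error_rate(reference, hypothesis):.3f}")
```

Because WER counts whole words, a single wrong character in a long, inflected word costs as much as a completely wrong word, which is worth keeping in mind when interpreting scores for morphologically rich languages.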

Fine-Tuning ASR with Whisper

The Whisper model, released in September 2022, is a transformer-based, “weakly supervised” ASR system trained on 680,000 hours of multilingual data. It quickly gained popularity for its transcription, translation, and post-processing capabilities, as well as for its adaptability to low-resource languages when fine-tuned with domain-specific data.

This adaptability is particularly important when working with languages like Marathi, where standard datasets are small and dialects are diverse.

To fine-tune Whisper, numerous resources are available, including tutorials on platforms like Hugging Face (see also: HF-blog, Medium 1, Medium 2). These tutorials walk through the steps of fine-tuning the Whisper model on additional data, often using open-source datasets such as Common Voice, Multilingual LibriSpeech, Google FLEURS, and LibriSpeech. However, in real-world applications, custom datasets specific to the domain of interest may be required.

The first step is to thoroughly investigate these datasets to ensure their usability and cleanliness. Factors such as signal-to-noise ratio (S/N) in the audio, audio format and sampling rate, spoken word-to-text alignment, text encoding (especially for ultra-low-resource languages using non-ASCII scripts), and audio duration must all be considered. Whisper processes audio in windows of up to 30 seconds, and clips between roughly 3 and 30 seconds work best, so trimming or concatenating audio files is often necessary. Additionally, using audio augmentation techniques can improve robustness and help the model generalize better.
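Some of these checks can be sketched with the Python standard library alone. The snippet below is a rough illustration, not a complete data-cleaning pipeline: it reads the sampling rate and duration of a WAV file, flags clips outside the 3 to 30 second range, and normalizes transcript text. All function names are hypothetical, the 16 kHz target reflects the sampling rate Whisper’s feature extractor expects, and `make_silent_wav` exists only to make the example self-contained.

```python
import io
import unicodedata
import wave

TARGET_SAMPLE_RATE = 16_000           # Whisper's feature extractor expects 16 kHz input
MIN_SECONDS, MAX_SECONDS = 3.0, 30.0  # duration range discussed above


def inspect_wav(source):
    """Return (sample_rate, duration_in_seconds) for a PCM WAV path or file object."""
    with wave.open(source, "rb") as wav_file:
        sample_rate = wav_file.getframerate()
        duration = wav_file.getnframes() / sample_rate
    return sample_rate, duration


def clip_issues(sample_rate, duration):
    """List the reasons a clip needs attention before training (empty list = OK)."""
    issues = []
    if sample_rate != TARGET_SAMPLE_RATE:
        issues.append("resample")
    if duration < MIN_SECONDS:
        issues.append("too_short")
    elif duration > MAX_SECONDS:
        issues.append("too_long")
    return issues


def normalize_transcript(text):
    """Apply Unicode NFC normalization and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFC", text).split())


def make_silent_wav(seconds, sample_rate):
    """Hypothetical helper: build an in-memory mono 16-bit WAV of silence for the demo."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(b"\x00\x00" * int(seconds * sample_rate))
    buffer.seek(0)
    return buffer


rate, duration = inspect_wav(make_silent_wav(5.0, 16_000))
print(rate, duration, clip_issues(rate, duration))
print(clip_issues(44_100, 1.5))
print(normalize_transcript("  मी   मराठी  बोलतो "))
```

Normalizing text to a single Unicode form matters for scripts like Devanagari, where visually identical strings can be encoded in more than one way and would otherwise be treated as different tokens. Checks for signal-to-noise ratio and audio-text alignment need additional tooling and are not covered in this sketch.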

Outlook on our Case Study on Fine-Tuning Whisper for the Low-Resource Language Marathi

To illustrate the real-world challenges of fine-tuning ASR for low-resource languages, we take Marathi as an example. In the next blog post, we’ll dive into a fine-tuning demo using the Marathi training corpus from Mozilla Common Voice and the Hugging Face Whisper fine-tuning notebook. The focus will remain on highlighting the challenges of fine-tuning a low-resource language rather than presenting a full technical tutorial.

Stay tuned for the next part of the series – where we’ll explore, using Marathi, the role of tokenization and the key challenges that come with it.