“In the supermarket, square tomatoes are available from today.”

“Sweden ends the offer of PCR tests.”

Are these news items true?

What might seem inconspicuous at first glance can have serious consequences in some cases. At the beginning of the COVID-19 pandemic, the claim spread on social media that drinking bleach could cure a coronavirus infection. As a result, several people needed urgent medical treatment because of the harmful effects of bleach.

Due to its rapid spread and potentially negative consequences, fake news is considered one of the greatest challenges of our time. An important research field is the development of Artificial Intelligence that can counteract fake news. In this article, we explain what fake news is, which Machine Learning approaches exist to counter it, where these methods reach their limits, and what more needs to be done.

What we understand as fake news

When we only have a text in front of us, we often cannot judge whether there is a manipulative intention behind a statement. In this article, we generally understand “fake news” to mean misinformation. Detecting misinformation is complicated by two factors: necessary knowledge and context. Let’s consider the following examples for explanation:

- (A): Yesterday, there were 3000 new COVID-19 cases in Germany.

- (B): Today is Friday.

To verify statement (A), external knowledge is required, for example the case numbers published by the Robert Koch Institute. The second statement (B) may seem inconspicuous at first glance, but it is false on every day except Friday and is therefore strongly context-dependent. In the course of this blog post, we will see why both statements pose a challenge for Machine Learning methods.
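The context dependence of statement (B) can be made concrete with a small sketch. It assumes that the publication date of the statement is known; the function name `is_friday_claim_true` is purely illustrative.

```python
from datetime import date


def is_friday_claim_true(published_on: date) -> bool:
    """Check the claim "Today is Friday" against the publication date."""
    # date.weekday() returns 0 for Monday through 6 for Sunday, so Friday is 4.
    return published_on.weekday() == 4


# The same sentence, evaluated for two different publication dates.
friday_result = is_friday_claim_true(date(2024, 3, 1))    # 1 March 2024 was a Friday
saturday_result = is_friday_claim_true(date(2024, 3, 2))  # 2 March 2024 was a Saturday
print(friday_result, saturday_result)
```

The text of the statement is identical in both calls; only the date, which is not part of the text, decides whether it is true.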

Manual approaches

With the abundance of information we consume every day, it is exhausting and simply impossible to research and verify every statement. We receive support from fact-checkers who have taken on this task. In addition, there are products like NewsGuard, which offers a browser plugin displaying the rating of news sources. These ratings undergo a manual verification process and therefore take time. Hence, it is not possible to check every statement immediately. For this reason, we have sought ways to technically support the detection of fake news using Machine Learning. Approaches to this will be explained in more detail below.

How can we approach fake news detection with Machine Learning?

The first possible approach aims to classify certain websites as trustworthy or untrustworthy based on selected features. Certain features, such as web traffic, the presence of a verified Twitter account, or textual information, can influence the decision. However, a website can be trustworthy and still publish articles containing false information. Sources provide an initial indication but are not sufficient for a clear assessment.

In contrast, the second approach uses the actual content of a news article. In most cases, this problem is also framed as a classification task with a binary label of “fake” or “not fake.” Some datasets are available for this task.

Challenges: Data and context

For classification, the text is first transformed into a machine-readable format; during the training phase, a mapping from text to class is then learned (see also the blog post “What are the types of Machine Learning?”). The classification accuracy can then be measured on a test dataset. An accuracy of 90%, for example, means that the ML method correctly distinguishes between “fake” and “not fake” in 90% of the cases of a curated dataset. However, such numbers give a distorted picture of the challenge in actual deployment, because a model trained in this way often cannot generalize. For instance, a model trained on political texts is difficult to apply to medical texts. Overall, the model can only be as good as the underlying data allow.

This becomes clear again when we look at the examples above. If the model does not have the data from the Robert Koch Institute as a basis, it cannot assess (A) correctly. And even if the model has access to this information, it cannot necessarily make a correct assessment, since the term “yesterday” is relative to the date of the news item. This brings us to example (B), which shows that context poses a challenge in fake news detection. If the date is not provided to the model as a feature, the model has no basis for a suitable decision.
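The following is a minimal sketch of such a text classifier and of how accuracy on a test set is computed. It is not the method used in our research: it uses a simple naive Bayes model over word counts, and all example sentences are made up for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class NaiveBayesClassifier:
    """Multinomial naive Bayes over word counts with add-one smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def _log_score(self, text, c):
        total_words = sum(self.word_counts[c].values())
        score = math.log(self.doc_counts[c] / sum(self.doc_counts.values()))
        for word in tokenize(text):
            if word in self.vocab:  # words never seen in training are ignored
                smoothed = self.word_counts[c][word] + 1
                score += math.log(smoothed / (total_words + len(self.vocab)))
        return score

    def predict(self, text):
        return max(self.classes, key=lambda c: self._log_score(text, c))


def accuracy(model, examples):
    correct = sum(model.predict(text) == label for text, label in examples)
    return correct / len(examples)


# Made-up political training data (illustrative only).
train_texts = [
    "senator secretly rigged election shocking",
    "shocking secret plot exposed by insider",
    "parliament passed the budget on tuesday",
    "minister announced the budget report",
]
train_labels = ["fake", "fake", "not fake", "not fake"]
model = NaiveBayesClassifier().fit(train_texts, train_labels)

political_test = [
    ("shocking election plot", "fake"),
    ("parliament budget report", "not fake"),
]
medical_test = [
    ("drinking bleach cures infection", "fake"),
    ("vaccine approved after clinical trial", "not fake"),
]
political_accuracy = accuracy(model, political_test)
medical_accuracy = accuracy(model, medical_test)
print(political_accuracy, medical_accuracy)
```

In this toy setup, the model classifies both political test sentences correctly. The medical sentences share no words with the training data, so the model has nothing to base a decision on and assigns both to the same class.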

However, another challenge remains: When we want to scale a model and apply it to various domains and text types, how can we ensure that we provide it with sufficient context and knowledge? With the current state of research, this is not yet possible.

Classification can make sense when we enrich our model with additional knowledge (“Informed Machine Learning – Learning from data and prior knowledge”), when the application domain is clearly defined, and when we expect that a classification is possible from purely textual information. This is the case, for example, when the problem is broken down into smaller tasks such as detecting hate speech. In our own approaches, we instead consider the problem from two additional perspectives: (1) providing information and (2) quality assessment.

Providing trustworthy information with Machine Learning

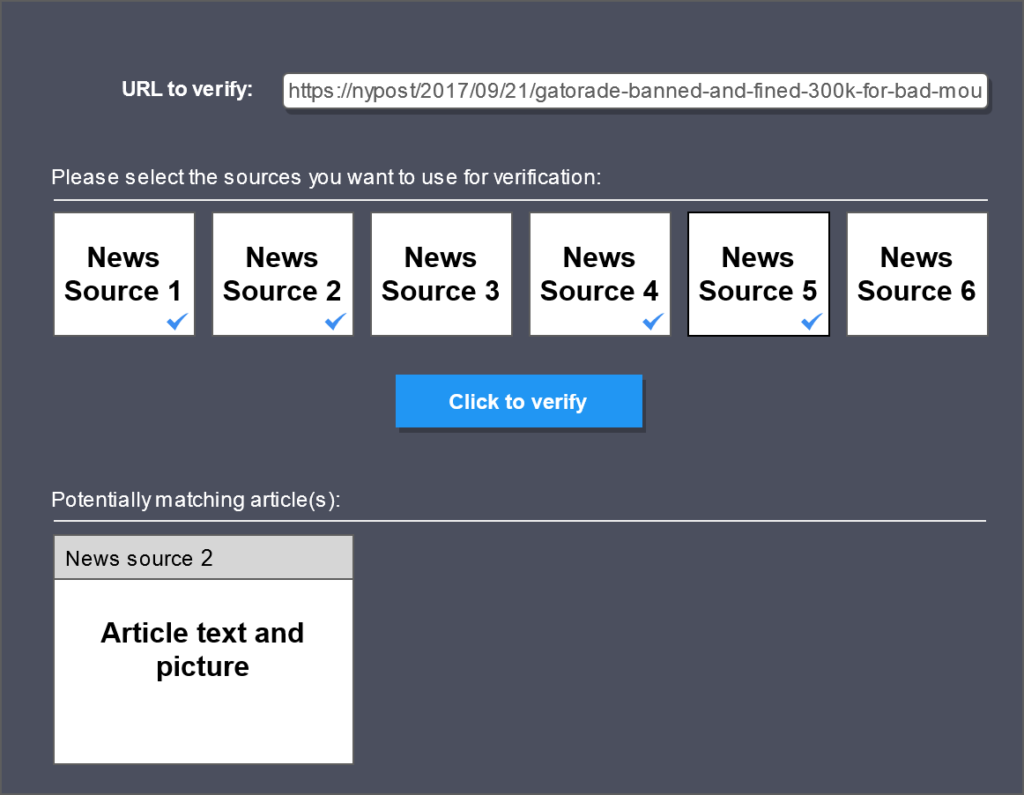

The idea of the “Fake News Detector” is to provide readers with additional information so they can better verify or falsify the informational content of an article. Instead of modeling the task as binary classification, we consider it as information retrieval. This is formulated as an unsupervised procedure.

Users can enter the URL of a news article into the input form and select trustworthy sources; the system then reports whether it can find similar articles.

Technically, the system operates as follows: In the first step, information such as key terms is extracted from the news article, and based on this information, similar news articles are searched for using a Search API. The candidate list resulting from the search is further narrowed down to relevant articles by calculating the semantic distance of the article to each candidate text. Lamarr scientist Vishwani Gupta is working on evaluating the system using different distance measures and datasets in her doctoral thesis. The advantage of the system is that it relieves users of the need to research similar articles themselves, while still leaving the decision about the trustworthiness of an article to them.
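The steps described above can be sketched roughly as follows. This is a simplified illustration, not the actual implementation of the Fake News Detector: the Search API is replaced by a hypothetical in-memory function `search_candidates`, the semantic distance is approximated by cosine similarity over word counts, and the stopword list, the threshold of 0.3, and the example texts are assumptions.

```python
import math
import re
from collections import Counter

# Small illustrative stopword list (assumption, not from the original system).
STOPWORDS = {"the", "a", "an", "of", "in", "on", "to", "and", "is", "are", "that", "for"}


def term_counts(text):
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def key_terms(text, k=5):
    """Step 1: extract the k most frequent non-stopword terms."""
    return [term for term, _ in term_counts(text).most_common(k)]


def search_candidates(terms, corpus):
    """Step 2: stand-in for a Search API; keeps texts that contain any key term."""
    return [doc for doc in corpus if any(t in term_counts(doc) for t in terms)]


def cosine_similarity(a, b):
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def find_similar(article, corpus, threshold=0.3):
    """Step 3: narrow the candidates down to those similar enough to the article."""
    query = term_counts(article)
    candidates = search_candidates(key_terms(article), corpus)
    scored = [(cosine_similarity(query, term_counts(doc)), doc) for doc in candidates]
    relevant = [pair for pair in scored if pair[0] >= threshold]
    return sorted(relevant, key=lambda pair: pair[0], reverse=True)


article = "Health agency warns that drinking bleach does not cure COVID-19"
corpus = [
    "Doctors warn: drinking bleach is dangerous and does not cure COVID-19",
    "Supermarket chain introduces square tomatoes",
    "Bleach sales rise as households clean more",
]
results = find_similar(article, corpus)
for score, doc in results:
    print(round(score, 2), doc)
```

The system only returns the similar articles it found. Whether the original article is trustworthy remains the reader's decision.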

Support for quality assessment of news

We can also reframe the problem in a positive light: What makes a good news article? Medien-Doktor has developed a criteria catalog in German for evaluating news articles. The catalog consists of a total of 15 criteria, such as “Risks and side effects are illuminated,” “There are signs of exaggeration of illness,” and “There is a conflict of interest.” Each criterion is rated as “fulfilled,” “not fulfilled,” or “not applicable,” and the rating is justified in writing. Using methods of natural language processing (NLP), we can provide assistance in the evaluation process based on previously evaluated articles.
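One possible way to represent such a rating in code is sketched below. The class and field names are assumptions, and the justifications are invented placeholders; only the three rating values and the quoted criteria come from the catalog as described above.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Rating(Enum):
    FULFILLED = "fulfilled"
    NOT_FULFILLED = "not fulfilled"
    NOT_APPLICABLE = "not applicable"


@dataclass
class CriterionRating:
    criterion: str
    rating: Rating
    justification: str  # free-text reasoning written by the reviewer


def tally(ratings):
    """Count how often each rating value occurs in one review."""
    return Counter(r.rating for r in ratings)


# Invented example review covering three of the 15 criteria.
review = [
    CriterionRating("Risks and side effects are illuminated", Rating.FULFILLED,
                    "Placeholder justification."),
    CriterionRating("There are signs of exaggeration of illness", Rating.NOT_FULFILLED,
                    "Placeholder justification."),
    CriterionRating("There is a conflict of interest", Rating.NOT_APPLICABLE,
                    "Placeholder justification."),
]
counts = tally(review)
print({rating.value: n for rating, n in counts.items()})
```

Previously evaluated articles stored in this form pair each text with a label per criterion, which is the kind of data an NLP model would need in order to assist with new evaluations.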

In our research, we have assessed for all criteria to what extent they can be modeled with Machine Learning and assigned them to possible NLP procedures. The modeling task is not trivial because the mere occurrence of words in the text is not sufficient to provide an evaluation. In many cases, background knowledge, as described above, is necessary. In our current research, we are particularly concerned with the criterion of “understandability” and how one can assess the readability of a document.
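As an illustration of what a simple readability measure looks like, the sketch below computes the Flesch Reading Ease score. This is a classic formula and not necessarily the approach we pursue in our research. Its constants were calibrated for English, so it would need to be adapted for the German texts covered by the catalog, and the syllable counter is a crude heuristic.

```python
import re


def count_syllables(word):
    # Crude heuristic: count groups of consecutive vowels, at least one per word.
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(text):
    """Flesch Reading Ease: higher scores indicate text that is easier to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


simple_text = "The cat sat on the mat. It was warm."
dense_text = "Epidemiological investigations necessitate comprehensive methodological considerations."
simple_score = flesch_reading_ease(simple_text)
dense_score = flesch_reading_ease(dense_text)
print(round(simple_score, 1), round(dense_score, 1))
```

Such a score only looks at surface features like sentence and word length. It cannot tell whether a text explains its subject well, which is one reason why modeling the criterion is not trivial.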

Machine Learning is not a cure-all for fake news, because most learning models do not have sufficient knowledge or context to detect misinformation. Nevertheless, with the help of Machine Learning, we can contribute by providing information and by classifying subproblems. This keeps the developed solutions applicable to different news topics. Machine Learning helps us to some extent in curbing fake news. In the end, however, we are the ones consuming the news. When was the last time you questioned a piece of news?

More information in the associated publication and the corresponding video:

Vishwani Gupta, Katharina Beckh, Sven Giesselbach, Dennis Wegener, Tim Wirtz: “Supporting verification of news articles with automated search for semantically similar articles.” Proceedings of the Workshop Reducing Online Misinformation through Credible Information Retrieval (ROMCIR), 2021.

IFLA Talk: Fake News & Its Impact on Society, Lecture 3 Recording