With the help of explainable AI (XAI) methods, developers can increase the interpretability of AI decisions. Even though XAI has so far been aimed primarily at experts, an expert group of the CTAI has determined: Explainable AI – in conjunction with other measures – can also contribute to the information and education of consumers and citizens.

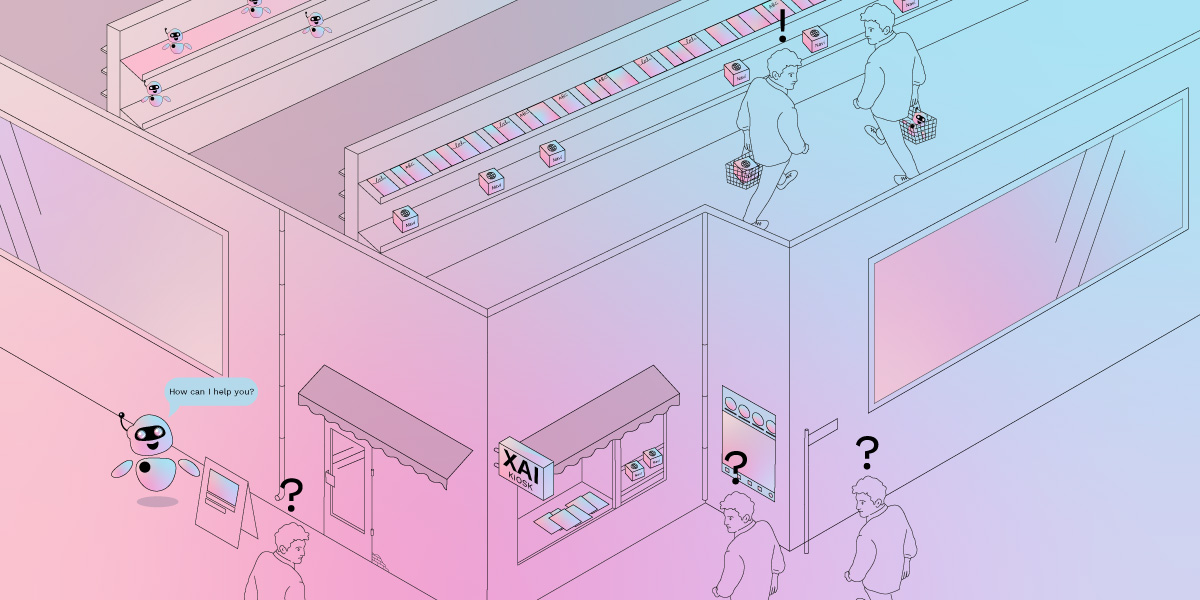

Consumers use AI-based applications every day – whether social media platforms, AI-supported chatbots, facial recognition on their smartphones, or navigation systems. And even if citizens and consumers do not use AI systems themselves, they can be affected by their results, for example when large companies use AI tools to filter job applications or insurance companies use AI to calculate the risk of a certain disease.

If the use of an AI tool has an impact on the lives of citizens, companies and public bodies should inform them about the use of AI. Furthermore, citizens should also be informed about how the results of an AI application have come about. Only in this way will citizens have the opportunity to identify and take action against disadvantages, such as AI-mediated discrimination (Baeva, Binder, 2023). In addition, civil society organizations should receive information on the interpretability of AI results as well, since AI-mediated discrimination in particular is difficult to determine on the basis of individual cases (Baeva, Binder, 2023).

Explainable AI for better interpretability of AI results

However, AI systems are often so complex that neither developers nor users can understand their results and procedures. That’s why these AI applications are also called black-box models. In the research area of explainable AI, scientists are working on methods that show which data or assumptions have led to a certain result of an AI application. A counterfactual explanation illustrates this well: it describes the circumstances under which the result of an AI application would have been different.

Here is an example: A company uses an AI system to sort incoming applications with regard to their suitability for a position. One person’s application is classified as unsuitable by the AI application. A counterfactual explanation analyzes which factors would have to change in order for the AI system to issue a positive result for this applicant. This could be work experience: If the person had ten instead of nine years of work experience, the AI application would have classified the applicant as suitable.
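The hiring example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `is_suitable` is a hypothetical stand-in for the company's black-box model (its ten-year threshold and the `has_degree` field are invented here), and `counterfactual_experience` is a simple brute-force search, not a specific published XAI method.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Application:
    years_experience: int
    has_degree: bool  # hypothetical second factor, for illustration only


def is_suitable(app: Application) -> bool:
    """Hypothetical stand-in for the black-box screening model."""
    return app.has_degree and app.years_experience >= 10


def counterfactual_experience(app: Application, max_extra_years: int = 20) -> Optional[int]:
    """Smallest number of extra years of experience that flips the result.

    Returns 0 if the application is already classified as suitable and
    None if no change within max_extra_years leads to a positive result.
    """
    if is_suitable(app):
        return 0
    for extra in range(1, max_extra_years + 1):
        candidate = replace(app, years_experience=app.years_experience + extra)
        if is_suitable(candidate):
            return extra
    return None


applicant = Application(years_experience=9, has_degree=True)
extra_years = counterfactual_experience(applicant)
if extra_years:
    print(f"With {extra_years} more year(s) of experience, the result would be positive.")
```

The search only queries the model with changed inputs, so it does not need access to the model's internals. If work experience alone cannot change the outcome, the function returns `None`, which signals that a different factor would have to be examined.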

XAI and the traceability of AI results can contribute to greater transparency of AI applications. According to the German Ethics Council (2023), the degree of transparency that is actually necessary for a given AI system depends on the context of use. In some contexts, explainable AI should ensure a maximum level of explainability, whereas in other areas a human “plausibility check” of AI results might be sufficient. However, full transparency of so-called black-box models can currently be achieved “neither for laymen nor for experts” (Baeva, Binder, 2023). If an application area requires such transparency, complex AI systems would have to be avoided there altogether.

Nevertheless, methods of explainable AI can significantly increase the interpretability of AI results. Currently, these methods are primarily aimed at developers and experts. Under certain conditions, however, XAI can make the results of systems more interpretable for consumers as well – this was the conclusion of an expert group of the Center for Trustworthy Artificial Intelligence (CTAI).

Under which conditions does explainable AI help with the information of consumers?

Explanatory methods can help consumers to better understand the results of AI applications. For example, if an AI system is used to support lending decisions, counterfactual explanations can show how certain factors influence its outcome. Affected individuals can thus understand whether their salary, place of residence or age, for example, led to a loan rejection.

Simple presentations

For XAI methods to actually inform consumers, the explanations must be presented in an accessible, low-threshold manner. One example would be an interactive, digital input form in which users can change input data, such as age, place of residence or salary, as they wish. In this way, they can understand which factors have an influence on the result of the AI application and thus on the chances of being granted a loan – even if they do not have expert knowledge of how the AI technologies work. To ensure that the explanations work for consumers, they have to be evaluated with and by users.
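The logic behind such an input form could look roughly like the following sketch. Everything in it is assumed for illustration: `approve_loan` is a made-up scoring rule standing in for a real credit model, and `what_if` and `influential_fields` are hypothetical helper names, not part of any existing library.

```python
from typing import Any, Dict, List


def approve_loan(inputs: Dict[str, Any]) -> bool:
    """Hypothetical scoring rule standing in for the real credit model."""
    score = 0
    score += 2 if inputs["salary"] >= 3000 else 0
    score += 1 if inputs["age"] >= 25 else 0
    score += 1 if inputs["residence"] == "urban" else 0
    return score >= 3


def what_if(base: Dict[str, Any], changes: Dict[str, Any]) -> Dict[str, Any]:
    """Re-run the model with the values the user changed in the form."""
    modified = {**base, **changes}
    return {
        "before": approve_loan(base),
        "after": approve_loan(modified),
        "changed_fields": sorted(changes),
    }


def influential_fields(base: Dict[str, Any], alternatives: Dict[str, Any]) -> List[str]:
    """Fields whose alternative value, changed on its own, flips the result."""
    original = approve_loan(base)
    return [
        field
        for field, value in alternatives.items()
        if approve_loan({**base, field: value}) != original
    ]


form_input = {"age": 30, "salary": 2800, "residence": "rural"}
result = what_if(form_input, {"salary": 3200})
flipping = influential_fields(
    form_input, {"salary": 3200, "age": 40, "residence": "urban"}
)
```

A form built on top of this would call `what_if` whenever the user edits a field and display the before and after results side by side. Whether such a presentation is actually understandable still has to be tested with users.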

Explainability requires correctability

In addition, consumers should be able to correct the inputs and/or results of AI applications. Correctability affects three different levels:

- Input data: Consumers can correct wrong input data. This concerns, for example, the entry of an incorrect age in an AI system that bank employees use to assist in credit scoring. Explanations through XAI methods should disclose such incorrect input data and offer interfaces for correction.

- Personalization: Users can personalize the results of an AI application, for example the news feed of a social media application. The option to personalize increases the usability of such applications.

- Control function: Consumers can complain about the results of an AI system to independent bodies, for example, in the case of suspected discrimination in the granting of credit or by facial recognition software. In addition, research institutes, anti-discrimination bodies or non-governmental organizations should also gain access to the data basis of AI systems and explanations through XAI methods. Only in this way can civil society organizations perform their social control function and uncover AI-mediated discrimination that individuals cannot identify.

Explanations should be extended with recommendations for action

Explanations based on XAI methods do not in themselves give consumers sufficient options for action. Therefore, in addition to an explanation, providers of AI systems should inform consumers about what they can change themselves so that the AI system calculates a different result. It is crucial that the recommended action is feasible. In the case of loans, for example, it can be helpful for applicants to know whether even a small increase in salary is sufficient for them to be granted a loan. The recommendation to change one’s age, on the other hand, makes no sense. Moreover, not everyone can implement recommendations for action to the same extent, because this depends on broader social factors and power structures: For example, depending on the profession and type of employment, only some people are able to negotiate a higher salary. Such factors should also be taken into account when formulating recommendations for action.
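One way to make the feasibility requirement concrete is to restrict the search for alternatives to factors a person can actually change and to a range that is realistic for them. The sketch below assumes a hypothetical `approve_loan` rule and a per-person limit `max_raise_pct`; both are invented for illustration, and in practice the realistic range would have to reflect the applicant's individual situation.

```python
from typing import Any, Dict, Optional


def approve_loan(applicant: Dict[str, Any]) -> bool:
    """Hypothetical decision rule standing in for the real credit model."""
    return applicant["salary"] >= 3000 and applicant["age"] >= 21


def recommend_salary_increase(
    applicant: Dict[str, Any],
    max_raise_pct: float = 10.0,  # assumed upper bound on a realistic raise
    step: int = 50,
) -> Optional[int]:
    """Smallest feasible salary increase that leads to approval.

    Only the salary is varied; age is never changed because it is not
    actionable. Returns None if the loan is already approved or if no
    increase within the feasible range changes the result.
    """
    if approve_loan(applicant):
        return None
    limit = applicant["salary"] * (1 + max_raise_pct / 100)
    salary = applicant["salary"] + step
    while salary <= limit:
        if approve_loan({**applicant, "salary": salary}):
            return salary - applicant["salary"]
        salary += step
    return None


increase = recommend_salary_increase({"age": 30, "salary": 2900})
if increase is not None:
    print(f"A salary increase of {increase} would be sufficient.")
```

For an applicant whose rejection can only be changed through age, or only through a raise far beyond the assumed limit, the function returns `None` instead of an unhelpful recommendation.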

XAI methods and explanations must differ depending on the application and context

Depending on the use case, a combination of several XAI methods and representations can be helpful to make AI results more interpretable. It is critical that explanations do not contradict each other.

Moreover, some information needs of consumers are better met by other formats than by XAI methods: For example, companies could provide information about the stored data of health app users as a simple overview. This can already help to identify errors or incorrect information in the collected data and to take action against it if necessary. XAI methods are not required for such a presentation.

Explanation good, all good?

Explanations of the use and results of AI systems enable consumers and citizens to use AI applications in a more self-determined and confident manner. In addition, they can better defend themselves against disadvantageous or incorrect decisions made, for example, by employers, medical professionals or public authorities on the basis of AI results.

According to the recommendations of the CTAI expert group on consumer information, companies – as AI providers – are primarily responsible for the implementation and comprehensibility of explanatory measures. Scientists should continue to research reliable XAI methods and pursue an interdisciplinary approach in research projects in order to develop human-oriented explanatory approaches rather than purely technical ones. Policy makers have an obligation to provide sufficient resources for the development and improvement of such methods. In order for explanations to actually reach consumers and citizens, mediating organizations, such as consumer protection centers, also play an important role (Müller-Brehm, 2022).

Nevertheless, the responsibility for the ethical deployment and use of AI applications must not be outsourced to consumers. Instead, further measures are needed to protect consumers and citizens. This includes, for example, ensuring that civil society perspectives and the interests of consumers are sufficiently taken into account in the AI regulation currently being negotiated at the European level. Accordingly, all citizens and consumers should have the opportunity to inform themselves about the use and functioning of AI applications – but they are not obligated to do so.

This blog post is based on the thesis paper “Potenziale von erklärbarer KI zur Aufklärung von Verbraucher*innen”. The thesis paper is the result of the expert group on consumer information of the Center for Trustworthy Artificial Intelligence (CTAI).

About the CTAI

As a national, impartial organization that serves as a gateway between the worlds of science, business, politics and civil society, the CTAI informs the public about a number of consumer-relevant issues, fosters public debate on the topic and develops tools for the evaluation and certification of trustworthy AI.

Further information from the CTAI on the topic of transparency of AI systems in German:

- Briefing zur KI-Verordnung: Braucht es mehr Informations- und Auskunftsrechte beim KI-Einsatz? (2023)

- Briefing zur KI-Verordnung: Kennzeichnung von KI (2023)

- Thesenpapier: Wie können KI-Systeme in der Medizin erklärbar und nachvollziehbar gestaltet werden? (2023)

- Missing Link: Warum brauchen wir Transparenz? (2022)