After discussing how machines learn in the first post of our “ML Basics” series, this post looks at the types of Machine Learning. Here, a paradigm defines how an algorithm learns. We examine the paradigms themselves rather than specific learning algorithms. The choice of paradigm depends on the task and the type of data. To be used in practice, a paradigm must be implemented as a concrete method. In this blog post, we introduce five types of Machine Learning: unsupervised, supervised, semi-supervised, reinforcement, and active learning.

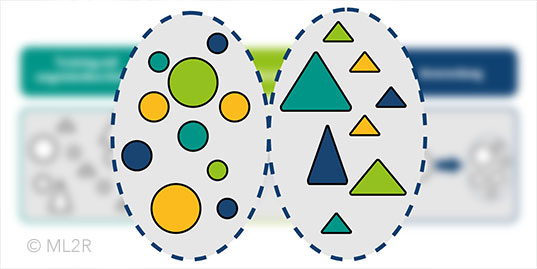

Unsupervised learning: In unsupervised learning, the algorithm receives no feedback during the learning process. Instead, it tries to detect inherent structures in the data and learn from them. Unsupervised learning methods include all similarity-based methods, such as cluster analysis, which groups similar objects together, as well as some dimensionality reduction methods. An everyday example of dimensionality reduction is a shadow, which provides a two-dimensional representation of a three-dimensional object. If we think of data as high-dimensional objects, we can likewise project them into lower dimensions, which reduces complexity at the cost of some information.
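Both ideas from this paragraph can be sketched in a few lines of plain Python. The data, the function names `project_shadow` and `kmeans_1d`, and the starting centers are illustrative assumptions; the clustering part is a bare-bones k-means on one-dimensional values, not a production implementation.

```python
# Dimensionality reduction as a "shadow": drop the z coordinate of 3D points.
cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]

def project_shadow(points):
    """Project 3D points onto the x-y plane, like light shining straight down."""
    return [(x, y) for x, y, _z in points]

shadow = project_shadow(cube)
distinct_shadow_points = sorted(set(shadow))
# Eight cube corners collapse onto four shadow points: simpler, but depth is lost.

# Cluster analysis: group similar values without any labels (minimal 1D k-means).
def kmeans_1d(values, centers, iterations=10):
    """Alternate between assigning values to the nearest center and re-centering."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ended up empty.
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters

values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]
centers, clusters = kmeans_1d(values, centers=[0.0, 10.0])
print(distinct_shadow_points)
print(centers, clusters)
```

Note that no correct answers are supplied anywhere: the grouping emerges purely from the distances between the values.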

Supervised learning: Supervised learning is characterized by the fact that feedback is available for every training example. The algorithm can obtain this feedback on its own, provided that the training data includes the correct answer for each data point. Classic examples of supervised learning are classification and regression models, which are widely used and versatile. Many questions can be framed as true-false questions, such as predicting whether a customer will churn. More generally, any question with a fixed number of possible answers is a classification problem. Regression models, in contrast, are used when a task involves predicting a numerical value, such as temperature.
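To make the difference between classification and regression concrete, here is a minimal sketch in plain Python. The toy data, the feature "months inactive", and the helper names `fit_threshold` and `fit_line` are assumptions for illustration; real projects would typically use a library and far richer models.

```python
# Classification: learn a true/false answer (will the customer churn?).
months_inactive = [0, 1, 2, 5, 6, 8]
churned = [False, False, False, True, True, True]  # the correct answers (labels)

def fit_threshold(xs, labels):
    """Pick the threshold t that makes the rule 'x >= t' err least on the training data."""
    def errors(t):
        return sum((x >= t) != label for x, label in zip(xs, labels))
    return min(sorted(set(xs)), key=errors)

threshold = fit_threshold(months_inactive, churned)

def predict_churn(x):
    return x >= threshold

# Regression: learn a numerical answer (temperature over time).
hours = [0, 1, 2, 3]
temperatures = [10.0, 12.0, 14.0, 16.0]

def fit_line(xs, ys):
    """Ordinary least squares for a single feature: y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x

slope, intercept = fit_line(hours, temperatures)
predicted_temperature = slope * 4 + intercept
print(threshold, predict_churn(7), predicted_temperature)
```

In both cases the labels in the training data act as the feedback: the fitted rule is whichever one agrees best with the known answers.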

Semi-supervised learning: In semi-supervised learning, feedback is provided for some examples, as in supervised learning, while the remaining examples have none. This is often the case in fraud detection, for example. Every detected case is known to be fraud. For all other data, the probability of fraud is low, but undetected fraud may still be hidden within it.
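One common way to exploit partially labelled data is pseudo-labelling: unlabelled examples that look very similar to a labelled one inherit its label. The sketch below assumes this technique; the transaction amounts, the `max_distance` cut-off, and the function name `pseudo_label` are hypothetical and only serve to illustrate the idea.

```python
# Each transaction is (amount, label); None means no feedback is available.
transactions = [
    (20.0, "ok"), (35.0, "ok"), (900.0, "fraud"), (950.0, "fraud"),
    (25.0, None), (40.0, None), (920.0, None), (480.0, None),
]

def pseudo_label(data, max_distance):
    """Copy the label of the nearest labelled example if it is close enough."""
    labelled = [(x, y) for x, y in data if y is not None]
    result = []
    for x, y in data:
        if y is None:
            nearest_x, nearest_y = min(labelled, key=lambda item: abs(item[0] - x))
            if abs(nearest_x - x) <= max_distance:
                y = nearest_y  # confident enough to adopt the neighbour's label
        result.append((x, y))
    return result

extended = pseudo_label(transactions, max_distance=50.0)
print(extended)
```

The transaction of 480.0 stays unlabelled because it is not close to any known case, which mirrors the uncertainty about undetected fraud described above.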

Reinforcement learning: Reinforcement learning differs from the previous forms of learning in that feedback is not provided for every data point. Instead, feedback is often given only after many steps. This is a classic scenario in many games, where positive feedback is received for winning and negative feedback for losing. In such cases, feedback is only available at the end of the game and is then propagated back to all the moves that were made. To learn a good strategy, the game must be played many times.
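How end-of-game feedback can be distributed over earlier moves is sketched below. The approach assumed here is a simple discounted, Monte Carlo style credit assignment in which moves closer to the end receive more of the final reward; the move names, the recorded games, and the discount of 0.5 are made up for illustration.

```python
from collections import defaultdict

def discounted_credit(num_moves, final_reward, discount=0.5):
    """Credit for each move: the final reward, shrunk for every step before the end."""
    return [final_reward * discount ** (num_moves - 1 - i) for i in range(num_moves)]

# Recorded games: the sequence of moves and the outcome (+1 win, -1 loss).
games = [
    (["a", "b", "c"], +1.0),
    (["a", "d"], -1.0),
    (["b", "c"], +1.0),
]

totals = defaultdict(float)
counts = defaultdict(int)
for moves, outcome in games:
    for move, credit in zip(moves, discounted_credit(len(moves), outcome)):
        totals[move] += credit
        counts[move] += 1

# Average credit per move over all games played so far.
move_value = {move: totals[move] / counts[move] for move in totals}
best_move = max(move_value, key=move_value.get)
print(move_value, best_move)
```

A single game says little about an individual move, which is why the estimates are averaged over several games.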

Active learning: In some use cases it is either impossible or very expensive to obtain the correct answers for all data points. A classic example is recommendation systems. No customer is willing to rate thousands of movies just to get suitable suggestions. Recommendation systems therefore work differently: each time the system makes suggestions and the customer selects a movie from them, the system gains feedback about the customer’s preferences. By choosing which suggestions a potential customer is shown, the system also actively decides which feedback it will receive. More information on Active Learning is available in our blog post “Intelligent Labelling with Active Learning”.
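A minimal sketch of how a system might choose what to ask about is shown below. It assumes uncertainty sampling, one common active learning strategy, in which the system queries the item it is least sure about; the movie titles, the predicted probabilities, and the function names are hypothetical.

```python
# The system's current estimate that the customer will like each movie.
predicted_like_probability = {"Movie A": 0.95, "Movie B": 0.55, "Movie C": 0.10}
known_preferences = {}

def most_uncertain(predictions):
    """Pick the item whose predicted probability is closest to 0.5."""
    return min(predictions, key=lambda title: abs(predictions[title] - 0.5))

def record_feedback(title, liked):
    """Store the customer's answer and remove the item from the open questions."""
    known_preferences[title] = liked
    predicted_like_probability.pop(title)

query = most_uncertain(predicted_like_probability)
record_feedback(query, liked=True)  # assumed customer reaction, for illustration
next_query = most_uncertain(predicted_like_probability)
print(query, next_query, known_preferences)
```

Asking about the most uncertain item first means each piece of feedback is expected to tell the system something it did not already know.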