Machine Learning (ML) and data mining have become integral parts of our everyday lives. As a result, the associated energy consumption is increasingly coming into focus. The research field of Resource-aware ML aims to reduce the energy required for both the development and use of ML while maintaining the quality of the results. In this article, we explain the challenges faced by researchers and the solutions that have already been found, using autonomous driving as an example.

In order to find approaches for saving energy, we look at the entire development cycle of ML applications. Here, we often think of data scientists preparing data and training a variety of models in order to ultimately find the best model for a specific task. However, once a good model is found for a specific application, the focus changes from model training to continuous model application by a large number of users. Due to this vertical distribution of the model, the energy required for model application is often higher than the energy required for the actual model training.

Example: Autonomous driving and the high energy consumption of ML applications

Here is a practical example from autonomous driving: To control the car, Tesla Autopilot uses a Deep Learning model, which is executed on a chip that was specially developed for this application and consumes around 57 W. The German Federal Motor Transport Authority estimates that in 2018 (i.e. before the coronavirus pandemic), all German drivers drove a combined total of around 630 billion kilometers in their cars. At an average speed of approx. 45 km/h, this results in a combined total driving time of approx. 14 billion hours. If Tesla’s Autopilot were used for all of these journeys, the combined energy consumption would be approx. 0.8 terawatt hours per year (14 billion hours × 57 W ≈ 0.798 TWh). This is roughly equivalent to the annual energy production of the largest hydroelectric power plant in Germany. In other words, you need an entire power plant to provide a single feature that uses Machine Learning.
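The estimate above can be reproduced in a few lines. The following is a minimal sketch that uses only the figures quoted in the text; the function and variable names are chosen for illustration, and the calculation assumes the chip draws its full 57 W for the entire driving time.

```python
# Back-of-the-envelope estimate using only the figures quoted in the text.
TOTAL_DISTANCE_KM = 630e9   # combined distance driven in Germany in 2018
AVERAGE_SPEED_KMH = 45.0    # approximate average speed
CHIP_POWER_W = 57.0         # approximate power draw of the chip

def annual_energy_twh(distance_km: float, speed_kmh: float, power_w: float) -> float:
    """Energy in terawatt hours if the chip runs for the whole driving time."""
    driving_hours = distance_km / speed_kmh
    energy_wh = driving_hours * power_w   # watts x hours = watt hours
    return energy_wh / 1e12               # 1 TWh = 10^12 Wh

driving_hours = TOTAL_DISTANCE_KM / AVERAGE_SPEED_KMH
energy_twh = annual_energy_twh(TOTAL_DISTANCE_KM, AVERAGE_SPEED_KMH, CHIP_POWER_W)

print(f"Driving time: {driving_hours / 1e9:.1f} billion hours")
print(f"Energy: {energy_twh:.3f} TWh per year")
```

Running the sketch gives about 14 billion hours of driving and roughly 0.8 TWh per year, in line with the numbers in the text.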

Development cycle for Resource-aware Machine Learning

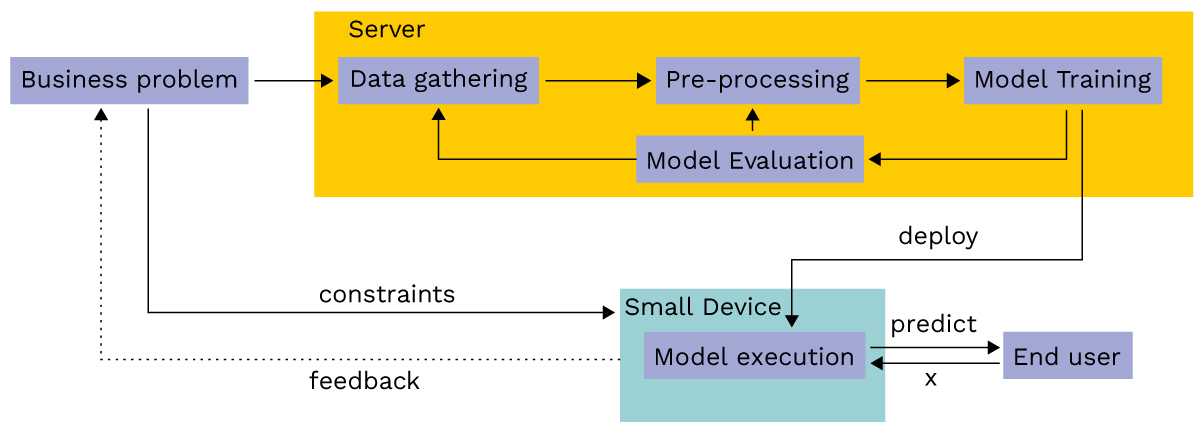

The research field of Resource-aware Machine Learning is concerned with how this energy consumption can be reduced. To this end, we first take a look at the development cycle of an ML model in the figure above (Figure 1): We start by analyzing the business problem, i.e. the use case. This results in the requirements for the model (e.g. accuracy) and the constraints for the application of the model (e.g. execution in a moving car without an internet connection). The search for the best model then begins by training and evaluating a large number of different models. Post-processing of an already trained model has proven to be a special step in Resource-aware Machine Learning. The idea of post-processing is to first find a good ML model for the business problem (e.g. with maximum accuracy) and then to make that model more resource-efficient. Once the best model has been found, it can be deployed on a small, resource-efficient device and used there continuously.

© Dr. Sebastian Buschjäger & Lamarr-Institut

Energy consumption for computing operations and memory access

In order to achieve the most efficient system possible, all steps in this development cycle must be taken into account. In addition to the prediction accuracy of the model, the memory requirements and the number of floating-point operations are crucial here, as Table 1 shows. Taking a simple 32-bit integer addition, i.e. the addition of two integers as a reference (relative energy cost of 1), the addition of two 32-bit floating point numbers is almost 10 times more expensive. The multiplication of two integers (32-bit integer) is more than 30 times as expensive and the multiplication of two floating point numbers (32-bit float) is almost 40 times as expensive as the addition of two integers.

If you now look at the cost of accessing memory, the problem becomes even more serious. Accessing data from the cache is 50 to 500 times more expensive, and accessing the main memory (DRAM) can be up to 6400 times more expensive than a simple integer addition. With this in mind, we briefly return to our previous example of the autonomous car.

Although the exact structure of the Tesla Autopilot is a well-kept secret, it is known that Tesla uses Deep Neural Networks in a so-called HydraNet architecture as the basis for the Autopilot. The smallest networks from the HydraNet family have around 1.2 million parameters and therefore require around 1 MB of storage space and just over 52 million (floating point) addition operations for a prediction. They offer an accuracy of only 65% on the popular ImageNet data set. The largest network in the HydraNet family, on the other hand, has around 14 million parameters, which leads to a memory requirement of around 10 MB and around 500 million (floating point) addition operations for a single prediction. In return, the prediction accuracy of this model improves to around 75% for object recognition. However, this improvement of around 10 percentage points comes at a high price: The largest model is almost 11 times larger than its smallest sibling, which means that we need around 67,200 picojoules more energy per classification.

| Operation | Energy [picojoules] | Relative Energy Costs |
| --- | --- | --- |
| 8-bit integer ADD | 0.03 | 0.3 |
| 32-bit integer ADD | 0.1 | 1 |
| 8-bit integer MULT | 0.2 | 2 |
| 16-bit float ADD | 0.4 | 4 |
| 32-bit float ADD | 0.9 | 9 |
| 16-bit float MULT | 1.1 | 11 |
| 32-bit integer MULT | 3.1 | 31 |
| 32-bit float MULT | 3.7 | 37 |
| Cache access | 5-50 | 50-500 |
| DRAM access | 320-640 | 3200-6400 |
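To get a feeling for what the values in the table mean in practice, the following sketch turns the arithmetic rows into a small lookup and compares two ways of computing the same dot product. It is only an illustration: the workload (a dot product of length 1000), the key names, and the choice to accumulate 8-bit products with 32-bit integer additions are assumptions, and memory access costs are deliberately left out.

```python
# Energy per arithmetic operation in picojoules, copied from the table above.
ENERGY_PJ = {
    "int8_add": 0.03,
    "int32_add": 0.1,
    "int8_mult": 0.2,
    "float16_add": 0.4,
    "float32_add": 0.9,
    "float16_mult": 1.1,
    "int32_mult": 3.1,
    "float32_mult": 3.7,
}

def relative_cost(op: str, reference: str = "int32_add") -> float:
    """Energy of `op` relative to the reference operation (32-bit integer ADD)."""
    return ENERGY_PJ[op] / ENERGY_PJ[reference]

def arithmetic_energy_pj(op_counts: dict) -> float:
    """Total arithmetic energy in picojoules for a mapping of operation -> count."""
    return sum(ENERGY_PJ[op] * count for op, count in op_counts.items())

# Hypothetical workload: a dot product of two vectors with 1000 entries,
# i.e. 1000 multiplications and 1000 additions.
n = 1000
float32_pj = arithmetic_energy_pj({"float32_mult": n, "float32_add": n})
# Assumption: 8-bit products are summed up in a 32-bit integer accumulator.
int8_pj = arithmetic_energy_pj({"int8_mult": n, "int32_add": n})

print(f"float32 MULT costs about {relative_cost('float32_mult'):.0f}x an int32 ADD")
print(f"float32 dot product: {float32_pj:.0f} pJ, int8 dot product: {int8_pj:.0f} pJ")
```

Under these assumptions the integer variant needs only a small fraction of the arithmetic energy of the 32-bit floating-point variant, which is why reducing floating-point operations is such an attractive target.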

Open research questions on adapting the ML development cycle

The central goal of Resource-aware Machine Learning is therefore to reduce the memory requirements and the number of floating-point operations. To this end, it is possible, for example, to round floating-point numbers to integers, simulate floating-point operations with integers or use models that do not require floating-point numbers at all. The following further research questions arise in relation to the development cycle described in Figure 1:

- Training the model: Can we train a model that has fewer parameters but the same prediction accuracy as the reference model? Can we train a model that does not require floating point operations?

- Readjusting the model: Can we remove parameters from the model without sacrificing prediction quality? Can we adjust the model so that it requires fewer floating-point operations without changing the accuracy?

- Applying the model: For the specific application of the model, what is the optimal implementation for the given hardware? Can we use hardware features such as tensor processing units for a more efficient application?
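The idea of rounding floating-point numbers to integers can be illustrated with a small example. The following is a minimal sketch of symmetric linear quantization, one common way to do this and not necessarily the method used in any particular system; the function names and the example weights and inputs are made up for illustration.

```python
def quantize(values, num_bits=8):
    """Symmetric linear quantization: map floats to signed integers plus one scale factor."""
    q_max = 2 ** (num_bits - 1) - 1                 # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the representable range.
    quantized = [max(-q_max, min(q_max, round(v / scale))) for v in values]
    return quantized, scale

def integer_dot(weights_q, w_scale, inputs_q, x_scale):
    """Dot product computed with integer multiply-adds and a single float rescale at the end."""
    accumulator = sum(w * x for w, x in zip(weights_q, inputs_q))  # integers only
    return accumulator * w_scale * x_scale

# Hypothetical weights and inputs of a single neuron.
weights = [0.42, -1.30, 0.07, 0.95]
inputs = [1.5, 0.2, -0.8, 2.0]

exact = sum(w * x for w, x in zip(weights, inputs))
w_q, w_scale = quantize(weights)
x_q, x_scale = quantize(inputs)
approx = integer_dot(w_q, w_scale, x_q, x_scale)

print(f"float result: {exact:.4f}, quantized result: {approx:.4f}")
```

The quantized result deviates slightly from the floating-point result. Whether such a deviation is acceptable for a given model is exactly the kind of question raised in the list above.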

Resource-aware Machine Learning as a key technology for the future

Sustainability will be one of the key challenges in the coming decade. As ML models become more and more widespread in our everyday lives, ML itself is also affected. At first glance, the frequent training of ML models consumes the most energy, but due to the vertical delivery of models to a large number of users, the energy required for model application often exceeds the energy consumption of training many times over. For this reason, the application of models in particular must be optimized. An optimized model application also means that models can usually be executed faster, which leads to overall time savings. Since models are applied over and over again during training and during the exploratory phase of training, improved model application ultimately also has a positive effect on the training of new models. Resource-aware Machine Learning therefore helps both training and model application. Overall, Resource-aware Machine Learning is a key technology for the future that can help reduce the energy consumption of ML models while improving the efficiency of the training process.