Applications of Artificial Intelligence (AI) are becoming ever more diverse. The most prominent are probably autonomous driving and personal assistants such as those found on smartphones or in smart homes. Other well-known everyday applications include the automatic generation of product recommendations on shopping or streaming platforms (recommender systems), credit scoring, and the detection of insurance fraud. AI can also be used in a purely advisory capacity; for example, it can support doctors in diagnosing skin cancer.

Especially where AI affects people’s lives, it is essential to be able to understand how these models arrive at their decisions. If the doctor’s diagnosis does not match the algorithm’s, the algorithm is only helpful if it can also provide a comprehensible justification for its output. The research field of “Explainable Machine Learning” (XML) addresses this problem of explainability in AI. Background on why explainability is necessary and on the current state of research can be found in the blog post “Why AI needs to be explainable”.

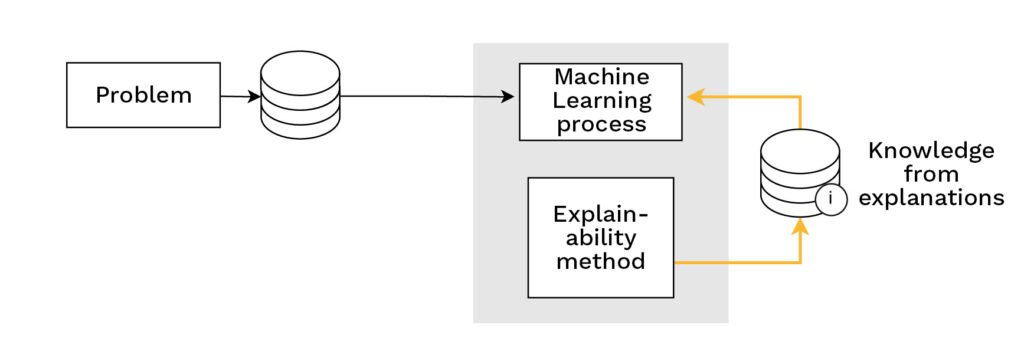

In this article, we discuss three ways in which additional knowledge can improve explainability in the Machine Learning process, or in which explainability can be used to acquire new knowledge.

Explanations are a communication problem

The diversity of AI application areas already suggests that explanations are needed in many different contexts. Both the use case and the recipient of an explanation determine which form and wording make the most sense. If an explanation is not expressed in concepts familiar to the recipient, they cannot understand it. The medical AI from our example could justify its diagnosis by marking the area of a skin photo that shows an abnormal spot. That may be enough for a dermatologist; for the diagnosis to be comprehensible to the patient, however, the explanation would also need to convey the relevant background knowledge. Current explainability methods lack the flexibility to adapt to different contexts and recipient groups. Many of them analyze the model’s decisions using statistical procedures. These can reveal correlations, but they cannot establish causal relationships. Interpreting their output correctly therefore still requires context-specific knowledge, and a recipient who lacks this knowledge cannot make use of the explanation.

Context through additional knowledge

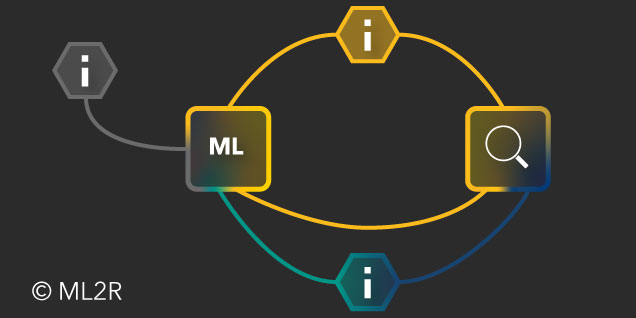

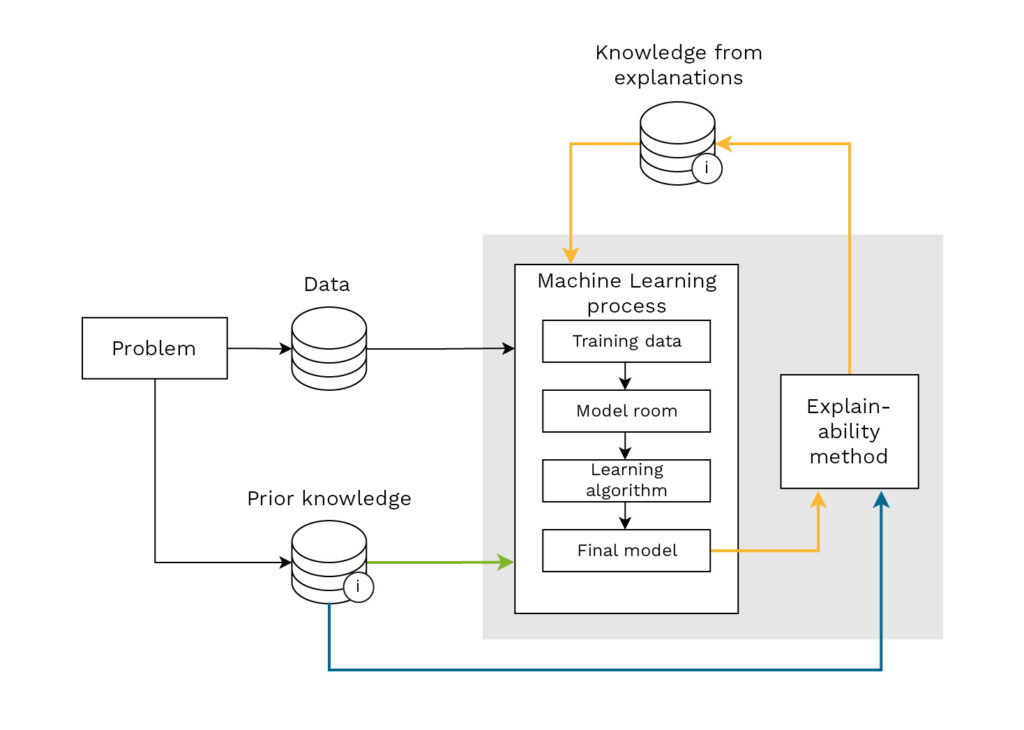

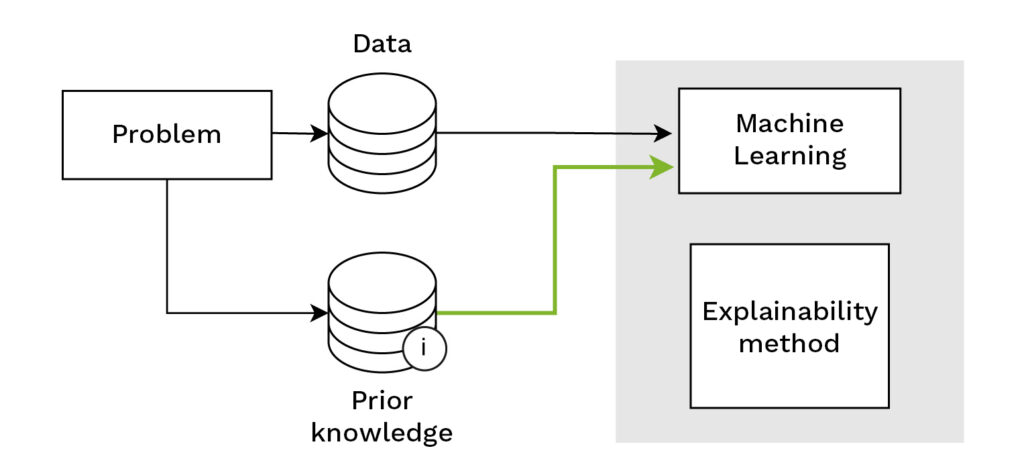

As explained in the blog post “Informed Machine Learning – Learning from Data and Prior Knowledge”, AI methods can be improved by integrating additional information in the form of external knowledge into the learning process alongside the usual training data. This knowledge can take various forms, such as domain expertise, simulations, or knowledge graphs. The blog post describes how external knowledge affects data efficiency and the robustness of algorithms. Here, we want to find out whether external knowledge can also be used to adapt explainability methods to different contexts and recipient groups, making them more widely applicable. In our research, we have identified three ways in which external knowledge and explainability methods can interact. The colored arrows in the figure depict these possibilities schematically. The figure shows a slightly modified version of the Informed Machine Learning process from the aforementioned blog post. Besides the colored arrows, the new elements are the “Explainability Method” and the “Knowledge from Explanations”.

Schematic representation of the Machine Learning process including the use of explainability methods. The colored arrows mark where external knowledge is combined with explainability methods or where such knowledge is generated by explainability methods.

Enhancing model explainability through prior knowledge

The green arrow already appears in the figure of the blog post on Informed Machine Learning. This is because there are many examples in which simply integrating prior knowledge into the learning process makes a model more comprehensible, without the need for a dedicated explainability method. Prior knowledge encourages the models to rely more on human-understandable concepts when making decisions. Two examples:

- Recommender systems typically work only with user histories. In one approach, their training data were extended with a knowledge graph containing information about the products, and recommendations were then derived from paths in this graph. The path behind a recommendation shows which connections led to the product being recommended.

- Other methods intervene in the learning algorithm itself and give the model additional feedback during training that is inferred from external knowledge. In one application, for example, an algorithm was trained to identify objects in images, and a knowledge graph was used to infer whether the detected objects plausibly occur together. If a table is recognized, a chair is a more plausible second object in the image than a tire.
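The first example can be made more concrete with a small sketch of how a path in a knowledge graph can serve as an explanation. This is a minimal illustration under stated assumptions and not the method used in the cited work. The graph, the entity names (`user_42`, `hiking_boots`, `rain_jacket`) and the function `explain_recommendation` are all hypothetical. The recommendation itself is assumed to be given already. The code only searches, by breadth-first search, for the shortest chain of triples that connects the user to the recommended product.

```python
from collections import deque


def explain_recommendation(triples, user, item):
    """Return the shortest chain of (head, relation, tail) triples linking
    `user` to `item` in a hypothetical knowledge graph, or None."""
    # Treat each triple as an undirected edge but remember the original triple.
    neighbours = {}
    for head, relation, tail in triples:
        neighbours.setdefault(head, []).append((tail, (head, relation, tail)))
        neighbours.setdefault(tail, []).append((head, (head, relation, tail)))

    queue = deque([user])
    came_from = {user: None}  # node -> (previous node, triple used to reach it)
    while queue:
        node = queue.popleft()
        if node == item:
            # Walk back to the user to reconstruct the explanation path.
            path = []
            while came_from[node] is not None:
                previous, triple = came_from[node]
                path.append(triple)
                node = previous
            return path[::-1]
        for neighbour, triple in neighbours.get(node, []):
            if neighbour not in came_from:
                came_from[neighbour] = (node, triple)
                queue.append(neighbour)
    return None


# Hypothetical knowledge graph: user history plus product information.
kg = [
    ("user_42", "bought", "hiking_boots"),
    ("hiking_boots", "category", "outdoor"),
    ("rain_jacket", "category", "outdoor"),
    ("rain_jacket", "brand", "brand_x"),
    ("user_42", "viewed", "coffee_mug"),
]

path = explain_recommendation(kg, "user_42", "rain_jacket")
for triple in path:
    print(triple)
```

Read as a sentence, the returned path says that the user bought hiking boots, which belong to the category "outdoor", and that category also contains the rain jacket. Published systems generally learn which paths to follow or how to weight them, rather than simply taking the shortest one.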

Green arrow: The integration of prior knowledge into the Machine Learning process can directly improve the explainability of a model.

Adapting explainability methods through prior knowledge

The blue arrow in the figure covers approaches in which prior knowledge directly influences the explainability method. Such methods usually belong to the so-called “post-hoc” (after-the-fact) methods. The name reflects that they are applied after training: they cannot influence the Machine Learning process and only have access to the final model. “Counterfactual” methods are a good example. They systematically generate artificial inputs for the model and examine how its output changes. Counterfactual methods can thus answer “what if” questions, showing what would need to change in the input to obtain a different (desired) output.

Blue arrow: Prior knowledge can be used to improve or adapt explainability methods.

For example, if a bank’s automated system rejects a loan application, the applicant could use these methods to find out which details of the application, such as place of residence, loan amount, age, or income, would have to change for it to be accepted. The rules for generating the artificial inputs are application-specific, so the method must be adapted using prior knowledge to provide reliable and meaningful explanations. A suggestion to reduce the loan amount to a value below zero so that the application is accepted, for instance, is theoretically possible but useless. Alternatively, the user can supply the artificial inputs interactively: the applicant could try out various changes to their loan application themselves in order to find an acceptable alternative to the original.

Counterfactual methods are therefore well suited to self-directed model analysis and offer a promising way of adapting explanations to a specific user and context.
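The loan example can be illustrated with a sketch that rests on strong simplifying assumptions. The credit model `approve` is a hypothetical linear rule on values in thousands. The search is a deliberately simple single-feature counterfactual search. For each feature it may change, it tries integer values in order of increasing distance from the original. It then returns the first value that turns the rejection into an approval. Prior knowledge enters only through the hypothetical `ranges` argument, which states which features may change and within which bounds.

```python
def approve(app):
    """Hypothetical credit model: approve if income minus twice the loan
    amount (both in thousands) reaches 20."""
    return app["income"] - 2 * app["amount"] >= 20


def single_feature_counterfactuals(app, model, ranges):
    """For each feature in `ranges`, return the closest integer value within
    the allowed (low, high) bounds that turns a rejection into an approval,
    or None if no such value exists. Features absent from `ranges` are
    treated as immutable."""
    results = {}
    for feature, (low, high) in ranges.items():
        original = app[feature]
        # Try candidate values in order of increasing distance from the original.
        candidates = sorted(range(low, high + 1), key=lambda v: (abs(v - original), v))
        results[feature] = None
        for value in candidates:
            if value != original and model({**app, feature: value}):
                results[feature] = value
                break
    return results


applicant = {"income": 10, "amount": 5, "age": 35}

# Without prior knowledge: every feature may change, over wide ranges.
naive = single_feature_counterfactuals(
    applicant, approve, {"income": (-100, 200), "amount": (-100, 200), "age": (0, 120)}
)
# With prior knowledge: incomes and amounts are non-negative, age is immutable.
informed = single_feature_counterfactuals(
    applicant, approve, {"income": (0, 200), "amount": (0, 200)}
)
print(naive)     # {'income': 30, 'amount': -5, 'age': None}
print(informed)  # {'income': 30, 'amount': None}
```

Without prior knowledge, the search proposes a loan amount of -5, which is exactly the kind of useless suggestion described above. Once the ranges encode that amounts cannot be negative and that age cannot be changed, only the income-based counterfactual remains.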

Explanations help to generate new knowledge or make knowledge usable

Orange arrow: The explainability method helps to gain knowledge about the problem or the Machine Learning process. This knowledge can be used for future improvements.

Depending on the application, post-hoc methods can also fall under the orange arrow. In the loan example, the applicant gains knowledge, but this knowledge does not flow back into the model. If, however, a programmer uses post-hoc methods to investigate their model, they can use the knowledge gained to improve a future learning process. As illustrated by the example in the figure below and in the blog post “Why AI needs to be explainable”, such methods can reveal whether an object recognizer actually pays attention to the relevant areas of the input.

The object recognizer correctly identifies a train in the image on the left. On the right, the image has been colored using an explainability method. The redder the area, the more influence it had on the output of the algorithm. You can see that the algorithm pays less attention to the train, and more attention to the tracks.
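The explainability method used for the figure is not named here. As a stand-in, the sketch below uses occlusion sensitivity, one of the simplest post-hoc attribution techniques. It masks one patch of the input at a time and records how much the model's score drops. The "train detector" `train_score` is hypothetical. By construction, it only looks at the bottom two rows of a tiny 6x6 image, which mimics a model that relies on the tracks rather than the train.

```python
import numpy as np


def occlusion_map(image, score_fn, patch=2, baseline=0.0):
    """Occlusion sensitivity: mask one patch at a time and record how much
    the model's score drops. Larger values mean more influence."""
    height, width = image.shape
    base_score = score_fn(image)
    heat = np.zeros((height, width))
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = baseline
            heat[top:top + patch, left:left + patch] = base_score - score_fn(occluded)
    return heat


def train_score(img):
    """Hypothetical 'train detector' that, by construction, only looks at the
    bottom two rows of the image (where the tracks would be)."""
    return float(img[-2:, :].mean())


image = np.ones((6, 6))  # toy image: rows 0-3 "train", rows 4-5 "tracks"
heat = occlusion_map(image, train_score)
print(np.round(heat, 2))
```

The heat map is zero over the "train" rows and positive only over the "tracks" rows, which reveals the shortcut. A developer could use this kind of finding to improve a future training run. For large images, gradient-based attribution methods are a common, cheaper alternative to occlusion.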

Approaches such as “Explanatory Interactive Learning” offer a similar but much more direct way of incorporating knowledge into the learning process. Here, an explainability method generates an explanation for an output while the model is being trained. A human evaluates this explanation and corrects it where necessary, and the feedback is fed back into the learning algorithm. In this way, the model can be told during training which parts of the input it should focus on. The explainability method thus makes the human’s domain knowledge usable for the learning process.
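The sketch below illustrates this idea. It loosely follows the "right for the right reasons" style of explanation penalty used in some explanatory interactive learning work, under several assumptions. The model is plain logistic regression on two synthetic features. Feature 0 is a noisy genuine signal (the train), and feature 1 is a cleaner shortcut (the tracks). The human's correction is encoded as a hypothetical `irrelevant_mask` that marks feature 1 as irrelevant. For a linear model, the input gradient is proportional to the weight vector. The sketch therefore simply penalizes the squared weights on masked features, which is a simplification of the full gradient penalty.

```python
import numpy as np


def train_logreg(X, y, irrelevant_mask, lam=0.0, lr=0.1, epochs=500):
    """Logistic regression trained by gradient descent with an optional
    explanation penalty on features a human marked as irrelevant."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probabilities
        grad_w = X.T @ (p - y) / len(y)          # gradient of the mean log loss
        grad_b = np.mean(p - y)
        # Explanation penalty lam * sum((mask * w) ** 2): for a linear model the
        # input gradient is proportional to w, so this discourages reliance on
        # the masked features (simplified right-for-the-right-reasons term).
        grad_w += 2.0 * lam * irrelevant_mask * w
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


rng = np.random.default_rng(0)
n = 200
y = rng.integers(0, 2, n).astype(float)
signal = y + rng.normal(0.0, 0.5, n)    # feature 0: genuine but noisy evidence ("train")
shortcut = y + rng.normal(0.0, 0.1, n)  # feature 1: clean shortcut ("tracks")
X = np.column_stack([signal, shortcut])

mask = np.array([0.0, 1.0])  # hypothetical human feedback: feature 1 should not matter
w_plain, _ = train_logreg(X, y, mask, lam=0.0)
w_guided, _ = train_logreg(X, y, mask, lam=1.0)
print("without feedback:", w_plain)
print("with feedback:   ", w_guided)
```

With the feedback term, the weight on the shortcut feature stays small, and the model has to rely on the genuine signal instead. In deep networks the same idea is applied to actual input gradients or other explanations rather than to raw weights.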

We have seen that combining explainability methods with additional knowledge can affect both the Machine Learning process and the way humans interact with models. Interpretable knowledge sources such as knowledge graphs can help models produce more comprehensible outputs. Interactive methods give users the opportunity to examine a model on their own: they can decide for themselves what they want explained, and the knowledge gained in this way can help improve future models. Explainability methods always act as mediators between model and human; used correctly, they allow expertise to be integrated directly into the learning process. By identifying these three points of contact between explainability methods and additional knowledge, we hope to provide an analytical tool for developing new explainability methods and improving existing ones in a targeted manner.

More information in the associated paper:

Explainable Machine Learning with Prior Knowledge: An Overview.

Katharina Beckh, Sebastian Müller, Matthias Jakobs, Vanessa Toborek, Hanxiao Tan, Raphael Fischer, Pascal Welke, Sebastian Houben, Laura von Rueden. arXiv, 2021.