Machine Learning (ML) can already solve important problems, but various research studies have shown that many state-of-the-art ML models have a tremendous energy demand and thus a significant carbon footprint. For example, training a current ML language model requires about as much energy as a trans-American flight, and this does not even account for the enormous effort spent on optimizing hyperparameters in these studies. Especially in the context of an energy crisis and climate change, it is becoming increasingly important to examine not only the prediction quality but also the energy efficiency of learned models.

What does energy efficiency mean in the context of Machine Learning?

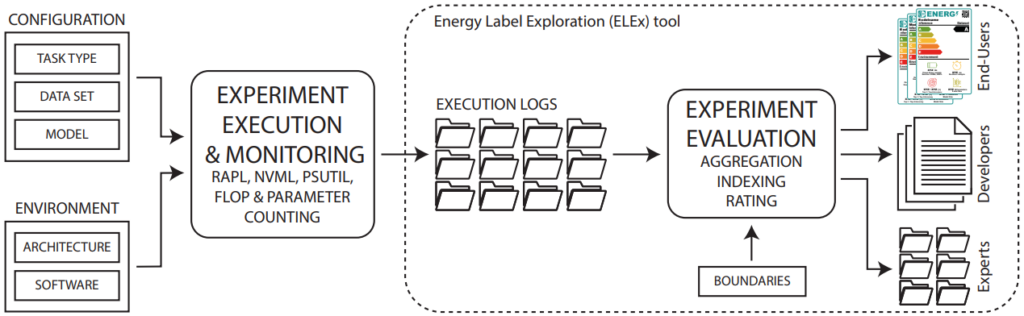

Unfortunately, determining the energy efficiency of Machine Learning is not trivial, because ML is applied in so many different ways. Scientists at ML2R (now the Lamarr Institute) have developed a characterization of ML experiments: each experiment consists of a conceptual configuration and a practical environment. The configuration defines the task (e.g., training, classification, testing of properties), an underlying dataset, and a chosen model (with all its hyperparameters). The environment consists of the computer architecture used and the software running on it, which performs the experiment. In practice, configuration and environment depend on each other: certain models require specific software, for example, and some datasets are too large to be processed on small computing units.
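The characterization described above can be sketched as a small data structure. This is only an illustrative sketch: the class and field names, as well as the example values (ResNet50, PyTorch, the batch size), are assumptions made for this example and are not taken from the researchers' implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Configuration:
    """Conceptual part of an experiment (hypothetical field names)."""
    task: str                      # e.g. "training" or "classification"
    dataset: str                   # underlying dataset
    model: str                     # chosen model
    hyperparameters: dict = field(default_factory=dict)


@dataclass
class Environment:
    """Practical part of an experiment (hypothetical field names)."""
    hardware: str                  # computer architecture used
    software: str                  # software that performs the experiment


@dataclass
class Experiment:
    configuration: Configuration
    environment: Environment


# Illustrative values only; they are not results from the article.
experiment = Experiment(
    configuration=Configuration(
        task="classification",
        dataset="ImageNet",
        model="ResNet50",
        hyperparameters={"batch_size": 32},
    ),
    environment=Environment(hardware="GPU workstation", software="PyTorch"),
)
```

Keeping configuration and environment as separate objects mirrors the distinction in the text, even though in practice the two constrain each other.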

The configuration of an experiment determines which metrics are of interest for an efficiency investigation. Tasks and datasets come with their own metrics for measuring model quality (e.g., top-1 accuracy on validation data). Metrics that capture limited resources are also relevant, such as model size (in bytes or number of parameters), runtime, or energy consumption. Energy consumption correlates with the required runtime but can fluctuate with hardware utilization. Most metrics interact: increased model complexity, for example, tends to improve accuracy but may also increase resource consumption. Depending on the hardware, the consumption of individual computer components must either be measured directly with specific tools or estimated from the specification. It is worth mentioning that increasingly popular virtualization (e.g., via Docker or Amazon Web Services) further complicates estimating real energy consumption.

The framework designed by scientists to evaluate the efficiency of machine-learned models.

From metrics to efficiency

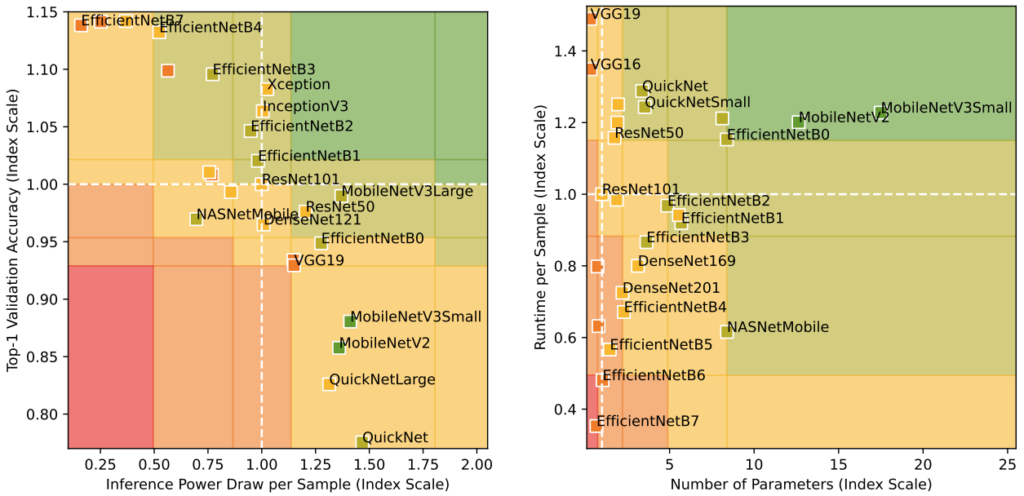

Next, the different metrics need to be related to each other to determine the efficiency of the models. Two problems arise. First, as in an apples-to-oranges comparison, the physical quantities behind the metrics are not comparable. Second, the scale on which a metric's values are measured can vary greatly (e.g., the runtime of an experiment with or without GPU acceleration). Both problems can be solved by considering relative index values instead of absolute values. To this end, a reference model is defined and assigned an index value of 1 for every metric. One can then calculate how much better (index > 1) or worse (index < 1) another model's metrics are relative to the reference model. For example, a model could be half the size of the reference model (index value 2 for the number of parameters) but also have 20% lower accuracy (index value 0.8). By dividing the index scale into fixed sections, a discrete rating (A: very good to E: very poor) can then be derived.
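The index calculation can be illustrated with a short Python sketch. It assumes that metrics where larger is better (such as accuracy) are divided by the reference value, while metrics where smaller is better (such as model size) are inverted, which reproduces the example above. The rating boundaries and the concrete measurements are hypothetical values chosen for illustration, not the ones used by the researchers.

```python
def index_value(value, reference, higher_is_better):
    """Relative index of a measurement; the reference model scores 1."""
    if higher_is_better:
        return value / reference
    return reference / value


# Hypothetical lower bounds for each rating; anything below the last is "E".
RATING_BOUNDARIES = [(1.2, "A"), (0.9, "B"), (0.7, "C"), (0.5, "D")]


def rating(index):
    """Map an index value to a discrete rating from A (very good) to E (very poor)."""
    for lower_bound, label in RATING_BOUNDARIES:
        if index >= lower_bound:
            return label
    return "E"


# A model with half the parameters of the reference model ...
size_index = index_value(value=12.5e6, reference=25e6, higher_is_better=False)
# ... and 20% lower top-1 accuracy (in percent).
accuracy_index = index_value(value=60, reference=75, higher_is_better=True)

print(size_index, rating(size_index))
print(accuracy_index, rating(accuracy_index))
```

Because every metric is expressed relative to the same reference model, the resulting indices are unit-free and can be compared across metrics.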

The final efficiency category is obtained by summarizing all metric ratings for a model using a weighted median. Weighting the metrics ensures that strongly correlated metrics (e.g., runtime and energy consumption) have less influence on the overall evaluation. Metrics can also be weighted more or less heavily depending on the intended use. The use of relative index values and fixed boundaries for discrete ratings is inspired by the established EU energy label system, which communicates the efficiency of electrical appliances in a very similar way.
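A weighted median over discrete ratings could look like the following sketch. The ratings are encoded as integers (0 = A to 4 = E), and the metric names, ratings, and weights are made-up examples. Returning the first rating at which the cumulative weight reaches half of the total is one common definition of the weighted median; how the researchers handle ties is not stated here, so this choice is an assumption.

```python
def weighted_median(ratings, weights):
    """Return the rating at which the cumulative weight first reaches half the total."""
    pairs = sorted(zip(ratings, weights))
    half = sum(weights) / 2
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight
        if cumulative >= half:
            return value
    raise ValueError("at least one rating is required")


RATING_LABELS = "ABCDE"  # position 0 = A (very good) ... position 4 = E (very poor)

# Hypothetical per-metric ratings and weights; runtime and energy are
# down-weighted because they are strongly correlated.
metric_ratings = {"accuracy": 2, "parameters": 0, "runtime": 3, "energy": 3}
metric_weights = {"accuracy": 1.0, "parameters": 1.0, "runtime": 0.5, "energy": 0.5}

names = list(metric_ratings)
overall = RATING_LABELS[
    weighted_median(
        [metric_ratings[name] for name in names],
        [metric_weights[name] for name in names],
    )
]
print(overall)
```

With these weights, runtime and energy together count as much as a single uncorrelated metric, so two poor ratings for closely related quantities do not dominate the overall label.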

Overview of the interactions of different metrics for ImageNet models.

Making expert knowledge understandable

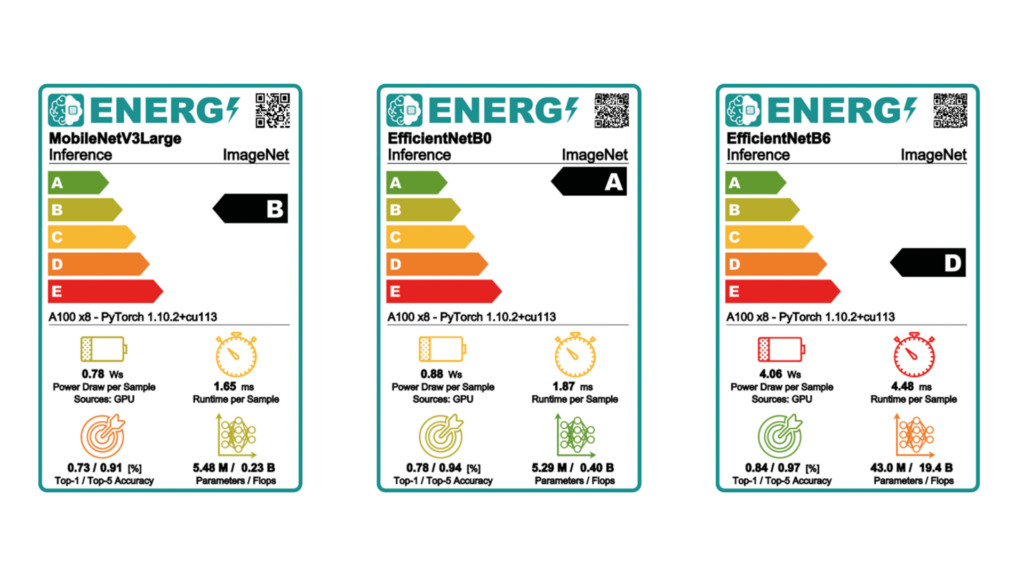

Although the new method for evaluating the efficiency of learned models was developed by scientists, future users of Machine Learning methods (e.g., software developers in industry) without solid AI expertise should also gain better access to relevant information. Here, too, energy labels serve as an example: they make complex electronic processes and their environmental impact much simpler to understand. In a very similar way, the results of an efficiency analysis for Machine Learning can be communicated according to the target audience. Scientists and experts receive log files and detailed reports, while users receive a label that presents efficiency information, such as the respective resource consumption, at a glance.

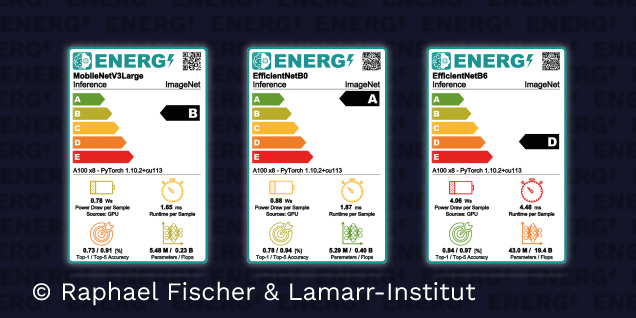

Exemplary presentation of efficiency information in the form of energy labels.

In this way, users can make informed decisions about which methods to use. The Energy Label Exploration Tool developed at the Lamarr Institute already allows users to compare the efficiency of the most well-known ImageNet models across multiple platforms.

More information in the following paper:

Proceedings of the ECML Workshop on Data Science for Social Good

Implementation: https://github.com/raphischer/imagenet-energy-efficiency