Artificial intelligence (AI) has advanced remarkably over the past few years, and many fields have benefited. One particularly compelling area is Embodied AI, which rests on the idea that intelligence is not merely a function of cognitive processes but is deeply rooted in physical interaction with the world. Embodied AI refers to AI that is integrated into physical systems, such as robots, enabling them to interact with their surroundings in a meaningful way. In this blog post, we explore what Embodied AI is, its core principles, and several applications, and we highlight its significance in shaping the future of technology.

What is Embodied AI?

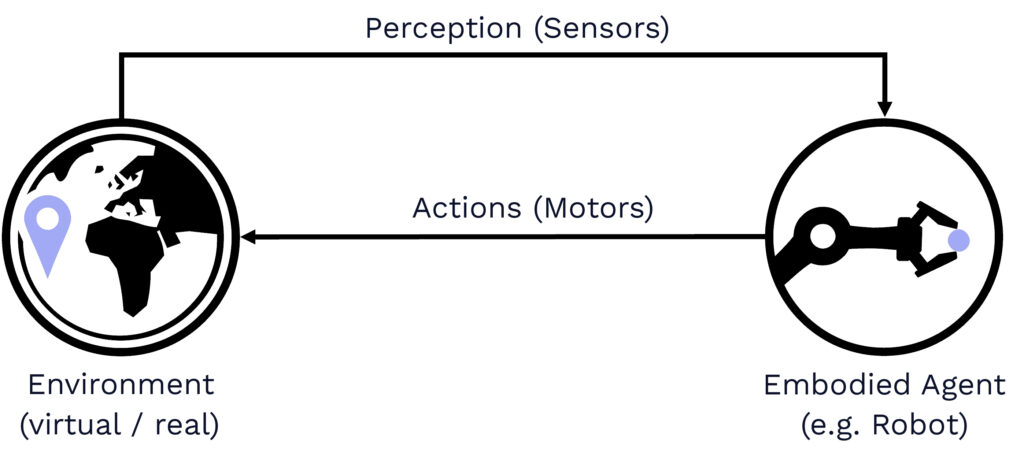

Embodied AI encompasses all aspects of interacting and learning in an environment: from perception and understanding to reasoning, planning, and execution. Unlike traditional AI models, which often operate in abstract, virtual environments, Embodied AI emphasizes physical presence and interaction. An embodied system learns about and understands its environment through direct engagement, similar to how humans and animals learn through exploration and interaction.

This approach integrates multiple fields, including computer vision, environment modeling, prediction, planning, control, reinforcement learning, physics-based simulation, and robotics. By combining these domains, Embodied AI systems can improve their behavior from experience, enabling them to adapt and respond effectively to real-world challenges. For instance, a robotic arm designed for assembly tasks not only analyzes visual data but also physically manipulates components, gaining insights into their properties and optimal handling techniques. This holistic approach to learning and interaction positions Embodied AI as a transformative force in the development of intelligent systems that can adapt and thrive in dynamic environments.

In line with the Triangular AI paradigm, our approach to advancing Embodied AI adds structure to machine learning methods to facilitate the perception and planning of complex interactions between agents and their environments. This allows agents to learn from limited experience and adapt their behavior accordingly. Simulation plays a critical role in this process by creating a digital reality in which agents can learn, relearn, and validate their actions. These practical uses show that Embodied AI is relevant beyond theoretical interest, with impact on domains such as logistics, manufacturing, and Natural Language Processing (NLP).
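As a rough illustration of learning inside a simulated environment, the sketch below trains an agent by trial and error on a toy corridor. It uses plain tabular Q-learning, a generic textbook technique chosen for brevity and not the structured approach described in this post. The corridor, the `step` function, and all parameter values are assumptions made for this example.

```python
import random

N_STATES = 5          # positions 0..4 on a simulated corridor; 4 is the goal
ACTIONS = (-1, +1)    # step left or step right


def step(state, action):
    """Hypothetical simulator: apply the action, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: act, observe the outcome, update the value estimate."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally (or when estimates are tied), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward the reward plus the discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action for every non-goal position."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]


q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

With these settings the learned policy should be `[1, 1, 1, 1]`, meaning the agent steps right from every position. Because the corridor is simulated, the agent can afford hundreds of failed attempts, which is what makes simulation attractive before moving to a physical robot.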

5 Core Principles of Embodied AI

The fundamentals of Embodied AI can be explained through five core principles:

- Interaction with the Physical World: Embodied AI systems engage with their surroundings, allowing them to gather real-time data and adapt to changing conditions. This interaction is crucial for learning and decision-making. For example, a robotic arm designed to assemble components must physically manipulate objects to understand their properties and how to handle them.

- Perception and Action Coupling: In Embodied AI, perception is not separate from action. Instead, these processes are interconnected. A robot that sees an obstacle must quickly decide how to navigate around it, illustrating the seamless integration of sensing and acting. This coupling allows for more adaptive and responsive behaviors in real-time situations.

- Learning through Experience: Just as humans learn from experiences, Embodied AI systems evolve through trial and error. They refine their actions based on feedback from their environment, leading to improved performance over time. For instance, a robot learning to walk must attempt various movements, receiving feedback on its balance and coordination, and subsequently adjusting its gait.

- Contextual Understanding: Embodied AI systems are often designed to operate within specific contexts. This contextual awareness allows them to make decisions that are informed by their surroundings, enhancing their ability to perform tasks effectively. For example, a robotic vacuum cleaner recognizes the layout of a room and adjusts its cleaning pattern accordingly.

- Multimodal Sensory Integration: Embodied AI systems utilize multiple sensory modalities – such as vision, touch, and sound – to gather information about their surroundings. This integration enhances their ability to perceive and interact with the environment more effectively. For instance, a robotic assistant might combine visual data with tactile feedback to manipulate objects with greater precision.
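To make the last principle more concrete, here is a minimal sketch of one common way to combine two sensor estimates of the same quantity: inverse-variance weighting, where the more reliable sensor gets more weight. The `Reading` class, the `fuse` function, and the example numbers are hypothetical and only serve the illustration; real systems typically use richer models.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    value: float      # estimated quantity, e.g. object width in millimetres
    variance: float   # sensor uncertainty; smaller means more trustworthy


def fuse(readings):
    """Combine independent estimates by inverse-variance weighting."""
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    # The fused variance is smaller than that of any single input.
    return Reading(value=value, variance=1.0 / total)


# Made-up example values: a coarse camera estimate and a precise tactile one.
vision = Reading(value=52.0, variance=4.0)
touch = Reading(value=50.0, variance=1.0)
estimate = fuse([vision, touch])
print(estimate)
```

In this example the fused width is about 50.4 mm with a variance of about 0.8, so the result sits closer to the tactile reading and is more certain than either sensor alone.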

Exemplary Applications of Embodied AI

Embodied AI has numerous innovative applications that showcase its potential:

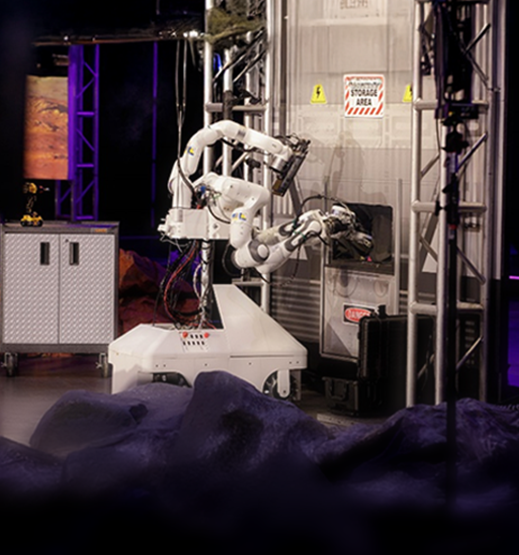

Robotic Avatars: Team NimbRo from the University of Bonn recently won the grand prize at the ANA Avatar XPRIZE competition, securing five million US dollars for their robotic avatar system. This system allows users to virtually put themselves in remote locations through an operator station and an avatar robot connected via the Internet. The avatar robot captures environmental data using sensors, enabling users to interact with objects, communicate, and move intuitively, enhancing telepresence experiences. The technology has applications in assisting individuals in their daily lives, telemedicine, and operating in hazardous environments.

Robot Soccer: The same team, NimbRo, achieved victory at the RoboCup World Championship, defending their title in the Humanoid AdultSize category. Their humanoid soccer-playing robots demonstrated exceptional skills, winning matches with impressive scores. The robots utilize advanced software for real-time visual perception and agile movement, showcasing their ability to maintain balance and optimize kicking movements. This competition not only promotes robotics research but also aims to develop robots capable of beating human champions in soccer by 2050.

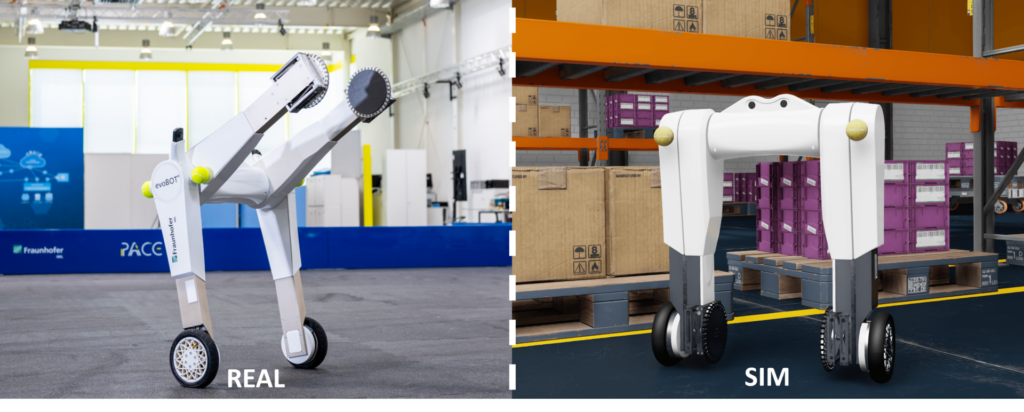

Logistics: In logistics, the evoBOT robot platform developed at Fraunhofer IML exemplifies the capabilities of Embodied AI. Designed for dynamic locomotion, the evoBOT can navigate uneven surfaces without external counterweights, making it suitable for diverse operational environments. Its modular design allows for various functionalities, such as transporting objects and assisting humans in collaborative tasks. Using Guided Reinforcement Learning (RL), the evoBOT can learn to balance and adapt its movements, which increases flexibility in logistics applications. Further information on reinforcement learning can be found in the related blog post series on RL for Robotics.

Conclusion

Embodied AI represents a significant shift in our understanding of intelligence, emphasizing the critical role of physical interaction in learning and decision-making. As we continue to explore this exciting field, we are likely to see even more innovative applications that can transform industries and improve our daily lives. The integration of Embodied AI into various sectors not only enhances functionality but also provides a deeper understanding of how intelligence can manifest in the physical world.

At the Lamarr Institute for Machine Learning and Artificial Intelligence, we are committed to advancing research in Embodied AI. By focusing on the holistic integration of perception, planning, and execution, we aim to develop intelligent agents that not only understand their environment but can also interact with it in meaningful ways. This research is vital, especially in regions like Europe, where engineering and robotics are central to the economy.

As we look forward to the future of technology, it is essential to engage with and explore the possibilities that Embodied AI offers. Whether through research, collaboration, or education, we all have a role to play in shaping the evolution of this fascinating field. The potential for Embodied AI to revolutionize how we interact with technology is immense, and ongoing advancements could lead to even more groundbreaking applications that we have yet to imagine.

Find out more about Lamarr’s research area of Embodied AI here.