When we use artificial intelligence (AI) for important tasks, we want it to treat all people fairly. Unfortunately, this is often not the case. For example, in 2018, Amazon had to withdraw a system for pre-selecting applicants because it systematically rejected applications containing the word "women" (as in "captain of the women's soccer team"). As a result, there is an active debate on how to design AI systems that support fair decisions. This raises a fundamental question that is easy to answer neither mathematically nor ethically: when exactly is a system fair?

Why are AI systems unfair in the first place?

One might expect that AI systems, which have no prejudices, personal preferences, or agendas, would automatically make fair decisions. However, AI needs data, as well as instructions on which patterns to look for in that data. For instance, a machine-learning-based AI for personnel selection can be trained on past application data to identify good candidates. To do so, the data must be labeled to indicate who the good candidates were. Because both the dataset and these labels are shaped by human decisions, both can reflect the inequalities of our society. Human biases can be contained in the data itself if, for example, a hiring program analyzes recommendation letters written by people. They can distort the composition of the dataset if such a program is trained mostly on data from white applicants. And they can be carried into the AI's decisions if it is trained to imitate past human decisions.

What solutions are proposed?

One common reaction to this problem is to withhold information about so-called protected characteristics (such as gender, ethnicity, or age). Human decision-makers are often treated the same way: if a hiring manager does not know that an applicant is from Turkey, they cannot discriminate against them on that basis. For AI, however, this approach has a weakness: AI systems are very good at discovering complex patterns in large datasets. Membership in a protected group often leaves traces in the collected data, and AI systems can pick up on these traces. An algorithm that receives no direct information about applicants' gender might still favor men over other genders, for example by filtering out people with career gaps suggestive of pregnancy, members of certain clubs, or people who pursue hobbies that are statistically less popular among men.
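To make the proxy effect concrete, here is a minimal Python sketch on a made-up toy pool. The `Applicant` class, the `career_gap` feature, the group sizes, and the filter rule are all hypothetical illustrations, not real data or a real hiring system. The filter never sees gender, yet it accepts the two groups at different rates because the proxy is assumed to be more common among women.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    gender: str        # protected attribute: never shown to the filter
    career_gap: bool   # hypothetical proxy feature the filter does see


def gender_blind_filter(applicant: Applicant) -> bool:
    """Hypothetical rule that looks only at career_gap, never at gender."""
    return not applicant.career_gap


def selection_rate_by_group(applicants, decide):
    """Share of each gender group that the decision rule accepts."""
    totals, accepted = {}, {}
    for a in applicants:
        totals[a.gender] = totals.get(a.gender, 0) + 1
        accepted[a.gender] = accepted.get(a.gender, 0) + int(decide(a))
    return {g: accepted[g] / totals[g] for g in totals}


# Made-up toy pool: career gaps are ASSUMED to be more common among women.
pool = (
    [Applicant("woman", True)] * 3 + [Applicant("woman", False)] * 7
    + [Applicant("man", True)] * 1 + [Applicant("man", False)] * 9
)

rates = selection_rate_by_group(pool, gender_blind_filter)
print(rates)
```

Running it prints `{'woman': 0.7, 'man': 0.9}`: removing the protected attribute from the input did not remove the disparity, because the proxy carries the same information.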

A second possible approach is to analyze statistically whether the system treats different groups (such as different genders) similarly. It is generally accepted that no such AI system will classify every applicant correctly: sometimes very capable applicants will be rated poorly, and sometimes less capable applicants will be rated highly. In a fair system, these errors should not disadvantage a particular group; qualified women or non-binary people, for example, should not be rejected again and again while less qualified men are invited. At first glance, one might expect that fairness in this sense is easy to check mathematically. However, there is a problem: there are several plausible criteria for when a system is statistically fair, and it can be shown mathematically that these criteria cannot all be met at the same time if the proportion of qualified individuals differs between the groups (unless the system makes no errors at all).
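One way to see why is a standard identity, written here in our own notation rather than the article's. For a given group, let p be the proportion of qualified applicants, TPR the share of qualified applicants the system selects, FPR the share of unqualified applicants it selects, and PPV the share of selected applicants who are actually qualified. These quantities are linked as follows:

```latex
\[
\mathrm{PPV} = \frac{\mathrm{TPR}\cdot p}{\mathrm{TPR}\cdot p + \mathrm{FPR}\cdot(1-p)}
\quad\Longrightarrow\quad
\mathrm{FPR} = \frac{p}{1-p}\cdot\frac{1-\mathrm{PPV}}{\mathrm{PPV}}\cdot\mathrm{TPR}
\]
```

If two groups have the same TPR and the same PPV but different values of p, the factor p/(1 − p) differs, so their FPRs must differ too. Conversely, if TPR and FPR are equal across groups and the system selects at least some unqualified applicants, PPV must differ. Only a system that never selects an unqualified applicant (PPV = 1, FPR = 0) escapes this trade-off.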

A fair algorithm? Scenario 1

Let's look at an example with numbers (see Table 1). Suppose a hiring manager uses an AI system to filter out applications they don't need to review. Assume we have an applicant pool of 100 women and 100 men. Of the women, 60 are qualified for the job, while only 20 of the men are. Let's further assume we have an algorithm that forwards 48 of the 60 qualified women and 16 of the 20 qualified men to the manager. It also passes on four of the 40 unqualified women and eight of the 80 unqualified men. A statistical analysis of this algorithm would find that, regardless of gender, it correctly identifies 80% of the qualified individuals and wrongly marks 10% of the unqualified ones as qualified. In this respect, the algorithm is fair. However, looking at the applications on the manager's desk, 33% of the men (8 out of 24) are unqualified, compared to only about 8% of the women (4 out of 52). Unqualified men therefore make up a much larger share of the forwarded applications from their group than unqualified women do, and in absolute numbers twice as many of them (8 versus 4) get a chance at the job.
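The rates in this scenario can be recomputed with a few lines of Python. This is only a sketch: `group_metrics` and its parameter names are our own hypothetical helper, and exact fractions are used so that no rounding noise creeps into the comparison.

```python
from fractions import Fraction


def group_metrics(qualified, unqualified, fwd_qualified, fwd_unqualified):
    """Rates for one group, from counts of (un)qualified applicants and how many of each were forwarded."""
    forwarded = fwd_qualified + fwd_unqualified
    return {
        # share of qualified applicants correctly forwarded
        "true_positive_rate": Fraction(fwd_qualified, qualified),
        # share of unqualified applicants wrongly forwarded
        "false_positive_rate": Fraction(fwd_unqualified, unqualified),
        # share of the manager's pile that is unqualified (1 - precision)
        "unqualified_share_on_desk": Fraction(fwd_unqualified, forwarded),
    }


# Scenario 1 counts, taken from the text
scenario_1 = {
    "women": group_metrics(qualified=60, unqualified=40, fwd_qualified=48, fwd_unqualified=4),
    "men": group_metrics(qualified=20, unqualified=80, fwd_qualified=16, fwd_unqualified=8),
}

for group, metrics in scenario_1.items():
    print(group, {name: f"{float(value):.1%}" for name, value in metrics.items()})
```

For both groups the true and false positive rates come out at 80.0% and 10.0%, while the unqualified share on the desk is 7.7% for women and 33.3% for men.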

A fair algorithm? Scenario 2

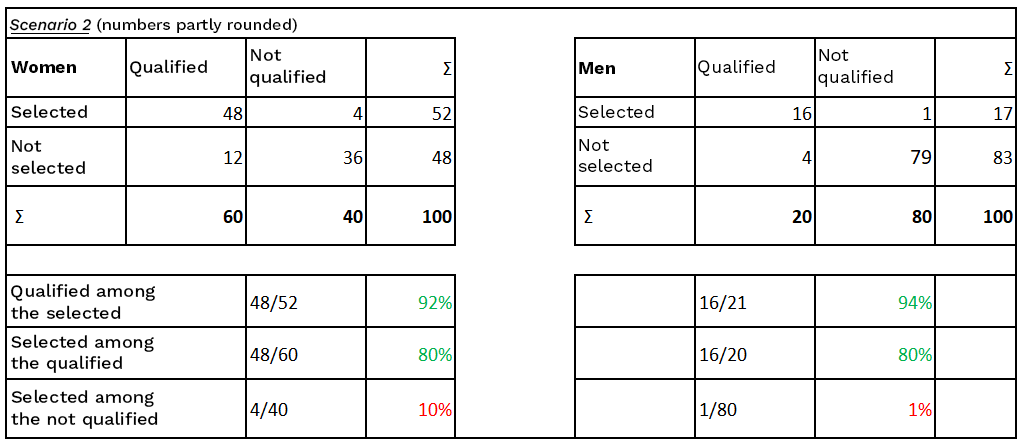

If this is considered unacceptable, one could instead train a system in which the share of unqualified applicants among those selected is similar for men and women (see Table 2). One way might be a system that, with otherwise similar numbers, selects only one unqualified man. However, this also changes another parameter: while 10% (4 out of 40) of the unqualified women are still invited, only about 1% (1 out of 80) of the unqualified men are. In this scenario, an unqualified woman's chance of having her application accepted by the system is eight times higher than an unqualified man's. This, too, can be considered unfair. Because these rates are mathematically linked, there is no way to train a system that is balanced in all these respects when the proportion of qualified applicants differs between the groups.
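The same kind of calculation shows the trade-off in Scenario 2. The helpers below are again hypothetical and are redefined so that the snippet runs on its own; the counts are those from the text, with only one unqualified man forwarded.

```python
from fractions import Fraction


def false_positive_rate(unqualified, fwd_unqualified):
    # Share of unqualified applicants the system wrongly forwards
    return Fraction(fwd_unqualified, unqualified)


def unqualified_share_on_desk(fwd_qualified, fwd_unqualified):
    # Share of forwarded applications that come from unqualified applicants (1 - precision)
    return Fraction(fwd_unqualified, fwd_qualified + fwd_unqualified)


# Scenario 2: as in Scenario 1, except that only one unqualified man is forwarded
women_fpr = false_positive_rate(unqualified=40, fwd_unqualified=4)
men_fpr = false_positive_rate(unqualified=80, fwd_unqualified=1)
women_share = unqualified_share_on_desk(fwd_qualified=48, fwd_unqualified=4)
men_share = unqualified_share_on_desk(fwd_qualified=16, fwd_unqualified=1)

print(f"unqualified share on desk: women {float(women_share):.1%}, men {float(men_share):.1%}")
print(f"false positive rate: women {float(women_fpr):.1%}, men {float(men_fpr):.2%}")
print(f"ratio of false positive rates: {women_fpr / men_fpr}")
```

It prints unqualified shares of 7.7% and 5.9%, false positive rates of 10.0% and 1.25%, and a ratio of 8: bringing the desk shares closer together pushes the false positive rates apart.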

Which of these scenarios represents the fair (or at least the fairer) approach is not a mathematical question; it depends on context and ethical considerations. In our case, one might consider it important that an unqualified applicant has the same probability of landing on the manager's desk regardless of gender, which favors Scenario 1. Alternatively, one might want to counteract existing inequalities in our society by minimizing the likelihood of jobs going to unqualified men, which favors Scenario 2.

What do we learn from this?

AI decisions are not automatically fair, and there is no simple mathematical way to ensure the fairness of an AI decision. Instead, the search for a fair AI system must be based on conscious, ethically informed design choices that consider the relationships between the data used and existing societal inequalities. Recognizing these challenges is the first and necessary step towards fairer AI systems.