Narratives are a powerful cognitive mechanism for structuring information and enhancing comprehension – a core component of human learning and reasoning. This blog post introduces Story of Thought (SoT), a novel framework that applies these principles to enhance the reasoning abilities of Large Language Models.

Narratives are also invaluable for simplifying complex concepts across domains, such as science communication and education. By establishing context and connecting ideas, narratives make it easier to grasp relationships that might remain unclear when relying solely on isolated facts or questions.

© V. Sadiri Javadi

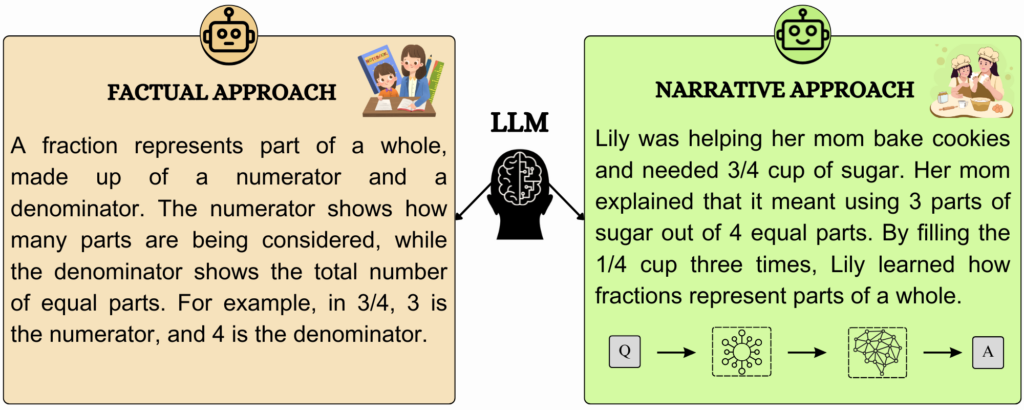

An Example: A conversational agent is tasked with explaining the concept of fractions. In a fact-based approach, the fundamentals are presented directly and concisely. Conversely, the narrative approach embeds the concept within a progressively developed story, enriching learning through context and emotional engagement.

Humans understand the world through stories – we share knowledge, solve problems, and pass on experiences using narratives. This led us to an intriguing idea:

Could the power of storytelling help Large Language Models (LLMs) think more effectively?

In our current research project, we are exploring precisely this question. Our goal is to investigate whether incorporating narrative elements could improve the problem-solving abilities of LLMs like GPT-4 or LLaMA in knowledge-intensive reasoning tasks.

Why Narratives? Insights from Cognitive Science

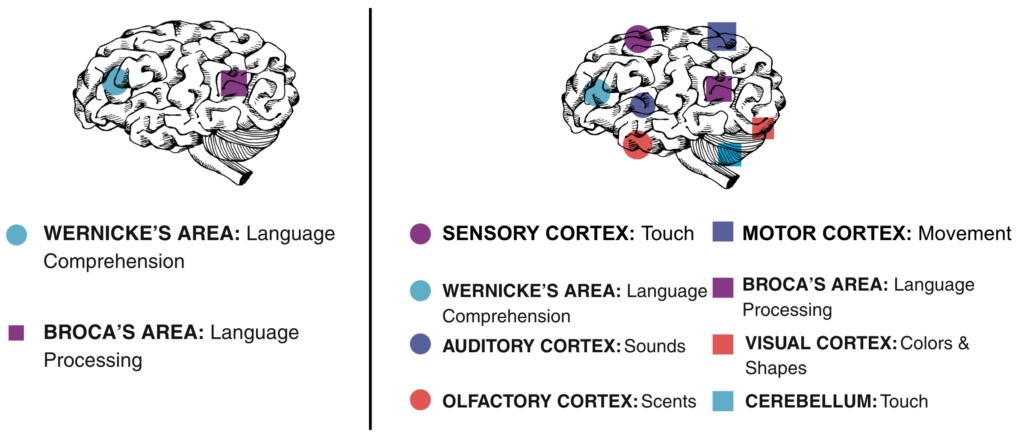

The idea of using stories originates from cognitive science. A “narrative” describes a sequence of events woven into a coherent story. The human brain reacts to stories in a unique way: various regions, from Broca’s area to the sensory cortices, work together to process language and generate vivid mental imagery – an effect known as “narrative transportation.”

Narratives are not only engaging but also exceptionally effective for structuring and retrieving information. They help link abstract concepts to familiar contexts, fostering deeper understanding and better retention.

When applied to LLMs, we hypothesize that narratives could:

- Enhance their ability to identify relevant information,

- Provide a logical structure for complex reasoning,

- Highlight causal relationships and dependencies within the task.

These considerations led to the development of our new framework: Story of Thought (SoT).

Story of Thought (SoT): How Narratives Assist LLMs

For LLMs, problem-solving often resembles a puzzle: disjointed facts need to be arranged into a coherent picture. Existing methods, like Chain of Thought (CoT), help models break problems into logical steps – an effective but rigid approach. We wondered:

What if, instead of rigid reasoning steps, we could create a story?

This story would not only provide structure but also impart meaning and resolve complexity.

Our approach, Story of Thought (SoT), emerged from this idea. This narrative-driven framework leverages the benefits of storytelling – contextualization, coherence, and relational understanding – to offer LLMs a novel, effective way to solve complex tasks.

How Story of Thought (SoT) Works

To fully harness the power of narratives in problem-solving, we developed a three-step approach:

- Question Clarification: The problem is broken down into its core elements. Using LLMs, we identify the central concepts and subtopics necessary for understanding the issue. This diagnostic phase forms the foundation for the subsequent narrative.

- Generating a Narrative: Building on the clarified components, a structured story is crafted. Techniques like metaphors, analogical reasoning, and progressive disclosure are employed to contextualize the problem, making it more accessible for the model to process.

- Problem Solving: The generated narrative provides the foundation for the LLM to solve the problem. The context and logical structure offered by the narrative significantly enhance the model’s reasoning and problem-solving capabilities.
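
The three steps above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not the implementation used in the project: the `LLM` type stands for any function that takes a prompt and returns text, and the function names and prompt wordings are hypothetical placeholders.

```python
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a completion.
LLM = Callable[[str], str]


def clarify_question(llm: LLM, question: str) -> str:
    """Step 1: ask the model for the core concepts and subtopics."""
    prompt = (
        "Break the following problem into its core concepts and subtopics.\n"
        f"Problem: {question}"
    )
    return llm(prompt)


def generate_narrative(llm: LLM, question: str, clarification: str) -> str:
    """Step 2: turn the clarified components into a structured story."""
    prompt = (
        "Using metaphors, analogies, and progressive disclosure, write a "
        "structured story that explains the concepts below in the context "
        "of the problem.\n"
        f"Problem: {question}\n"
        f"Concepts: {clarification}"
    )
    return llm(prompt)


def solve_with_narrative(llm: LLM, question: str, narrative: str) -> str:
    """Step 3: answer the original question with the story as context."""
    prompt = (
        f"Story:\n{narrative}\n\n"
        f"Using the story above as context, solve this problem:\n{question}"
    )
    return llm(prompt)


def story_of_thought(question: str, llm: LLM) -> str:
    """Run the three steps in order; each step feeds the next."""
    clarification = clarify_question(llm, question)
    narrative = generate_narrative(llm, question, clarification)
    return solve_with_narrative(llm, question, narrative)
```

To try it, pass any callable that wraps a model client as `llm`, for example `story_of_thought("Why does ice float on water?", my_model)`, where `my_model` is whatever prompt-to-text function is available.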

Practical Evaluation: SoT in Action

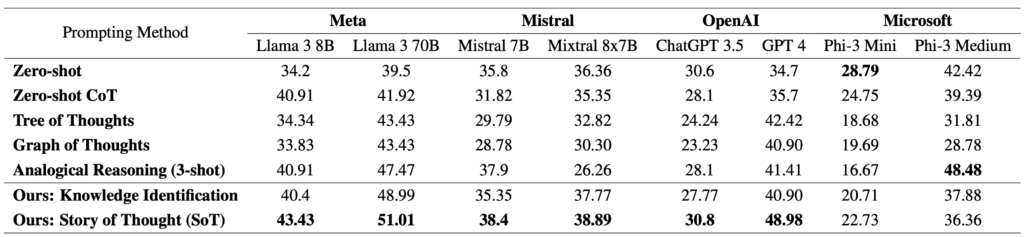

To assess the success of our approach, we tested SoT on two demanding datasets requiring logical reasoning and domain-specific knowledge:

- GPQA: A dataset featuring graduate-level tasks in physics, chemistry, and biology.

- JEEBench: A dataset based on tasks from the JEE-Advanced exam, covering high-school-level physics, chemistry, and mathematics.

Key Findings:

Performance Boost: Across various LLMs – including versions of LLaMA, Mistral, GPT, and Microsoft’s Phi models – SoT consistently outperformed traditional prompting methods such as zero-shot and Chain of Thought (CoT) prompting. For example, the open-source model Llama 3 (70B parameters) achieved the highest accuracy on GPQA using the SoT method, scoring 51.01%. With GPT-4, SoT improved accuracy on GPQA by 14.28 percentage points compared to zero-shot prompting.
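
A gain in percentage points is the plain difference between two accuracy values, not a relative change. The sketch below shows how such a comparison could be computed. The answer lists are toy values invented purely for illustration, and the helper names are hypothetical; none of this reproduces the reported results.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Return accuracy in percent for answers compared against gold labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return 100.0 * correct / len(gold)


def percentage_point_gain(baseline_acc: float, method_acc: float) -> float:
    """Absolute difference between two accuracies, in percentage points."""
    return method_acc - baseline_acc


# Toy data, made up for this example only.
gold = ["A", "C", "B", "D"]
zero_shot_answers = ["A", "B", "B", "A"]  # 2 of 4 correct
sot_answers = ["A", "C", "B", "A"]        # 3 of 4 correct

zero_shot_acc = accuracy(zero_shot_answers, gold)
sot_acc = accuracy(sot_answers, gold)
gain = percentage_point_gain(zero_shot_acc, sot_acc)
```

In this toy case the zero-shot accuracy is 50% and the SoT accuracy is 75%, so the gain is 25 percentage points, even though the relative improvement is 50%.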

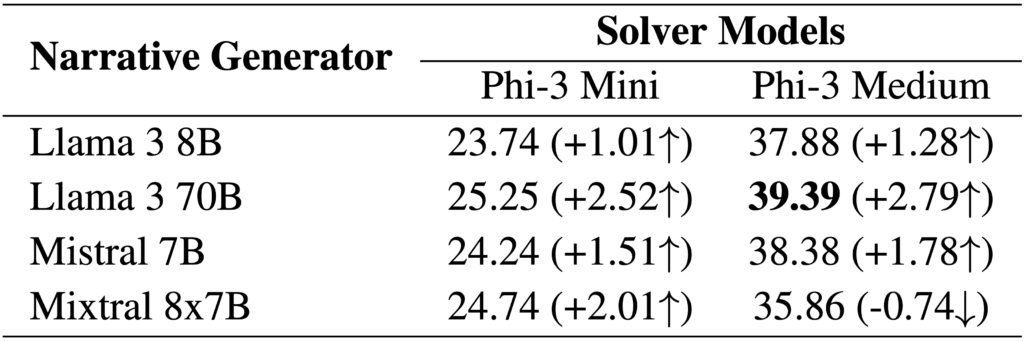

Robustness: Even smaller models benefited significantly from the narrative approach, highlighting its scalability. Notably, narratives generated by more powerful models improved the performance of smaller, less capable ones – suggesting that storytelling could serve as an effective means of knowledge transfer between LLMs.
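
One way to picture this transfer is to let a stronger model write the narrative and a smaller model answer with it. The following is a rough sketch, assuming the stronger model handles both clarification and narrative generation. The `LLM` type, the function names, and the prompts are hypothetical and not taken from the project.

```python
from typing import Callable

# Hypothetical interface: prompt in, completion out.
LLM = Callable[[str], str]


def narrate(narrator: LLM, question: str) -> str:
    """Have the stronger model clarify the question and turn it into a story."""
    concepts = narrator(f"List the core concepts needed to answer:\n{question}")
    return narrator(
        "Write a short explanatory story that connects these concepts to the "
        f"problem.\nProblem: {question}\nConcepts: {concepts}"
    )


def solve_with_borrowed_narrative(question: str, narrator: LLM, solver: LLM) -> str:
    """The narrator writes the story; the smaller solver model answers with it."""
    narrative = narrate(narrator, question)
    return solver(f"Story:\n{narrative}\n\nUsing the story, answer:\n{question}")
```

Keeping the narrator and the solver as separate arguments makes it easy to swap models on either side and compare how much a borrowed narrative helps a given solver.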

Domain-Specific Enhancements: SoT showed the largest improvements in subjects requiring contextual understanding, such as biology and chemistry. There, narratives simplified complex relationships, which in turn improved problem-solving outcomes.

Outlook: The Future of Story of Thought (SoT)

Our initial results are promising, but they raise just as many questions as they answer. One of the most intriguing questions for us is:

Why are narratives so effective?

Do they simply provide a more structured way of organizing information for LLMs? Or is there a more fundamental mechanism inherent in the structure of narratives that enhances the reasoning abilities of models?

Limitations and Challenges

We acknowledge that SoT may not be equally effective for all types of tasks. Problems heavily reliant on specific factual knowledge or multiple-choice formats might benefit less from a narrative-based approach. In such cases, a combination of traditional prompting methods and SoT could prove advantageous.

New Horizons for LLMs

The use of narratives in problem-solving not only unlocks new possibilities but also has the potential to elevate the thinking and reasoning capabilities of AI agents to a new level. By enabling LLMs to “think” in narratives, we lay the groundwork for a deeper integration of human cognitive mechanisms into AI systems – a development that could fundamentally transform how we leverage AI in the future.