In this blog post, we introduce fuzz testing (also called fuzzy testing; short: fuzzing), a method for continuous, automated software testing at moderate cost in time and money. It suits dynamic development and deployment environments and can achieve significantly greater test coverage than manual methods. Originally developed for traditional software, the approach is increasingly being extended to the testing of AI systems, particularly neural networks. It combines conceptual simplicity with a range of advantageous properties, which makes it an attractive solution for the AI-specific issues we discussed in a previous blog post.

Fuzzing: Testing with randomness

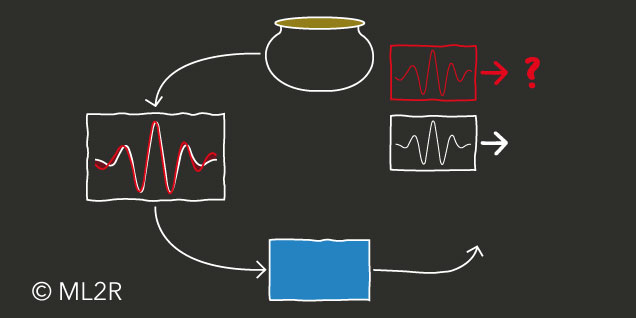

Fuzzy testing is based on an evolutionary optimization strategy and is a promising candidate for addressing challenges that are particularly significant for AI systems. Starting with a set of pre-selected test inputs, new inputs are generated at random through application-specific transformations and presented to the AI model. The algorithm considers such a random mutation “interesting” if at least one of the following criteria is met:

- Poor(er) predictions: The model’s prediction is either incorrect or of inadequate quality.

- Increased search space coverage: The random mutation significantly increases a suitable measure of the model’s state space coverage. For a neural network with neuron coverage as the coverage measure, this would be the case, for example, if the new input strongly activates neurons that no other test has activated, or has activated only weakly.

Since the second criterion incorporates a measure of the model’s state space coverage into the evaluation of a test input, this approach is also known as coverage-based fuzzing. All random inputs are collected and rated by how interesting they are. At regular intervals, a certain proportion of the less interesting test cases is filtered out.
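To make the coverage criterion more concrete, here is a minimal sketch of a neuron coverage tracker. It assumes that the activations of all neurons are available as a flat list of numbers per test input; the class name `NeuronCoverage` and the threshold of 0.5 are illustrative choices, not part of any particular fuzzing tool.

```python
from typing import Sequence, Set


class NeuronCoverage:
    """Tracks which neurons have been strongly activated by any test so far."""

    def __init__(self, num_neurons: int, threshold: float = 0.5) -> None:
        self.num_neurons = num_neurons
        self.threshold = threshold  # assumed cut-off for "strongly activated"
        self.covered: Set[int] = set()

    def new_neurons(self, activations: Sequence[float]) -> Set[int]:
        """Neurons this input activates that no earlier input has activated."""
        active = {i for i, a in enumerate(activations) if a > self.threshold}
        return active - self.covered

    def update(self, activations: Sequence[float]) -> int:
        """Record the input and return how many neurons it newly covered."""
        gained = self.new_neurons(activations)
        self.covered |= gained
        return len(gained)

    def ratio(self) -> float:
        """Fraction of all neurons covered so far."""
        return len(self.covered) / self.num_neurons
```

An input would count as interesting under the second criterion whenever `update` returns a value above some minimum gain. How large that gain has to be to count as "significant" is a tuning decision.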

The image shows one pass through the fuzzing test loop. Starting from a collection of existing test inputs, an input is drawn and altered with an application-specific random mutation. If the prediction of the AI model under test is incorrect, or if the test input significantly increases a suitable coverage measure (such as neuron coverage for neural networks), the mutated input is added to the test collection. This process is repeated until the test budget is exhausted or a critical error occurs.
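The loop described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a reference implementation: inputs are plain floats, the model, oracle, coverage function, and mutation (`toy_model`, `toy_is_failure`, `toy_coverage`, `toy_mutate`) are hypothetical stand-ins, any new coverage counts as "significant", and the loop runs until the budget is used up instead of stopping at the first error.

```python
import random
from typing import Callable, List, Set, Tuple


def fuzz(
    seeds: List[float],
    mutate: Callable[[float, random.Random], float],
    is_failure: Callable[[float], bool],
    coverage_of: Callable[[float], Set[int]],
    budget: int,
    rng: random.Random,
) -> Tuple[List[float], List[float], Set[int]]:
    """Coverage-guided fuzzing loop; returns (corpus, failures, covered)."""
    corpus = list(seeds)
    covered: Set[int] = set()
    for seed in corpus:
        covered |= coverage_of(seed)
    failures: List[float] = []
    for _ in range(budget):
        parent = rng.choice(corpus)            # draw an existing test input
        child = mutate(parent, rng)            # apply a random mutation
        gained = coverage_of(child) - covered  # coverage not seen before
        failed = is_failure(child)             # oracle: wrong prediction?
        if failed:
            failures.append(child)
        if failed or gained:                   # "interesting": keep it
            corpus.append(child)
            covered |= gained
    return corpus, failures, covered


# Hypothetical toy setup: a sign classifier that is wrong on [30, 40).
def toy_model(x: float) -> int:
    if 30 <= x < 40:
        return 0  # deliberate bug
    return 1 if x > 0 else 0


def toy_is_failure(x: float) -> bool:
    return toy_model(x) != (1 if x > 0 else 0)


def toy_coverage(x: float) -> Set[int]:
    return {int(x // 10)}  # one coverage bucket per interval of width 10


def toy_mutate(x: float, rng: random.Random) -> float:
    return x + rng.uniform(-5.0, 5.0)


corpus, failures, covered = fuzz(
    [1.0], toy_mutate, toy_is_failure, toy_coverage, 2000, random.Random(0)
)
```

Because inputs that open up new coverage buckets are kept and mutated further, the search can drift away from the seed towards regions such as the faulty interval, which purely local mutations of the seed alone would not reach.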

The transformations available as basic building blocks for the random mutations play a crucial role in achieving

- testing breadth (how much test inputs can differ),

- testing depth (how densely the search space is covered around individual inputs), and

- testing efficiency.

They determine which new inputs can be generated from the existing ones and how long it takes to explore new areas of the search space.

For example, for an image processing model, standard image processing filters (blur, noise, distortions, etc.) could be randomly combined. The resulting testing depth would make it possible to find adversarial examples or, more generally, model instabilities that are, by definition, very close to examples from the training dataset. In the case of adversarial examples, they are so close that differences from the original are barely or not at all noticeable to the naked eye.
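As an illustration of such filter-based mutations, the following sketch randomly chains a few simple transformations. It assumes grayscale images stored as nested lists of floats in the range [0, 1]; the three filters, their parameters, and the function names are hypothetical examples chosen for brevity, and a real setup would more likely use an image library.

```python
import random
from typing import Callable, List

Image = List[List[float]]  # grayscale pixels in [0, 1]


def clamp(p: float) -> float:
    return min(1.0, max(0.0, p))


def add_noise(img: Image, rng: random.Random, sigma: float = 0.05) -> Image:
    """Add Gaussian noise to every pixel."""
    return [[clamp(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def box_blur(img: Image, rng: random.Random) -> Image:
    """Average each pixel with its direct neighbours (rng is unused)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [
                img[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            row.append(clamp(sum(vals) / len(vals)))
        out.append(row)
    return out


def shift_brightness(img: Image, rng: random.Random, max_delta: float = 0.1) -> Image:
    """Brighten or darken the whole image by one random offset."""
    delta = rng.uniform(-max_delta, max_delta)
    return [[clamp(p + delta) for p in row] for row in img]


TRANSFORMS: List[Callable[[Image, random.Random], Image]] = [
    add_noise,
    box_blur,
    shift_brightness,
]


def mutate(img: Image, rng: random.Random, max_steps: int = 3) -> Image:
    """Apply a random chain of one to max_steps transformations."""
    for _ in range(rng.randint(1, max_steps)):
        img = rng.choice(TRANSFORMS)(img, rng)
    return img
```

Small values for `sigma`, `max_delta`, and `max_steps` keep mutants close to the original image, which favours testing depth over testing breadth.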

On the other hand, changing an image of a dog into an image of an orange using only such filters and randomness would require a practically infinite number of mutations. Tests with high testing breadth, which must be able to generate inputs with such new content, require more complex transformations based on (image) generators; in the above example, mutations would arise from randomly adding or removing entire image components.

While standard filters from image processing can be applied relatively easily to test any image processing model, generative transformations must be specifically assembled or even newly developed for each application.

Advantages of fuzzy testing

Fuzzing inherently possesses a range of properties that make it a promising candidate for practically implementable, automated AI tests. With continuously optimized, randomly generated inputs, fuzzy testing is:

- Dynamic: The set of test inputs adapts purposefully and without human intervention to changing models and deployment environments.

- Automatable: Fuzzing is an automated testing method that can be applied without close human supervision.

- Practical: Just as the development of a model is often never conclusively finished in practice, fuzzing can also continue “endlessly.” However, limits on computing time and other resources can also be defined for fuzzing campaigns.

- Comprehensive: With a suitably chosen metric for search space coverage, fuzzing promises to provide a quantitative indicator of when a test is sufficiently “complete.” It also allows flexible trade-offs between test quality and effort.

- Integrable: Fuzzy tests integrate well with modern development and delivery tools and their automated processes, from testing the current model versions through to production operation.

- Versatile: Although the fuzzing approaches currently being developed are often tailored to neural networks, the method is generally suitable for testing other learning methods and all types of input data.
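The points about resource limits and a quantitative "completeness" indicator can be combined into a simple stopping rule for a fuzzing campaign. The sketch below is one possible formulation; the function name `should_stop`, its parameters, and all default values are assumptions made for illustration.

```python
from typing import Optional, Sequence


def should_stop(
    elapsed_s: float,
    coverage_history: Sequence[float],
    max_seconds: float = 3600.0,
    target: float = 0.9,
    patience: int = 100,
    min_gain: float = 1e-3,
) -> Optional[str]:
    """Return the reason to stop the campaign, or None to keep fuzzing.

    coverage_history holds the coverage ratio after each iteration.
    """
    if elapsed_s >= max_seconds:
        return "budget"    # time budget exhausted
    if coverage_history and coverage_history[-1] >= target:
        return "target"    # coverage goal reached
    if (
        len(coverage_history) > patience
        and coverage_history[-1] - coverage_history[-1 - patience] < min_gain
    ):
        return "plateau"   # no meaningful gain for `patience` iterations
    return None
```

Raising `target` or `patience` trades additional effort for higher test quality, which is the kind of flexible trade-off mentioned above.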

Fuzzing thus promises many advantages for broad practical use. However, some research questions still need to be clarified to help the technique gain wider application.

Current research areas in fuzzy testing for Machine Learning

A good fault heuristic, a so-called Oracle, often needs to be specifically chosen or tailored for a particular application case. Generic, and thus (almost) universally applicable, Oracles are mainly suitable for finding critical errors, such as program crashes and computational errors (so-called Smoke Testing). Whether an appropriate Oracle can be found usually needs to be decided on a case-by-case basis and often requires domain-specific expertise.
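The difference between a generic and an application-specific oracle can be illustrated with two small functions. This is a sketch under assumptions: the model is taken to be a callable that returns a list of class scores, `toy_model` is a hypothetical stand-in, and the second oracle encodes the domain assumption that a small mutation must not change the predicted class.

```python
import math
from typing import Callable, List, Sequence

Model = Callable[[float], List[float]]  # assumed: input -> class scores


def argmax(scores: Sequence[float]) -> int:
    return max(range(len(scores)), key=lambda i: scores[i])


def smoke_oracle(model: Model, x: float) -> bool:
    """Generic oracle: report a failure on a crash or non-finite scores."""
    try:
        scores = model(x)
    except Exception:
        return True
    return any(not math.isfinite(s) for s in scores)


def label_preserving_oracle(model: Model, seed: float, mutant: float) -> bool:
    """Application-specific oracle: report a failure if the predicted
    class of the mutant differs from that of the seed it came from."""
    return argmax(model(seed)) != argmax(model(mutant))


# Hypothetical toy model: raises ValueError for non-positive inputs.
def toy_model(x: float) -> List[float]:
    return [math.log(x), 0.0]
```

The smoke oracle works for any model with this interface, but it only catches crashes and numerical errors. The label-preserving oracle finds wrong predictions, but it is only valid if the mutations really leave the correct label unchanged, which is exactly the kind of decision that requires domain knowledge.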

Choosing a suitable coverage metric is also an active research area. It serves both to guide the automatic search for interesting test examples into the depth of the search space and to indicate the overall completeness of the test campaign. For example, the suitability of the so-called neuron coverage for neural networks is controversial among researchers and is just one of many candidates for a coverage measure. On the other hand, there are established learning methods outside neural networks for which no candidate has been developed yet.

Additionally, selecting suitable elementary transformations can be challenging depending on the application case. It is essential to avoid generating (too) many test cases that lie outside a model’s often vaguely defined application domain. This is important to save resources and costs and to find only those errors relevant for quality assessment beyond pure Smoke Tests. If the set of transformations is too restricted to traverse the search space of all inputs quickly, there is a risk that no test campaign will achieve satisfactory coverage, regardless of the effort spent.
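One simple way to keep mutants inside the application domain is to reject candidates that drift too far from the input they were derived from. The sketch below uses a maximum per-component distance as a crude proxy for "still in the domain"; this proxy, the bound of 0.2, the value range [0, 1], and the helper names are all assumptions for illustration, since a real application domain is usually harder to pin down.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def linf_distance(a: Vector, b: Vector) -> float:
    """Largest absolute difference between corresponding components."""
    return max(abs(x - y) for x, y in zip(a, b))


def in_domain(seed: Vector, candidate: Vector, max_dist: float) -> bool:
    """Assumed domain check: valid value range and close to the seed."""
    in_range = all(0.0 <= v <= 1.0 for v in candidate)
    return in_range and linf_distance(seed, candidate) <= max_dist


def mutate_within_domain(
    seed: Vector,
    mutate: Callable[[Vector, random.Random], Vector],
    rng: random.Random,
    max_dist: float = 0.2,
    max_tries: int = 20,
) -> Optional[Vector]:
    """Retry the mutation until a candidate passes the domain check.

    Returns None if no valid candidate is found within max_tries.
    """
    for _ in range(max_tries):
        candidate = mutate(seed, rng)
        if in_domain(seed, candidate, max_dist):
            return candidate
    return None


# Hypothetical mutation: jitter every component independently.
def jitter(v: Vector, rng: random.Random) -> Vector:
    return [x + rng.uniform(-0.5, 0.5) for x in v]
```

The bound illustrates the trade-off described above: a small `max_dist` avoids wasting effort on irrelevant inputs, but it also restricts how quickly the search space can be traversed.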

Conclusion

With fuzzy testing, a modern, established method from automated software testing is making the leap from traditional software to the realm of Machine Learning. It combines conceptual simplicity with a range of beneficial features, making it attractive for solving problems specific to the AI field. It remains to be seen for which applications these promises will be fulfilled, but in the area of neural networks, fuzzy testing is already an active research field with several candidates worth looking into.

More information can be found in the related video.