Time series describe the change of a measurement over time. For example, sensor data from a robot or the temperature throughout the day can be interpreted as time series. Time series data are ubiquitous in various applications, from logistics to finance. The goal of time series prediction is to accurately forecast the next, unobserved values from a given time series. Properties of the time series, such as periodicity or trend, can be utilized for prediction. For instance, it is possible to predict temperatures for the next day from historical data or estimate missing sensor data in the event of a short-term failure.

However, the assumption that the future can be reliably predicted from the past often does not hold in practice. For example, if measured temperatures change significantly over time due to climate change, new measurements are more suitable for prediction than older ones. This phenomenon, where time series properties change over time, is often referred to as concept drift. When a drift occurs, it must not only be detected; the prediction mechanism of the Machine Learning models must also be adapted to it.

Not every model is ideal for every prediction

In principle, a single Machine Learning model can be used to predict the values of a time series. Alternatively, multiple models of varying complexity can be trained on the same time series. Their predictions can then be combined (ensembling), or the best model from the set of trained models can be selected for prediction. The latter process is known in the research literature as model selection. Since the selection process is often opaque, even for experts, our work focuses on making it more transparent.

An established method for model selection involves creating a competence region for each model. The competence region reflects the time series properties on which the respective model has made particularly good predictions. During the training process of the individual models, we can measure which model made the best prediction in which part of the time series. The characteristics of this time series segment are then added to the competence region of the respective best model. When a prediction is needed for a new time series, the characteristics of the new time series are compared with the competence regions. The model that performed best on similar time series during training is then selected for prediction.
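The selection procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation from the paper: the two toy forecasters, the fixed window length, the absolute one-step error, and the Euclidean nearest-neighbour comparison are all choices made for this example, and the names `build_competence_regions` and `select_model` are hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length windows."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_competence_regions(series, models, window):
    """Store each training window in the region of the model with the smallest one-step error."""
    regions = {name: [] for name in models}
    for start in range(len(series) - window):
        segment = series[start:start + window]
        target = series[start + window]
        best = min(models, key=lambda name: abs(models[name](segment) - target))
        regions[best].append(segment)
    return regions

def select_model(regions, current_window):
    """Pick the model whose competence region contains the closest stored window."""
    best_name, best_dist = None, math.inf
    for name, segments in regions.items():
        for segment in segments:
            dist = euclidean(segment, current_window)
            if dist < best_dist:
                best_name, best_dist = name, dist
    return best_name

# Two toy forecasters standing in for trained models (assumption for this sketch).
models = {
    "last_value": lambda w: w[-1],
    "mean": lambda w: sum(w) / len(w),
}

# A rising trend followed by an oscillation around 5.
series = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 4.0, 6.0, 4.0, 6.0, 4.0, 6.0]
regions = build_competence_regions(series, models, window=3)

trend_choice = select_model(regions, [1.0, 2.0, 3.1])
oscillation_choice = select_model(regions, [6.1, 4.0, 5.9])
```

In this toy setup, the last-value forecaster collects the trending windows and the mean forecaster collects the oscillating ones, so a new window is routed to whichever model did best on similar-looking data during training.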

In our work, we go a step further and consider not only the characteristics of the time series in the competence region but also measure which parts of this time series were particularly relevant for the model’s prediction. We achieve this by applying explainability methods, which highlight the most relevant parts of the time series for a model’s prediction.
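To give a rough idea of what "relevance of each part of the time series" can mean, the sketch below uses occlusion, a simple model-agnostic explainability technique that is swapped in here purely for illustration; it is not claimed to be the saliency method used in the paper. The function name `occlusion_saliency` and the choice of the window mean as the replacement value are assumptions.

```python
def occlusion_saliency(model, window, target):
    """Score each time step by how much the absolute error grows
    when that step is replaced with the window mean."""
    baseline = sum(window) / len(window)
    base_error = abs(model(window) - target)
    scores = []
    for i in range(len(window)):
        occluded = list(window)
        occluded[i] = baseline  # hide the value at position i
        error = abs(model(occluded) - target)
        scores.append(max(0.0, error - base_error))
    return scores

# Toy forecaster (assumption): predicts the last observed value.
last_value = lambda w: w[-1]

saliency = occlusion_saliency(last_value, [1.0, 2.0, 3.0], target=4.0)
```

For this toy forecaster only the last time step receives a non-zero score, which matches the intuition that it is the only value the model looks at. Scores like these could then be used to emphasise the relevant part of a window when it is stored in a competence region.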

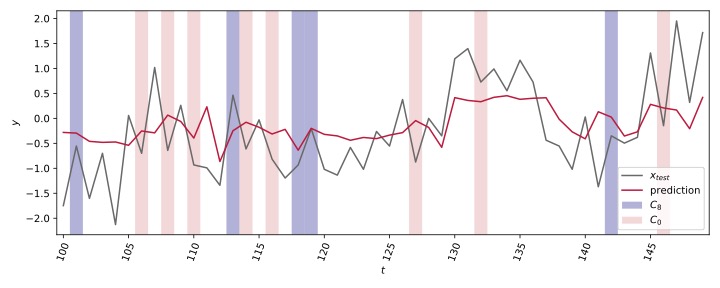

A sample prediction (red) on a time series (grey). The regions of the time series for which either model C8 (purple) or model C0 (pink) was selected are highlighted. C8 was frequently selected at peaks in the time series, as these were prominent in C8’s competence region. Similarly, C0 was often selected at valleys, as these were disproportionately present in C0’s competence region.

Time series properties are not constant

In real application scenarios, the characteristics of a time series often change over time. We respond to this concept drift by first discarding the competence regions of the individual models when a drift is detected and then rebuilding them from recent data that reflects the changed characteristics. This allows us to react to changes in the environment and continuously adapt the prediction to new conditions. Prediction also becomes considerably faster when the competence regions are not regenerated periodically but only after a concept drift has been detected (drift detection). To do this, we monitor the mean value of the time series and flag a drift only if this mean changes significantly over time. A freely selectable parameter determines how much the mean must change before a drift is flagged. This allows our method to be adapted to various application areas and datasets.
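The mean-based check described above can be sketched as follows. This is a simplified illustration, not the detector from the paper: the sliding-window mean, the fixed absolute `threshold` (standing in for the freely selectable parameter), and the class name `MeanDriftDetector` are assumptions made for this example.

```python
from collections import deque

class MeanDriftDetector:
    """Flags a drift when the sliding-window mean moves away from a reference mean."""

    def __init__(self, window, threshold):
        self.window = window
        self.threshold = threshold      # how far the mean must move to count as drift
        self.reference_mean = None      # mean of the first full window after a reset
        self.recent = deque(maxlen=window)

    def update(self, value):
        """Add one observation and return True if a drift is flagged."""
        self.recent.append(value)
        if len(self.recent) < self.window:
            return False
        current_mean = sum(self.recent) / self.window
        if self.reference_mean is None:
            self.reference_mean = current_mean
            return False
        if abs(current_mean - self.reference_mean) > self.threshold:
            # Start over so the reference is rebuilt from post-drift data.
            self.reference_mean = None
            self.recent.clear()
            return True
        return False

# A stream whose level jumps from 1.0 to 5.0 at index 10.
stream = [1.0] * 10 + [5.0] * 10
detector = MeanDriftDetector(window=5, threshold=2.0)
drift_points = [i for i, value in enumerate(stream) if detector.update(value)]
```

Whenever `update` returns `True`, the competence regions would be discarded and rebuilt from recent data. A larger `threshold` makes the detector less sensitive and triggers fewer rebuilds; a smaller one reacts earlier at the cost of more frequent adaptation.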

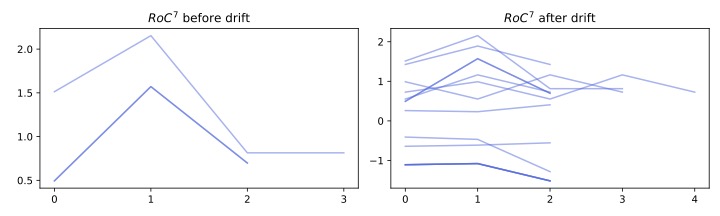

The competence region of a single model before and after drift detection. The characteristics of the time series on which the model performed best change significantly, and rebuilding the competence region allows the selection to adapt to the new conditions of the time series.

An explainable approach

Model selection, especially among deep neural networks, is often an opaque process. Users usually cannot tell why a particular model was selected for a specific part of the time series. Our approach produces competence regions that highlight each model’s expertise more accurately, so that the selected model’s competence region can be visually compared with the current time series. This not only allows users to identify errors in the selection but also increases confidence in the effectiveness of the procedure. Furthermore, our drift detection approach offers an automatic, resource-saving adaptation of the models to changing properties of the time series.

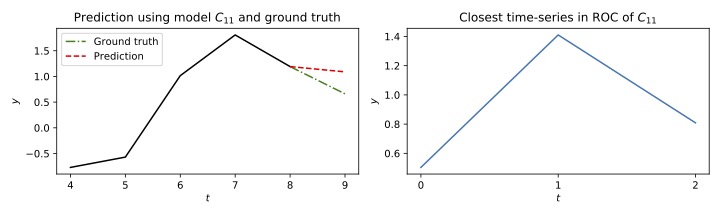

The current time series, for which a prediction is to be made at time t=9, is shown in black. Our method selects model C11 because its competence region contains a time series (blue) that is strongly similar to the current one.

More information in the corresponding paper:

Explainable Online Deep Neural Network Selection using Adaptive Saliency Maps for Time Series Forecasting

Amal Saadallah, Matthias Jakobs, Katharina Morik. European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML-PKDD), 2021.