Deep Neural Networks (DNNs) have shown remarkable performance, reaching or surpassing human expert level in various domains. Computer vision, which is essential for technologies such as autonomous driving and automated medical diagnosis, relies on a class of DNNs called Convolutional Neural Networks (CNNs). CNNs have replaced classical machine learning methods for complex tasks such as semantic segmentation and object detection. Like other DNNs, CNNs are black boxes: the function that approximates the real-world data distribution is learned from training data, and the network's decision-making process is not easily interpretable by humans.

When deploying CNNs in real-world applications, Verification and Validation (V&V) plays a significant role in providing safety guarantees and building user trust in the product. However, unlike V&V of conventional software, V&V of DNNs is nontrivial because of their black-box nature. Conventional software can be tested at multiple levels, such as unit, integration, and functional tests, to ensure it does not deviate from the expected behavior. DNNs, however, cannot easily be tested at the unit or functional level. In supervised learning, the available labelled data is generally split into a training dataset and a test dataset. Trained DNNs are then evaluated on the test dataset and benchmarked against classical methods, other deep learning models, or human performance using a metric aggregated over the entire test dataset. However, such aggregated scores provide no information about when or how a DNN might fail. Furthermore, there is no established way to ensure that the dataset sufficiently covers all aspects of the real-world problem, and hence that the scores reflect the DNN's real-world performance.

Issues with aggregated metrics

Consider a DNN tasked with detecting pedestrians in images of traffic scenes. We can measure the model's performance on the test dataset using Intersection over Union (IoU), a commonly used semantic segmentation metric: the number of pixels in the intersection of the predicted and ground-truth pedestrian regions divided by the number of pixels in their union. Suppose we obtain a score of 80%, which is better than existing classical methods. Based on the aggregated score alone, one might consider deploying such a model in a real-world application. However, there could be subregions of the data space where the model performs worse than average. Such regions of low performance could be potential weak spots learnt from the training data. We propose to treat human-understandable semantic features that describe the scene as subregions of the data and to calculate the performance metric grouped by these semantic features.
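To make the difference between an aggregated and a grouped score concrete, here is a minimal Python sketch. It assumes binary pedestrian masks flattened to 0/1 lists and a per-image metadata dictionary; the field names (`pred`, `target`, `meta`, `skin_color`) and the toy data are hypothetical, not taken from the paper's code. IoU is pooled as total intersection over total union, as is common for class-wise segmentation IoU.

```python
from collections import defaultdict


def intersection_union(pred, target):
    """Count intersection and union pixels of two binary masks (flat 0/1 sequences)."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    return inter, union


def pooled_iou(samples):
    """Pedestrian-class IoU pooled over samples: total intersection / total union."""
    inter_total = union_total = 0
    for s in samples:
        inter, union = intersection_union(s["pred"], s["target"])
        inter_total += inter
        union_total += union
    return inter_total / union_total if union_total else float("nan")


def iou_by_feature(samples, feature):
    """The same pooled IoU, computed separately for each value of one semantic feature."""
    groups = defaultdict(list)
    for s in samples:
        groups[s["meta"][feature]].append(s)
    return {value: pooled_iou(group) for value, group in groups.items()}


# Hypothetical toy data: flattened 4-pixel masks plus per-image scene metadata.
samples = [
    {"pred": [1, 1, 1, 0], "target": [1, 1, 1, 0], "meta": {"skin_color": "light"}},
    {"pred": [1, 1, 0, 0], "target": [1, 1, 0, 0], "meta": {"skin_color": "light"}},
    {"pred": [1, 0, 0, 0], "target": [1, 1, 1, 0], "meta": {"skin_color": "dark"}},
]

print(pooled_iou(samples))                    # 0.75 (6 / 8)
print(iou_by_feature(samples, "skin_color"))  # light: 1.0, dark: about 0.33
```

On this toy data the pooled score of 0.75 hides a group whose IoU is only about 0.33, which is exactly the kind of weak spot an aggregated metric cannot reveal.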

Figure 1 shows a representative sketch of our example, plotting IoU along one semantic dimension, the pedestrian's skin color, which indicates a bias in the data. The color of each point indicates the pedestrian's skin color, and we can quickly notice that the model performs consistently poorly for dark-skinned pedestrians. This test can be extended to all semantic dimensions that a human expert considers safety-critical. While we assume independent and identically distributed sampling in test datasets, the real world exhibits temporal and semantic consistency. If a model is systematically weak along a specific semantic dimension in one scene, it will probably be weak in future scenes as well; it is therefore essential to identify such weak spots and fix the underlying issue. We call this semantic testing.
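Extending this test to several semantic dimensions can be automated by flagging every group whose mean score falls clearly below the overall mean. The sketch below assumes per-pedestrian IoU scores already paired with their metadata; the function name, the `margin` and `min_count` thresholds, the dimension names, and the records are illustrative assumptions, not values from the paper.

```python
from collections import defaultdict
from statistics import mean


def find_weak_spots(records, dimensions, margin=0.1, min_count=2):
    """Return the overall mean IoU and the groups that fall clearly below it.

    records: dicts with a per-pedestrian "iou" score and one key per semantic dimension.
    Each flagged group is reported as (dimension, value, group_mean, count).
    """
    overall = mean(r["iou"] for r in records)
    weak = []
    for dim in dimensions:
        groups = defaultdict(list)
        for r in records:
            groups[r[dim]].append(r["iou"])
        for value, scores in groups.items():
            group_mean = mean(scores)
            # Ignore tiny groups; flag only groups more than `margin` below the overall mean.
            if len(scores) >= min_count and group_mean < overall - margin:
                weak.append((dim, value, group_mean, len(scores)))
    weak.sort(key=lambda w: w[2])  # worst group first
    return overall, weak


# Hypothetical per-pedestrian results with two semantic dimensions.
records = [
    {"iou": 0.90, "skin_color": "light", "clothing": "dark"},
    {"iou": 0.85, "skin_color": "light", "clothing": "bright"},
    {"iou": 0.88, "skin_color": "light", "clothing": "dark"},
    {"iou": 0.50, "skin_color": "dark", "clothing": "bright"},
    {"iou": 0.55, "skin_color": "dark", "clothing": "dark"},
]

overall, weak = find_weak_spots(records, ["skin_color", "clothing"])
print(overall, weak)  # only the skin_color == "dark" group is flagged
```

The `min_count` guard avoids flagging groups too small to say anything; on a real test set one would likely add a significance test before calling a group a weak spot.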

Semantic Testing

As one can imagine, generating labelled data for supervised learning is time-consuming and costly. Obtaining scene and semantic information in addition to labels would further complicate the labelling process. Certain semantic features can be extracted automatically from the raw images and labels using classical image processing techniques, but there is a limit to how elaborate this approach can get. Alternatively, we can automate data generation, labelling, and scene-description extraction using computer simulators.
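As an illustration of what classical processing can extract, the following sketch derives a few descriptors for one pedestrian from a grayscale image and its label mask: pixel area, bounding-box height, horizontal position, and a crude contrast measure. The function name, the list-of-lists image format, and the contrast definition (absolute difference of mean intensity inside and outside the mask) are assumptions for this example.

```python
def extract_features(image, mask):
    """Derive simple descriptors for one pedestrian from a grayscale image and its label mask.

    image: 2D list of intensities in [0, 255]; mask: 2D list of 0/1 with the same shape.
    Returns None if the mask contains no pedestrian pixels.
    """
    inside, outside, rows, cols = [], [], [], []
    for y, (img_row, mask_row) in enumerate(zip(image, mask)):
        for x, (value, label) in enumerate(zip(img_row, mask_row)):
            if label:
                inside.append(value)
                rows.append(y)
                cols.append(x)
            else:
                outside.append(value)
    if not inside:
        return None
    mean_in = sum(inside) / len(inside)
    mean_out = sum(outside) / len(outside) if outside else mean_in
    return {
        "area_px": len(inside),                      # apparent size, a proxy for distance
        "bbox_height_px": max(rows) - min(rows) + 1,
        "bbox_center_x": (min(cols) + max(cols)) / 2,  # horizontal position in the image
        "contrast": abs(mean_in - mean_out),         # assumed contrast definition
    }


# Hypothetical 3x3 example: a bright two-pixel pedestrian on a dark background.
image = [[10, 10, 10], [10, 200, 10], [10, 200, 10]]
mask = [[0, 0, 0], [0, 1, 0], [0, 1, 0]]
print(extract_features(image, mask))
```

Descriptors like these come almost for free from labels, but attributes such as skin color, clothing type, or what occludes a pedestrian are much harder to obtain reliably this way, which is where simulator metadata helps.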

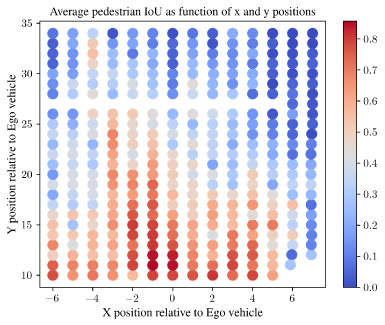

For our work, we use CARLA, an open-source simulator for autonomous driving. While raw images and labels can already be extracted directly, we had to modify the source code to extract the scene metadata (code available on GitHub). Using our modified CARLA simulator, we create a large dataset of raw scenes, semantic segmentation labels, and scene metadata, and use it to train DeepLabv3+, a semantic segmentation DNN. We perform various evaluations across semantic dimensions to study where weak spots in the model could occur. We can go further by generating special test sets, as shown in Video 1, in which only a single semantic dimension changes from image to image, for example the pedestrian position, and then evaluating the performance, as shown in Figure 2. The performance is not uniform. As expected, pedestrians closer to the camera are detected better than those farther away. However, because the performance is not symmetric, factors other than distance must also influence it.

Figure 2: Top-down view of the scene in the video. Each point represents a position of the pedestrian, and its color represents the model's IoU for the pedestrian class at that position.
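The single-dimension sweep and the position analysis can be sketched in plain Python. The scene dictionary keys, the coordinate convention (camera at the origin, `y` pointing forward and `x` lateral, in metres), the distance bins, and the IoU values are hypothetical, and the sketch does not use CARLA's API. It builds scene variants that differ only in pedestrian position, then summarizes per-position IoU by distance and compares mirrored left/right positions as one simple way to quantify asymmetry.

```python
import math
from collections import defaultdict
from statistics import mean


def single_factor_sweep(base_scene, dimension, values):
    """Scene configurations that differ from base_scene only in one semantic dimension."""
    return [{**base_scene, dimension: value} for value in values]


def iou_by_distance(results, bin_size=5.0):
    """Mean IoU per distance bin; results maps (x, y) positions in metres to IoU."""
    bins = defaultdict(list)
    for (x, y), score in results.items():
        bins[int(math.hypot(x, y) // bin_size)].append(score)
    return {b * bin_size: mean(scores) for b, scores in sorted(bins.items())}


def lateral_asymmetry(results):
    """IoU difference between mirrored positions (x, y) and (-x, y), largest gap first."""
    gaps = []
    for (x, y), score in results.items():
        if x > 0 and (-x, y) in results:
            gaps.append(((x, y), score - results[(-x, y)]))
    return sorted(gaps, key=lambda gap: abs(gap[1]), reverse=True)


# Hypothetical sweep: only the pedestrian position changes between scenes.
base = {"weather": "clear", "time_of_day": "noon", "pedestrian_position": None}
grid = [(x, y) for x in (-2.0, 2.0) for y in (5.0, 15.0)]
scenes = single_factor_sweep(base, "pedestrian_position", grid)

# Hypothetical per-position IoU, as would come from evaluating the model on `scenes`.
results = {(-2.0, 5.0): 0.80, (2.0, 5.0): 0.82, (-2.0, 15.0): 0.40, (2.0, 15.0): 0.65}
print(iou_by_distance(results))       # the 5 m bin scores higher than the 15 m bin
print(lateral_asymmetry(results)[0])  # largest left/right gap, at (2.0, 15.0)
```

Holding every other dimension fixed is what makes the comparison meaningful: any change in IoU between two scenes of the sweep can only come from the varied dimension.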

Takeaways

Semantic testing offers a more granular and interpretable approach to testing DNNs. With semantic testing, one can answer questions such as: (i) Does the pedestrian detection model work for all realistic positions a pedestrian could be in, or are there locations with a higher chance of failure? (ii) Are there clothing types that are difficult for the model? However, semantic testing along single dimensions is still limited in scope. Low performance along a certain semantic feature might not be caused by that feature itself but by the interplay between multiple semantic features, for example location, contrast, and occlusion. In such situations, identifying which semantic features matter for performance and which do not is not trivial. Our future work looks at generating such an “importance matrix” of the semantic dimensions that influence performance.
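One standard way to probe such interplay, shown here as a swapped-in technique rather than the authors' planned importance matrix, is the correlation ratio (eta squared): the share of IoU variance explained by grouping on one feature or on a combination of features. The function names, feature names, and data are hypothetical. In the data below, IoU drops only when a pedestrian is both occluded and low-contrast, so each feature alone explains a third of the variance while the pair explains all of it.

```python
from collections import defaultdict
from itertools import combinations
from statistics import mean


def eta_squared(records, features):
    """Share of IoU variance explained by grouping on the given feature(s)."""
    scores = [r["iou"] for r in records]
    grand = mean(scores)
    ss_total = sum((s - grand) ** 2 for s in scores)
    if ss_total == 0:
        return 0.0
    groups = defaultdict(list)
    for r in records:
        groups[tuple(r[f] for f in features)].append(r["iou"])
    # Between-group sum of squares: group size times squared deviation of the group mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    return ss_between / ss_total


def importance_table(records, features):
    """Eta squared for every single feature and every pair of features."""
    table = {(f,): eta_squared(records, (f,)) for f in features}
    for pair in combinations(features, 2):
        table[pair] = eta_squared(records, pair)
    return table


# Hypothetical data: two samples per combination; IoU drops only if occluded AND low contrast.
cells = {(False, "high"): 0.8, (False, "low"): 0.8, (True, "high"): 0.8, (True, "low"): 0.3}
records = [
    {"iou": iou, "occluded": occ, "contrast": con}
    for (occ, con), iou in cells.items()
    for _ in range(2)
]

for key, value in importance_table(records, ["occluded", "contrast"]).items():
    print(key, round(value, 3))
```

Eta squared for feature combinations grows as the groups get smaller, so in practice each combination needs enough samples for the numbers to be meaningful.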

Further information can be found in the corresponding paper:

Semantic Concept Testing in Autonomous Driving by Extraction of Object-Level Annotations from CARLA.

Gannamaneni, Sujan, Sebastian Houben, and Maram Akila. Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.